Matteo Leonetti

Towards Generalisable Imitation Learning Through Conditioned Transition Estimation and Online Behaviour Alignment

Jan 24, 2026

Abstract:State-of-the-art imitation learning from observation (ILfO) methods have recently made significant progress, but they still have some limitations: they need action-based supervised optimisation, assume that states have a single optimal action, and tend to apply teacher actions without full consideration of the actual environment state. While the truth may be out there in observed trajectories, existing methods struggle to extract it without supervision. In this work, we propose Unsupervised Imitation Learning from Observation (UfO) that addresses all of these limitations. UfO learns a policy through a two-stage process, in which the agent first obtains an approximation of the teacher's true actions in the observed state transitions, and then refines the learned policy further by adjusting agent trajectories to closely align them with the teacher's. Experiments we conducted in five widely used environments show that UfO not only outperforms the teacher and all other ILfO methods but also displays the smallest standard deviation. This reduction in standard deviation indicates better generalisation in unseen scenarios.

Imitation Learning for Adaptive Control of a Virtual Soft Exoglove

May 14, 2025

Abstract:The use of wearable robots has been widely adopted in rehabilitation training for patients with hand motor impairments. However, the uniqueness of patients' muscle loss is often overlooked. Leveraging reinforcement learning and a biologically accurate musculoskeletal model in simulation, we propose a customized wearable robotic controller that is able to address specific muscle deficits and to provide compensation for hand-object manipulation tasks. Video data of a single subject performing human grasping tasks is used to train a manipulation model through learning from demonstration. This manipulation model is subsequently fine-tuned to perform object-specific interaction tasks. The muscle forces in the musculoskeletal manipulation model are then weakened to simulate neurological motor impairments, which are later compensated by the actuation of a virtual wearable robotic glove. Results show that integrating the virtual wearable robotic glove provides shared assistance to support the hand manipulator with weakened muscle forces. The learned exoglove controller achieved an average of 90.5% of the original manipulation proficiency.

Learning Social Cost Functions for Human-Aware Path Planning

Jul 15, 2024

Abstract:Achieving social acceptance is one of the main goals of Social Robotic Navigation. Although this topic has received increasing interest in recent years, most of the research has focused on driving the robotic agent along obstacle-free trajectories, planning around estimates of future human motion to respect personal distances and optimize navigation. However, social interactions in everyday life are also dictated by norms that do not strictly depend on movement, such as when standing at the end of a queue rather than cutting it. In this paper, we propose a novel method to recognize common social scenarios and modify a traditional planner's cost function to adapt to them. This solution enables the robot to carry out different social navigation behaviors that would not arise otherwise, maintaining the robustness of traditional navigation. Our approach allows the robot to learn different social norms with a single learned model, rather than having different modules for each task. As a proof of concept, we consider the tasks of queuing and respecting the interaction spaces of groups of people talking to one another, but the method can be extended to other human activities that do not involve motion.

Proceedings of the AI-HRI Symposium at AAAI-FSS 2022

Sep 28, 2022

Abstract:The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration on AI theory and methods aimed at HRI since 2014. This year, after a review of the achievements of the AI-HRI community over the last decade in 2021, we are focusing on a visionary theme: exploring the future of AI-HRI. Accordingly, we added a Blue Sky Ideas track to foster a forward-thinking discussion on future research at the intersection of AI and HRI. As always, we appreciate all contributions related to any topic on AI/HRI and welcome new researchers who wish to take part in this growing community. With the success of past symposia, AI-HRI impacts a variety of communities and problems, and has pioneered the discussions in recent trends and interests. This year's AI-HRI Fall Symposium aims to bring together researchers and practitioners from around the globe, representing a number of university, government, and industry laboratories. In doing so, we hope to accelerate research in the field, support technology transition and user adoption, and determine future directions for our group and our research.

Beyond RMSE: Do machine-learned models of road user interaction produce human-like behavior?

Jun 22, 2022

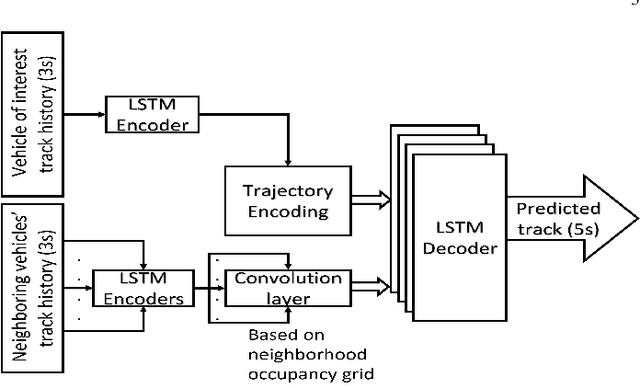

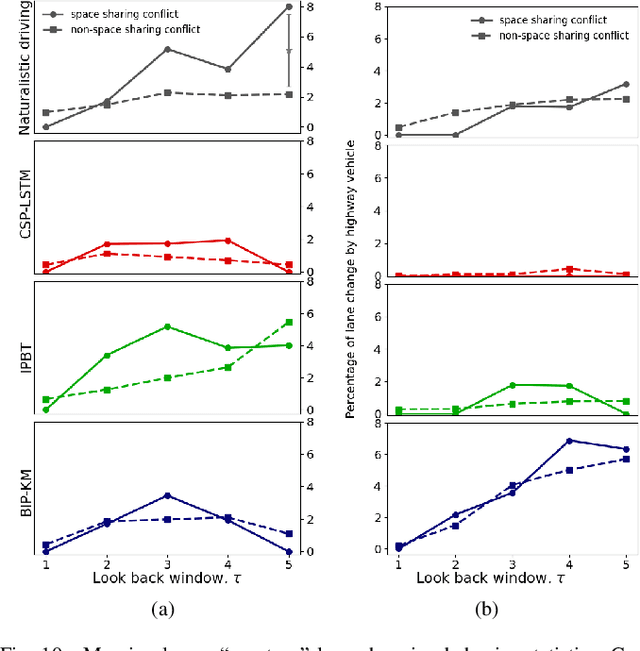

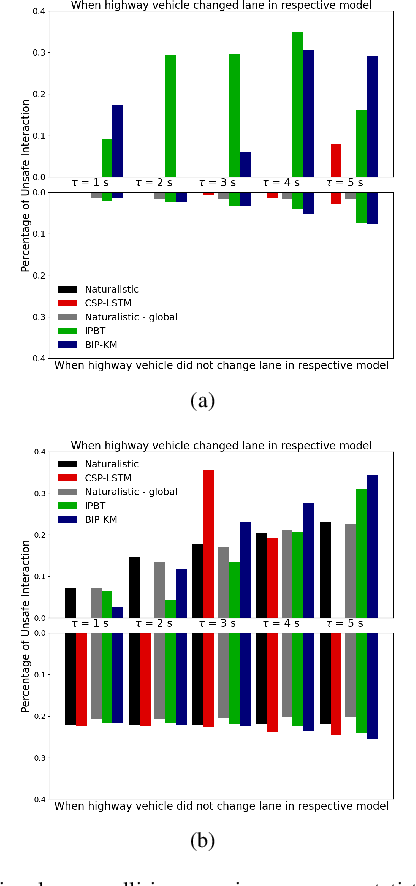

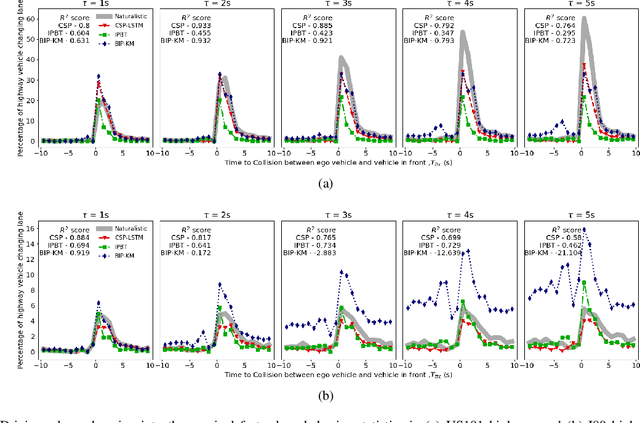

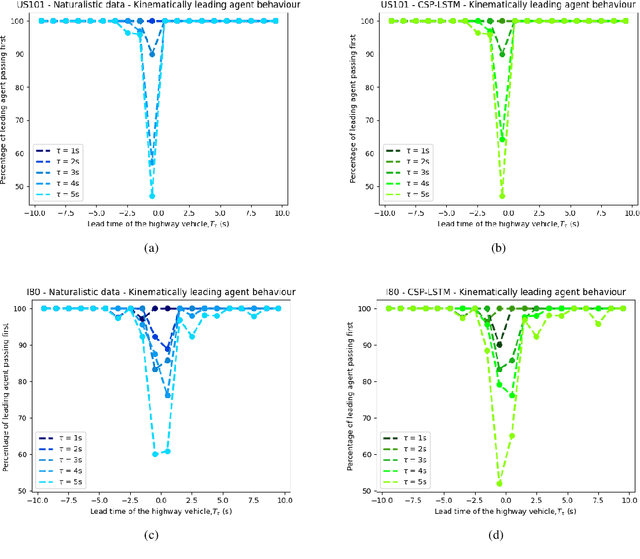

Abstract:Autonomous vehicles use a variety of sensors and machine-learned models to predict the behavior of surrounding road users. Most of the machine-learned models in the literature focus on quantitative error metrics like the root mean square error (RMSE) to learn and report their models' capabilities. This focus on quantitative error metrics tends to ignore the more important behavioral aspect of the models, raising the question of whether these models really predict human-like behavior. Thus, we propose to analyze the output of machine-learned models much like we would analyze human data in conventional behavioral research. We introduce quantitative metrics to demonstrate the presence of three different behavioral phenomena in a naturalistic highway driving dataset: (1) the kinematics-dependence of who passes a merging point first; (2) lane changes by an on-highway vehicle to accommodate an on-ramp vehicle; and (3) lane changes by vehicles on the highway to avoid lead vehicle conflicts. Then, we analyze the behavior of three machine-learned models using the same metrics. Even though the models' RMSE values differed, all the models captured the kinematic-dependent merging behavior but struggled to varying degrees to capture the more nuanced courtesy lane change and highway lane change behavior. Additionally, the collision aversion analysis during lane changes showed that the models struggled to capture the physical aspect of human driving: leaving an adequate gap between the vehicles. Thus, our analysis highlighted the inadequacy of simple quantitative metrics and the need to take a broader behavioral perspective when analyzing machine-learned models of human driving predictions.

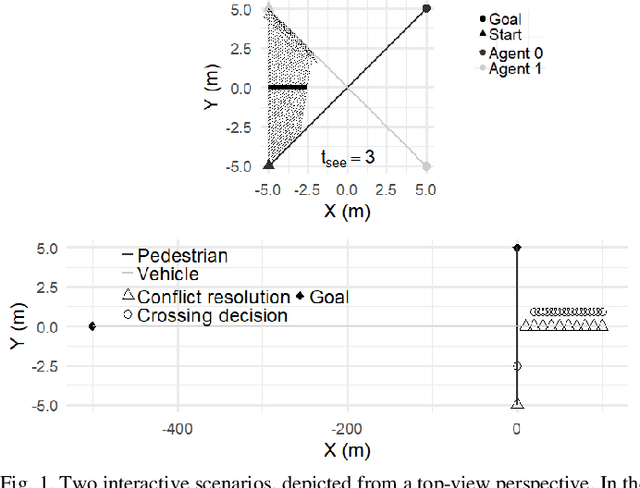

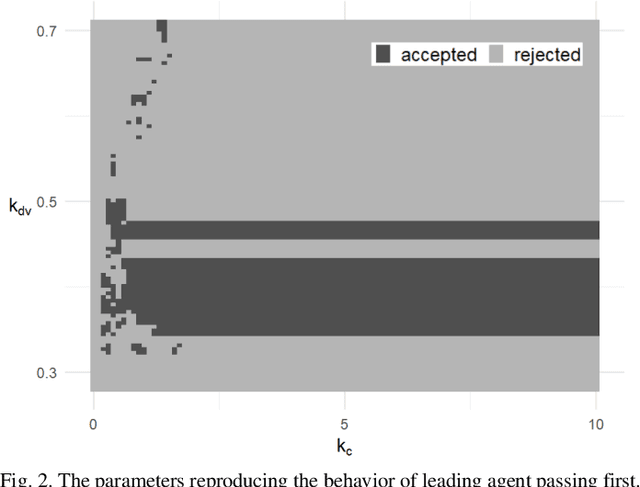

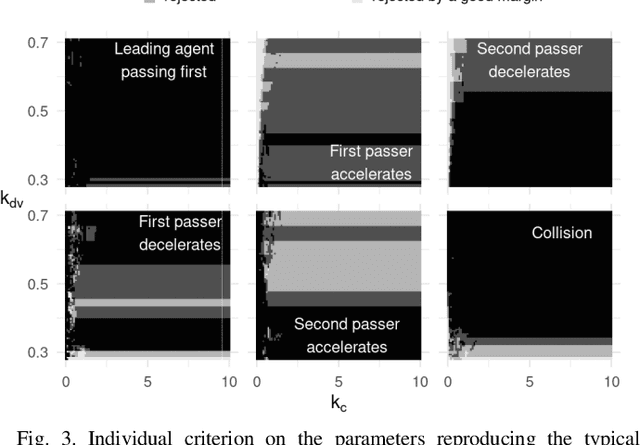

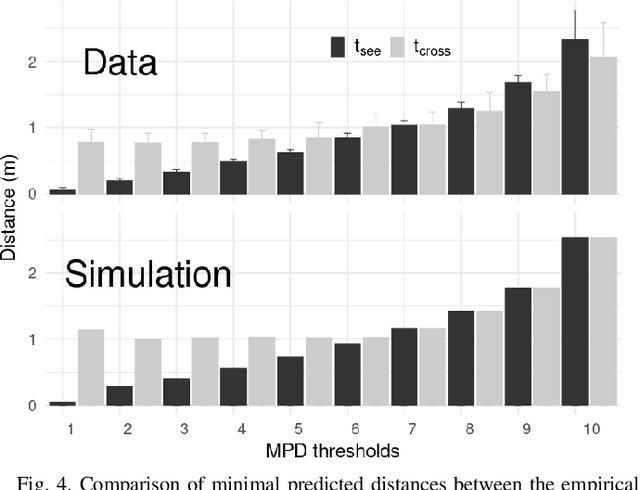

A Utility Maximization Model of Pedestrian and Driver Interactions

Oct 21, 2021

Abstract:Many models account for the traffic flow of road users, but few take the details of local interactions into consideration and how they could deteriorate into safety-critical situations. Building on the concept of sensorimotor control, we develop a modeling framework applying the principles of utility maximization, motor primitives, and intermittent action decisions to account for the details of interactive behaviors among road users. The framework connects these principles to decision theory and is applied to determine whether such an approach can reproduce the following phenomena: When two pedestrians travel on crossing paths, (a) their interaction is sensitive to initial asymmetries, and (b) based on which, they rapidly resolve collision conflict by adapting their behaviors. When a pedestrian crosses the road while facing an approaching car, (c) either road user yields to the other to resolve their conflict, akin to the pedestrian interaction, and (d) the outcome reveals a specific situational kinematics, associated with the nature of vehicle acceleration. We show that these phenomena emerge naturally from our modeling framework when the model can evolve its parameters as a consequence of the situations. We believe that the modeling framework and phenomenon-centered analysis offer promising tools to understand road user interactions. We conclude with a discussion on how the model can be instrumental in studying safety-critical situations when including other variables in road-user interactions.

AI-HRI 2021 Proceedings

Sep 23, 2021

Abstract:The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration since 2014. During that time, these symposia provided a fertile ground for numerous collaborations and pioneered many discussions revolving around trust in HRI, XAI for HRI, service robots, interactive learning, and more. This year, we aim to review the achievements of the AI-HRI community in the last decade, identify the challenges that lie ahead, and welcome new researchers who wish to take part in this growing community. Taking this wide perspective, this year there will be no single theme to lead the symposium and we encourage AI-HRI submissions from across disciplines and research interests. Moreover, with the rising interest in AR and VR as part of an interaction and following the difficulties in running physical experiments during the pandemic, this year we specifically encourage researchers to submit works that do not include a physical robot in their evaluation, but promote HRI research in general. In addition, acknowledging that ethics is an inherent part of the human-robot interaction, we encourage submissions of works on ethics for HRI. Over the course of the two-day meeting, we will host a collaborative forum for discussion of current efforts in AI-HRI, with additional talks focused on the topics of ethics in HRI and ubiquitous HRI.

Meta-Reinforcement Learning for Heuristic Planning

Jul 06, 2021

Abstract:In Meta-Reinforcement Learning (meta-RL) an agent is trained on a set of tasks to prepare for and learn faster in new, unseen, but related tasks. The training tasks are usually hand-crafted to be representative of the expected distribution of test tasks and hence are all used in training. We show that given a set of training tasks, learning can be both faster and more effective (leading to better performance in the test tasks), if the training tasks are appropriately selected. We propose a task selection algorithm, Information-Theoretic Task Selection (ITTS), based on information theory, which optimizes the set of tasks used for training in meta-RL, irrespective of how they are generated. The algorithm establishes which training tasks are both sufficiently relevant for the test tasks, and different enough from one another. We reproduce different meta-RL experiments from the literature and show that ITTS improves the final performance in all of them.

Comparing merging behaviors observed in naturalistic data with behaviors generated by a machine learned model

Apr 21, 2021

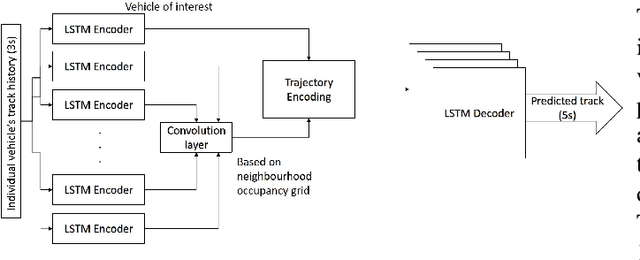

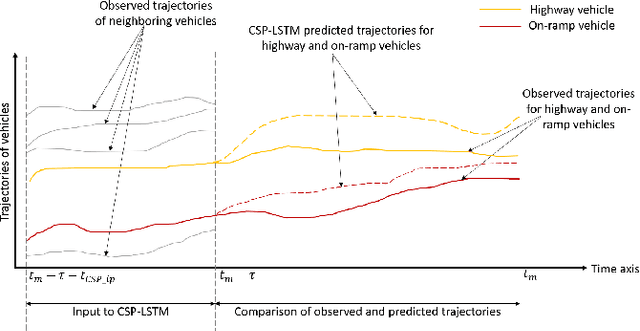

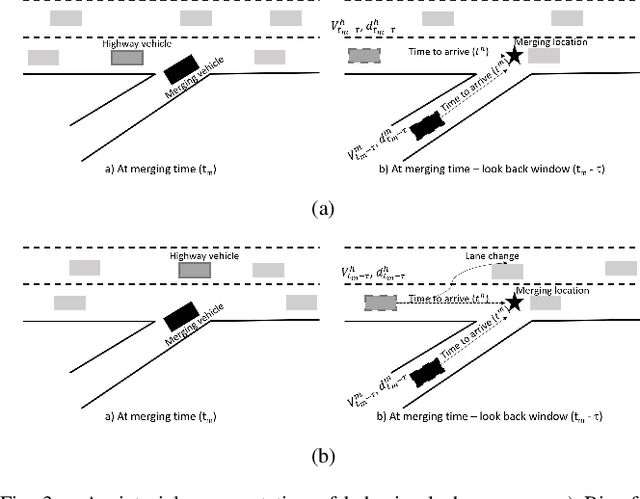

Abstract:There is a quickly growing literature on machine-learned models that predict human driving trajectories in road traffic. These models focus their learning on low-dimensional error metrics, for example average distance between model-generated and observed trajectories. Such metrics permit relative comparison of models, but do not provide clearly interpretable information on how close to human behavior the models actually come, for example in terms of higher-level behavior phenomena that are known to be present in human driving. We study highway driving as an example scenario, and introduce metrics to quantitatively demonstrate the presence, in a naturalistic dataset, of two familiar behavioral phenomena: (1) the kinematics-dependent contest, between on-highway and on-ramp vehicles, of who passes the merging point first; and (2) courtesy lane changes away from the outermost lane, to leave space for a merging vehicle. Applying the exact same metrics to the output of a state-of-the-art machine-learned model, we show that the model is capable of reproducing the former phenomenon, but not the latter. We argue that this type of behavioral analysis provides information that is not available from conventional model-fitting metrics, and that it may be useful to analyze (and possibly fit) models also based on these types of behavioral criteria.

Proceedings of the AI-HRI Symposium at AAAI-FSS 2020

Nov 11, 2020

Abstract:The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration since 2014. In that time, the related topic of trust in robotics has been rapidly growing, with major research efforts at universities and laboratories across the world. Indeed, many of the past participants in AI-HRI have been or are now involved with research into trust in HRI. While trust has no consensus definition, it is regularly associated with predictability, reliability, inciting confidence, and meeting expectations. Furthermore, it is generally believed that trust is crucial for adoption of both AI and robotics, particularly when transitioning technologies from the lab to industrial, social, and consumer applications. However, how does trust apply to the specific situations we encounter in the AI-HRI sphere? Is the notion of trust in AI the same as that in HRI? We see a growing need for research that lives directly at the intersection of AI and HRI that is serviced by this symposium. Over the course of the two-day meeting, we propose to create a collaborative forum for discussion of current efforts in trust for AI-HRI, with a sub-session focused on the related topic of explainable AI (XAI) for HRI.
