Richard Romano

Multi-level and multi-modal feature fusion for accurate 3D object detection in Connected and Automated Vehicles

Dec 19, 2022

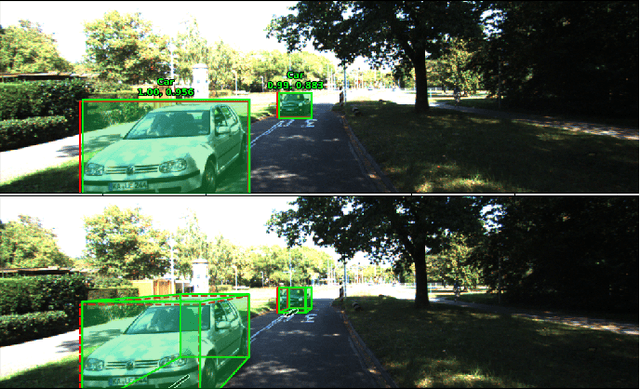

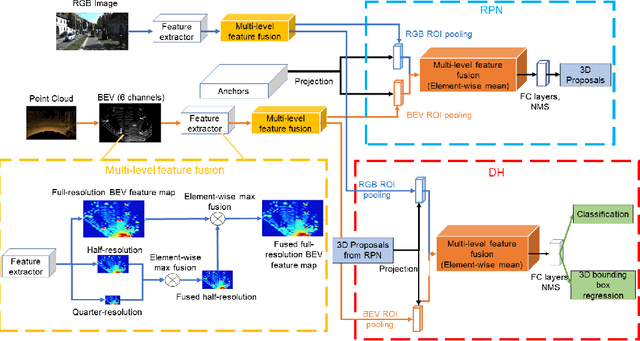

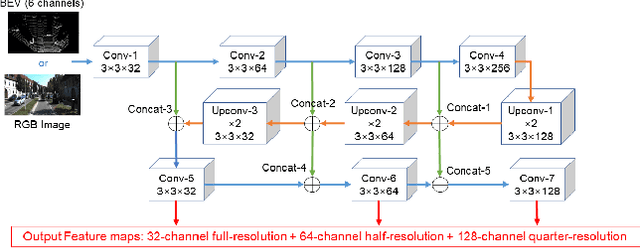

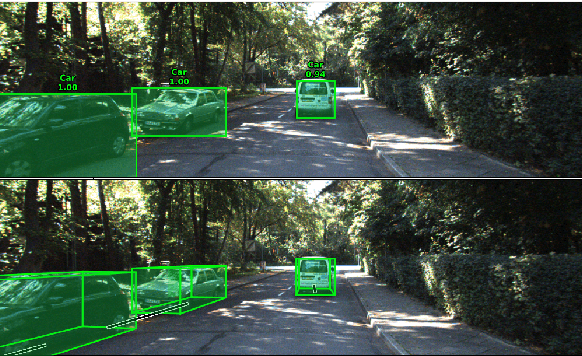

Abstract: Aiming at highly accurate object detection for connected and automated vehicles (CAVs), this paper presents a deep neural network based 3D object detection model that leverages a three-stage feature extractor and a novel LIDAR-camera fusion scheme. The proposed feature extractor extracts high-level features from the two input sensory modalities and recovers important features discarded during the convolutional process. The fusion scheme fuses features across sensory modalities and convolutional layers to find the most representative global features. The fused features are shared by a two-stage network: the region proposal network (RPN) and the detection head (DH). The RPN generates high-recall proposals, and the DH produces the final detection results. The experimental results show that the proposed model outperforms recent methods on the KITTI 2D and 3D detection benchmarks, particularly for distant and highly occluded instances.

Beyond RMSE: Do machine-learned models of road user interaction produce human-like behavior?

Jun 22, 2022

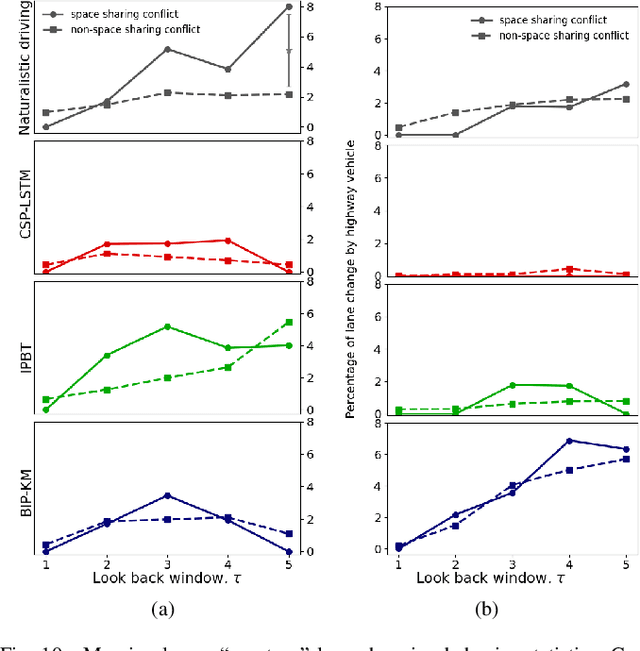

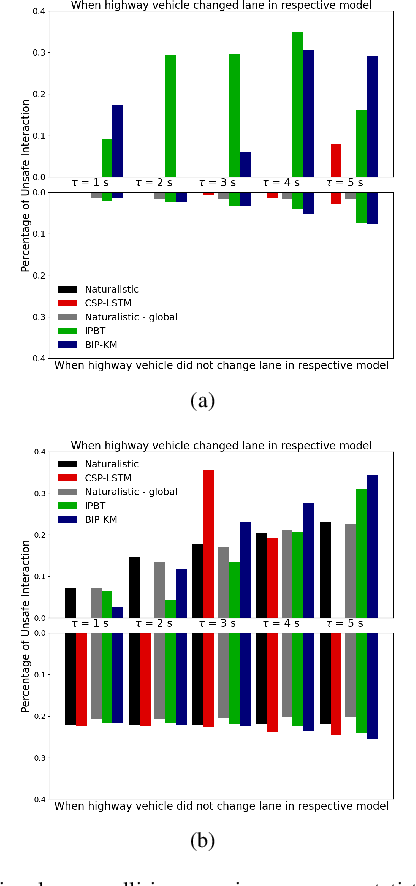

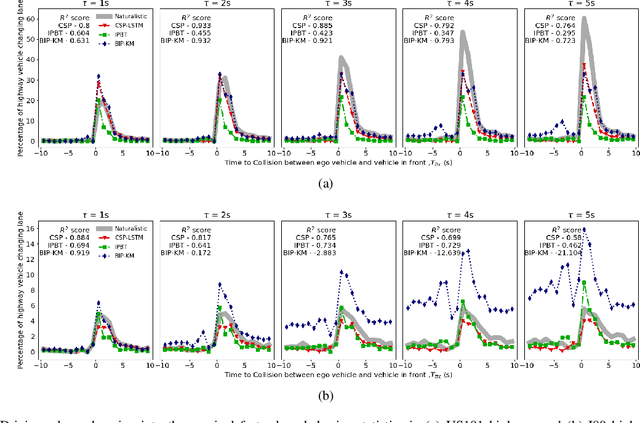

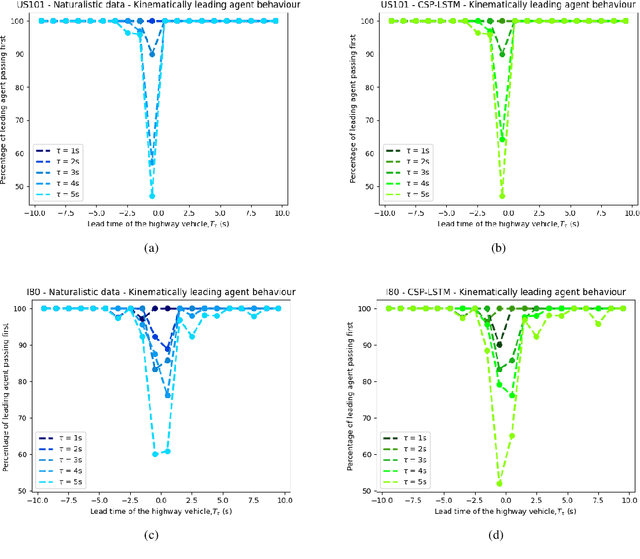

Abstract: Autonomous vehicles use a variety of sensors and machine-learned models to predict the behavior of surrounding road users. Most of the machine-learned models in the literature focus on quantitative error metrics like the root mean square error (RMSE) to learn and report their models' capabilities. This focus on quantitative error metrics tends to ignore the more important behavioral aspect of the models, raising the question of whether these models really predict human-like behavior. Thus, we propose to analyze the output of machine-learned models much like we would analyze human data in conventional behavioral research. We introduce quantitative metrics to demonstrate the presence of three different behavioral phenomena in a naturalistic highway driving dataset: (1) the kinematics-dependence of who passes a merging point first; (2) lane changes by an on-highway vehicle to accommodate an on-ramp vehicle; (3) lane changes by vehicles on the highway to avoid lead vehicle conflicts. Then, we analyze the behavior of three machine-learned models using the same metrics. Even though the models' RMSE values differed, all the models captured the kinematics-dependent merging behavior but struggled to varying degrees to capture the more nuanced courtesy lane change and highway lane change behavior. Additionally, the collision aversion analysis during lane changes showed that the models struggled to capture the physical aspect of human driving: leaving an adequate gap between vehicles. Thus, our analysis highlights the inadequacy of simple quantitative metrics and the need to take a broader behavioral perspective when analyzing machine-learned models of human driving.
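To make the limitation of a single error number concrete, here is a minimal sketch of a trajectory RMSE. It is not taken from the paper: the function name `trajectory_rmse` and the toy trajectories are hypothetical, and the RMSE is assumed to be computed over 2D positions at matching time steps.

```python
import math

def trajectory_rmse(predicted, observed):
    """RMSE over the Euclidean position errors of two equal-length 2D trajectories."""
    if not predicted or len(predicted) != len(observed):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        (px - ox) ** 2 + (py - oy) ** 2
        for (px, py), (ox, oy) in zip(predicted, observed)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical toy data: one observed trajectory and two predictions,
# one running 2 m ahead of the vehicle and one 2 m behind it.
observed = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)]
ahead = [(2.0, 0.0), (12.0, 0.0), (22.0, 0.0)]
behind = [(-2.0, 0.0), (8.0, 0.0), (18.0, 0.0)]

print(trajectory_rmse(ahead, observed))   # 2.0
print(trajectory_rmse(behind, observed))  # 2.0
```

Both predictions score the same RMSE, yet in a merging scenario one could imply passing the merge point first and the other yielding. This is the kind of behavioral difference that an error metric alone cannot show.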

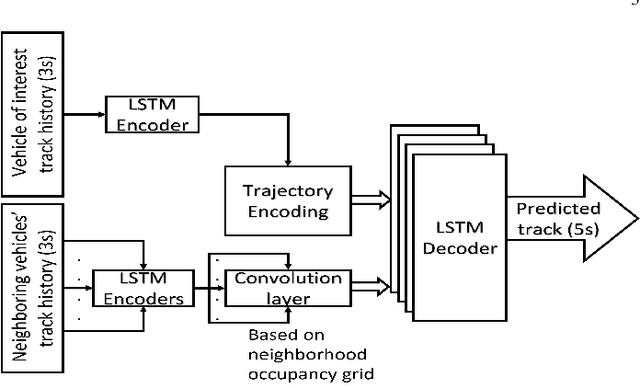

Maneuver-Aware Pooling for Vehicle Trajectory Prediction

Apr 29, 2021

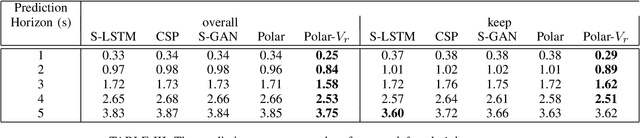

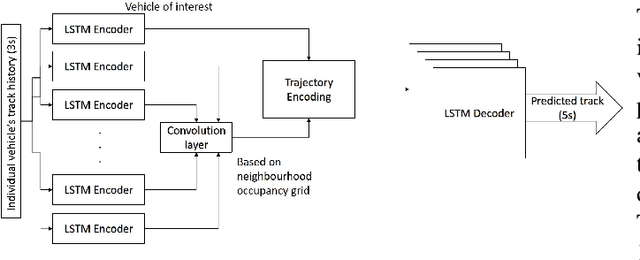

Abstract: Autonomous vehicles should be able to predict the future states of their environment and respond appropriately. Specifically, predicting the behavior of surrounding human drivers is vital for such platforms to share the same road with humans. The behavior of each surrounding vehicle is governed by the motion of its neighboring vehicles. This paper focuses on predicting the behavior of the vehicles surrounding an autonomous vehicle on highways. We are motivated by improving the prediction accuracy when a surrounding vehicle performs lane change and highway merging maneuvers. We propose a novel pooling strategy to capture the inter-dependencies between neighboring vehicles. Because they depend solely on a Euclidean trajectory representation, existing pooling strategies do not model the context information of the maneuvers intended by a surrounding vehicle. In contrast, our pooling mechanism employs a polar trajectory representation, vehicle orientation, and radial velocity. This results in an implicitly maneuver-aware pooling operation. We incorporated the proposed pooling mechanism into a generative encoder-decoder model and evaluated our method on the public NGSIM dataset. The results of maneuver-based trajectory predictions demonstrate the effectiveness of the proposed method compared with state-of-the-art approaches. Our "Pooling Toolbox" code is available at https://github.com/m-hasan-n/pooling.
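As a rough illustration of what a polar representation with radial velocity can look like, the sketch below converts a neighbor's Cartesian state into distance, bearing, and radial velocity relative to a reference vehicle. The abstract does not give the paper's exact feature definitions, so the function `polar_features`, its argument layout, and the choice of reference frame are all assumptions.

```python
import math

def polar_features(ref_pos, ref_vel, nbr_pos, nbr_vel):
    """Return (distance, bearing, radial_velocity) of a neighbor relative to a reference vehicle.

    Positions and velocities are (x, y) tuples in a shared Cartesian frame.
    This is an illustrative definition, not the one used in the paper.
    """
    dx = nbr_pos[0] - ref_pos[0]
    dy = nbr_pos[1] - ref_pos[1]
    distance = math.hypot(dx, dy)
    bearing = math.atan2(dy, dx)  # angle of the line of sight, in radians

    # Relative velocity projected onto the line of sight.
    rel_vx = nbr_vel[0] - ref_vel[0]
    rel_vy = nbr_vel[1] - ref_vel[1]
    if distance == 0.0:
        radial_velocity = 0.0  # line of sight undefined when positions coincide
    else:
        radial_velocity = (dx * rel_vx + dy * rel_vy) / distance
    return distance, bearing, radial_velocity
```

Under this definition a negative radial velocity means the neighbor is closing in on the reference vehicle and a positive one means it is moving away, which is information a purely positional Euclidean grid does not expose directly.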

Maneuver-based Anchor Trajectory Hypotheses at Roundabouts

Apr 22, 2021

Abstract: Predicting the future behavior of surrounding vehicles is crucial for self-driving platforms to safely navigate through other traffic. This is critical when making decisions like crossing an unsignalized intersection. We address the problem of vehicle motion prediction in a challenging roundabout environment by learning from human driver data. We extend existing recurrent encoder-decoder models by combining them with anchor trajectories to predict vehicle behaviors on a roundabout. Drivers' intentions are encoded by a set of maneuvers that correspond to semantic driving concepts. Accordingly, our model employs a set of maneuver-specific anchor trajectories that cover the space of possible outcomes at the roundabout. The proposed model can output a multi-modal distribution over the predicted future trajectories based on the maneuver-specific anchors. We evaluate our model using the public RounD dataset, and the experimental results show the effectiveness of the proposed maneuver-based anchor regression in improving prediction accuracy, reducing the average RMSE by 28% relative to the best baseline. Our code is available at https://github.com/m-hasan-n/roundabout.
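One common way to build a multi-modal output from anchors is to treat each anchor as a mode, add a regressed offset to it, and weight the modes by a softmax over per-maneuver scores. The sketch below follows that generic recipe; it is not the paper's implementation, and the maneuver names, the function `multimodal_prediction`, and all numbers are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def multimodal_prediction(anchors, offsets, scores):
    """Combine maneuver-specific anchors with regressed offsets.

    anchors, offsets: dict maneuver -> list of (x, y) points of equal length.
    scores: dict maneuver -> unnormalized score for that maneuver.
    Returns a list of (maneuver, probability, trajectory), most likely first.
    """
    names = list(anchors)
    probs = softmax([scores[name] for name in names])
    modes = []
    for name, prob in zip(names, probs):
        trajectory = [
            (ax + dx, ay + dy)
            for (ax, ay), (dx, dy) in zip(anchors[name], offsets[name])
        ]
        modes.append((name, prob, trajectory))
    return sorted(modes, key=lambda mode: mode[1], reverse=True)

# Hypothetical two-maneuver example at a roundabout.
anchors = {"exit": [(0.0, 0.0), (1.0, 0.0)], "stay": [(0.0, 0.0), (0.0, 1.0)]}
offsets = {"exit": [(0.5, 0.0), (0.5, 0.0)], "stay": [(0.0, 0.0), (0.0, 0.0)]}
scores = {"exit": 1.0, "stay": 0.0}
modes = multimodal_prediction(anchors, offsets, scores)
```

Each returned mode pairs a maneuver with its probability and refined trajectory, so downstream planning can consider several plausible futures instead of a single averaged path.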

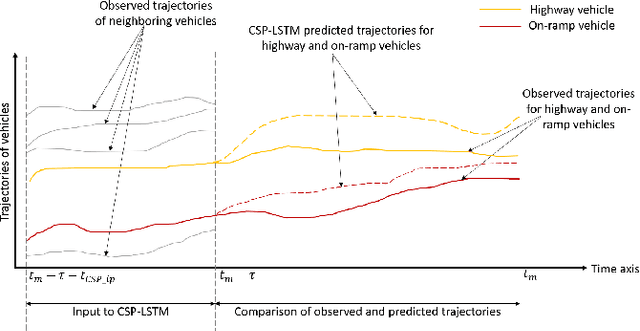

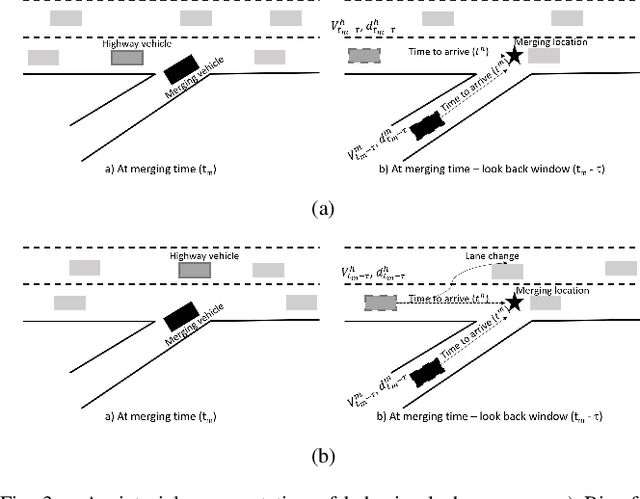

Comparing merging behaviors observed in naturalistic data with behaviors generated by a machine learned model

Apr 21, 2021

Abstract: There is a rapidly growing literature on machine-learned models that predict human driving trajectories in road traffic. These models focus their learning on low-dimensional error metrics, for example the average distance between model-generated and observed trajectories. Such metrics permit relative comparison of models, but do not provide clearly interpretable information on how close to human behavior the models actually come, for example in terms of higher-level behavioral phenomena that are known to be present in human driving. We study highway driving as an example scenario and introduce metrics to quantitatively demonstrate the presence, in a naturalistic dataset, of two familiar behavioral phenomena: (1) the kinematics-dependent contest, between on-highway and on-ramp vehicles, of who passes the merging point first; (2) courtesy lane changes away from the outermost lane, to leave space for a merging vehicle. Applying the exact same metrics to the output of a state-of-the-art machine-learned model, we show that the model is capable of reproducing the former phenomenon, but not the latter. We argue that this type of behavioral analysis provides information that is not available from conventional model-fitting metrics, and that it may be useful to analyze (and possibly fit) models based on these types of behavioral criteria as well.
