Mohamed Hasan

Human-like visual computing advances explainability and few-shot learning in deep neural networks for complex physiological data

Dec 26, 2025

Abstract: Machine vision models, particularly deep neural networks, are increasingly applied to physiological signal interpretation, including electrocardiography (ECG), yet they typically require large training datasets and offer limited insight into the causal features underlying their predictions. This lack of data efficiency and interpretability constrains their clinical reliability and alignment with human reasoning. Here, we show that a perception-informed pseudo-colouring technique, previously demonstrated to enhance human ECG interpretation, can improve both explainability and few-shot learning in deep neural networks analysing complex physiological data. We focus on acquired, drug-induced long QT syndrome (LQTS) as a challenging case study characterised by heterogeneous signal morphology, variable heart rate, and scarce positive cases associated with life-threatening arrhythmias such as torsades de pointes. This setting provides a stringent test of model generalisation under extreme data scarcity. By encoding clinically salient temporal features, such as QT-interval duration, into structured colour representations, models learn discriminative and interpretable features from as few as one or five training examples. Using prototypical networks and a ResNet-18 architecture, we evaluate one-shot and few-shot learning on ECG images derived from single cardiac cycles and full 10-second rhythms. Explainability analyses show that pseudo-colouring guides attention toward clinically meaningful ECG features while suppressing irrelevant signal components. Aggregating multiple cardiac cycles further improves performance, mirroring human perceptual averaging across heartbeats. Together, these findings demonstrate that human-like perceptual encoding can bridge data efficiency, explainability, and causal reasoning in medical machine intelligence.
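
The abstract names prototypical networks for one-shot and few-shot learning. The following is a minimal sketch of only the generic prototypical-network classification step: each class prototype is the mean of its support embeddings, and a query is scored by a softmax over negative squared Euclidean distances to the prototypes. It assumes the embeddings were already produced by some encoder (for instance a ResNet-18 as mentioned above); the function names, the labels `normal` and `lqts`, and the toy 2-D vectors are hypothetical, and the pseudo-colouring and training loop are not shown. It is not the authors' implementation.

```python
import math
from typing import Dict, List, Sequence

# Illustrative sketch of the generic prototypical-network classification step.
# Function names and the toy embeddings are hypothetical, not the authors' code.

Vector = Sequence[float]


def class_prototypes(support: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Average each class's support embeddings into one prototype vector."""
    prototypes = {}
    for label, embeddings in support.items():
        dim = len(embeddings[0])
        prototypes[label] = [
            sum(e[i] for e in embeddings) / len(embeddings) for i in range(dim)
        ]
    return prototypes


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query: Vector, prototypes: Dict[str, List[float]]) -> Dict[str, float]:
    """Class probabilities from a softmax over negative squared distances."""
    logits = {label: -squared_distance(query, p) for label, p in prototypes.items()}
    peak = max(logits.values())  # subtract the max for numerical stability
    unnormalised = {label: math.exp(v - peak) for label, v in logits.items()}
    total = sum(unnormalised.values())
    return {label: v / total for label, v in unnormalised.items()}


# One-shot toy example: a single (hypothetical) embedding per class.
support = {"normal": [[0.0, 0.0]], "lqts": [[1.0, 1.0]]}
probs = classify([0.9, 0.8], class_prototypes(support))
print(probs)  # "lqts" receives the higher probability
```

With one example per class the prototype is simply that example, which is why the same code covers both the one-shot and the few-shot settings.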

Beyond RMSE: Do machine-learned models of road user interaction produce human-like behavior?

Jun 22, 2022

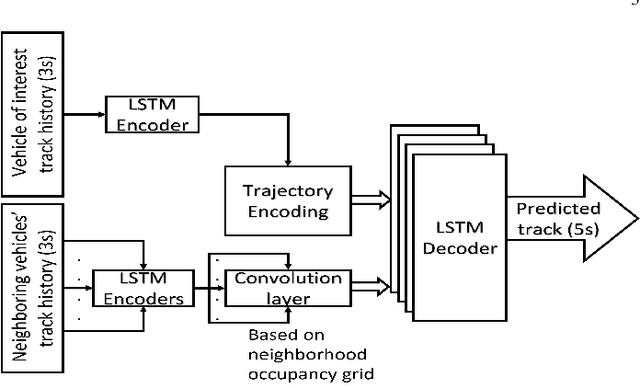

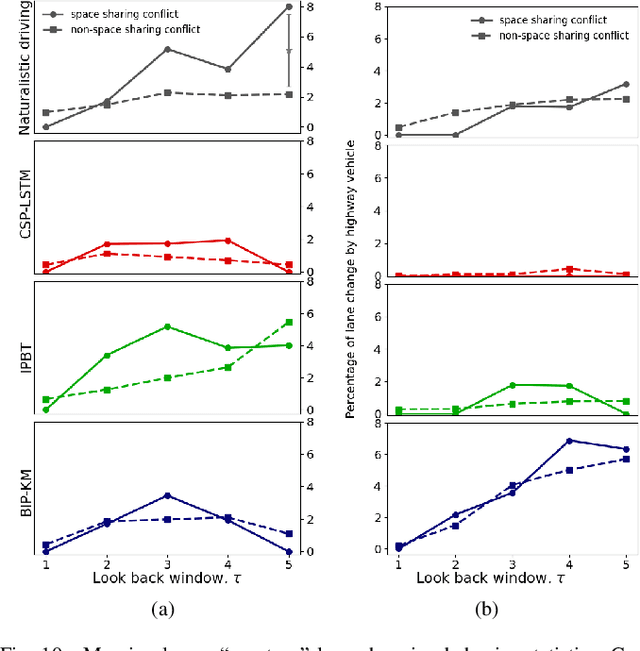

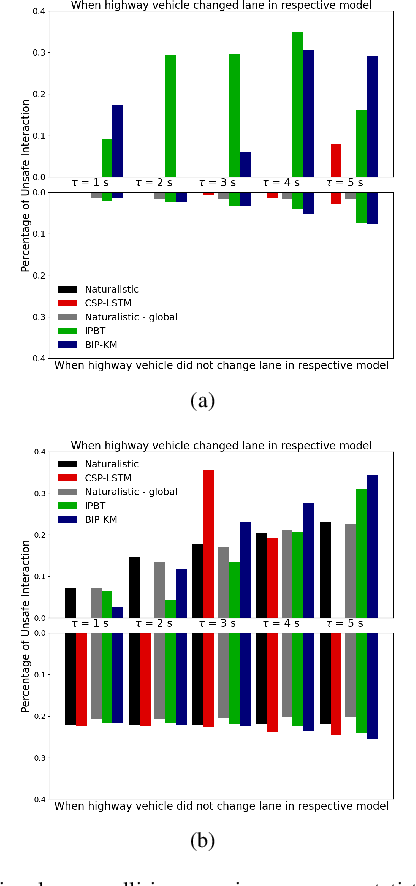

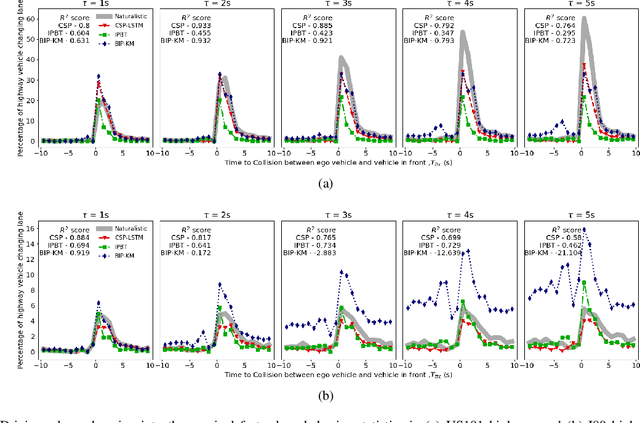

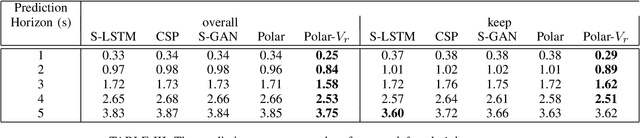

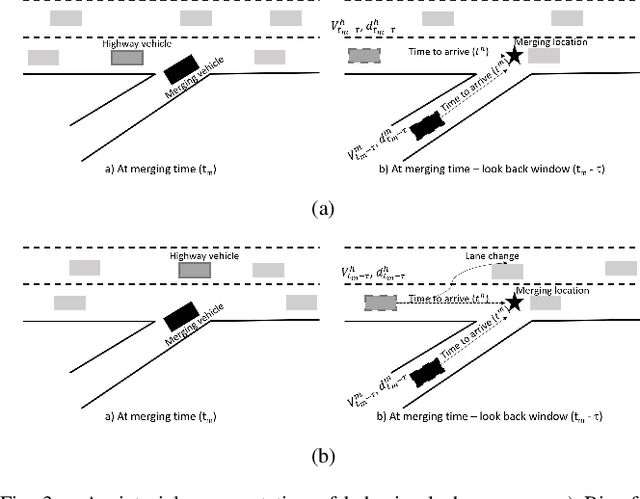

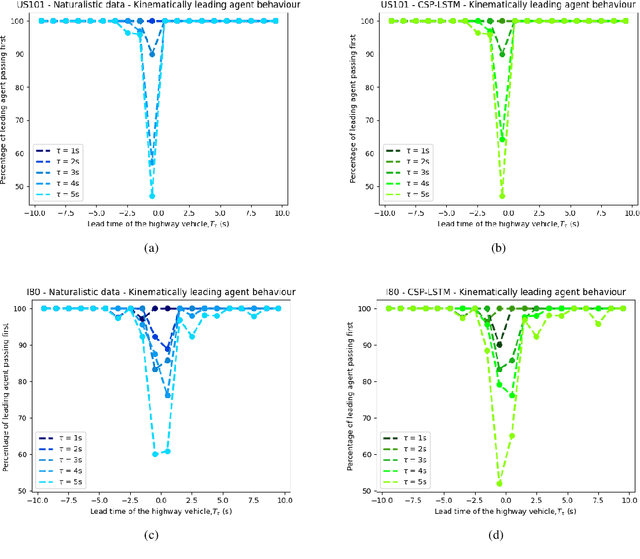

Abstract: Autonomous vehicles use a variety of sensors and machine-learned models to predict the behavior of surrounding road users. Most machine-learned models in the literature rely on quantitative error metrics such as the root mean square error (RMSE) to learn and to report their capabilities. This focus on quantitative error metrics tends to ignore the more important behavioral aspect of the models, raising the question of whether these models really predict human-like behavior. Thus, we propose to analyze the output of machine-learned models much as we would analyze human data in conventional behavioral research. We introduce quantitative metrics to demonstrate the presence of three different behavioral phenomena in a naturalistic highway driving dataset: (1) the kinematics-dependence of who passes a merging point first; (2) a lane change by an on-highway vehicle to accommodate an on-ramp vehicle; and (3) lane changes by vehicles on the highway to avoid lead-vehicle conflicts. We then analyze the behavior of three machine-learned models using the same metrics. Even though the models' RMSE values differed, all of the models captured the kinematics-dependent merging behavior but struggled, to varying degrees, to capture the more nuanced courtesy lane change and highway lane change behaviors. Additionally, the collision-aversion analysis during lane changes showed that the models struggled to capture a physical aspect of human driving: leaving an adequate gap between vehicles. Our analysis thus highlights the inadequacy of simple quantitative metrics and the need for a broader behavioral perspective when analyzing machine-learned models that predict human driving.
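
For reference, a minimal sketch of one common way to compute the RMSE of a predicted trajectory against the observed one: the root of the mean squared Euclidean position error over all time steps. Conventions vary between papers (some report RMSE separately per prediction horizon), and the function name is hypothetical; this is not the evaluation code used in the study.

```python
import math
from typing import List, Tuple

# Hypothetical helper; one common RMSE convention for 2-D trajectories.

Point = Tuple[float, float]


def trajectory_rmse(predicted: List[Point], observed: List[Point]) -> float:
    """Root mean square of the Euclidean position error over all time steps."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("trajectories must be non-empty and equally long")
    squared_errors = [
        (px - ox) ** 2 + (py - oy) ** 2
        for (px, py), (ox, oy) in zip(predicted, observed)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
observed = [(0.0, 0.0), (1.0, 0.0), (2.0, 3.0)]
print(trajectory_rmse(predicted, observed))  # sqrt(9 / 3) = sqrt(3)
```

A single scalar like this summarises position error, but it does not indicate which behavior (for example, a courtesy lane change) the predicted trajectory actually exhibits.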

Maneuver-Aware Pooling for Vehicle Trajectory Prediction

Apr 29, 2021

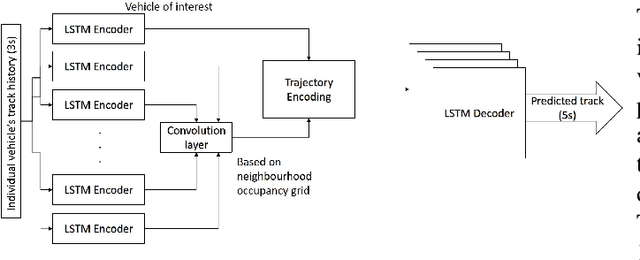

Abstract: Autonomous vehicles should be able to predict the future states of their environment and respond appropriately. Specifically, predicting the behavior of surrounding human drivers is vital for such platforms to share the road with humans. The behavior of each surrounding vehicle is governed by the motion of its neighboring vehicles. This paper focuses on predicting the behavior of the vehicles surrounding an autonomous vehicle on highways. We are motivated by improving prediction accuracy when a surrounding vehicle performs lane-change and highway-merging maneuvers. We propose a novel pooling strategy to capture the inter-dependencies between neighboring vehicles. Because they depend solely on a Euclidean trajectory representation, existing pooling strategies do not model the context of the maneuvers intended by a surrounding vehicle. In contrast, our pooling mechanism employs a polar trajectory representation, vehicle orientation, and radial velocity, which results in an implicitly maneuver-aware pooling operation. We incorporated the proposed pooling mechanism into a generative encoder-decoder model and evaluated our method on the public NGSIM dataset. The results of maneuver-based trajectory predictions demonstrate the effectiveness of the proposed method compared with state-of-the-art approaches. Our "Pooling Toolbox" code is available at https://github.com/m-hasan-n/pooling.
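
To make the idea of a polar trajectory representation with radial velocity concrete, here is a minimal sketch. It assumes neighbor positions are already expressed in Cartesian coordinates relative to the target vehicle and sampled every `dt` seconds; it converts each sample to range and bearing and estimates radial velocity by finite differences of the range. Vehicle orientation is omitted, and the function name is hypothetical: this is an illustration, not code from the Pooling Toolbox repository.

```python
import math
from typing import List, Tuple

# Hypothetical helper: illustrative polar encoding, not the Pooling Toolbox code.
# Assumes neighbour positions (x, y) are already relative to the target vehicle
# and sampled every `dt` seconds.


def to_polar_with_radial_velocity(
    rel_positions: List[Tuple[float, float]], dt: float
) -> List[Tuple[float, float, float]]:
    """Return (r, theta, r_dot) per time step.

    r is the range, theta the bearing in radians (atan2 convention), and r_dot
    the radial velocity estimated by finite differences of r: a forward
    difference at the first step and backward differences afterwards.
    Negative r_dot means the neighbour is closing in.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    polar = [(math.hypot(x, y), math.atan2(y, x)) for x, y in rel_positions]
    encoded = []
    for k, (r, theta) in enumerate(polar):
        if len(polar) == 1:
            r_dot = 0.0  # no motion information from a single sample
        elif k == 0:
            r_dot = (polar[1][0] - r) / dt
        else:
            r_dot = (r - polar[k - 1][0]) / dt
        encoded.append((r, theta, r_dot))
    return encoded


# A neighbour directly ahead, closing the gap by 2 m every 0.5 s.
track = to_polar_with_radial_velocity([(10.0, 0.0), (8.0, 0.0), (6.0, 0.0)], dt=0.5)
print(track)  # [(10.0, 0.0, -4.0), (8.0, 0.0, -4.0), (6.0, 0.0, -4.0)]
```

The radial velocity states directly whether a neighbor is approaching or receding, information that a raw (x, y) sequence only carries implicitly.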

Maneuver-based Anchor Trajectory Hypotheses at Roundabouts

Apr 22, 2021

Abstract: Predicting the future behavior of surrounding vehicles is crucial for self-driving platforms to navigate safely through traffic. This is critical when making decisions such as crossing an unsignalized intersection. We address the problem of vehicle motion prediction in a challenging roundabout environment by learning from human driver data. We extend existing recurrent encoder-decoder models so that they can be combined with anchor trajectories to predict vehicle behaviors on a roundabout. Drivers' intentions are encoded by a set of maneuvers that correspond to semantic driving concepts. Accordingly, our model employs a set of maneuver-specific anchor trajectories that cover the space of possible outcomes at the roundabout. The proposed model can output a multi-modal distribution over predicted future trajectories based on the maneuver-specific anchors. We evaluate our model on the public RounD dataset, and the experimental results show the effectiveness of the proposed maneuver-based anchor regression in improving prediction accuracy, reducing the average RMSE by 28% relative to the best baseline. Our code is available at https://github.com/m-hasan-n/roundabout.
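
The following minimal sketch shows how maneuver-specific anchors can yield a multi-modal output in a generic anchor-regression layer: each predicted trajectory is its maneuver's anchor plus a regressed offset, and the maneuver probabilities come from a softmax over per-maneuver logits. The network that would produce the offsets and logits is omitted, and the maneuver names, anchors, numbers, and function name are all hypothetical; this is not taken from the roundabout repository.

```python
import math
from typing import Dict, List, Tuple

# Generic anchor-plus-offset output layer; maneuver names, anchors and numbers
# are hypothetical, and the network producing offsets/logits is omitted.

Trajectory = List[Tuple[float, float]]


def anchor_mixture(
    anchors: Dict[str, Trajectory],
    offsets: Dict[str, Trajectory],
    logits: Dict[str, float],
) -> Dict[str, Tuple[float, Trajectory]]:
    """Map each maneuver to (probability, anchor + regressed offset)."""
    peak = max(logits.values())  # subtract the max for numerical stability
    weights = {m: math.exp(v - peak) for m, v in logits.items()}
    total = sum(weights.values())
    mixture = {}
    for maneuver, anchor in anchors.items():
        trajectory = [
            (ax + dx, ay + dy)
            for (ax, ay), (dx, dy) in zip(anchor, offsets[maneuver])
        ]
        mixture[maneuver] = (weights[maneuver] / total, trajectory)
    return mixture


anchors = {"exit": [(1.0, 0.0), (2.0, -1.0)], "continue": [(1.0, 0.0), (2.0, 1.0)]}
offsets = {"exit": [(0.5, 0.0), (0.0, 0.5)], "continue": [(0.0, 0.0), (0.0, 0.0)]}
logits = {"exit": 2.0, "continue": 0.0}

mixture = anchor_mixture(anchors, offsets, logits)
best = max(mixture, key=lambda m: mixture[m][0])
print(best, mixture[best])  # "exit", with its anchor shifted by the offsets
```

Because the anchors already encode the coarse shape of each maneuver, the regression only has to learn comparatively small corrections around them.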

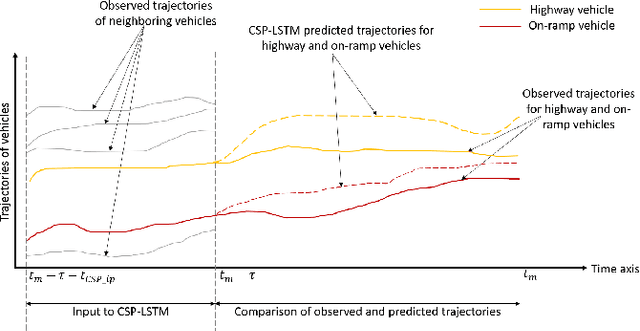

Comparing merging behaviors observed in naturalistic data with behaviors generated by a machine learned model

Apr 21, 2021

Abstract: There is a rapidly growing literature on machine-learned models that predict human driving trajectories in road traffic. These models focus their learning on low-dimensional error metrics, for example the average distance between model-generated and observed trajectories. Such metrics permit relative comparison of models, but do not provide clearly interpretable information on how close to human behavior the models actually come, for example in terms of higher-level behavioral phenomena that are known to be present in human driving. We study highway driving as an example scenario and introduce metrics to quantitatively demonstrate the presence, in a naturalistic dataset, of two familiar behavioral phenomena: (1) the kinematics-dependent contest, between on-highway and on-ramp vehicles, of who passes the merging point first; and (2) courtesy lane changes away from the outermost lane, to leave space for a merging vehicle. Applying exactly the same metrics to the output of a state-of-the-art machine-learned model, we show that the model is capable of reproducing the former phenomenon, but not the latter. We argue that this type of behavioral analysis provides information that is not available from conventional model-fitting metrics, and that it may be useful to analyze (and possibly fit) models based on these types of behavioral criteria as well.
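
The "average distance between model-generated and observed trajectories" is commonly called the average displacement error (ADE). A minimal sketch follows, assuming time-aligned 2-D positions; the function name is hypothetical and this is not the evaluation code behind the study.

```python
import math
from typing import List, Tuple

# Hypothetical helper name; ADE as commonly defined in trajectory prediction.

Point = Tuple[float, float]


def average_displacement_error(predicted: List[Point], observed: List[Point]) -> float:
    """Mean Euclidean distance between time-aligned predicted and observed points."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("trajectories must be non-empty and equally long")
    distances = [
        math.hypot(px - ox, py - oy)
        for (px, py), (ox, oy) in zip(predicted, observed)
    ]
    return sum(distances) / len(distances)


predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
observed = [(0.0, 0.0), (1.0, 0.0), (2.0, 3.0)]
print(average_displacement_error(predicted, observed))  # 1.0
```

Unlike RMSE, ADE averages distances rather than squared distances, so a single large deviation weighs less in the result.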

Human-like Planning for Reaching in Cluttered Environments

Mar 03, 2020

Abstract: Humans, in comparison to robots, are remarkably adept at reaching for objects in cluttered environments. The best existing robot planners are based on random sampling of configuration space, which becomes excessively high-dimensional with a large number of objects. Consequently, such planners often fail to efficiently find object manipulation plans in these environments. We addressed this problem by identifying high-level manipulation plans in humans and transferring these skills to robot planners. We used virtual reality to capture human participants reaching for a target object on a tabletop cluttered with obstacles. From this, we devised a qualitative representation of the task space to abstract the decision making, irrespective of the number of obstacles. Based on this representation, human demonstrations were segmented and used to train decision classifiers. Using these classifiers, our planner produced a list of waypoints in task space. These waypoints provided a high-level plan, which could be transferred to an arbitrary robot model and used to initialise a local trajectory optimiser. We evaluated this approach through testing on unseen human VR data, a physics-based robot simulation, and a real robot (dataset and code are publicly available). We found that the human-like planner outperformed a state-of-the-art standard trajectory optimisation algorithm and was able to generate effective strategies for rapid planning, irrespective of the number of obstacles in the environment.
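
One simple way to turn a list of task-space waypoints into an initial guess for a local trajectory optimiser is piecewise-linear interpolation. The sketch below assumes 3-D end-effector positions and a fixed number of samples per segment; the function name is hypothetical, collision checking and the optimiser itself are out of scope, and it is not the planner described in the paper.

```python
from typing import List, Sequence, Tuple

# Hypothetical helper: a naive initial guess for a local trajectory optimiser,
# not the paper's planner. Waypoints are assumed to be 3-D task-space positions.

Point3 = Tuple[float, float, float]


def interpolate_waypoints(
    waypoints: Sequence[Point3], steps_per_segment: int
) -> List[Point3]:
    """Densify waypoints into a piecewise-linear trajectory (endpoints included)."""
    if steps_per_segment < 1:
        raise ValueError("steps_per_segment must be at least 1")
    if len(waypoints) < 2:
        return [tuple(w) for w in waypoints]
    trajectory: List[Point3] = []
    for start, end in zip(waypoints[:-1], waypoints[1:]):
        for s in range(steps_per_segment):
            t = s / steps_per_segment  # fraction of the way along this segment
            trajectory.append(tuple(a + t * (b - a) for a, b in zip(start, end)))
    trajectory.append(tuple(waypoints[-1]))
    return trajectory


path = interpolate_waypoints([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)], 2)
print(path)
```

Because the waypoints live in task space rather than joint space, the same interpolated guess can in principle be handed to any robot model's optimiser, consistent with the transferability described above.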