Wisdom C. Agboh

AUTOLab at the University of California, Berkeley, University of Leeds

The Teenager's Problem: Efficient Garment Decluttering With Grasp Optimization

Oct 25, 2023

Abstract: This paper addresses the "Teenager's Problem": efficiently removing scattered garments from a planar surface. As grasping and transporting individual garments is highly inefficient, we propose analytical policies to select grasp locations for multiple garments using an overhead camera. Two classes of methods are considered: depth-based, which use overhead depth data to find efficient grasps, and segment-based, which use segmentation on the RGB overhead image (without requiring any depth data). Grasp efficiency is measured by Objects per Transport (OpT), the average number of objects removed per trip to the laundry basket. Experiments suggest that both depth- and segment-based methods improve OpT by $20\%$; furthermore, these approaches complement each other, with combined hybrid methods yielding improvements of $34\%$. Finally, a method employing consolidation (with segmentation) is considered, which manipulates the garments on the work surface to increase OpT; this yields an improvement of $67\%$ over the baseline, though at the cost of additional physical actions.
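The OpT metric defined in this abstract can be illustrated with a minimal sketch. The trip logs below are hypothetical numbers chosen for illustration, not data from the paper, and the function names are assumptions rather than part of any released code.

```python
def objects_per_transport(objects_per_trip):
    """Average number of objects removed per trip to the basket (OpT)."""
    if not objects_per_trip:
        raise ValueError("need at least one trip")
    return sum(objects_per_trip) / len(objects_per_trip)


def relative_improvement(baseline_opt, method_opt):
    """Fractional improvement of a method's OpT over a baseline's OpT."""
    return (method_opt - baseline_opt) / baseline_opt


# Hypothetical logs: each entry is the number of garments carried on one trip.
baseline_trips = [1, 1, 1, 1, 1, 1]  # six garments, one per trip
method_trips = [2, 1, 2, 1]          # the same six garments in four trips

baseline_opt = objects_per_transport(baseline_trips)
method_opt = objects_per_transport(method_trips)
improvement = relative_improvement(baseline_opt, method_opt)
```

Because OpT counts objects per trip, a higher value means fewer trips for the same number of garments, which is why the improvements above are reported as percentage increases over the baseline.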

Push-MOG: Efficient Pushing to Consolidate Polygonal Objects for Multi-Object Grasping

Jun 24, 2023

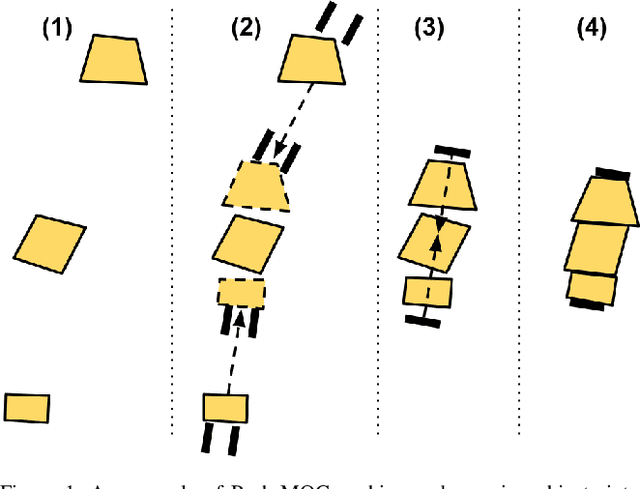

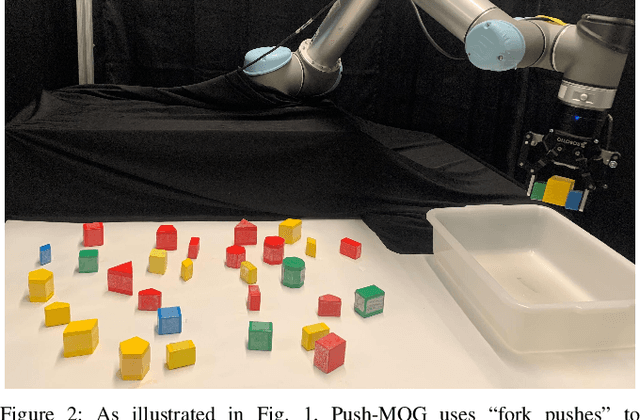

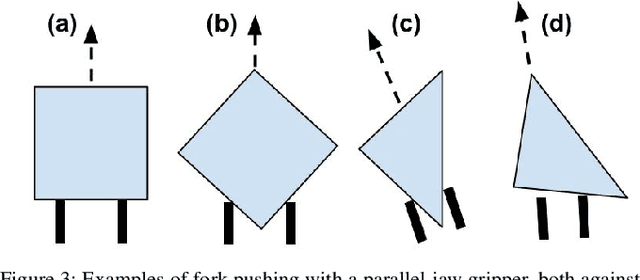

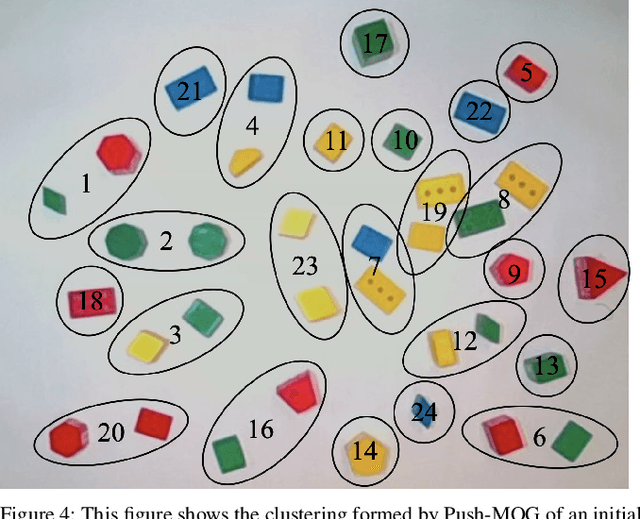

Abstract: Recently, robots have seen rapidly increasing use in homes and warehouses to declutter by collecting objects from a planar surface and placing them into a container. While current techniques grasp objects individually, Multi-Object Grasping (MOG) can improve efficiency by increasing the average number of objects grasped per trip (OpT). However, grasping multiple objects requires the objects to be aligned and in close proximity. In this work, we propose Push-MOG, an algorithm that computes "fork pushing" actions using a parallel-jaw gripper to create graspable object clusters. In physical decluttering experiments, we find that Push-MOG enables multi-object grasps, increasing the average OpT by 34%. Code and videos will be available at https://sites.google.com/berkeley.edu/push-mog.

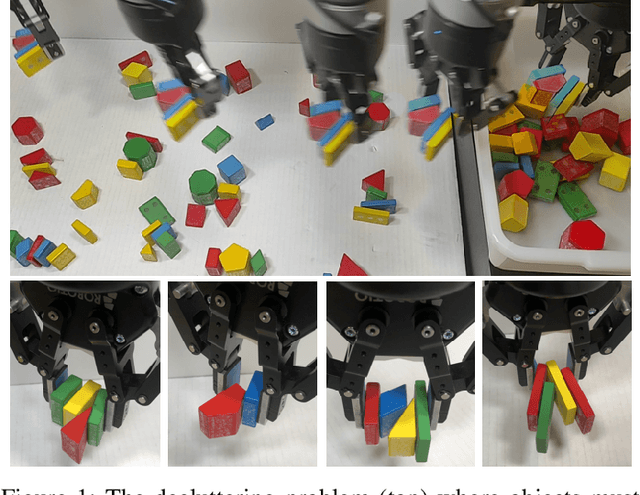

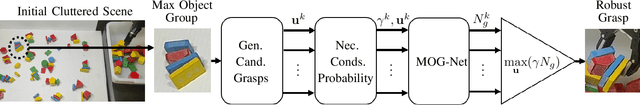

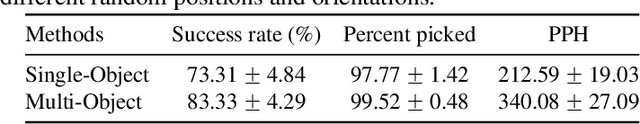

Learning to Efficiently Plan Robust Frictional Multi-Object Grasps

Oct 13, 2022

Abstract: We consider a decluttering problem where multiple rigid convex polygonal objects rest in randomly placed positions and orientations on a planar surface and must be efficiently transported to a packing box using both single and multi-object grasps. Prior work considered frictionless multi-object grasping. In this paper, we introduce friction to increase picks per hour. We train a neural network using real examples to plan robust multi-object grasps. In physical experiments, we find an 11.7% increase in success rates, a 1.7x increase in picks per hour, and an 8.2x decrease in grasp planning time compared to prior work on multi-object grasping. Videos are available at https://youtu.be/pEZpHX5FZIs.

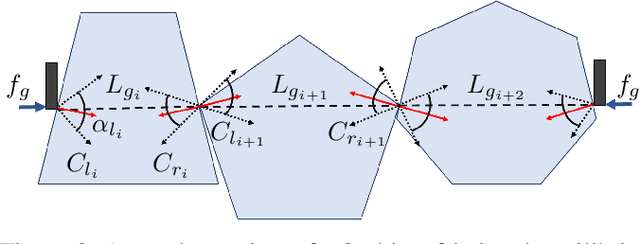

Multi-Object Grasping in the Plane

Jun 01, 2022

Abstract: We consider the problem where multiple rigid convex polygonal objects rest in randomly placed positions and orientations on a planar surface visible from an overhead camera. The objective is to efficiently grasp and transport all objects into a bin. Specifically, we explore multi-object push-grasps where multiple objects are pushed together before the grasp can occur. We provide necessary conditions for multi-object push-grasps and apply these to filter inadmissible grasps in a novel multi-object grasp planner. We find that our planner is 19 times faster than a MuJoCo simulator baseline. We also propose a picking algorithm that uses both single- and multi-object grasps to pick objects. In physical grasping experiments, compared to a single-object picking baseline, we find that the multi-object grasping system achieves 13.6% higher grasp success and is 59.9% faster. See https://sites.google.com/view/multi-object-grasping for videos, code, and data.

Robust Physics-Based Manipulation by Interleaving Open and Closed-Loop Execution

May 18, 2021

Abstract: We present a planning and control framework for physics-based manipulation under uncertainty. The key idea is to interleave robust open-loop execution with closed-loop control. We derive robustness metrics through contraction theory. We use these metrics to plan trajectories that are robust to both state uncertainty and model inaccuracies. However, fully robust trajectories are extremely difficult to find or may not exist for many multi-contact manipulation problems. We separate a trajectory into robust and non-robust segments through a minimum cost path search on a robustness graph. Robust segments are executed open-loop and non-robust segments are executed with model-predictive control. We conduct experiments on a real robotic system for reaching in clutter. Our results suggest that the open- and closed-loop approach yields up to 35% more real-world success compared to open-loop baselines and a 40% reduction in execution time compared to model-predictive control. We show for the first time that partially open-loop manipulation plans generated with our approach reach similar success rates to model-predictive control, while achieving a more fluent/real-time execution. A video showing real-robot executions can be found at https://youtu.be/rPOPCwHfV4g.

Occlusion-Aware Search for Object Retrieval in Clutter

Nov 10, 2020

Abstract: We address the manipulation task of retrieving a target object from a cluttered shelf. When the target object is hidden, the robot must search through the clutter to retrieve it. Solving this task requires reasoning over the likely locations of the target object. It also requires physics reasoning over multi-object interactions and future occlusions. In this work, we present a data-driven approach for generating occlusion-aware actions in closed loop. We present a hybrid planner that explores likely states generated from a learned distribution over the location of the target object. The search is guided by a heuristic trained with reinforcement learning to evaluate occluded observations. We evaluate our approach in different environments with varying clutter densities and physics parameters. The results validate that our approach can search for and retrieve a target object in different physics environments, while only being trained in simulation. It achieves near real-time behaviour with a success rate exceeding 88%.

Human-like Planning for Reaching in Cluttered Environments

Mar 03, 2020

Abstract: Humans, in comparison to robots, are remarkably adept at reaching for objects in cluttered environments. The best existing robot planners are based on random sampling of configuration space, which becomes excessively high-dimensional with a large number of objects. Consequently, most planners often fail to efficiently find object manipulation plans in such environments. We addressed this problem by identifying high-level manipulation plans in humans, and transferring these skills to robot planners. We used virtual reality to capture human participants reaching for a target object on a tabletop cluttered with obstacles. From this, we devised a qualitative representation of the task space to abstract the decision making, irrespective of the number of obstacles. Based on this representation, human demonstrations were segmented and used to train decision classifiers. Using these classifiers, our planner produced a list of waypoints in task space. These waypoints provided a high-level plan, which could be transferred to an arbitrary robot model and used to initialise a local trajectory optimiser. We evaluated this approach through testing on unseen human VR data, a physics-based robot simulation, and a real robot (dataset and code are publicly available). We found that the human-like planner outperformed a state-of-the-art standard trajectory optimisation algorithm, and was able to generate effective strategies for rapid planning, irrespective of the number of obstacles in the environment.

Combining Coarse and Fine Physics for Manipulation using Parallel-in-Time Integration

Mar 20, 2019

Abstract: We present a method for fast and accurate physics-based predictions during non-prehensile manipulation planning and control. Given an initial state and a sequence of controls, the problem of predicting the resulting sequence of states is a key component of a variety of model-based planning and control algorithms. We propose combining a coarse (i.e. computationally cheap but not very accurate) predictive physics model with a fine (i.e. computationally expensive but accurate) predictive physics model, to generate a hybrid model that meets the speed and accuracy required for a given manipulation task. Our approach is based on the Parareal algorithm, a parallel-in-time integration method used for computing numerical solutions for general systems of ordinary differential equations. We use Parareal to combine a coarse pushing model with an off-the-shelf physics engine to deliver physics-based predictions that are as accurate as the physics engine but run in substantially less wall-clock time, thanks to Parareal being amenable to parallelization. We use these physics-based predictions in a model-predictive-control framework based on trajectory optimization, to plan pushing actions that avoid an obstacle and reach a goal location. We show that by combining the two physics models, we can achieve the same success rates as the planner that uses the off-the-shelf physics engine directly, but significantly faster. We present experiments in simulation and on a real robotic setup.
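To make the Parareal idea concrete, here is a minimal sketch of the generic Parareal iteration on a scalar ODE. It is not the paper's implementation: the coarse pushing model and the physics engine are replaced, purely as an assumption for illustration, by forward-Euler integrators with one step (coarse) and many steps (fine) per time slice. All function and parameter names are hypothetical.

```python
def euler(f, y, t0, t1, steps):
    """Integrate dy/dt = f(t, y) from t0 to t1 with forward Euler."""
    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        y = y + h * f(t, y)
        t += h
    return y


def parareal(f, y0, t0, t1, n_slices, n_iters, coarse_steps=1, fine_steps=100):
    """Return slice boundaries and the Parareal estimate at each boundary."""
    ts = [t0 + (t1 - t0) * i / n_slices for i in range(n_slices + 1)]

    def coarse(y, a, b):  # cheap, inaccurate propagator
        return euler(f, y, a, b, coarse_steps)

    def fine(y, a, b):    # expensive, accurate propagator
        return euler(f, y, a, b, fine_steps)

    # Initial guess: one serial sweep with the coarse propagator.
    u = [y0]
    for n in range(n_slices):
        u.append(coarse(u[n], ts[n], ts[n + 1]))

    for _ in range(n_iters):
        # These per-slice evaluations are independent of each other, so they
        # could run in parallel; here they are computed in a plain loop.
        fine_old = [fine(u[n], ts[n], ts[n + 1]) for n in range(n_slices)]
        coarse_old = [coarse(u[n], ts[n], ts[n + 1]) for n in range(n_slices)]

        # Serial correction sweep: new coarse prediction plus the
        # fine-minus-coarse correction from the previous iterate.
        new_u = [y0]
        for n in range(n_slices):
            coarse_new = coarse(new_u[n], ts[n], ts[n + 1])
            new_u.append(coarse_new + fine_old[n] - coarse_old[n])
        u = new_u
    return ts, u


def decay(t, y):
    """Test problem dy/dt = -y."""
    return -y


ts, u = parareal(decay, 1.0, 0.0, 2.0, n_slices=4, n_iters=4)
```

With as many iterations as time slices, the estimate matches what the fine propagator would give if run serially, up to floating-point rounding. The wall-clock benefit comes from running the fine evaluations in parallel and stopping after fewer iterations once the estimate is accurate enough for the task.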

Pushing Fast and Slow: Task-Adaptive Planning for Non-prehensile Manipulation Under Uncertainty

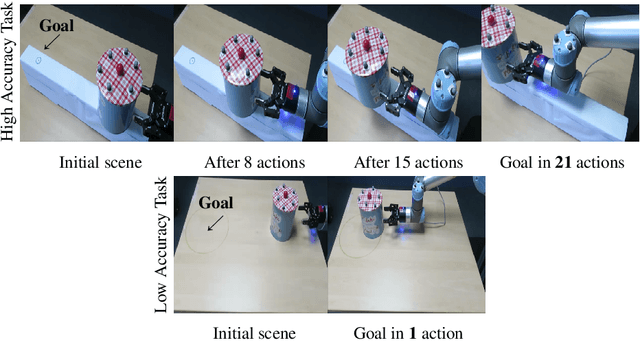

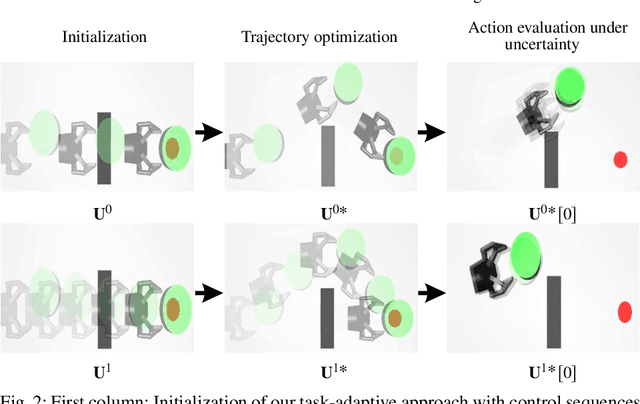

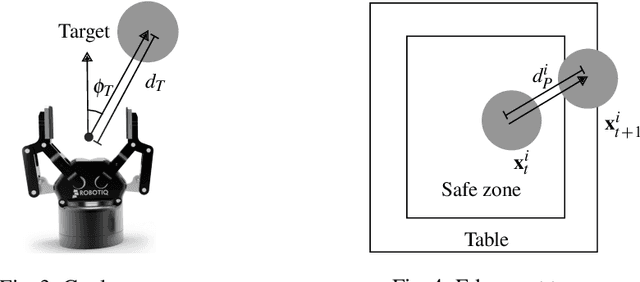

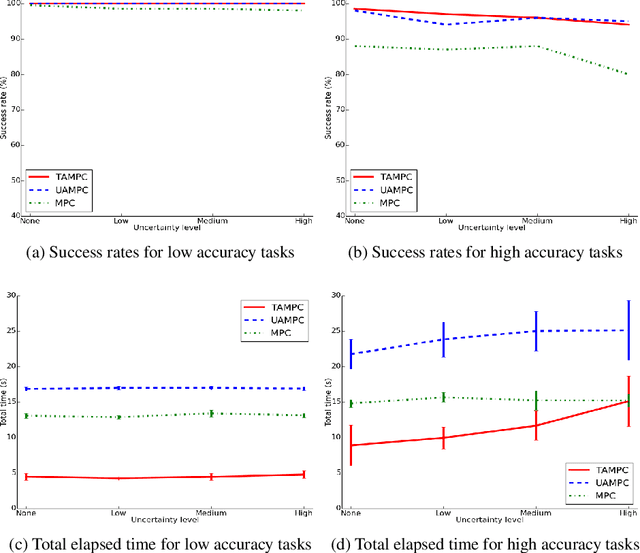

Jan 21, 2019

Abstract: We propose a planning and control approach to physics-based manipulation. The key feature of the algorithm is that it can adapt to the accuracy requirements of a task, by slowing down and generating "careful" motion when the task requires high accuracy, and by speeding up and moving fast when the task tolerates inaccuracy. We formulate the problem as an MDP with action-dependent stochasticity and propose an approximate online solution to it. We use a trajectory optimizer with a deterministic model to suggest promising actions to the MDP, to reduce computation time spent on evaluating different actions. We conducted experiments in simulation and on a real robotic system. Our results show that with a task-adaptive planning and control approach, a robot can choose fast or slow actions depending on the task accuracy and uncertainty level. The robot makes these decisions online and is able to maintain high success rates while completing manipulation tasks as fast as possible.

One-Shot Observation Learning

Oct 17, 2018

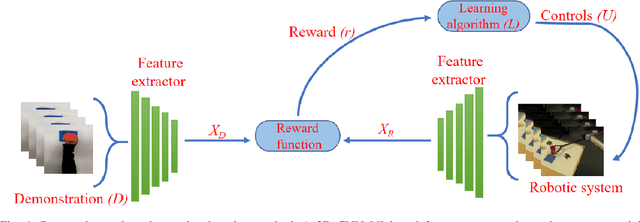

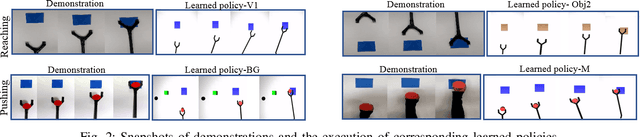

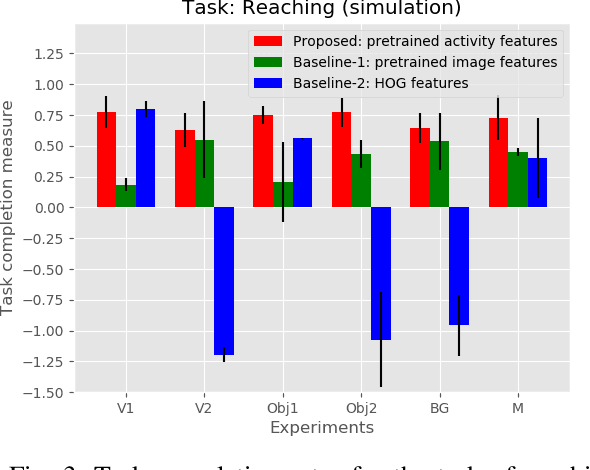

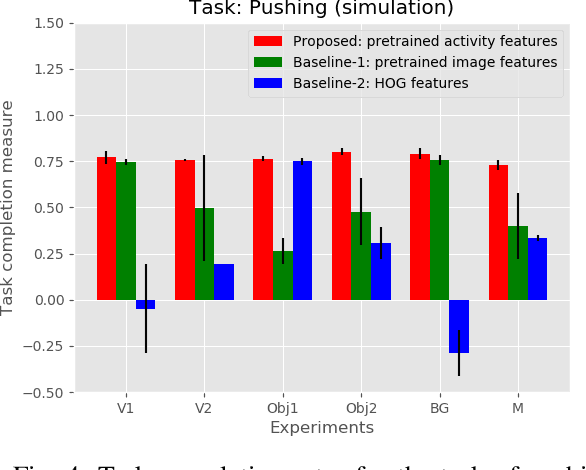

Abstract: Observation learning is the process of learning a task by observing an expert demonstrator. We present a robust observation learning method for robotic systems. Our principal contributions are in introducing a one-shot learning method where only a single demonstration is needed for learning and in proposing a novel feature extraction method for extracting unique activity features from the demonstration. Reward values are then generated from these demonstrations. We use a learning algorithm with these rewards to learn the controls for a robotic manipulator to perform the demonstrated task. With simulation and real robot experiments, we show that the proposed method can be used to learn tasks from a single demonstration under varying conditions of viewpoints, object properties, morphology of manipulators and scene backgrounds.
