Tianshuang Qiu

AUTOLab at the University of California, Berkeley

Omni-Scan: Creating Visually-Accurate Digital Twin Object Models Using a Bimanual Robot with Handover and Gaussian Splat Merging

Aug 01, 2025

Abstract:3D Gaussian Splats (3DGSs) are 3D object models derived from multi-view images. Such "digital twins" are useful for simulations, virtual reality, marketing, robot policy fine-tuning, and part inspection. 3D object scanning usually requires multi-camera arrays, precise laser scanners, or robot wrist-mounted cameras, which have restricted workspaces. We propose Omni-Scan, a pipeline for producing high-quality 3D Gaussian Splat models using a bi-manual robot that grasps an object with one gripper and rotates the object with respect to a stationary camera. The object is then re-grasped by a second gripper to expose surfaces that were occluded by the first gripper. We present the Omni-Scan robot pipeline using DepthAnything, Segment Anything, as well as RAFT optical flow models to identify and isolate objects held by a robot gripper while removing the gripper and the background. We then modify the 3DGS training pipeline to support concatenated datasets with gripper occlusion, producing an omni-directional (360-degree view) model of the object. We apply Omni-Scan to part defect inspection, finding that it can identify visual or geometric defects in 12 different industrial and household objects with an average accuracy of 83%. Interactive videos of Omni-Scan 3DGS models can be found at https://berkeleyautomation.github.io/omni-scan/

Breathless: An 8-hour Performance Contrasting Human and Robot Expressiveness

Nov 19, 2024

Abstract:This paper describes the robot technology behind an original performance that pairs a human dancer (Cuan) with an industrial robot arm for an eight-hour dance that unfolds over the timespan of an American workday. To control the robot arm, we combine a range of sinusoidal motions with varying amplitude, frequency, and offset at each joint to evoke human motions common in physical labor such as stirring, digging, and stacking. More motions were developed using deep learning techniques for video-based human-pose tracking and extraction. We combine these pre-recorded motions with improvised robot motions created live by putting the robot into teach-mode and triggering force sensing from the robot joints onstage. All motions are combined with commercial and original music using a custom suite of Python software with AppleScript, Keynote, and Zoom to facilitate on-stage communication with the dancer. The resulting performance contrasts the expressivity of the human body with the precision of robot machinery. Video, code, and data are available on the project website: https://sites.google.com/playing.studio/breathless
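The per-joint sinusoidal motions mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' code: the function name `sinusoidal_joint_angles` and all parameter values are hypothetical, and it assumes each joint simply follows offset + amplitude * sin(2π * frequency * t).

```python
import math

def sinusoidal_joint_angles(t, amplitudes, frequencies, offsets):
    """Return one angle (radians) per joint at time t (seconds).

    Assumed model: angle = offset + amplitude * sin(2 * pi * frequency * t).
    """
    return [
        off + amp * math.sin(2.0 * math.pi * freq * t)
        for amp, freq, off in zip(amplitudes, frequencies, offsets)
    ]

# Hypothetical parameters for a three-joint motion (radians and Hz).
amplitudes = [0.3, 0.2, 0.1]
frequencies = [0.5, 0.5, 1.0]
offsets = [0.0, -0.8, 1.2]

# Sample the motion every 0.1 s for 5 s.
trajectory = [
    sinusoidal_joint_angles(k * 0.1, amplitudes, frequencies, offsets)
    for k in range(50)
]
```

Varying the three parameter lists changes the character of the motion; a real controller would also need to respect joint limits and velocity limits, which this sketch ignores.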

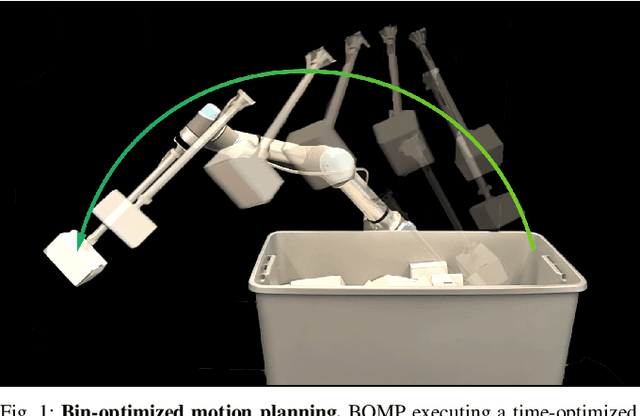

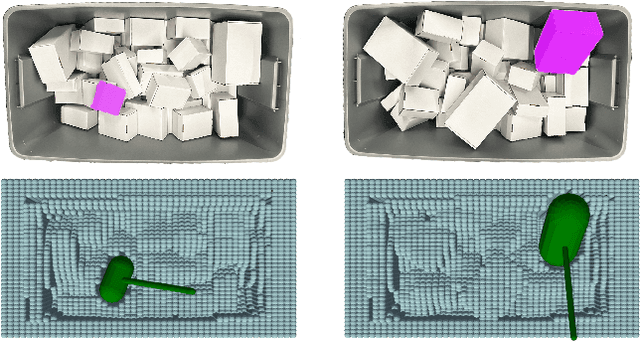

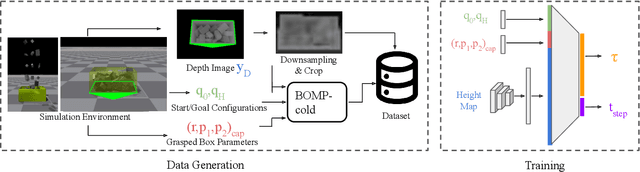

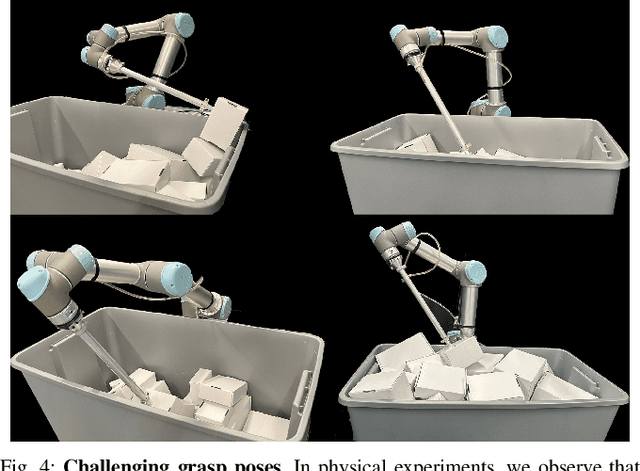

BOMP: Bin-Optimized Motion Planning

Oct 31, 2024

Abstract:In logistics, the ability to quickly compute and execute pick-and-place motions from bins is critical to increasing productivity. We present Bin-Optimized Motion Planning (BOMP), a motion planning framework that plans arm motions for a six-axis industrial robot with a long-nosed suction tool to remove boxes from deep bins. BOMP considers robot arm kinematics, actuation limits, the dimensions of a grasped box, and a varying height map of a bin environment to rapidly generate time-optimized, jerk-limited, and collision-free trajectories. The optimization is warm-started using a deep neural network trained offline in simulation with 25,000 scenes and corresponding trajectories. Experiments with 96 simulated and 15 physical environments suggest that BOMP generates collision-free trajectories that are up to 58% faster than baseline sampling-based planners and up to 36% faster than an industry-standard Up-Over-Down algorithm, which has an extremely low 15% success rate in this context. BOMP also generates jerk-limited trajectories while baselines do not. Website: https://sites.google.com/berkeley.edu/bomp.

FogROS2-PLR: Probabilistic Latency-Reliability For Cloud Robotics

Oct 07, 2024

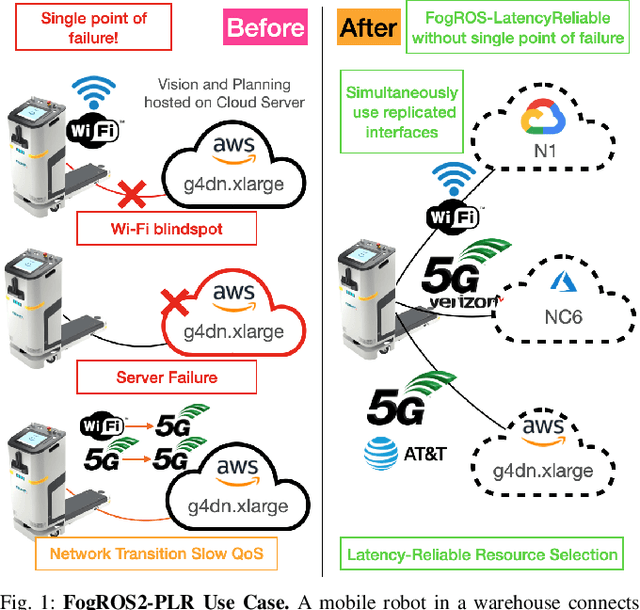

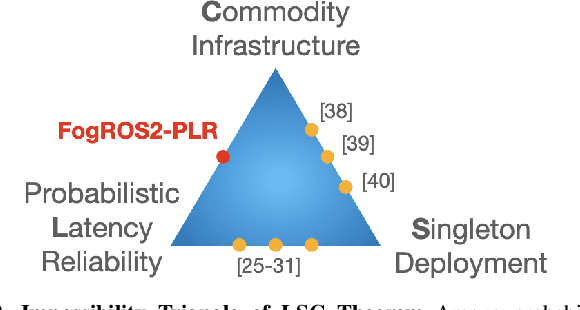

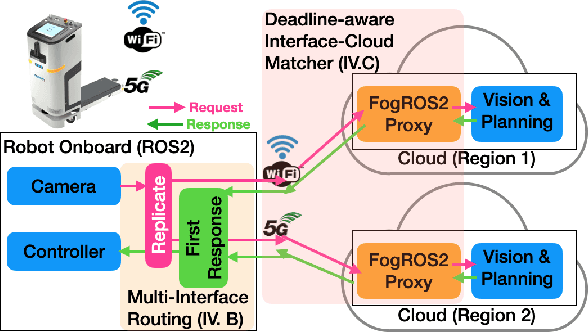

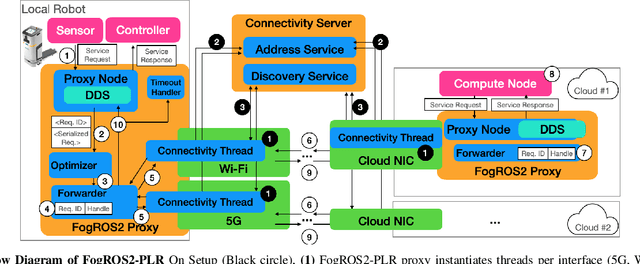

Abstract:Cloud robotics enables robots to offload computationally intensive tasks to cloud servers for performance, cost, and ease of management. However, the network and cloud computing infrastructure are not designed for reliable timing guarantees, due to fluctuating Quality-of-Service (QoS). In this work, we formulate an impossibility triangle theorem for: Latency reliability, Singleton server, and Commodity hardware. The LSC theorem suggests that providing replicated servers with uncorrelated failures can exponentially reduce the probability of missing a deadline. We present FogROS2-Probabilistic Latency Reliability (PLR) that uses multiple independent network interfaces to send requests to replicated cloud servers and uses the first response back. We design routing mechanisms to discover, connect, and route through non-default network interfaces on robots. FogROS2-PLR optimizes the selection of interfaces to servers to minimize the probability of missing a deadline. We conduct a cloud-connected driving experiment with two 5G service providers, demonstrating that FogROS2-PLR provides smooth service quality even if one of the service providers experiences low coverage and base station handover. We use 99th-percentile (P99) latency to evaluate anomalous long-tail latency behavior. In one experiment, FogROS2-PLR improves P99 latency by up to 3.7x compared to using one service provider. We deploy FogROS2-PLR on a physical Stretch 3 robot performing an indoor human-tracking task. Even in a fully covered Wi-Fi and 5G environment, FogROS2-PLR improves the responsiveness of the robot, reducing mean latency by 36% and P99 latency by 33%.
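The claim that replication with uncorrelated failures reduces the deadline-miss probability exponentially can be made concrete with a small sketch. It assumes fully independent paths, so a request misses its deadline only if every replica misses; the function names and the 10% per-path miss rate are hypothetical and are not taken from the paper.

```python
import math

def miss_probability(per_path_miss_probs):
    """Probability that all replicated paths miss the deadline,
    assuming the paths fail independently (uncorrelated failures)."""
    return math.prod(per_path_miss_probs)

def first_response_latency(latencies_ms):
    """Latency observed when the robot uses the first response back."""
    return min(latencies_ms)

# Hypothetical numbers: each path alone misses the deadline 10% of the time.
single = miss_probability([0.10])              # one server
double = miss_probability([0.10, 0.10])        # two independent replicas
triple = miss_probability([0.10, 0.10, 0.10])  # three independent replicas
```

Under this independence assumption, n replicas that each miss with probability p miss jointly with probability p^n, which is the exponential reduction the abstract refers to. Correlated failures, such as two interfaces sharing one congested link, would weaken the effect.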

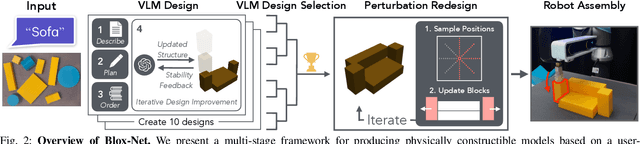

Blox-Net: Generative Design-for-Robot-Assembly Using VLM Supervision, Physics Simulation, and a Robot with Reset

Sep 25, 2024

Abstract:Generative AI systems have shown impressive capabilities in creating text, code, and images. Inspired by the rich history of research in industrial "Design for Assembly", we introduce a novel problem: Generative Design-for-Robot-Assembly (GDfRA). The task is to generate an assembly based on a natural language prompt (e.g., "giraffe") and an image of available physical components, such as 3D-printed blocks. The output is an assembly, a spatial arrangement of these components, and instructions for a robot to build this assembly. The output must 1) resemble the requested object and 2) be reliably assembled by a 6 DoF robot arm with a suction gripper. We then present Blox-Net, a GDfRA system that combines generative vision language models with well-established methods in computer vision, simulation, perturbation analysis, motion planning, and physical robot experimentation to solve a class of GDfRA problems with minimal human supervision. Blox-Net achieved a Top-1 accuracy of 63.5% in the "recognizability" of its designed assemblies (e.g., resembling a giraffe as judged by a VLM). These designs, after automated perturbation redesign, were reliably assembled by a robot, achieving near-perfect success across 10 consecutive assembly iterations with human intervention only during reset prior to assembly. Surprisingly, this entire design process from textual word ("giraffe") to reliable physical assembly is performed with zero human intervention.

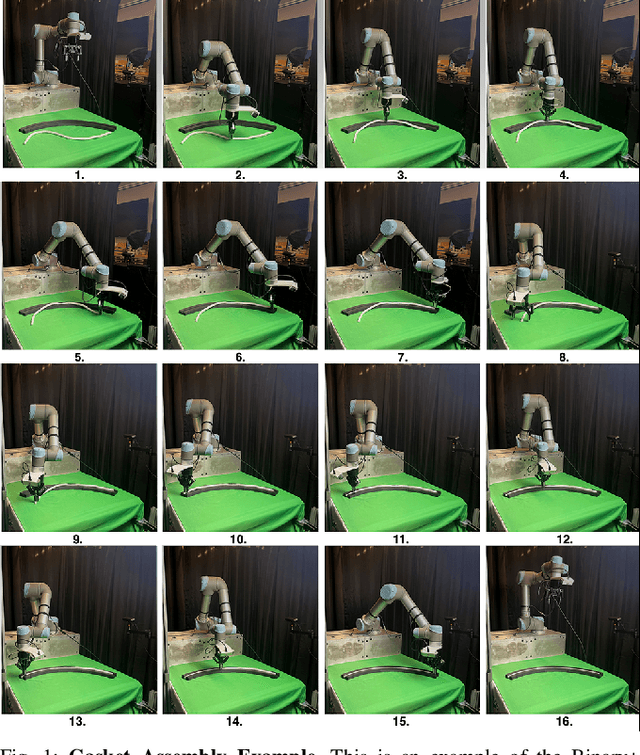

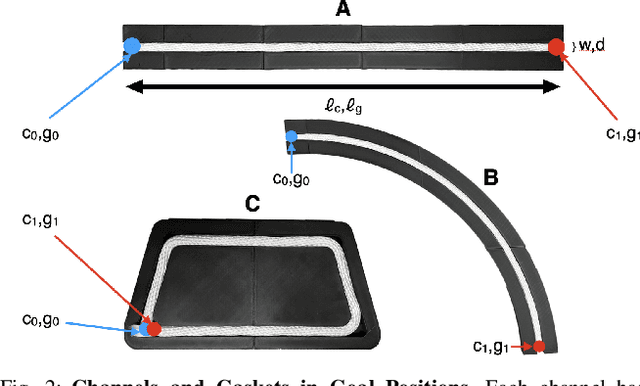

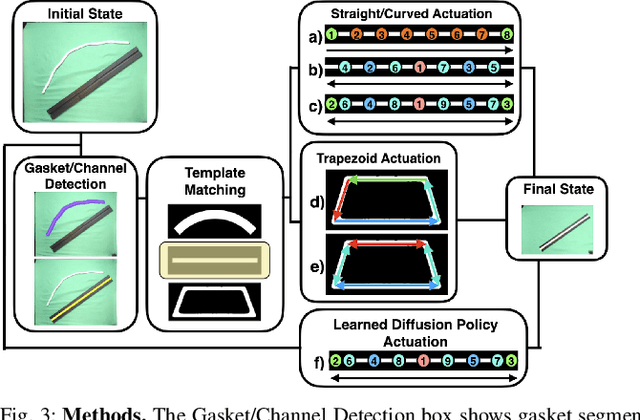

Automating Deformable Gasket Assembly

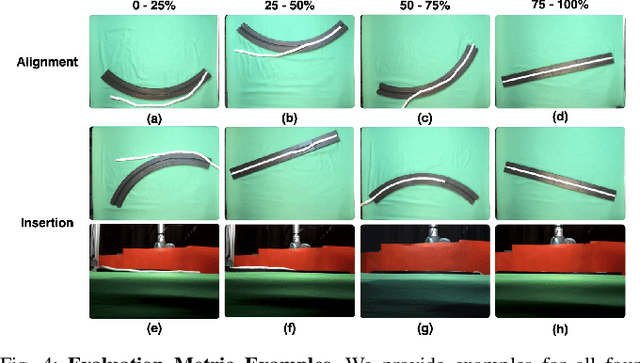

Aug 22, 2024

Abstract:In Gasket Assembly, a deformable gasket must be aligned and pressed into a narrow channel. This task is common for sealing surfaces in the manufacturing of automobiles, appliances, electronics, and other products. Gasket Assembly is a long-horizon, high-precision task and the gasket must align with the channel and be fully pressed in to achieve a secure fit. To compare approaches, we present 4 methods for Gasket Assembly: one policy from deep imitation learning and three procedural algorithms. We evaluate these methods with 100 physical trials. Results suggest that the Binary+ algorithm succeeds in 10/10 on the straight channel whereas the learned policy based on 250 human teleoperated demonstrations succeeds in 8/10 trials and is significantly slower. Code, CAD models, videos, and data can be found at https://berkeleyautomation.github.io/robot-gasket/

The Teenager's Problem: Efficient Garment Decluttering With Grasp Optimization

Oct 25, 2023

Abstract:This paper addresses the "Teenager's Problem": efficiently removing scattered garments from a planar surface. As grasping and transporting individual garments is highly inefficient, we propose analytical policies to select grasp locations for multiple garments using an overhead camera. Two classes of methods are considered: depth-based, which use overhead depth data to find efficient grasps, and segment-based, which use segmentation on the RGB overhead image (without requiring any depth data); grasp efficiency is measured by Objects per Transport (OpT), which denotes the average number of objects removed per trip to the laundry basket. Experiments suggest that both depth- and segment-based methods easily improve OpT by 20%; furthermore, these approaches complement each other, with combined hybrid methods yielding improvements of 34%. Finally, a method employing consolidation (with segmentation) is considered, which manipulates the garments on the work surface to increase OpT; this yields an improvement of 67% over the baseline, though at a cost of additional physical actions.
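As a rough illustration of the Objects per Transport metric defined in the abstract, the sketch below averages the number of garments removed per trip and reports a relative improvement over a baseline. The function names and the trip logs are hypothetical and chosen only to show the arithmetic; they are not data from the paper.

```python
def objects_per_transport(objects_per_trip):
    """Average number of objects removed per trip to the basket."""
    if not objects_per_trip:
        raise ValueError("at least one trip is required")
    return sum(objects_per_trip) / len(objects_per_trip)

def relative_improvement(baseline, value):
    """Percentage improvement of value over baseline."""
    return 100.0 * (value - baseline) / baseline

# Hypothetical trip logs: garments removed on each trip.
single_grasp_trips = [1, 1, 1, 1, 1]  # one garment per trip
multi_grasp_trips = [2, 1, 1, 1, 1]   # one trip carries two garments

baseline_opt = objects_per_transport(single_grasp_trips)
multi_opt = objects_per_transport(multi_grasp_trips)
gain_percent = relative_improvement(baseline_opt, multi_opt)
```

With these made-up logs the baseline averages 1.0 object per trip and the multi-garment policy averages 1.2, a gain of about 20%.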

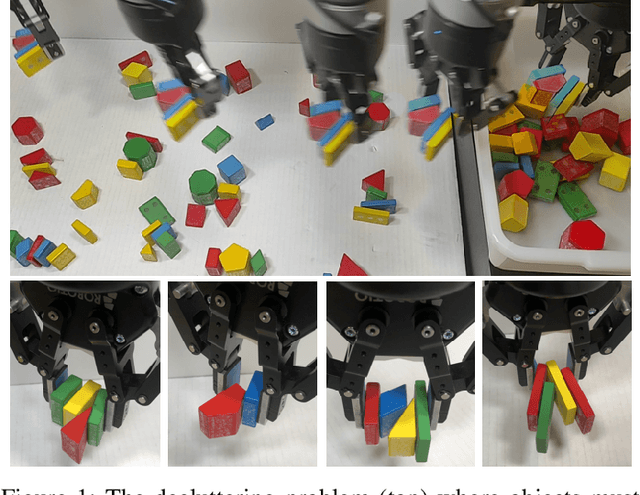

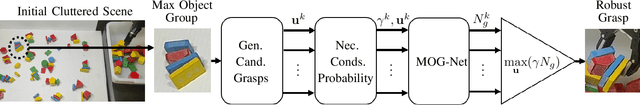

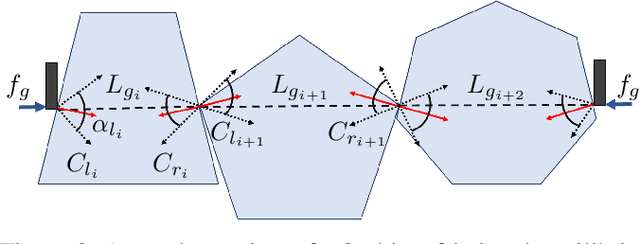

Learning to Efficiently Plan Robust Frictional Multi-Object Grasps

Oct 13, 2022

Abstract:We consider a decluttering problem where multiple rigid convex polygonal objects rest in randomly placed positions and orientations on a planar surface and must be efficiently transported to a packing box using both single and multi-object grasps. Prior work considered frictionless multi-object grasping. In this paper, we introduce friction to increase picks per hour. We train a neural network using real examples to plan robust multi-object grasps. In physical experiments, we find an 11.7% increase in success rates, a 1.7x increase in picks per hour, and an 8.2x decrease in grasp planning time compared to prior work on multi-object grasping. Videos are available at https://youtu.be/pEZpHX5FZIs.
