Wissam Bejjani

Occlusion-Aware Search for Object Retrieval in Clutter

Nov 10, 2020

Abstract: We address the manipulation task of retrieving a target object from a cluttered shelf. When the target object is hidden, the robot must search through the clutter to retrieve it. Solving this task requires reasoning over the likely locations of the target object. It also requires physics reasoning over multi-object interactions and future occlusions. In this work, we present a data-driven approach for generating occlusion-aware actions in closed loop. We present a hybrid planner that explores likely states generated from a learned distribution over the location of the target object. The search is guided by a heuristic trained with reinforcement learning to evaluate occluded observations. We evaluate our approach in different environments with varying clutter densities and physics parameters. The results show that our approach can search for and retrieve a target object in different physics environments, despite being trained only in simulation. It achieves near real-time behaviour with a success rate exceeding 88%.

Learning Physics-Based Manipulation in Clutter: Combining Image-Based Generalization and Look-Ahead Planning

Apr 03, 2019

Abstract: Physics-based manipulation in clutter involves complex interaction between multiple objects. In this paper, we consider the problem of learning, from interaction in a physics simulator, manipulation skills to solve this multi-step sequential decision-making problem in the real world. Our approach has two key properties: (i) the ability to generalize (over the shape and number of objects in the scene) using an abstract image-based representation that enables a neural network to learn useful features; and (ii) the ability to perform look-ahead planning using a physics simulator, which is essential for such multi-step problems. We show, in sets of simulated and real-world experiments (video available at https://youtu.be/EmkUQfyvwkY), that by learning to evaluate actions in an abstract image-based representation of the real world, the robot can generalize and adapt to the object shapes in challenging real-world environments.

Planning with a Receding Horizon for Manipulation in Clutter using a Learned Value Function

Jul 27, 2018

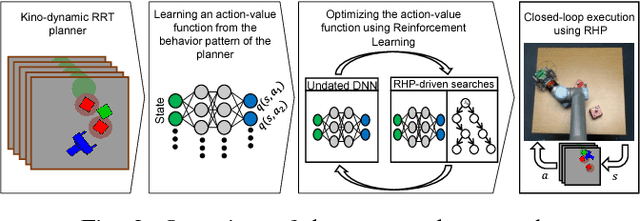

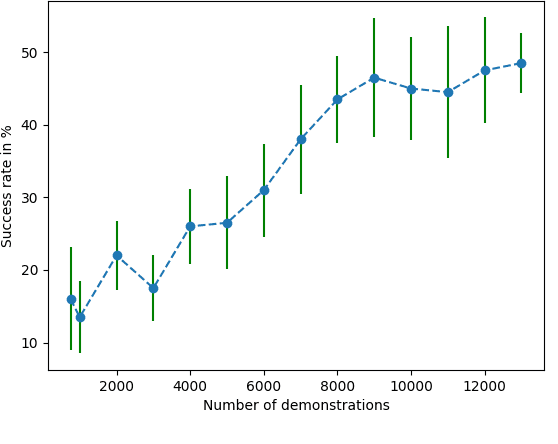

Abstract: Manipulation in clutter requires solving complex sequential decision-making problems in an environment rich with physical interactions. The transfer of motion planning solutions from simulation to the real world, in open loop, suffers from the inherent uncertainty in modelling real-world physics. We propose interleaving planning and execution in real time, in a closed-loop setting, using a Receding Horizon Planner (RHP) for pushing manipulation in clutter. In this context, we address the problem of finding a suitable value-function-based heuristic for efficient planning, and for estimating the cost-to-go from the horizon to the goal. We first estimate such a value function using plans generated by an existing sampling-based planner. Then, we further optimize the value function through reinforcement learning. We evaluate our approach and compare it to state-of-the-art planning techniques for manipulation in clutter. We conduct experiments in simulation with artificially injected uncertainty on the physics parameters, as well as in real-world tasks of manipulation in clutter. We show that this approach enables the robot to react effectively to the uncertain dynamics of the real world.
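
The closed-loop idea in this abstract (plan over a short horizon, score the horizon state with a value estimate, execute only the first action, then replan) can be illustrated with a toy sketch. This is not the authors' planner: the 1-D world, the `slip` noise model, the exhaustive enumeration of action sequences (used here in place of sampled rollouts), and the hand-written `value_estimate` (standing in for a learned value function) are all assumptions made for illustration.

```python
import itertools
import random

ACTIONS = (-1, 0, 1)  # hypothetical 1-D "push left / wait / push right"

def model_step(state, action):
    """Deterministic planning model (a stand-in for a physics simulator)."""
    return state + action

def real_step(state, action, rng, slip):
    """'Real' dynamics: with probability `slip` the action has no effect."""
    return state if rng.random() < slip else state + action

def value_estimate(state, goal):
    """Hand-written cost-to-go; a learned value function would go here."""
    return -abs(goal - state)

def plan_first_action(state, goal, horizon):
    """Score every action sequence up to the horizon and return the first
    action of the best one. Score = accumulated step cost in the model
    plus the value estimate at the horizon state."""
    best_score, best_action = float("-inf"), 0
    for seq in itertools.product(ACTIONS, repeat=horizon):
        s, ret = state, 0.0
        for a in seq:
            s = model_step(s, a)
            if s != goal:
                ret -= 1.0  # unit cost for every step spent away from the goal
        score = ret + value_estimate(s, goal)
        if score > best_score:
            best_score, best_action = score, seq[0]
    return best_action

def run_rhp(start, goal, horizon=3, slip=0.2, max_steps=100, seed=0):
    """Interleave planning and execution: replan from the observed state
    after every executed action, so slips are corrected on the next step."""
    rng = random.Random(seed)
    state, steps = start, 0
    while state != goal and steps < max_steps:
        action = plan_first_action(state, goal, horizon)
        state = real_step(state, action, rng, slip)
        steps += 1
    return state, steps

if __name__ == "__main__":
    print(run_rhp(start=0, goal=5))
```

Because the planner re-observes the state before each decision, a slipped action costs one extra step and nothing more; an open-loop plan computed once from the model would instead fall short of the goal whenever a slip occurred.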
