Rafael Papallas

Online state vector reduction during model predictive control with gradient-based trajectory optimisation

Aug 21, 2024

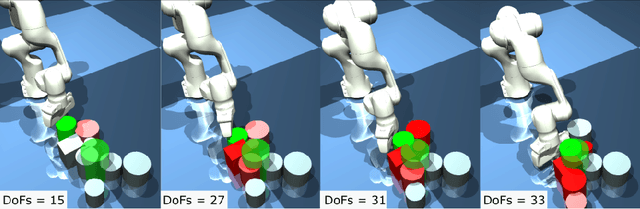

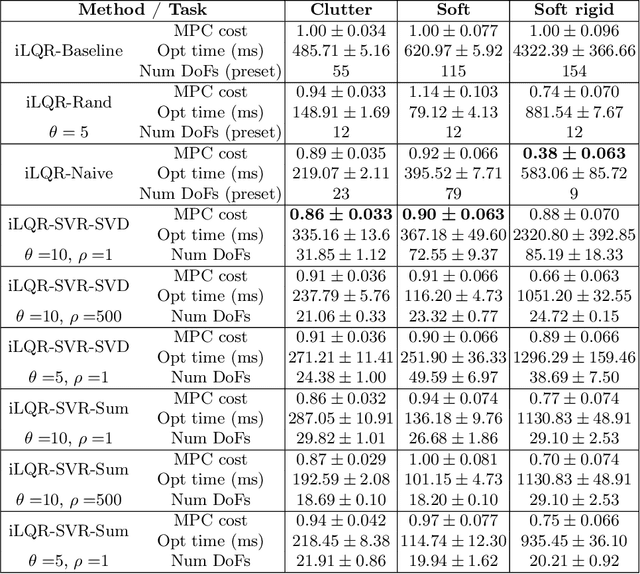

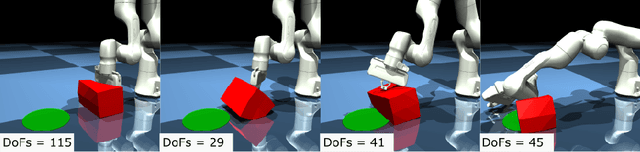

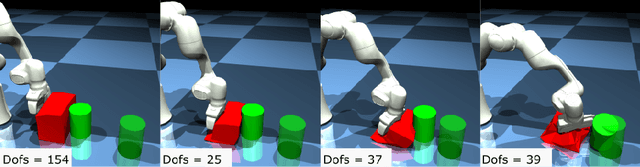

Abstract: Non-prehensile manipulation in high-dimensional systems is challenging for a variety of reasons; one of the main ones is the long planning time that comes with a large state space. Trajectory optimisation algorithms have proved their utility in a wide variety of tasks but, like most methods, struggle to scale to the high-dimensional systems ubiquitous in non-prehensile manipulation in clutter and in deformable object manipulation. We reason that, during manipulation, different degrees of freedom become more or less important to the task as the system evolves. We leverage this idea to reduce the number of degrees of freedom considered in a trajectory optimisation problem, and thereby reduce planning times. This idea is particularly relevant in the context of model predictive control (MPC), where the cost landscape of the optimisation problem is constantly evolving. We provide simulation results under asynchronous MPC and show that our methods achieve better overall performance due to the decreased policy lag, whilst still being able to optimise trajectories effectively.
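As a rough illustration of optimising over only a subset of degrees of freedom, the sketch below picks "active" DoFs by a finite-difference cost sensitivity and applies a gradient step to those alone. The abstract does not state how DoFs are selected, so the quadratic cost, the sensitivity threshold, and all function names here are hypothetical choices made for the example, not the paper's method.

```python
def cost(state, goal):
    """Toy quadratic cost: squared distance of every DoF from its goal value."""
    return sum((s - g) ** 2 for s, g in zip(state, goal))


def sensitivities(state, goal, eps=1e-4):
    """Finite-difference estimate of how strongly each DoF affects the cost."""
    base = cost(state, goal)
    result = []
    for i in range(len(state)):
        bumped = list(state)
        bumped[i] += eps
        result.append(abs(cost(bumped, goal) - base) / eps)
    return result


def select_active_dofs(state, goal, threshold):
    """Keep only DoFs whose sensitivity exceeds the (assumed) threshold."""
    scores = sensitivities(state, goal)
    return [i for i, score in enumerate(scores) if score > threshold]


def reduced_gradient_step(state, goal, active, lr=0.25):
    """Gradient step on the toy cost, applied to the active DoFs only."""
    new_state = list(state)
    for i in active:
        new_state[i] -= lr * 2.0 * (state[i] - goal[i])
    return new_state


state = [1.0, 0.0, 0.5, 0.001]
goal = [0.0, 0.0, 0.0, 0.0]
active = select_active_dofs(state, goal, threshold=0.1)
state = reduced_gradient_step(state, goal, active)
```

In an MPC loop the selection would be recomputed each time the problem is re-solved, so the active set can change as the cost landscape changes.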

Real-Time Physics-Based Object Pose Tracking during Non-Prehensile Manipulation

Nov 24, 2022

Abstract: We propose a method to track the 6D pose of an object over time, while the object is under non-prehensile manipulation by a robot. At any given time during the manipulation of the object, we assume access to the robot joint controls and an image from a camera looking at the scene. We use the robot joint controls to perform a physics-based prediction of how the object might be moving. We then combine this prediction with the observation coming from the camera, to estimate the object pose as accurately as possible. We use a particle filtering approach to combine the control information with the visual information. We compare the proposed method with two baselines: (i) using only an image-based pose estimation system at each time-step, and (ii) a particle filter which does not perform the computationally expensive physics predictions, but assumes the object moves with constant velocity. Our results show that making physics-based predictions is worth the computational cost, resulting in more accurate tracking, and estimating object pose even when the object is not clearly visible to the camera.
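The predict-then-correct structure of a particle filter can be sketched in a few lines. The example below is a deliberately simplified stand-in: it tracks a 1D position rather than a 6D pose, replaces the physics engine with a toy motion model (`position + control + noise`), and treats a missing camera observation as `None`. All names and noise values are assumptions for illustration and are not taken from the paper.

```python
import math
import random


def predict(particles, control, noise_std, rng):
    """Propagate each particle through a toy motion model (stand-in for physics)."""
    return [p + control + rng.gauss(0.0, noise_std) for p in particles]


def update(particles, observation, obs_std):
    """Weight particles by Gaussian observation likelihood.

    If the object is not visible (observation is None), all particles keep
    equal weight, so the estimate relies on the prediction alone.
    """
    n = len(particles)
    if observation is None:
        return [1.0 / n] * n
    weights = [math.exp(-0.5 * ((observation - p) / obs_std) ** 2) for p in particles]
    total = sum(weights)
    if total == 0.0:
        return [1.0 / n] * n
    return [w / total for w in weights]


def resample(particles, weights, rng):
    """Draw a new particle set in proportion to the weights."""
    return rng.choices(particles, weights=weights, k=len(particles))


def track(controls, observations, n_particles=500, seed=0):
    """Run the filter and return the mean position estimate at every step."""
    rng = random.Random(seed)
    particles = [rng.gauss(0.0, 0.1) for _ in range(n_particles)]
    estimates = []
    for control, observation in zip(controls, observations):
        particles = predict(particles, control, 0.02, rng)
        weights = update(particles, observation, 0.05)
        particles = resample(particles, weights, rng)
        estimates.append(sum(particles) / len(particles))
    return estimates


# The object is pushed 0.1 units per step; the camera loses it on steps 4 to 6.
controls = [0.1] * 10
observations = [0.1, 0.2, 0.3, 0.4, None, None, None, 0.8, 0.9, 1.0]
estimates = track(controls, observations)
```

During the occluded steps the estimate is carried forward by the motion model alone, which is the situation where a control-driven prediction is most useful.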

Human-Guided Planner for Non-Prehensile Manipulation

Apr 04, 2020

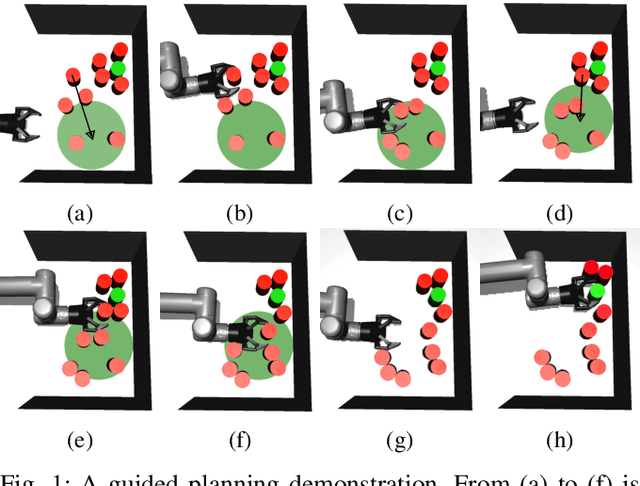

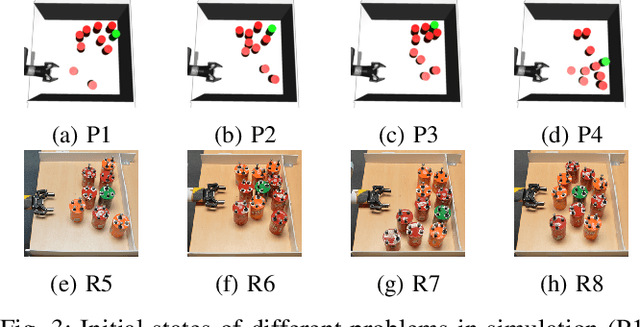

Abstract: We present a human-guided planner for non-prehensile manipulation in clutter. Most recent approaches to manipulation in clutter employ randomized planning; however, the problem remains challenging: planning times are still on the order of tens of seconds or minutes, and success rates are low for difficult instances of the problem. We build on these control-based randomized planning approaches, but we investigate using them in conjunction with human-operator input. We show that with a minimal amount of human input, the low-level planner can solve the problem faster and with higher success rates.

Non-Prehensile Manipulation in Clutter with Human-In-The-Loop

Apr 07, 2019

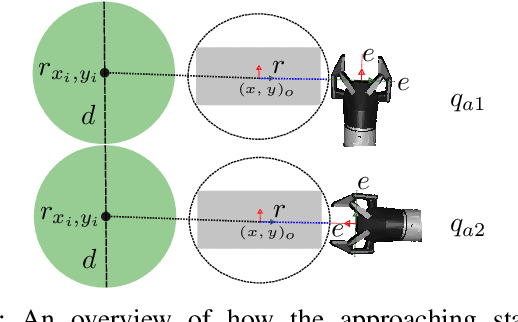

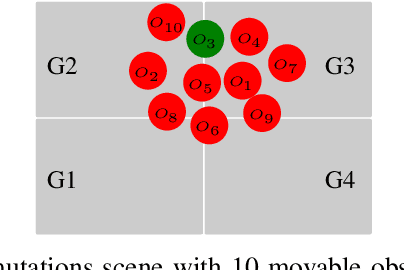

Abstract: We propose a human-operator guided planning approach to pushing-based robotic manipulation in clutter. Most recent approaches to this problem employ randomized planning (e.g. control-sampling based Kinodynamic RRT) to produce a fast working solution. We build on these control-based randomized planning approaches, but we investigate using them in conjunction with human-operator input. In our framework, the human operator supplies a high-level plan, in the form of an ordered sequence of objects and their approximate goal positions. We present experiments in simulation and on a real robotic setup, where we compare the success rate and planning times of our human-in-the-loop approach with fully autonomous sampling-based planners. We show that the human-operator provided guidance makes the low-level kinodynamic planner solve the planning problem faster and with higher success rates.

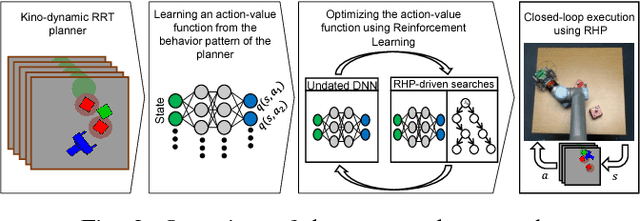

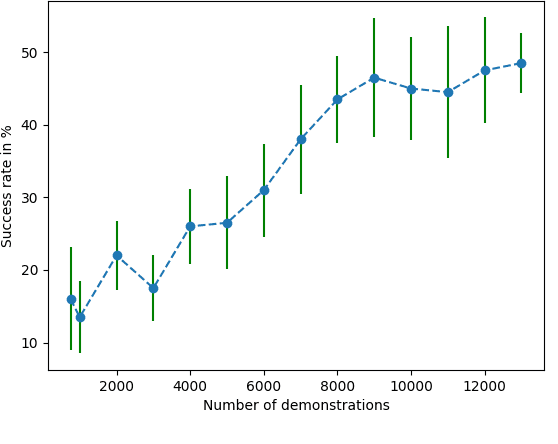

Planning with a Receding Horizon for Manipulation in Clutter using a Learned Value Function

Jul 27, 2018

Abstract: Manipulation in clutter requires solving complex sequential decision-making problems in an environment rich with physical interactions. The transfer of motion planning solutions from simulation to the real world, in open-loop, suffers from the inherent uncertainty in modelling real-world physics. We propose interleaving planning and execution in real-time, in a closed-loop setting, using a Receding Horizon Planner (RHP) for pushing manipulation in clutter. In this context, we address the problem of finding a suitable value-function-based heuristic for efficient planning, and for estimating the cost-to-go from the horizon to the goal. We first estimate such a value function using plans generated by an existing sampling-based planner. Then, we further optimize the value function through reinforcement learning. We evaluate our approach and compare it to state-of-the-art planning techniques for manipulation in clutter. We conduct experiments in simulation with artificially injected uncertainty on the physics parameters, as well as in real-world tasks of manipulation in clutter. We show that this approach enables the robot to react to the uncertain dynamics of the real world effectively.
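The general shape of receding-horizon planning with a value function at the horizon can be shown on a toy problem. The sketch below moves a point along a 1D integer line: it enumerates short action sequences, scores each by its accumulated step cost plus a cost-to-go estimate at the final state, executes only the first action, and replans. The dynamics, costs, and the hand-written `value` function (which stands in for a learned one) are all assumptions for illustration and do not reproduce the paper's planner.

```python
from itertools import product

ACTIONS = (-1, 0, 1)


def step(state, action):
    """Toy deterministic dynamics on an integer line."""
    return state + action


def value(state, goal):
    """Hypothetical cost-to-go estimate; hand-written here instead of learned."""
    return abs(goal - state)


def plan(state, goal, horizon):
    """Return the first action of the cheapest action sequence over the horizon."""
    best_cost = float("inf")
    best_first = 0
    for sequence in product(ACTIONS, repeat=horizon):
        s = state
        total = 0
        for action in sequence:
            s = step(s, action)
            total += abs(goal - s)  # per-step cost: distance to the goal
        total += value(s, goal)  # cost-to-go from the horizon to the goal
        if total < best_cost:
            best_cost = total
            best_first = sequence[0]
    return best_first


def run(start, goal, horizon=3, max_steps=50):
    """Interleave planning and execution: replan after every executed action."""
    state = start
    trajectory = [state]
    for _ in range(max_steps):
        if state == goal:
            break
        state = step(state, plan(state, goal, horizon))
        trajectory.append(state)
    return trajectory


trajectory = run(start=0, goal=5)
```

Because only the first action of each plan is executed, the loop can absorb a mismatch between predicted and actual outcomes at the next replanning step, which is the motivation for closing the loop.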
