Ricardo Luna Gutierrez

Fast 3D Surrogate Modeling for Data Center Thermal Management

Nov 13, 2025

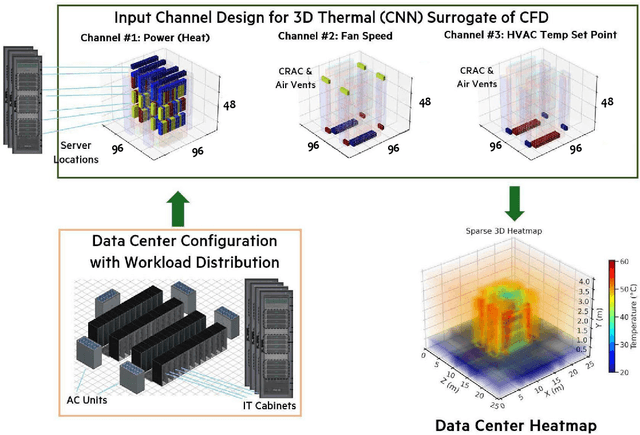

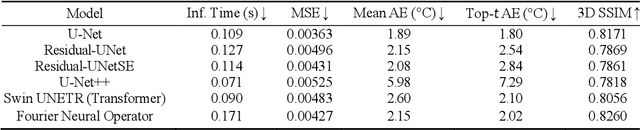

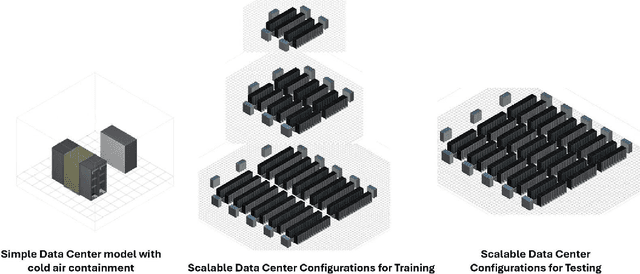

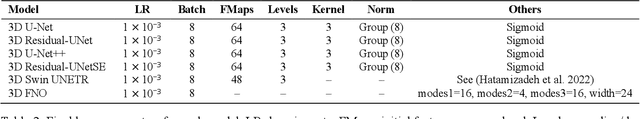

Abstract: Reducing energy consumption and carbon emissions in data centers by enabling real-time temperature prediction is critical for sustainability and operational efficiency. Achieving this requires accurate modeling of the 3D temperature field to capture airflow dynamics and thermal interactions under varying operating conditions. Traditional thermal CFD solvers, while accurate, are computationally expensive and require expert-crafted meshes and boundary conditions, making them impractical for real-time use. To address these limitations, we develop a vision-based surrogate modeling framework that operates directly on a 3D voxelized representation of the data center, incorporating server workloads, fan speeds, and HVAC temperature set points. We evaluate multiple architectures, including 3D CNN U-Net variants, a 3D Fourier Neural Operator, and 3D vision transformers, to map these thermal inputs to high-fidelity heat maps. Our results show that the surrogate models generalize across data center configurations and achieve up to 20,000x speedup (hundreds of milliseconds vs. hours). This fast and accurate estimation of hot spots and temperature distribution enables real-time cooling control and workload redistribution, leading to substantial energy savings (7%) and reduced carbon footprint.
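
The voxelized input described above can be pictured as a multi-channel 3D array. The following is a minimal sketch only: the channel layout, the `build_voxel_input` helper, the grid size, and the example server values are all assumptions made for illustration and are not taken from the paper.

```python
import numpy as np

def build_voxel_input(grid_shape, servers, supply_temp_c):
    """Pack operating conditions into a (channels, X, Y, Z) array.

    Assumed channel layout (hypothetical, not the paper's):
    0 = server occupancy, 1 = workload in [0, 1],
    2 = fan speed in [0, 1], 3 = HVAC set point in deg C.
    """
    x = np.zeros((4, *grid_shape), dtype=np.float32)
    x[3] = supply_temp_c  # set point broadcast over the whole room
    for s in servers:
        i, j, k = s["voxel"]
        x[0, i, j, k] = 1.0
        x[1, i, j, k] = s["workload"]
        x[2, i, j, k] = s["fan_speed"]
    return x

# Two made-up servers in an 8 x 8 x 4 voxel room.
servers = [
    {"voxel": (1, 2, 0), "workload": 0.8, "fan_speed": 0.6},
    {"voxel": (3, 2, 1), "workload": 0.3, "fan_speed": 0.4},
]
voxels = build_voxel_input((8, 8, 4), servers, supply_temp_c=18.0)
print(voxels.shape)
```

A surrogate such as a 3D U-Net would then take an array like `voxels` as input and return a temperature value per voxel.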

Robustness Evaluation for Video Models with Reinforcement Learning

Jun 05, 2025

Abstract: Evaluating the robustness of video classification models is challenging, especially compared to image-based models. The added temporal dimension significantly increases complexity and computational cost. One of the key challenges is to keep perturbations to a minimum while still inducing misclassification. In this work, we propose a multi-agent reinforcement learning approach, with spatial and temporal agents that cooperatively learn to identify a given video's sensitive spatial and temporal regions. The agents consider temporal coherence when generating fine perturbations, leading to a more effective and visually imperceptible attack. Our method outperforms state-of-the-art solutions on the Lp metric and on the average number of queries. It also supports custom distortion types, making the robustness evaluation more relevant to the use case. We extensively evaluate four popular models for video action recognition on two popular datasets, HMDB-51 and UCF-101.

Coordinated Robustness Evaluation Framework for Vision-Language Models

Jun 05, 2025

Abstract: Vision-language models, which integrate computer vision and natural language processing capabilities, have demonstrated significant advancements in tasks such as image captioning and visual question answering. However, like traditional models, they are susceptible to small perturbations, which poses a challenge to their robustness, particularly in deployment scenarios. Evaluating the robustness of these models requires perturbations in both the vision and language modalities to learn their inter-modal dependencies. In this work, we train a generic surrogate model that takes both image and text as input and generates a joint representation, which is then used to generate adversarial perturbations for both the text and image modalities. This coordinated attack strategy is evaluated on visual question answering and visual reasoning datasets using various state-of-the-art vision-language models. Our results indicate that the proposed strategy outperforms other multi-modal attacks and single-modality attacks from the recent literature, and that it is effective in compromising the robustness of several state-of-the-art pre-trained multi-modal models such as InstructBLIP, ViLT, and others.

Hierarchical Multi-Agent Framework for Carbon-Efficient Liquid-Cooled Data Center Clusters

Feb 12, 2025

Abstract: Reducing the environmental impact of cloud computing requires efficient workload distribution across geographically dispersed Data Center Clusters (DCCs) while simultaneously optimizing liquid and air (HVAC) cooling and time-shifting workloads within individual data centers (DCs). This paper introduces Green-DCC, a Reinforcement Learning (RL) based hierarchical controller that dynamically optimizes both workload and liquid cooling in a DCC. By incorporating factors such as weather, carbon intensity, and resource availability, Green-DCC addresses realistic constraints and interdependencies. We demonstrate how the system optimizes multiple data centers synchronously, enabling the scope of digital twins, and compare the performance of various RL approaches based on carbon emissions and sustainability metrics, while also offering a framework and benchmark simulation for broader ML research in sustainability.

Reinforcement Learning Platform for Adversarial Black-box Attacks with Custom Distortion Filters

Jan 23, 2025

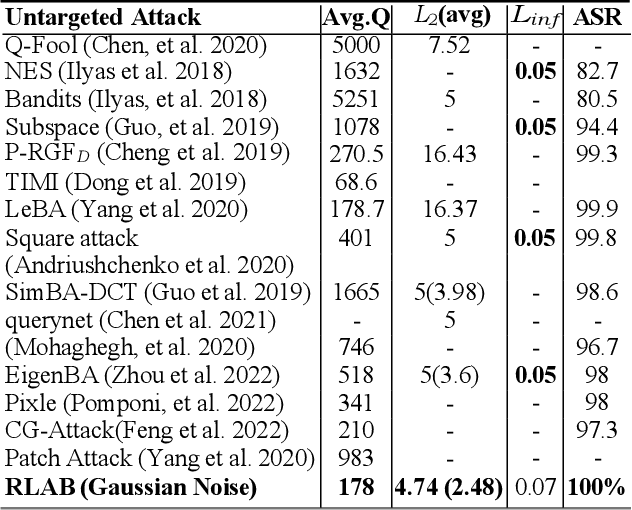

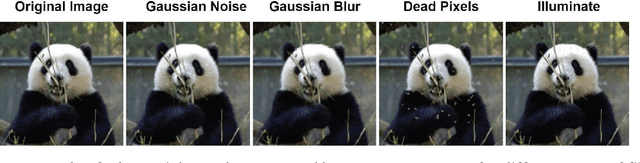

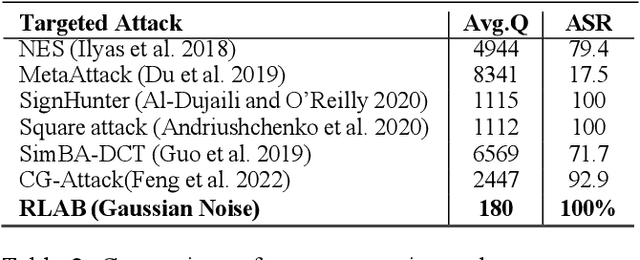

Abstract: We present RLAB, a Reinforcement Learning Platform for Adversarial Black-box untargeted and targeted attacks that allows users to select from various distortion filters to create adversarial examples. The platform uses a Reinforcement Learning agent to add minimum distortion to input images while still causing misclassification by the target model. At each step, the agent uses a novel dual-action method to explore the input image, identifying sensitive regions in which to add distortion while removing noise that has less impact on the target model. This dual action leads to faster and more efficient convergence of the attack. The platform can also be used to measure the robustness of image classification models against specific distortion types. In addition, retraining the model with adversarial samples significantly improved robustness when evaluated on benchmark datasets. The proposed platform outperforms state-of-the-art methods in terms of the average number of queries required to cause misclassification. This advances trustworthiness with a positive social impact.

A Configurable Pythonic Data Center Model for Sustainable Cooling and ML Integration

Apr 18, 2024

Abstract: There have been growing discussions on estimating and subsequently reducing the operational carbon footprint of enterprise data centers. The design and intelligent control of data centers have an important impact on their carbon footprint. In this paper, we showcase PyDCM, a Python library that enables extremely fast prototyping of data center designs and applies reinforcement learning-enabled control in order to evaluate key sustainability metrics, including carbon footprint, energy consumption, and temperature hotspots. We demonstrate these capabilities of PyDCM and compare them to existing works in EnergyPlus for modeling data centers. PyDCM can also be used as a standalone Gymnasium environment for demonstrating sustainability-focused data center control.

RTDK-BO: High Dimensional Bayesian Optimization with Reinforced Transformer Deep kernels

Oct 09, 2023

Abstract: Bayesian Optimization (BO), guided by Gaussian process (GP) surrogates, has proven to be an invaluable technique for efficient, high-dimensional, black-box optimization, a critical problem inherent to many applications such as industrial design and scientific computing. Recent contributions have introduced reinforcement learning (RL) to improve the optimization performance on both single-function optimization and few-shot multi-objective optimization. However, even few-shot techniques fail to exploit similarities shared between closely related objectives. In this paper, we combine recent developments in Deep Kernel Learning (DKL) and attention-based Transformer models to improve the modeling power of GP surrogates with meta-learning. We propose a novel method for improving meta-learning BO surrogates by incorporating attention mechanisms into DKL, empowering the surrogates to adapt to contextual information gathered during the BO process. We combine this Transformer Deep Kernel with a learned acquisition function trained with continuous Soft Actor-Critic Reinforcement Learning to aid in exploration. This Reinforced Transformer Deep Kernel (RTDK-BO) approach yields state-of-the-art results in continuous high-dimensional optimization problems.
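
As a rough illustration of the deep kernel idea mentioned above, a base kernel can be applied to learned features instead of raw inputs, that is, k(x, x') = k_base(g(x), g(x')). The sketch below substitutes a tiny random two-layer network for the Transformer feature extractor and uses an RBF base kernel; the names `feature_map`, `rbf`, and `deep_kernel`, the layer sizes, and the random weights are all assumptions for illustration, not the RTDK-BO implementation.

```python
import numpy as np

def feature_map(x, w1, w2):
    # Stand-in for the learned feature extractor g(x); the paper uses
    # an attention-based Transformer here, this is just a tiny MLP.
    return np.tanh(x @ w1) @ w2

def rbf(a, b, lengthscale=1.0):
    # Squared-exponential base kernel between rows of a and rows of b.
    sq_dist = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-0.5 * sq_dist / lengthscale**2)

def deep_kernel(x1, x2, w1, w2):
    # Deep kernel: base kernel evaluated on learned features.
    return rbf(feature_map(x1, w1, w2), feature_map(x2, w1, w2))

rng = np.random.default_rng(0)
w1 = rng.normal(size=(20, 16))   # 20-dimensional inputs -> 16 hidden units
w2 = rng.normal(size=(16, 4))    # 16 hidden units -> 4 features
X = rng.normal(size=(5, 20))     # five made-up query points
K = deep_kernel(X, X, w1, w2)    # 5 x 5 Gram matrix for a GP surrogate
print(K.shape)
```

In a real deep-kernel GP the feature weights would be trained jointly with the kernel hyperparameters rather than drawn at random.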

Meta-Reinforcement Learning for Heuristic Planning

Jul 06, 2021

Information-theoretic Task Selection for Meta-Reinforcement Learning

Nov 02, 2020

Abstract: In Meta-Reinforcement Learning (meta-RL), an agent is trained on a set of tasks to prepare for and learn faster in new, unseen, but related tasks. The training tasks are usually hand-crafted to be representative of the expected distribution of test tasks, and hence all of them are used in training. We show that, given a set of training tasks, learning can be both faster and more effective (leading to better performance in the test tasks) if the training tasks are appropriately selected. We propose a task selection algorithm, Information-Theoretic Task Selection (ITTS), based on information theory, which optimizes the set of tasks used for training in meta-RL, irrespective of how they are generated. The algorithm establishes which training tasks are both sufficiently relevant for the test tasks and different enough from one another. We reproduce different meta-RL experiments from the literature and show that ITTS improves the final performance in all of them.
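
The two selection criteria named above, relevance to the test tasks and difference from already selected tasks, can be sketched as a simple greedy filter. This is not the ITTS algorithm itself: the abstract does not say how relevance and difference are measured, so the sketch takes both as precomputed numbers, and the function name, thresholds, and scores are hypothetical.

```python
def select_tasks(candidates, relevance, difference,
                 min_relevance, min_difference):
    """Greedily keep tasks that are relevant and not near-duplicates.

    relevance:  task -> score (higher means more useful for test tasks)
    difference: frozenset({task_a, task_b}) -> pairwise difference score
    Both score tables are assumed inputs, not computed here.
    """
    selected = []
    for task in candidates:
        if relevance[task] < min_relevance:
            continue  # not relevant enough for the test tasks
        if all(difference[frozenset((task, kept))] >= min_difference
               for kept in selected):
            selected.append(task)  # different enough from every kept task
    return selected

# Made-up scores for four candidate training tasks.
relevance = {"A": 0.9, "B": 0.8, "C": 0.1, "D": 0.7}
difference = {
    frozenset(("A", "B")): 0.05,  # A and B are near-duplicates
    frozenset(("A", "C")): 0.70,
    frozenset(("A", "D")): 0.60,
    frozenset(("B", "C")): 0.65,
    frozenset(("B", "D")): 0.50,
    frozenset(("C", "D")): 0.40,
}
chosen = select_tasks(["A", "B", "C", "D"], relevance, difference,
                      min_relevance=0.5, min_difference=0.2)
print(chosen)  # ['A', 'D']
```

Here B is dropped because it is too similar to A, and C is dropped because it is not relevant enough.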

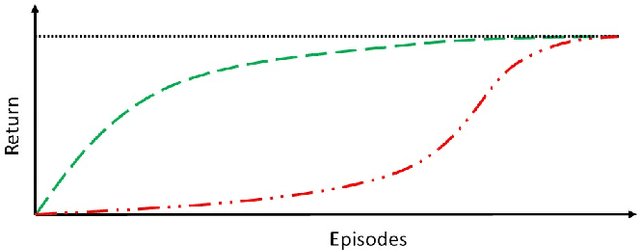

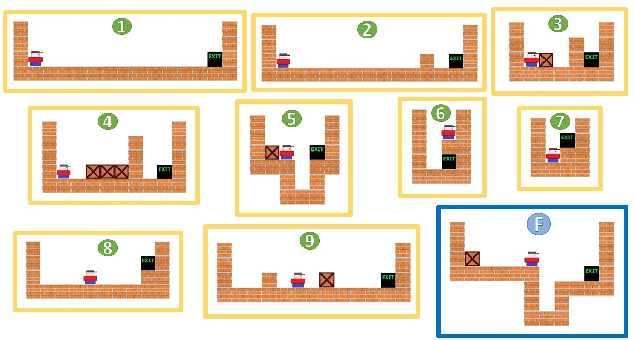

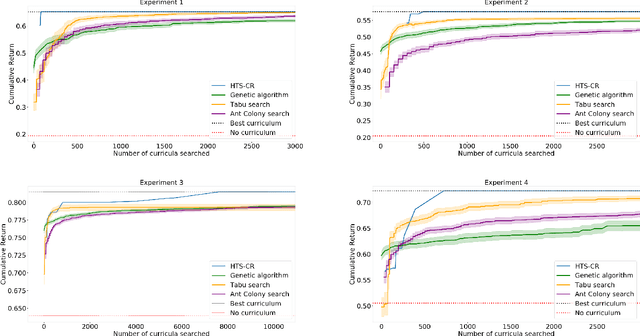

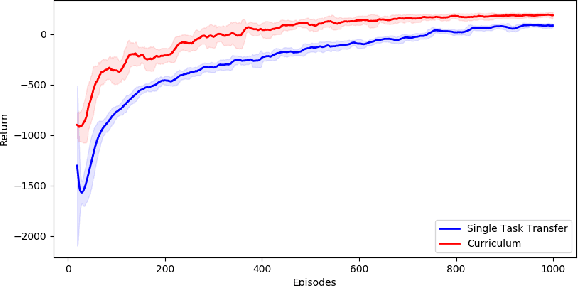

Curriculum Learning for Cumulative Return Maximization

Jun 13, 2019

Abstract: Curriculum learning has been successfully used in reinforcement learning to accelerate the learning process through knowledge transfer between tasks of increasing complexity. Critical tasks, in which suboptimal exploratory actions must be minimized, can benefit from curriculum learning and its ability to shape exploration through transfer. We propose a task sequencing algorithm that maximizes the cumulative return, that is, the return obtained by the agent across all the learning episodes. By maximizing the cumulative return, the agent not only aims at achieving high rewards as fast as possible, but also at doing so while limiting suboptimal actions. We experimentally compare our task sequencing algorithm to several popular metaheuristic algorithms for combinatorial optimization, and show that it achieves significantly better performance on the problem of cumulative return maximization. Furthermore, we validate our algorithm on a critical task: optimizing a home controller for a micro energy grid.
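
To make the cumulative return objective concrete: it sums the return of every learning episode, so two agents that end at the same final performance can still differ widely. The per-episode numbers below are invented purely for illustration.

```python
def cumulative_return(episode_returns):
    """Total return collected over all learning episodes."""
    return sum(episode_returns)

# Two hypothetical learning curves that reach the same final return (10).
slow_learner = [0, 0, 2, 6, 10, 10]
fast_learner = [0, 4, 8, 10, 10, 10]

print(cumulative_return(slow_learner))  # 28
print(cumulative_return(fast_learner))  # 42
```

The second curve scores higher because it spends fewer episodes on low-return exploratory behavior, which is the property a curriculum is meant to encourage in critical tasks.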
