Francesco Foglino

Curriculum Learning with a Progression Function

Aug 02, 2020

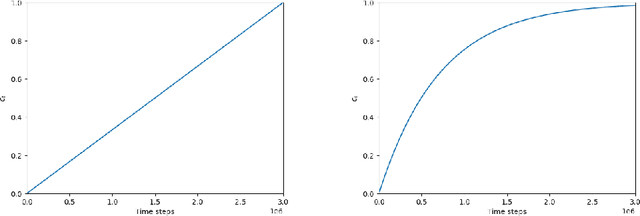

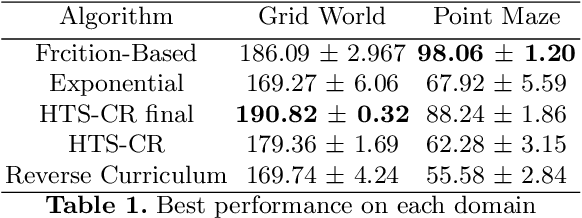

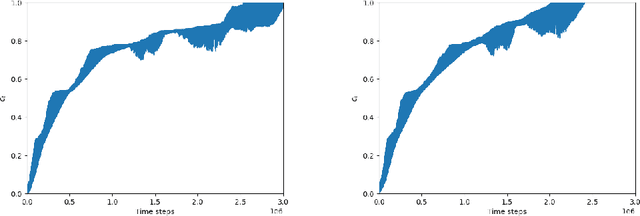

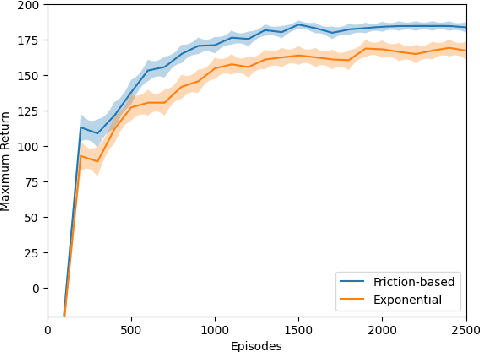

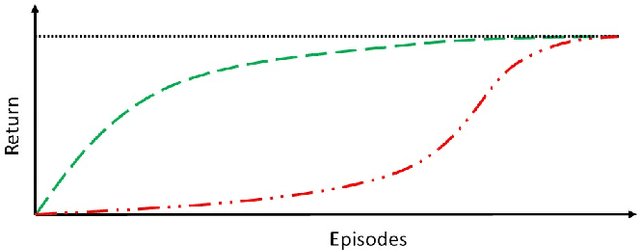

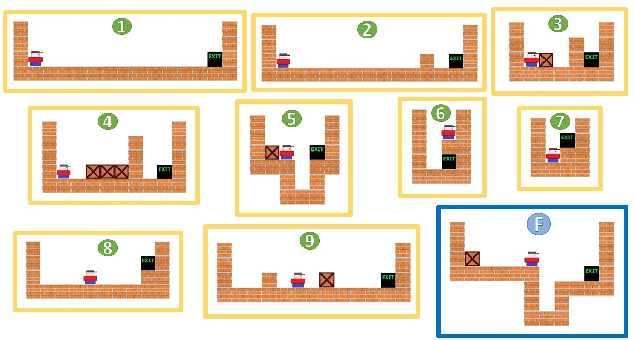

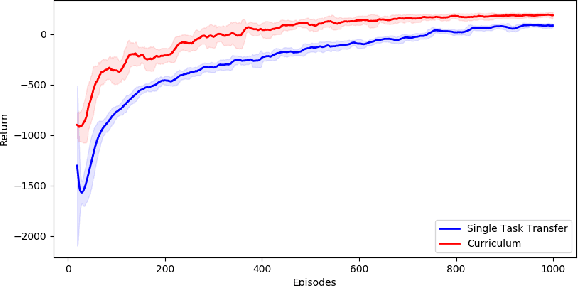

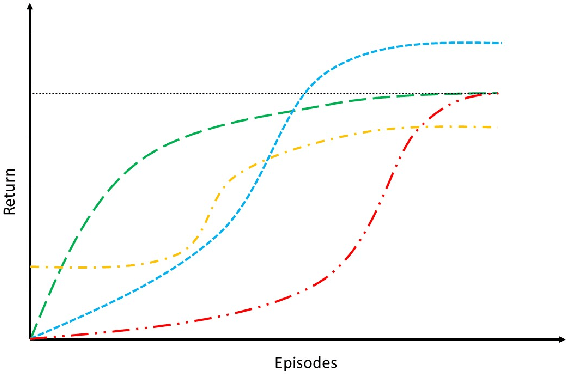

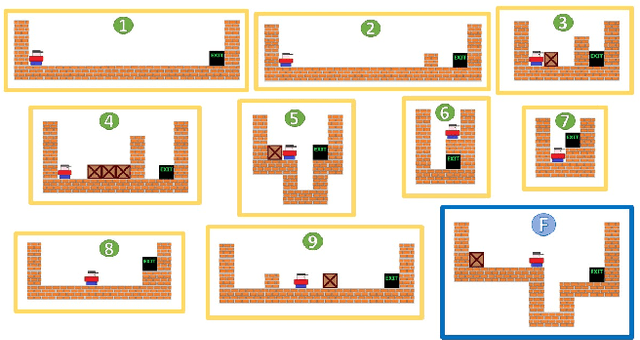

Abstract: Curriculum Learning for Reinforcement Learning is an increasingly popular technique that involves training an agent on a defined sequence of intermediate tasks, called a Curriculum, to increase the agent's performance and learning speed. This paper introduces a novel paradigm for automatic curriculum generation based on a progression of task complexity. Different progression functions are introduced, including an autonomous online task progression based on the performance of the agent. The progression function also determines how long the agent should train on each intermediate task, which is an open problem in other task-based curriculum approaches. The benefits and wide applicability of our approach are shown by empirically comparing its performance to two state-of-the-art Curriculum Learning algorithms on a grid world and on a complex simulated navigation domain.
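To make the idea of a progression function concrete, here is a minimal sketch of two variants: one driven by training time and one driven by the agent's performance. It is an illustration only and is not taken from the paper; the function names, the normalisation of complexity to the range [0, 1], and the `threshold` and `increment` parameters are all assumptions.

```python
def linear_progression(step: int, total_steps: int) -> float:
    """Hypothetical time-based progression: complexity grows linearly
    from 0 to 1 over `total_steps` training steps, then stays at 1."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    return min(1.0, max(0.0, step / total_steps))


def performance_progression(
    complexity: float,
    recent_return: float,
    threshold: float,
    increment: float = 0.1,
) -> float:
    """Hypothetical performance-based progression: raise the complexity
    by `increment` only when the agent's recent return reaches
    `threshold`; otherwise keep training at the current complexity."""
    if recent_return >= threshold:
        return min(1.0, complexity + increment)
    return complexity
```

In the performance-based variant, the number of steps spent at each complexity level is not fixed in advance; it depends on how quickly the agent reaches the threshold, which is one way a progression function can also decide how long to train on each intermediate task.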

A gray-box approach for curriculum learning

Jun 17, 2019

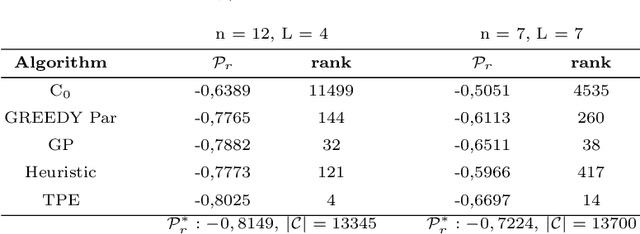

Abstract: Curriculum learning is often employed in deep reinforcement learning to let the agent progress more quickly towards better behaviors. Numerical methods for curriculum learning in the literature provide only initial heuristic solutions, with little to no guarantee on their quality. We define a new gray-box function that, by including a suitable scheduling problem, can be effectively used to reformulate the curriculum learning problem. We propose different efficient numerical methods to address this gray-box reformulation. Preliminary numerical results on a benchmark task in the curriculum learning literature show the viability of the proposed approach.

* 10 pages, 1 figure

Curriculum Learning for Cumulative Return Maximization

Jun 13, 2019

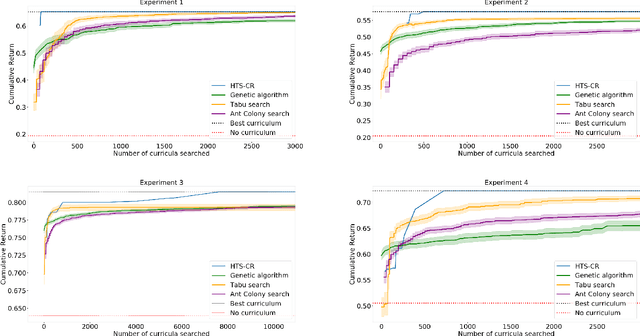

Abstract: Curriculum learning has been successfully used in reinforcement learning to accelerate the learning process, through knowledge transfer between tasks of increasing complexity. Critical tasks, in which suboptimal exploratory actions must be minimized, can benefit from curriculum learning, and its ability to shape exploration through transfer. We propose a task sequencing algorithm maximizing the cumulative return, that is, the return obtained by the agent across all the learning episodes. By maximizing the cumulative return, the agent not only aims at achieving high rewards as fast as possible, but also at doing so while limiting suboptimal actions. We experimentally compare our task sequencing algorithm to several popular metaheuristic algorithms for combinatorial optimization, and show that it achieves significantly better performance on the problem of cumulative return maximization. Furthermore, we validate our algorithm on a critical task, optimizing a home controller for a micro energy grid.
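The cumulative return described above can be sketched as the sum of per-episode returns over the whole learning history. This is a minimal illustration, not the paper's implementation; the function names, the optional discount factor `gamma`, and the representation of a learning history as a list of per-episode reward lists are assumptions.

```python
from typing import Sequence


def episode_return(rewards: Sequence[float], gamma: float = 1.0) -> float:
    """Discounted sum of the rewards collected in a single episode."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def cumulative_return(
    episodes: Sequence[Sequence[float]], gamma: float = 1.0
) -> float:
    """Sum of the episode returns across all learning episodes."""
    return sum(episode_return(rewards, gamma) for rewards in episodes)


# Two hypothetical learning histories that end at the same final return.
# The second one takes costly exploratory actions early on.
careful = [[0.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
reckless = [[-5.0, 0.0], [-2.0, 1.0], [1.0, 1.0]]
```

Both histories reach a final episode return of 2, but `cumulative_return(careful)` is higher than `cumulative_return(reckless)`, which is why this objective penalises suboptimal actions during learning and not only the time needed to reach a good policy.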

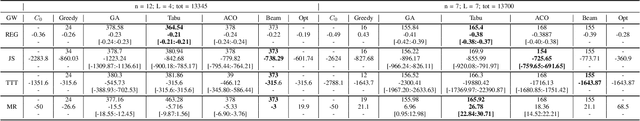

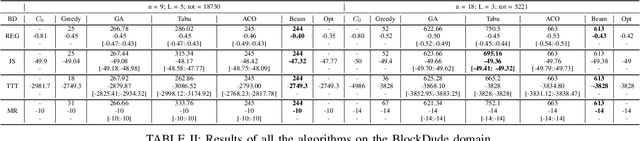

An Optimization Framework for Task Sequencing in Curriculum Learning

Mar 11, 2019

Abstract: Curriculum learning in reinforcement learning is used to shape exploration by presenting the agent with increasingly complex tasks. The idea of curriculum learning has been largely applied in both animal training and pedagogy. In reinforcement learning, all previous task sequencing methods have shaped exploration with the objective of reducing the time to reach a given performance level. We propose novel uses of curriculum learning, which arise from choosing different objective functions. Furthermore, we define a general optimization framework for task sequencing and evaluate the performance of popular metaheuristic search methods on several tasks. We show that curriculum learning can be successfully used to: improve the initial performance, take fewer suboptimal actions during exploration, and discover better policies.
