Friederike Eyssel

Measuring Transparency in Intelligent Robots

Aug 29, 2024

Abstract: As robots become increasingly integrated into our daily lives, the need to make them transparent has never been more critical. Yet, despite its importance in human-robot interaction, a standardized measure of robot transparency has been missing until now. This paper addresses this gap by presenting the first comprehensive scale to measure perceived transparency in robotic systems, available in English, German, and Italian. Our approach conceptualizes transparency as a multidimensional construct encompassing explainability, legibility, predictability, and meta-understanding. The proposed scale is the product of a rigorous three-stage process involving 1,223 participants: first, we generated the scale items; second, we conducted an exploratory factor analysis; and third, a confirmatory factor analysis served to validate the factor structure of the newly developed TOROS scale. The final scale comprises 26 items across three factors: Illegibility, Explainability, and Predictability. TOROS demonstrates high cross-linguistic reliability, inter-factor correlation, model fit, internal consistency, and convergent validity across the three cross-national samples. This empirically validated tool enables the assessment of robot transparency and contributes to the theoretical understanding of this complex construct. By offering a standardized measure, we facilitate consistent and comparable research in human-robot interaction, in which TOROS can serve as a benchmark.

Trust and Cognitive Load During Human-Robot Interaction

Sep 11, 2019

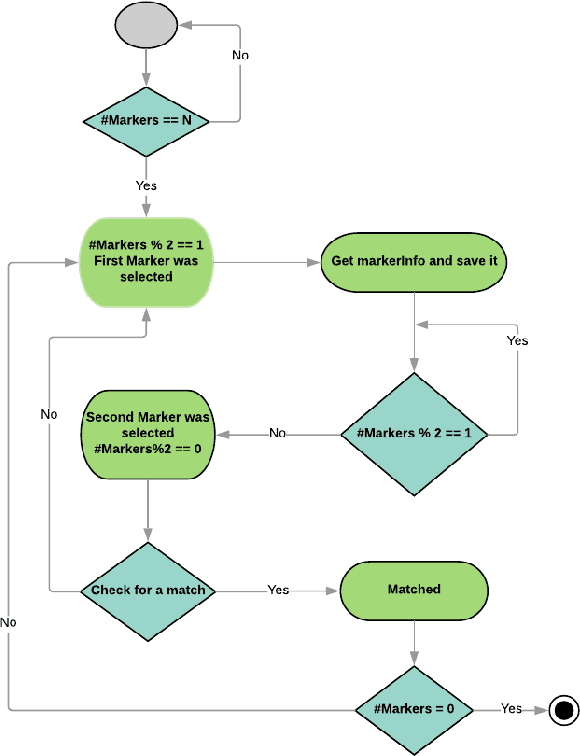

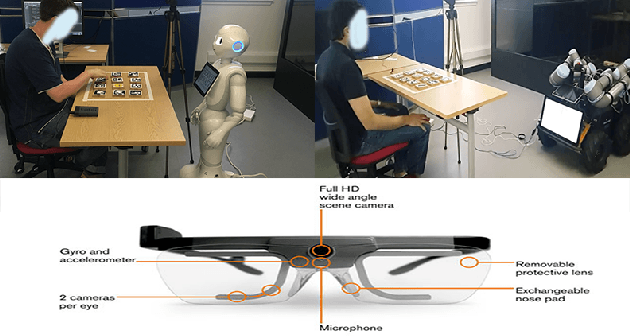

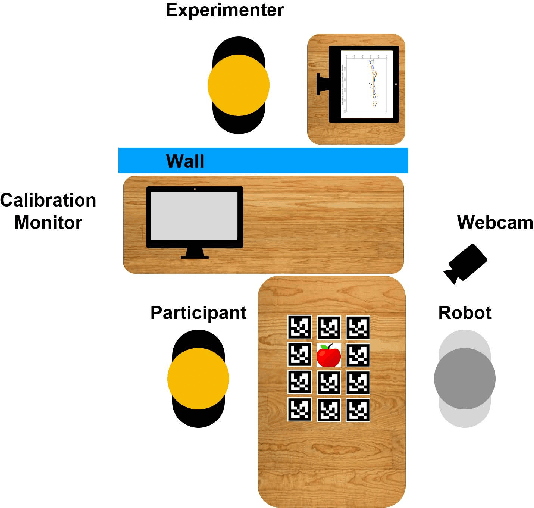

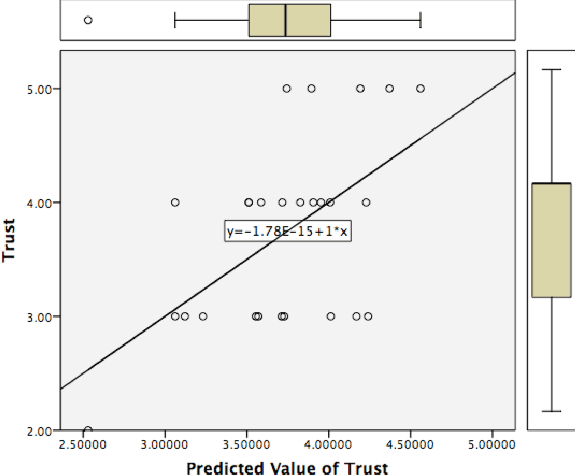

Abstract: This paper presents an exploratory study of the relationship between a human's cognitive load, trust, and anthropomorphism during human-robot interaction. To examine this relationship, we created a "Matching the Pair" game that participants could play collaboratively with one of two robot types, Husky or Pepper. The goal was to understand whether humans would trust the robot as a teammate in a game-playing situation that demanded a high level of cognitive load. Using a humanoid vs. a technical robot, we also investigated the impact of physical anthropomorphism, and we furthermore tested the impact of robot error rate on subsequent judgments and behavior. Our results showed an inversely proportional relationship between trust and cognitive load, suggesting that as participants' cognitive load increased, their ratings of trust decreased. We also found a three-way interaction between robot type, error rate, and participants' ratings of trust. Participants perceived Pepper to be more trustworthy than the Husky robot after playing the game with both robots under the high error-rate condition. In contrast, Husky was perceived as more trustworthy than Pepper when it was depicted as featuring a low error rate. These results call for further investigation of the impact of physical anthropomorphism in combination with variable robot error rates.