Alessandra Rossi

Measuring Transparency in Intelligent Robots

Aug 29, 2024

Abstract: As robots become increasingly integrated into our daily lives, the need to make them transparent has never been more critical. Yet, despite its importance in human-robot interaction, a standardized measure of robot transparency has been missing until now. This paper addresses this gap by presenting the first comprehensive scale to measure perceived transparency in robotic systems, available in English, German, and Italian. Our approach conceptualizes transparency as a multidimensional construct, encompassing explainability, legibility, predictability, and meta-understanding. The proposed scale is the product of a rigorous three-stage process involving 1,223 participants. First, we generated the items of our scale; second, we conducted an exploratory factor analysis; and third, we ran a confirmatory factor analysis to validate the factor structure of the newly developed TOROS scale. The final scale encompasses 26 items and comprises three factors: Illegibility, Explainability, and Predictability. TOROS demonstrates high cross-linguistic reliability, inter-factor correlation, model fit, internal consistency, and convergent validity across the three cross-national samples. This empirically validated tool enables the assessment of robot transparency and contributes to the theoretical understanding of this complex construct. By offering a standardized measure, we facilitate consistent and comparable research in human-robot interaction, in which TOROS can serve as a benchmark.

Increasing Transparency of Reinforcement Learning using Shielding for Human Preferences and Explanations

Nov 28, 2023

Abstract: The adoption of Reinforcement Learning (RL) in several human-centred applications provides robots with autonomous decision-making capabilities and adaptability based on observations of the operating environment. In such scenarios, however, the learning process can make robots' behaviours unclear and unpredictable to humans, thus preventing a smooth and effective Human-Robot Interaction (HRI). As a consequence, it becomes crucial to prevent robots from performing actions that are unclear to the user. In this work, we investigate whether including human preferences in RL (concerning the actions the robot performs during learning) improves the transparency of a robot's behaviours. For this purpose, a shielding mechanism is incorporated into the RL algorithm to account for human preferences and to monitor the learning agent's decisions. We carried out a within-subjects study involving 26 participants to evaluate the robot's transparency in terms of Legibility, Predictability, and Expectability in different settings. Results indicate that considering human preferences during learning improves Legibility compared with providing only Explanations, and that combining human preferences with explanations elucidating the rationale behind the robot's decisions further amplifies transparency. Results also confirm that an increase in transparency leads to an increase in the safety, comfort, and reliability of the robot. These findings show the importance of transparency during learning and suggest a paradigm for robotic applications with a human in the loop.

Common (good) practices measuring trust in HRI

Nov 20, 2023

Abstract: Trust in robots is widely believed to be imperative for the adoption of robots into people's daily lives. It is, therefore, understandable that the literature of the last few decades focuses on measuring how much people trust robots -- and more generally, any agent -- to foster such trust in these technologies. Researchers have been exploring how people trust robots in different ways, such as measuring trust in human-robot interactions (HRI) based on textual descriptions or images without any physical contact, and during and after interacting with the technology. Nevertheless, trust is a complex behaviour, and it is affected by and depends on several factors, including those related to the interacting agents (e.g. humans, robots, pets), the robot itself (e.g. capabilities, reliability), the context (e.g. task), and the environment (e.g. public spaces vs private spaces vs working spaces). In general, most roboticists agree that insufficient levels of trust lead to a risk of disengagement, while over-trust in technology can cause over-reliance and inherent dangers, for example, in emergency situations. It is, therefore, very important that the research community has access to reliable methods to measure people's trust in robots and technology. In this position paper, we outline current methods and their strengths, identify (some) weakly covered aspects, and discuss the potential for covering a more comprehensive set of factors influencing trust in HRI.

SCRITA 2023: Trust, Acceptance and Social Cues in Human-Robot Interaction

Nov 09, 2023

Abstract: This workshop focused on identifying the challenges and dynamics between people and robots to foster short interactions and long-lasting relationships in different fields, from educational, service, collaborative, and companion robotics to care-home and medical robotics. To this end, the workshop facilitated a discussion about people's trust towards robots "in the field", inviting workshop participants to contribute their past experiences and lessons learnt.

Current and Future Challenges in Humanoid Robotics -- An Empirical Investigation

Oct 14, 2023

Abstract: The goal of RoboCup is to make research in the area of robotics measurable over time, and to grow a community that works together to solve increasingly difficult challenges over the years. The most ambitious of these challenges is to be able to play against the human world champions in soccer in 2050. To better understand what members of the RoboCup community believe to be the state of the art and the main challenges in the next decade and towards the 2050 game, we developed a survey and distributed it to members of different experience levels and backgrounds within the community. We present data from 39 responses. Results highlighted that locomotion, awareness and decision-making, and robustness of robots are among those considered of high importance for the community, while human-robot interaction and natural language processing and generation are rated low in importance and difficulty.

IEEE Trust, Acceptance and Social Cues in Human-Robot Interaction -- SCRITA 2022 Workshop

Aug 28, 2022

Abstract: The Trust, Acceptance and Social Cues in Human-Robot Interaction (SCRITA) workshop is the 5th edition of a series of workshops held in conjunction with the IEEE RO-MAN conference. This workshop focuses on addressing the challenges and development of the dynamics between people and robots in order to foster short interactions and long-lasting relationships in different fields, from educational, service, collaborative, and companion robotics to care-home and medical robotics. In particular, we aim to investigate how robots can manipulate (i.e. create, improve, and recover) people's ability to accept and trust them for a fruitful and successful coexistence between humans and robots. While considerable progress has been made in studying and evaluating the factors affecting people's acceptance of and trust in robots in controlled or short-term (repeated interactions) settings, developing service and personal robots that are accepted and trusted by people where the supervision of operators is not possible still presents an open challenge for scientists in the robotics, AI and HRI fields. In such unstructured, static and dynamic human-centred environments, robots should be able to learn and adapt their behaviours to the situational context, but also to people's prior experiences and learned associations, their expectations, and their and the robot's ability to predict and understand each other's behaviours. Although the previous editions benefited from the participation of leading researchers in the field and several exceptional invited speakers who tackled some fundamental points in this research domain, we wish to further explore the role of trust in robotics and to present groundbreaking research on effectively designing and developing socially acceptable and trustable robots to be deployed "in the wild". Website: https://scrita.herts.ac.uk

* SCRITA 2022 workshop proceedings including 8 articles

The Road to a Successful HRI: AI, Trust and ethicS-TRAITS

Jun 07, 2022

Abstract: The aim of this workshop is to foster the exchange of insights on past and ongoing research towards effective and long-lasting collaborations between humans and robots. This workshop will provide a forum for representatives from academia and industry communities to analyse the different aspects of HRI that impact on its success. We particularly focus on AI techniques required to implement autonomous and proactive interactions, on the factors that enhance, undermine, or recover humans' acceptance and trust in robots, and on the potential ethical and legal concerns related to the deployment of such robots in human-centred environments. Website: https://sites.google.com/view/traits-hri-2022

* TRAITS Workshop Proceedings including 7 articles

A ROS Architecture for Personalised HRI with a Bartender Social Robot

Mar 15, 2022

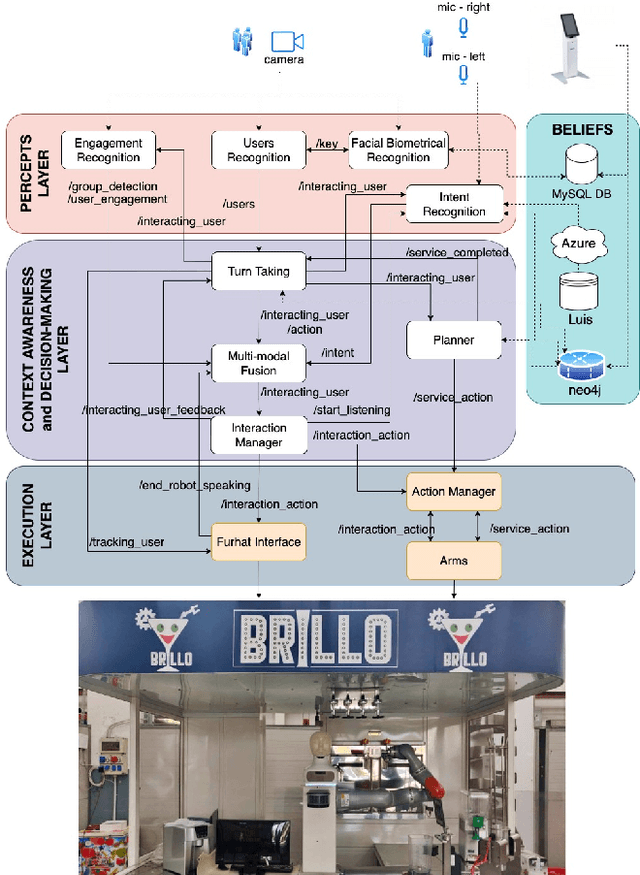

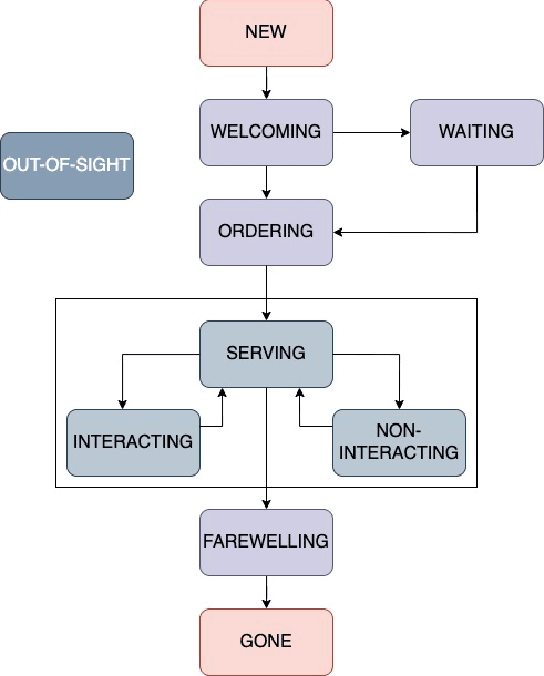

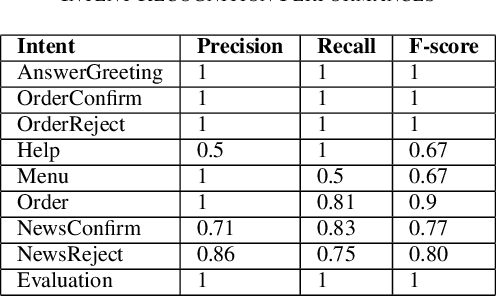

Abstract: The BRILLO (Bartending Robot for Interactive Long-Lasting Operations) project has the overall goal of creating an autonomous robotic bartender that can interact with customers while accomplishing its bartending tasks. In such a scenario, the novelty effect that people experience when using an attractive technology is destined to wear off, which negatively affects the success of the service robotics application. For this reason, providing personalised natural interaction while users access the robot's services is of paramount importance for increasing users' engagement and, consequently, their loyalty. In this paper, we present the developed three-layer ROS architecture integrating a perception layer managing the processing of different social signals, a decision-making layer for handling multi-party interactions, and an execution layer controlling the behaviour of a complex robot composed of arms and a face. Finally, user modelling through a beliefs layer allows for personalised interaction.

Trust, Acceptance and Social Cues in Human-Robot Interaction -- SCRITA 2021

Aug 19, 2021

Abstract: This workshop aimed for a deeper exploration of trust and acceptance in human-robot interaction (HRI) from a multidisciplinary perspective including robots' capabilities of sensing and perceiving other agents, the environment, and human-robot dynamics. The workshop was held online in conjunction with IEEE RO-MAN 2021 (see https://ro-man2021.org/). Three invited speakers and six position papers analysed/discussed different aspects of human-robot interaction that can affect, enhance, undermine, or recover humans' trust in robots, such as the use of social cues or behaviour transparency. The attendees of different backgrounds engaged in a dynamic conversation about the relevant challenges of effectively supporting the design and development of socially acceptable and trustable robots. Website: https://scrita.herts.ac.uk/2021/

I Know What You Would Like to Drink: Benefits and Detriments of Sharing Personal Info with a Bartender Robot

Apr 02, 2021

Abstract: This paper introduces the benefits and detriments of a robot bartender that is capable of adapting the interaction with human users according to their preferences in drinks, music, and hobbies. We believe that a personalised experience during a human-robot interaction increases the human user's engagement with the robot and that such information will be used by the robot during the interaction. However, this implies that the users need to share several pieces of personal information with the robot. In this paper, we introduce the research topic and our approach to evaluating people's perceptions and consideration of their privacy with a robot. We present a within-subject study in which participants interacted twice with a robot that first had no prior information about the users and then had knowledge of their preferences. We observed that less than 60% of the participants were not concerned about sharing personal information with the robot.
