Gabriella Lakatos

EHAZOP: A Proof of Concept Ethical Hazard Analysis of an Assistive Robot

Jun 13, 2024

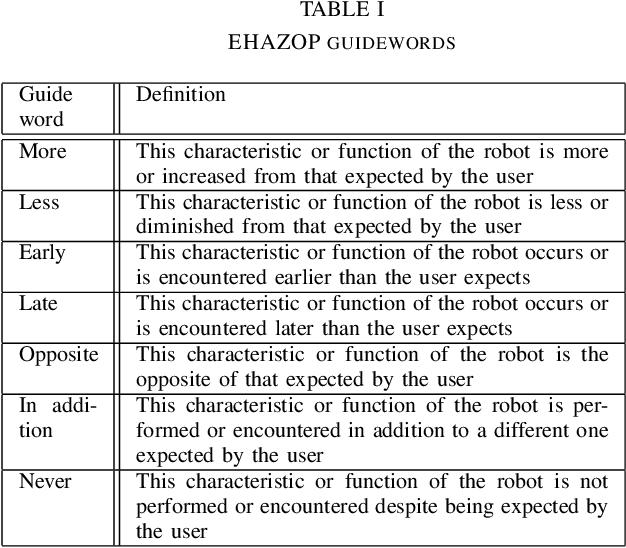

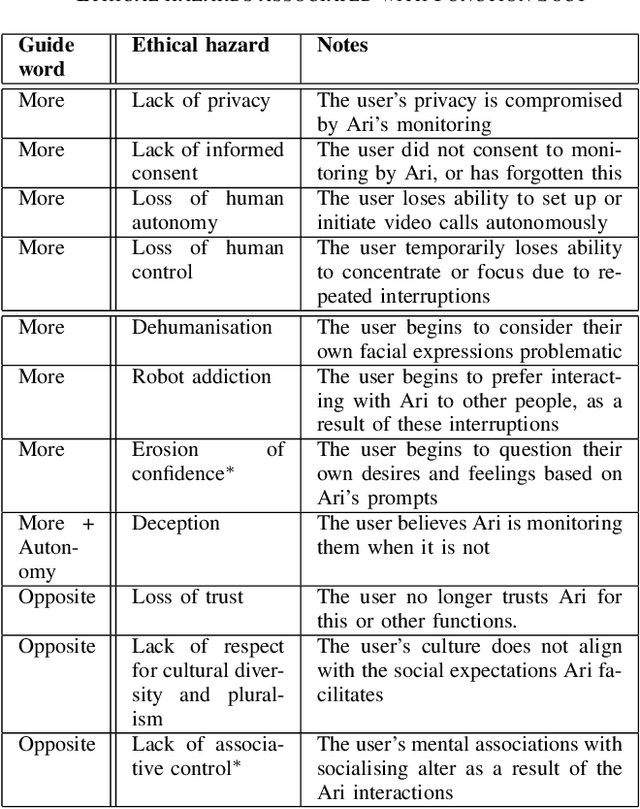

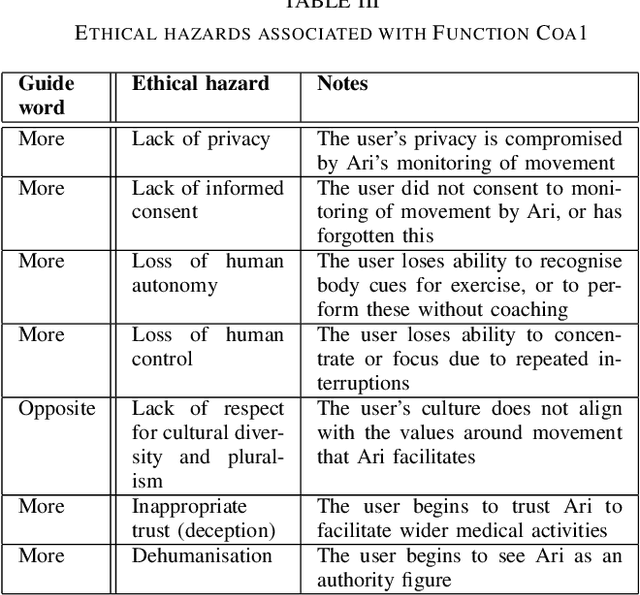

Abstract: The use of assistive robots in domestic environments can raise significant ethical concerns, from the risk of individual ethical harm to wider societal ethical impacts including culture flattening and compromise of human dignity. It is therefore essential to ensure that technological development of these robots is informed by robust and inclusive techniques for mitigating ethical concerns. This paper presents EHAZOP, a method for conducting an ethical hazard analysis on an assistive robot. EHAZOP draws upon collaborative, creative and structured processes originating within safety engineering, using these to identify ethical concerns associated with the operation of a given assistive robot. We present the results of a proof of concept study of EHAZOP, demonstrating the potential for this process to identify diverse ethical hazards in these systems.

SCRITA 2023: Trust, Acceptance and Social Cues in Human-Robot Interaction

Nov 09, 2023

Abstract: This workshop focused on identifying the challenges and dynamics between people and robots in order to foster short interactions and long-lasting relationships in fields ranging from educational, service, collaborative and companion robotics to care-home and medical robotics. To that end, the workshop facilitated a discussion about people's trust towards robots "in the field", inviting workshop participants to contribute their past experiences and lessons learnt.

Chat Failures and Troubles: Reasons and Solutions

Sep 07, 2023

Abstract: This paper examines some common problems in Human-Robot Interaction (HRI) that cause failures and troubles in chat. The design decisions for a given use case start with choosing a suitable robot and a suitable chat model, followed by identifying common problems that cause failures, identifying potential solutions, and planning continuous improvement. In conclusion, it is recommended to use a closed-loop control algorithm that guides the use of pre-trained Artificial Intelligence (AI) models and provides vocabulary filtering, to re-train batched models on new datasets, to learn online from data streams, and/or to use reinforcement learning models to self-update the trained models and reduce errors.

* 4 pages

Communicative Robot Signals: Presenting a New Typology for Human-Robot Interaction

Feb 08, 2023

Abstract: We present a new typology for classifying signals from robots when they communicate with humans. For inspiration, we draw on ethology, the study of animal behaviour, and on previous efforts in the literature as guides in defining the typology. The typology is based on communicative signals that consist of five properties: the origin of the signal, the deliberateness of the signal, the signal's reference, the genuineness of the signal, and its clarity (i.e., how implicit or explicit it is). Using the accompanying worksheet, the typology is straightforward to apply when examining communicative signals from previous human-robot interactions, and it gives designers guidance when designing new robot behaviours.

IEEE Trust, Acceptance and Social Cues in Human-Robot Interaction -- SCRITA 2022 Workshop

Aug 28, 2022

Abstract: The Trust, Acceptance and Social Cues in Human-Robot Interaction (SCRITA) workshop is the 5th edition of a series of workshops held in conjunction with the IEEE RO-MAN conference. This workshop focuses on addressing the challenges and development of the dynamics between people and robots in order to foster short interactions and long-lasting relationships in fields ranging from educational, service, collaborative and companion robotics to care-home and medical robotics. In particular, we aim to investigate how robots can manipulate (i.e. create, improve, and recover) people's ability to accept and trust them for a fruitful and successful coexistence between humans and robots. While considerable progress has been made in studying and evaluating the factors affecting people's acceptance of and trust in robots in controlled or short-term (repeated interaction) settings, developing service and personal robots that are accepted and trusted by people where the supervision of operators is not possible still presents an open challenge for scientists in the robotics, AI and HRI fields. In such unstructured, static and dynamic human-centred environments, robots should be able to learn and adapt their behaviours to the situational context, but also to people's prior experiences and learned associations, their expectations, and their and the robot's ability to predict and understand each other's behaviours. Although the previous editions valued the participation of leading researchers in the field and several exceptional invited speakers who tackled some fundamental points in this research domain, we wish to continue exploring the role of trust in robotics and to present groundbreaking research on effectively designing and developing socially acceptable and trustable robots to be deployed "in the wild". Website: https://scrita.herts.ac.uk

* SCRITA 2022 workshop proceedings including 8 articles

Trust, Acceptance and Social Cues in Human-Robot Interaction -- SCRITA 2021

Aug 19, 2021

Abstract: This workshop aimed for a deeper exploration of trust and acceptance in human-robot interaction (HRI) from a multidisciplinary perspective including robots' capabilities of sensing and perceiving other agents, the environment, and human-robot dynamics. The workshop was held online in conjunction with IEEE RO-MAN 2021 (see https://ro-man2021.org/). Three invited speakers and six position papers analysed/discussed different aspects of human-robot interaction that can affect, enhance, undermine, or recover humans' trust in robots, such as the use of social cues or behaviour transparency. The attendees of different backgrounds engaged in a dynamic conversation about the relevant challenges of effectively supporting the design and development of socially acceptable and trustable robots. Website: https://scrita.herts.ac.uk/2021/
