Patrick Holthaus

EHAZOP: A Proof of Concept Ethical Hazard Analysis of an Assistive Robot

Jun 13, 2024

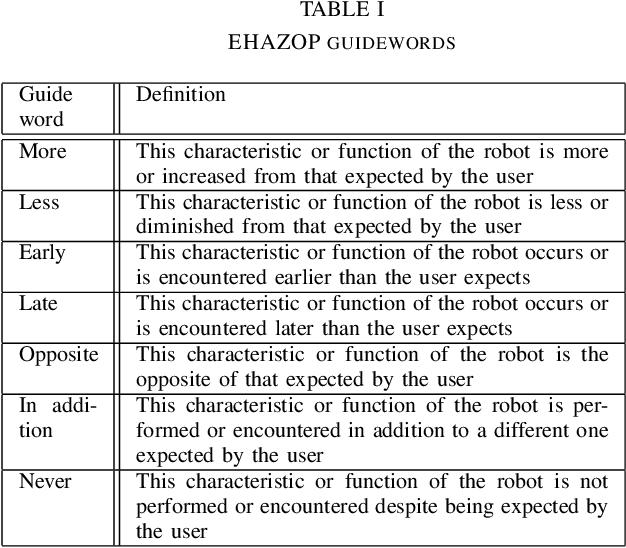

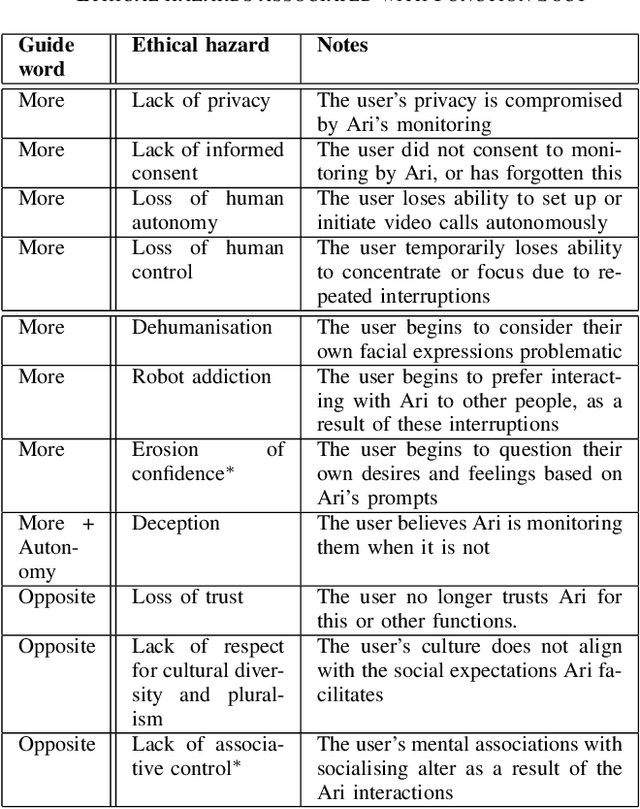

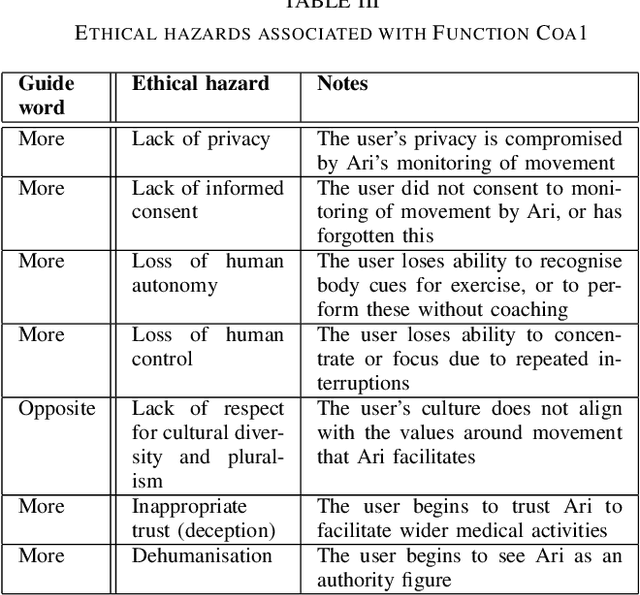

Abstract: The use of assistive robots in domestic environments can raise significant ethical concerns, from the risk of individual ethical harm to wider societal ethical impacts including culture flattening and compromise of human dignity. It is therefore essential to ensure that technological development of these robots is informed by robust and inclusive techniques for mitigating ethical concerns. This paper presents EHAZOP, a method for conducting an ethical hazard analysis on an assistive robot. EHAZOP draws upon collaborative, creative and structured processes originating within safety engineering, using these to identify ethical concerns associated with the operation of a given assistive robot. We present the results of a proof of concept study of EHAZOP, demonstrating the potential for this process to identify diverse ethical hazards in these systems.

Common (good) practices measuring trust in HRI

Nov 20, 2023

Abstract: Trust in robots is widely believed to be imperative for the adoption of robots into people's daily lives. It is, therefore, understandable that the literature of the last few decades focuses on measuring how much people trust robots (and, more generally, any agent) to foster such trust in these technologies. Researchers have been exploring how people trust robots in different ways, such as measuring trust in human-robot interactions (HRI) based on textual descriptions or images without any physical contact, and during and after interacting with the technology. Nevertheless, trust is a complex behaviour that depends on several factors, including those related to the interacting agents (e.g. humans, robots, pets), the robot itself (e.g. capabilities, reliability), the context (e.g. task), and the environment (e.g. public spaces vs private spaces vs working spaces). In general, most roboticists agree that insufficient levels of trust lead to a risk of disengagement, while over-trust in technology can cause over-reliance and inherent dangers, for example, in emergency situations. It is, therefore, very important that the research community has access to reliable methods to measure people's trust in robots and technology. In this position paper, we outline current methods and their strengths, identify (some) weakly covered aspects and discuss the potential for covering a more comprehensive set of factors influencing trust in HRI.

SCRITA 2023: Trust, Acceptance and Social Cues in Human-Robot Interaction

Nov 09, 2023

Abstract: This workshop focused on identifying the challenges and dynamics between people and robots to foster short interactions and long-lasting relationships in different fields, including educational, service, collaborative, companion, care-home and medical robotics. To that end, this workshop facilitated a discussion about people's trust towards robots "in the field", inviting workshop participants to contribute their past experiences and lessons learnt.

Chat Failures and Troubles: Reasons and Solutions

Sep 07, 2023

Abstract: This paper examines some common problems in Human-Robot Interaction (HRI) causing failures and troubles in Chat. Design decisions for a given use case start with choosing a suitable robot and a suitable chatting model, followed by identifying common problems that cause failures, identifying potential solutions, and planning continuous improvement. In conclusion, it is recommended to use a closed-loop control algorithm that guides the use of pre-trained Artificial Intelligence (AI) models and provides vocabulary filtering, re-trains batched models on new datasets, learns online from data streams, and/or uses reinforcement learning models to self-update the trained models and reduce errors.

* 4 pages

How Do Human Users Teach a Continual Learning Robot in Repeated Interactions?

Jun 30, 2023

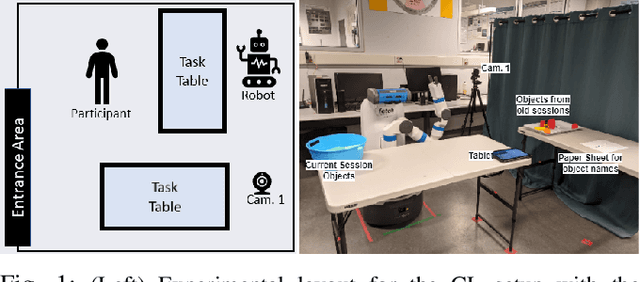

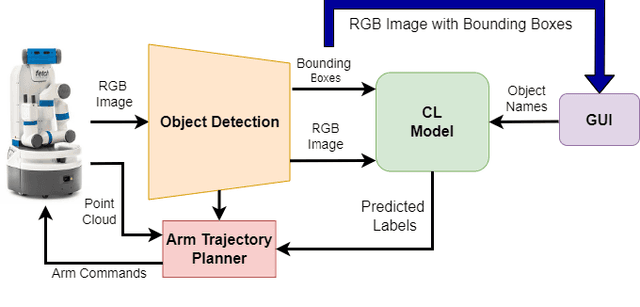

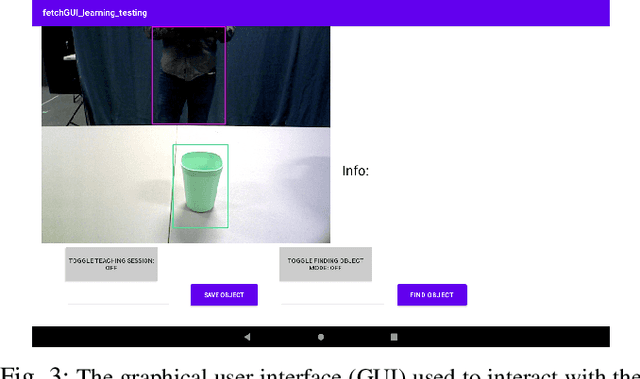

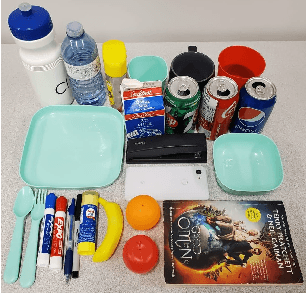

Abstract: Continual learning (CL) has emerged as an important avenue of research in recent years, at the intersection of Machine Learning (ML) and Human-Robot Interaction (HRI), to allow robots to continually learn in their environments over long-term interactions with humans. Most research in continual learning, however, has been robot-centered, aiming to develop continual learning algorithms that can quickly learn new information on static datasets. In this paper, we take a human-centered approach to continual learning, to understand how humans teach continual learning robots over the long term and whether there are variations in their teaching styles. We conducted an in-person study with 40 participants who interacted with a continual learning robot in 200 sessions. In this between-participant study, we used two different CL models deployed on a Fetch mobile manipulator robot. An extensive qualitative and quantitative analysis of the data collected in the study shows that there is significant variation among the teaching styles of individual users, indicating the need for personalized adaptation to their distinct teaching styles. The results also show that although there is a difference in the teaching styles between expert and non-expert users, the style does not have an effect on the performance of the continual learning robot. Finally, our analysis shows that the constrained experimental setups that have been widely used to test most continual learning techniques are not adequate, as real users interact with and teach continual learning robots in a variety of ways. Our code is available at https://github.com/aliayub7/cl_hri.

Continual Learning through Human-Robot Interaction -- Human Perceptions of a Continual Learning Robot in Repeated Interactions

May 22, 2023

Abstract: For long-term deployment in dynamic real-world environments, assistive robots must continue to learn and adapt to their environments. Researchers have developed various computational models for continual learning (CL) that can allow robots to continually learn from limited training data, and avoid forgetting previous knowledge. While these CL models can mitigate forgetting on static, systematically collected datasets, it is unclear how human users might perceive a robot that continually learns over multiple interactions with them. In this paper, we developed a system that integrates CL models for object recognition with a Fetch mobile manipulator robot and allows human participants to directly teach and test the robot over multiple sessions. We conducted an in-person study with 60 participants who interacted with our system in 300 sessions (5 sessions per participant). We conducted a between-participant study with three different CL models (3 experimental conditions) to understand human perceptions of continual learning robots over multiple sessions. Our results suggest that participants' perceptions of trust, competence, and usability of a continual learning robot significantly decrease over multiple sessions if the robot forgets previously learned objects. However, the perceived task load on participants for teaching and testing the robot remains the same over multiple sessions even if the robot forgets previously learned objects. Our results also indicate that state-of-the-art CL models might perform unreliably when applied to robots interacting with human participants. Further, continual learning robots are not perceived as very trustworthy or competent by human participants, regardless of the underlying continual learning model or the session number.

Interactive robots as inclusive tools to increase diversity in higher education

Mar 02, 2023

Abstract: There is a major lack of diversity in engineering, technology, and computing subjects in higher education. The resulting underrepresentation of some population groups contributes largely to gender and ethnicity pay gaps and social disadvantages. We aim to increase the diversity among students in such subjects by investigating the use of interactive robots as a tool that can get prospective students from different backgrounds interested in robotics as their field of study. For that, we will survey existing solutions that have proven to be successful in engaging underrepresented groups with technical subjects in educational settings. Moreover, we examine two recent outreach events at the University of Hertfordshire against inclusivity criteria. Based on that, we suggest specific activities for higher education institutions that follow an inclusive approach using interactive robots to attract prospective students at open days and other outreach events. Our suggestions provide tangible actions that can be easily implemented by higher education institutions to make technical subjects more appealing to everyone and thereby tackle inequalities in student uptake.

Communicative Robot Signals: Presenting a New Typology for Human-Robot Interaction

Feb 08, 2023

Abstract: We present a new typology for classifying signals from robots when they communicate with humans. For inspiration, we use ethology, the study of animal behaviour, and previous efforts from the literature as guides in defining the typology. The typology is based on communicative signals that consist of five properties: the origin of the signal, the deliberateness of the signal, the signal's reference, the genuineness of the signal, and its clarity (i.e., how implicit or explicit it is). Using the accompanying worksheet, the typology is straightforward to apply when examining communicative signals from previous human-robot interactions, and it provides guidance for designers creating new robot behaviours.

IEEE Trust, Acceptance and Social Cues in Human-Robot Interaction -- SCRITA 2022 Workshop

Aug 28, 2022

Abstract: The Trust, Acceptance and Social Cues in Human-Robot Interaction (SCRITA) workshop is the 5th edition of a series of workshops held in conjunction with the IEEE RO-MAN conference. This workshop focuses on addressing the challenges and development of the dynamics between people and robots in order to foster short interactions and long-lasting relationships in different fields, including educational, service, collaborative, companion, care-home and medical robotics. In particular, we aim to investigate how robots can manipulate (i.e. create, improve, and recover) people's ability to accept and trust them for a fruitful and successful coexistence between humans and robots. While considerable progress has been made in studying and evaluating the factors affecting people's acceptance of and trust in robots in controlled or short-term (repeated interactions) settings, developing service and personal robots that are accepted and trusted by people where the supervision of operators is not possible still presents an open challenge for scientists in the robotics, AI and HRI fields. In such unstructured static and dynamic human-centred environments, robots should be able to learn and adapt their behaviours to the situational context, but also to people's prior experiences and learned associations, their expectations, and their and the robot's ability to predict and understand each other's behaviours. Although the previous editions valued the participation of leading researchers in the field and several exceptional invited speakers who tackled some fundamental points in this research domain, we wish to continue to further explore the role of trust in robotics and to present groundbreaking research on effectively designing and developing socially acceptable and trustable robots to be deployed "in the wild". Website: https://scrita.herts.ac.uk

* SCRITA 2022 workshop proceedings including 8 articles

How does a robot's social credibility relate to its perceived trustworthiness?

Aug 21, 2021

Abstract: This position paper aims to highlight and discuss the role of a robot's social credibility in interaction with humans. In particular, I want to explore a potential relation between social credibility and a robot's acceptability and ultimately its trustworthiness. I thereby also review the notion of social credibility as a measure of how well the robot obeys social norms during interaction, and expand it with the concept of conscious acknowledgement.
