Chrystopher L. Nehaniv

Self-Reproduction and Evolution in Cellular Automata: 25 Years after Evoloops

Feb 06, 2024

Abstract: The year 2024 marks the 25th anniversary of the publication of evoloops, an evolutionary variant of Chris Langton's self-reproducing loops which proved that Darwinian evolution of self-reproducing organisms by variation and natural selection is possible within deterministic cellular automata. Over the last few decades, this line of Artificial Life research has undergone several important developments. Although it experienced a relative dormancy of activities for a while, the recent rise of interest in open-ended evolution and the success of continuous cellular automata models have brought researchers' attention back to how to make spatio-temporal patterns self-reproduce and evolve within spatially distributed computational media. This article provides a review of the relevant literature on this topic over the past 25 years and highlights the major accomplishments made so far, the challenges being faced, and promising future research directions.

A Personalized Household Assistive Robot that Learns and Creates New Breakfast Options through Human-Robot Interaction

Jun 30, 2023

Abstract: For robots to assist users with household tasks, they must first learn about the tasks from the users. Further, performing the same task every day, in the same way, can become boring for the robot's user(s); therefore, assistive robots must find creative ways to perform tasks in the household. In this paper, we present a cognitive architecture for a household assistive robot that can learn personalized breakfast options from its users and then use the learned knowledge to set up a table for breakfast. The architecture can also use the learned knowledge to create new breakfast options over a longer period of time. The proposed cognitive architecture combines state-of-the-art perceptual learning algorithms, computational implementation of cognitive models of memory encoding and learning, a task planner for picking and placing objects in the household, a graphical user interface (GUI) to interact with the user, and a novel approach for creating new breakfast options using the learned knowledge. The architecture is integrated with the Fetch mobile manipulator robot and validated, as a proof-of-concept system evaluation, in a large indoor environment with multiple kitchen objects. Experimental results demonstrate the effectiveness of our architecture to learn personalized breakfast options from the user and generate new breakfast options never learned by the robot.

How Do Human Users Teach a Continual Learning Robot in Repeated Interactions?

Jun 30, 2023

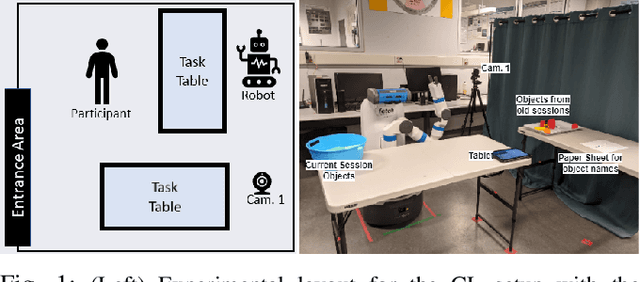

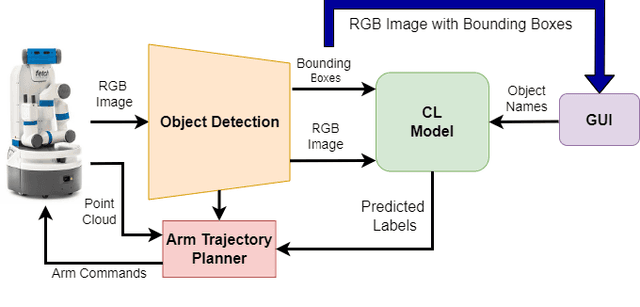

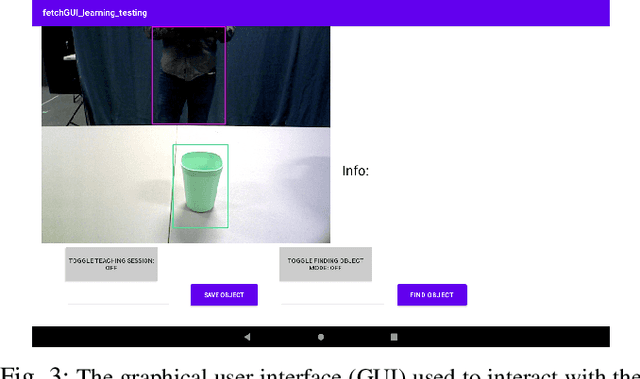

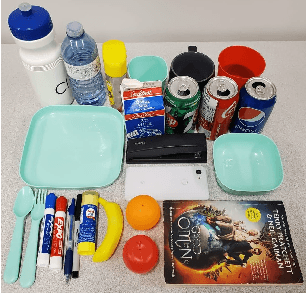

Abstract: Continual learning (CL) has emerged as an important avenue of research in recent years, at the intersection of Machine Learning (ML) and Human-Robot Interaction (HRI), to allow robots to continually learn in their environments over long-term interactions with humans. Most research in continual learning, however, has been robot-centered to develop continual learning algorithms that can quickly learn new information on static datasets. In this paper, we take a human-centered approach to continual learning, to understand how humans teach continual learning robots over the long term and whether there are variations in their teaching styles. We conducted an in-person study with 40 participants who interacted with a continual learning robot in 200 sessions. In this between-participant study, we used two different CL models deployed on a Fetch mobile manipulator robot. An extensive qualitative and quantitative analysis of the data collected in the study shows that there is significant variation among the teaching styles of individual users, indicating the need for personalized adaptation to their distinct teaching styles. The results also show that although there is a difference in the teaching styles between expert and non-expert users, the style does not have an effect on the performance of the continual learning robot. Finally, our analysis shows that the constrained experimental setups that have been widely used to test most continual learning techniques are not adequate, as real users interact with and teach continual learning robots in a variety of ways. Our code is available at https://github.com/aliayub7/cl_hri.

Continual Learning through Human-Robot Interaction -- Human Perceptions of a Continual Learning Robot in Repeated Interactions

May 22, 2023

Abstract: For long-term deployment in dynamic real-world environments, assistive robots must continue to learn and adapt to their environments. Researchers have developed various computational models for continual learning (CL) that can allow robots to continually learn from limited training data, and avoid forgetting previous knowledge. While these CL models can mitigate forgetting on static, systematically collected datasets, it is unclear how human users might perceive a robot that continually learns over multiple interactions with them. In this paper, we developed a system that integrates CL models for object recognition with a Fetch mobile manipulator robot and allows human participants to directly teach and test the robot over multiple sessions. We conducted an in-person study with 60 participants who interacted with our system in 300 sessions (5 sessions per participant). We conducted a between-participant study with three different CL models (3 experimental conditions) to understand human perceptions of continual learning robots over multiple sessions. Our results suggest that participants' perceptions of trust, competence, and usability of a continual learning robot significantly decrease over multiple sessions if the robot forgets previously learned objects. However, the perceived task load on participants for teaching and testing the robot remains the same over multiple sessions even if the robot forgets previously learned objects. Our results also indicate that state-of-the-art CL models might perform unreliably when applied to robots interacting with human participants. Further, continual learning robots are not perceived as very trustworthy or competent by human participants, regardless of the underlying continual learning model or the session number.

Don't Forget to Buy Milk: Contextually Aware Grocery Reminder Household Robot

Jul 20, 2022

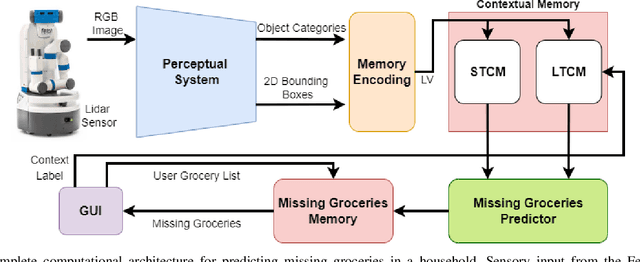

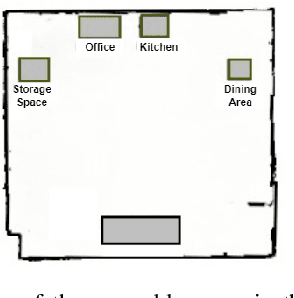

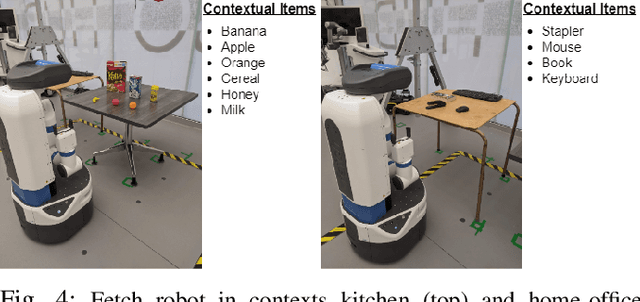

Abstract: Assistive robots operating in household environments require items to be available in the house to perform assistive tasks. When these items run out, the assistive robot must remind its user to buy the missing items. In this paper, we present a computational architecture that can allow a robot to learn personalized contextual knowledge of a household through interactions with its user. The architecture can then use the learned knowledge to make predictions about missing items from the household over a long period of time. The architecture integrates state-of-the-art perceptual learning algorithms, cognitive models of memory encoding and learning, a reasoning module for predicting missing items from the household, and a graphical user interface (GUI) to interact with the user. The architecture is integrated with the Fetch mobile manipulator robot and validated in a large indoor environment with multiple contexts and objects. Our experimental results show that the robot can adapt to an environment by learning contextual knowledge through interactions with its user. The robot can also use the learned knowledge to correctly predict missing items over multiple weeks, and it is robust against sensory and perceptual errors.

Robots Learning to Say 'No': Prohibition and Rejective Mechanisms in Acquisition of Linguistic Negation

Oct 28, 2018

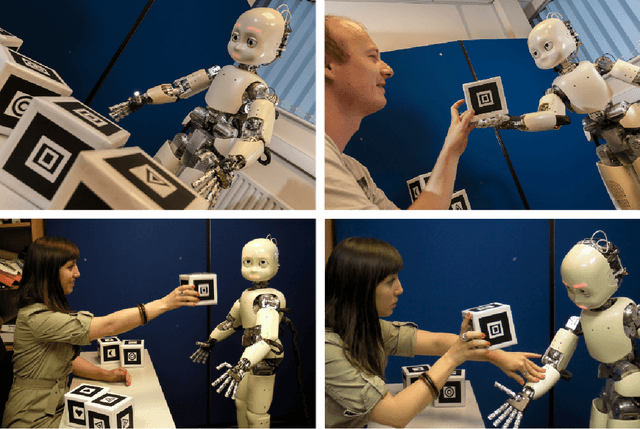

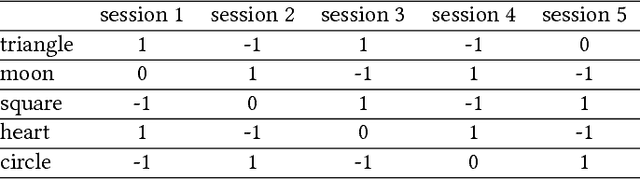

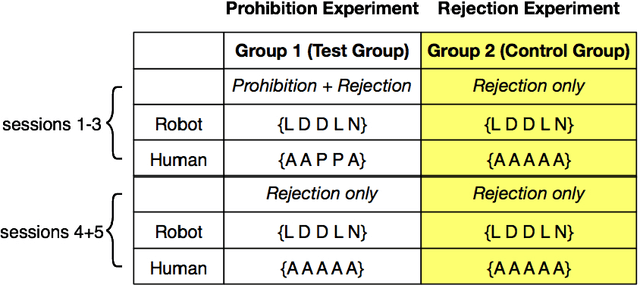

Abstract: 'No' belongs to the first ten words used by children and embodies the first active form of linguistic negation. Despite its early occurrence, the details of its acquisition process remain largely unknown. The circumstance that 'no' cannot be construed as a label for perceptible objects or events puts it outside of the scope of most modern accounts of language acquisition. Moreover, most symbol grounding architectures will struggle to ground the word due to its non-referential character. In an experimental study involving the child-like humanoid robot iCub that was designed to illuminate the acquisition process of negation words, the robot is deployed in several rounds of speech-wise unconstrained interaction with naïve participants acting as its language teachers. The results corroborate the hypothesis that affect or volition plays a pivotal role in the socially distributed acquisition process. Negation words are prosodically salient within prohibitive utterances and negative intent interpretations such that they can be easily isolated from the teacher's speech signal. These words subsequently may be grounded in negative affective states. However, observations of the nature of prohibitive acts and the temporal relationships between their linguistic and extra-linguistic components raise serious questions over the suitability of Hebbian-type algorithms for language grounding.

Computational Understanding and Manipulation of Symmetries

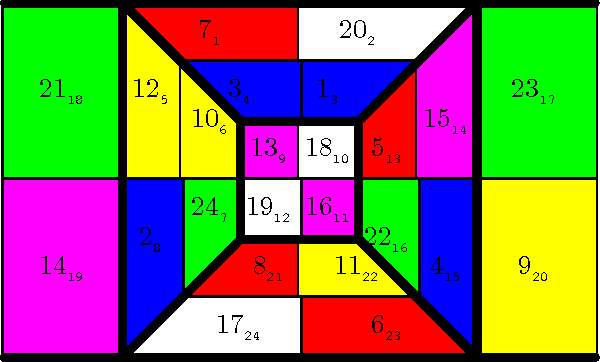

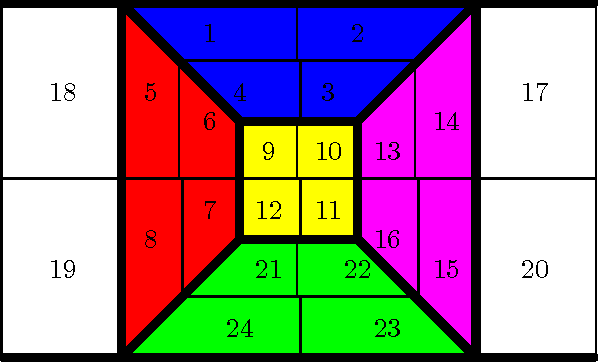

Oct 14, 2014

Abstract: For natural and artificial systems with some symmetry structure, computational understanding and manipulation can be achieved without learning by exploiting the algebraic structure. Here we describe this algebraic coordinatization method and apply it to permutation puzzles. Coordinatization yields a structural understanding, not just solutions for the puzzles.

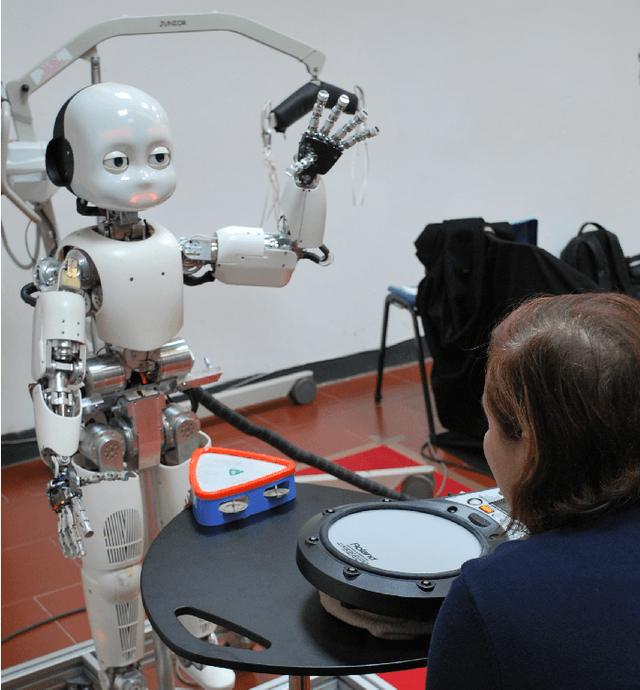

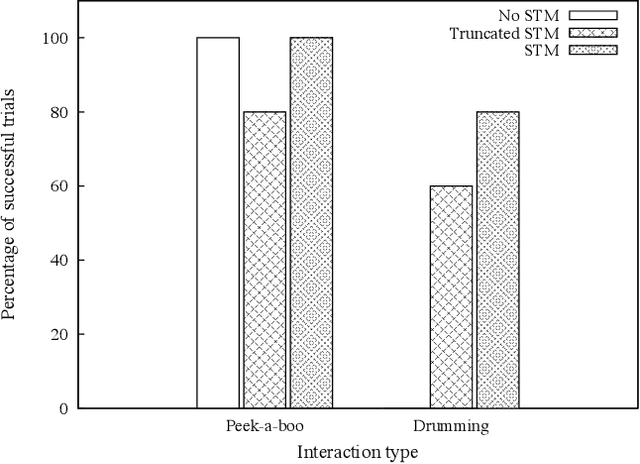

Interaction Histories and Short Term Memory: Enactive Development of Turn-taking Behaviors in a Childlike Humanoid Robot

Feb 25, 2012

Abstract: In this article, an enactive architecture is described that allows a humanoid robot to learn to compose simple actions into turn-taking behaviors while playing interaction games with a human partner. The robot's action choices are reinforced by social feedback from the human in the form of visual attention and measures of behavioral synchronization. We demonstrate that the system can acquire and switch between behaviors learned through interaction based on social feedback from the human partner. The role of reinforcement based on a short term memory of the interaction is experimentally investigated. Results indicate that feedback based only on the immediate state is insufficient to learn certain turn-taking behaviors. Therefore, some history of the interaction must be considered in the acquisition of turn-taking, which can be efficiently handled through the use of short term memory.
