Paola Ardón

Model-Based Underwater 6D Pose Estimation from RGB

Feb 14, 2023

Abstract: Object pose estimation underwater allows an autonomous system to perform tracking and intervention tasks. Nonetheless, underwater target pose estimation is remarkably challenging due to, among many factors, limited visibility, light scattering, cluttered environments, and constantly varying water conditions. One approach is to employ sonar or laser sensing to acquire 3D data, but besides being costly, the resulting data is normally noisy. For this reason, the community has focused on extracting pose estimates from RGB input. However, the literature is scarce and exhibits low detection accuracy. In this work, we propose an approach consisting of a 2D object detection stage and a 6D pose estimation stage that reliably obtains object poses in different underwater scenarios. To test our pipeline, we collect and make available a dataset of 4 objects in 10 different real scenes with annotations for object detection and pose estimation. We test our proposal in real and synthetic settings and compare its performance with similar end-to-end methodologies for 6D object pose estimation. Our dataset contains some challenging objects with symmetrical shapes and poor texture. Regardless of such object characteristics, our proposed method outperforms the state of the art in pose accuracy by ~8%. We finally demonstrate the reliability of our pose estimation pipeline through underwater manipulation experiments in a reaching task.

Underwater Robot Manipulation: Advances, Challenges and Prospective Ventures

Jan 09, 2022

Abstract: Underwater manipulation is one of the most remarkable ongoing research subjects in robotics. Intervention autonomous underwater vehicles (I-AUVs) not only have to cope with the technical challenges associated with traditional manipulation tasks but must do so while currents and waves perturb the stability of the vehicle, and low-light, turbid water conditions impede perception of the surroundings. Certainly, the dynamic nature and our limited understanding of the marine environment hinder the autonomous performance of underwater robot manipulation. This manuscript discusses previous research and the factors that limit the long-envisioned prospects of autonomous underwater manipulation, and finally highlights research directions that have the potential to improve the autonomy capabilities of I-AUVs.

Building Affordance Relations for Robotic Agents - A Review

May 14, 2021

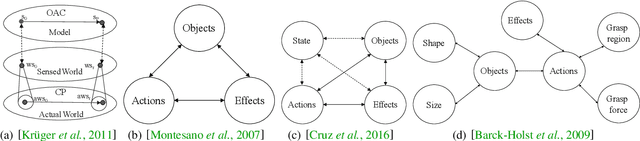

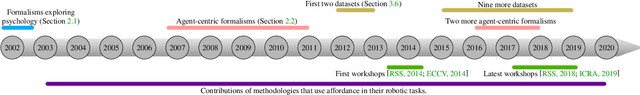

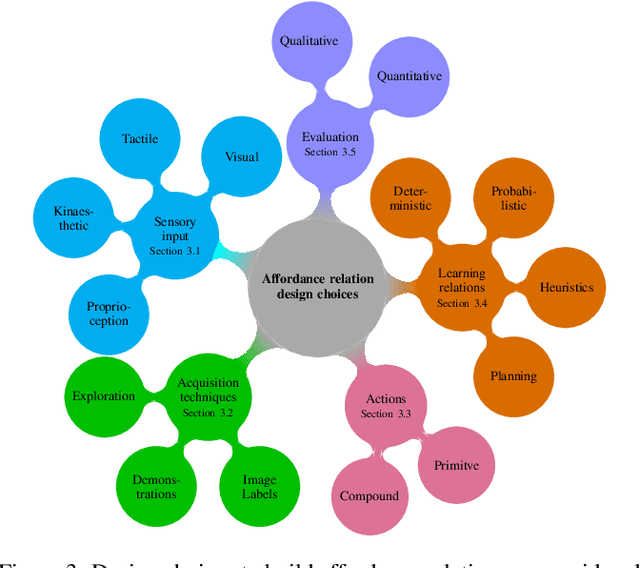

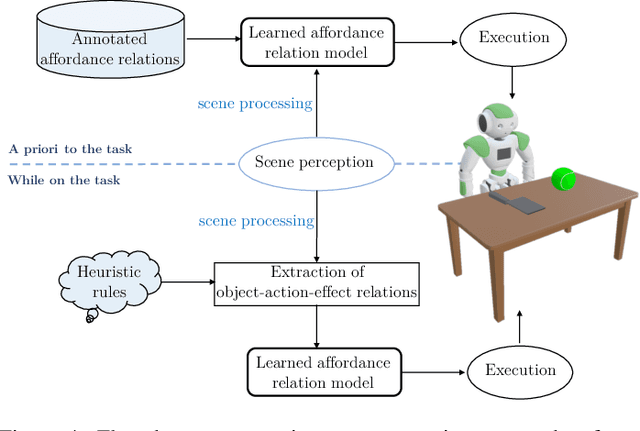

Abstract: Affordances describe the possibilities for an agent to perform actions with an object. While the significance of the affordance concept has been previously studied from varied perspectives, such as psychology and cognitive science, these approaches are not always sufficient to enable direct transfer, in the sense of implementations, to artificial intelligence (AI)-based systems and robotics. However, many efforts have been made to pragmatically employ the concept of affordances, as it represents great potential for AI agents to effectively bridge perception to action. In this survey, we review and find common ground amongst different strategies that use the concept of affordances within robotic tasks, and build on these methods to provide guidance for including affordances as a mechanism to improve autonomy. To this end, we outline common design choices for building representations of affordance relations, and their implications on the generalisation capabilities of an agent when facing previously unseen scenarios. Finally, we identify and discuss a range of interesting research directions involving affordances that have the potential to improve the capabilities of an AI agent.

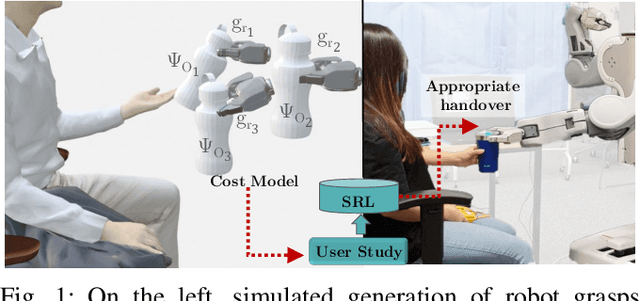

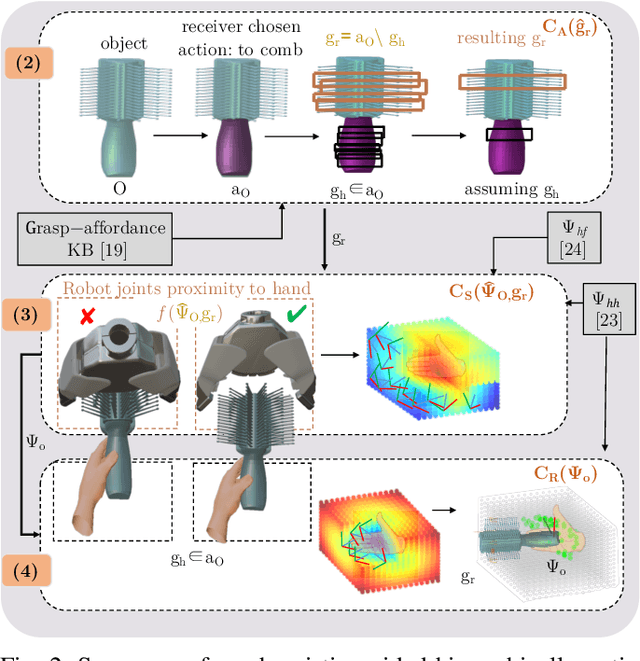

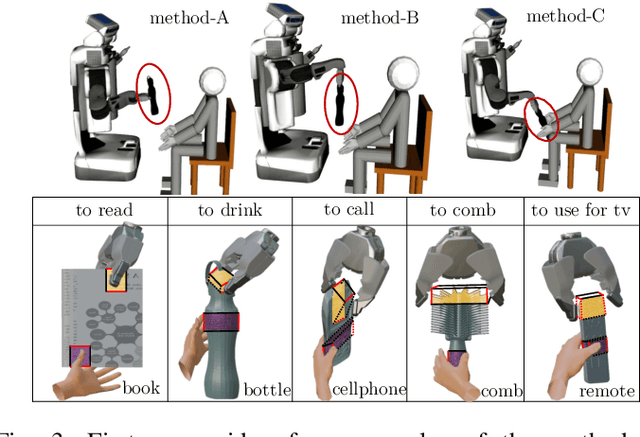

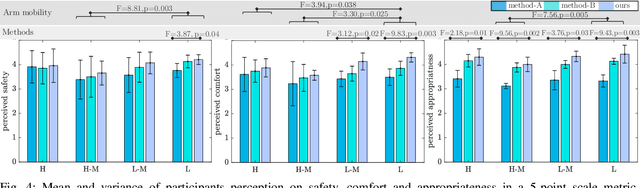

Affordance-Aware Handovers with Human Arm Mobility Constraints

Oct 29, 2020

Abstract: Reasoning about object handover configurations allows an assistive agent to estimate the appropriateness of handover for a receiver with different arm mobility capacities. While there are existing approaches to estimating the effectiveness of handovers, their findings are limited to users without arm mobility impairments and to specific objects. Therefore, current state-of-the-art approaches are unable to hand over novel objects to receivers with different arm mobility capacities. We propose a method that generalises handover behaviours to previously unseen objects, subject to the constraint of a user's arm mobility levels and the task context. We propose a heuristic-guided hierarchically optimised cost whose optimisation adapts object configurations for receivers with low arm mobility. This also ensures that the robot grasps consider the context of the user's upcoming task, i.e., the usage of the object. To understand preferences over handover configurations, we report on the findings of an online study, wherein we presented different handover methods, including ours, to 259 users with different levels of arm mobility. We encapsulate these preferences in an SRL that is able to reason about the most suitable handover configuration given a receiver's arm mobility and upcoming task. We find that people's preferences over handover methods are correlated to their arm mobility capacities. In experiments with a PR2 robotic platform, we obtained an average handover accuracy of 90.8% when generalising handovers to novel objects.

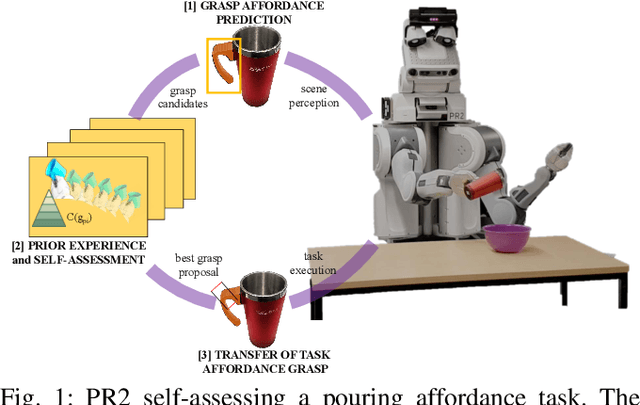

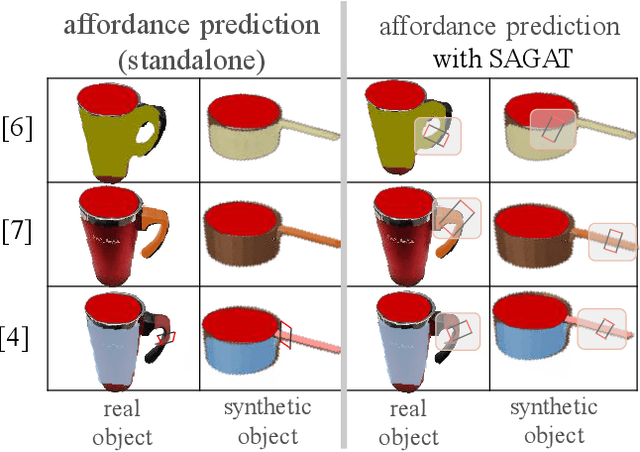

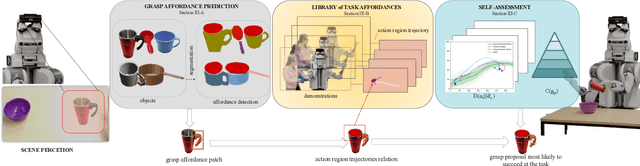

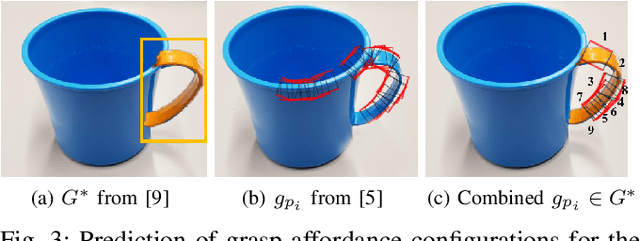

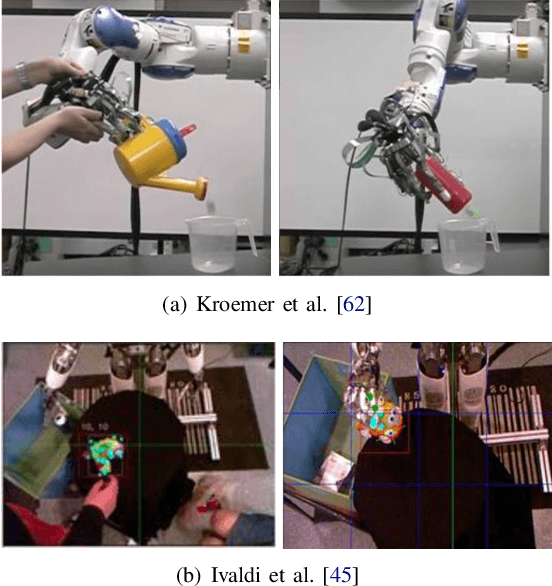

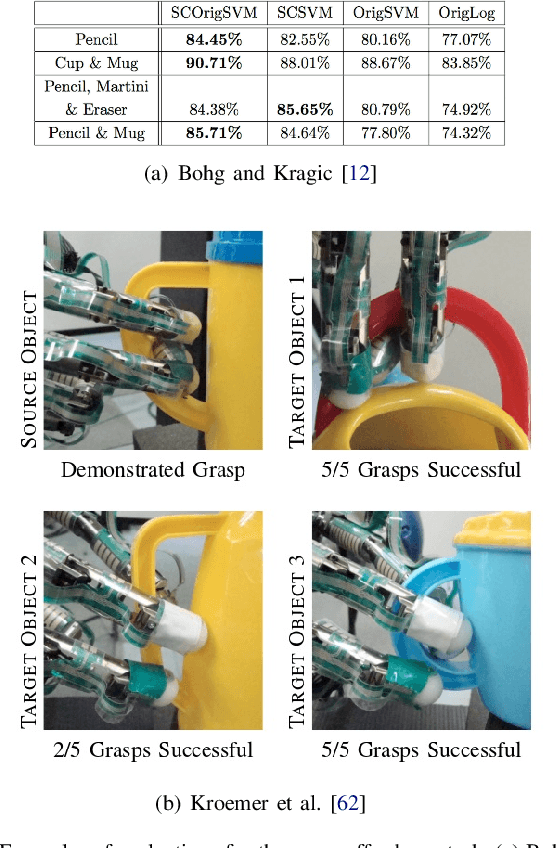

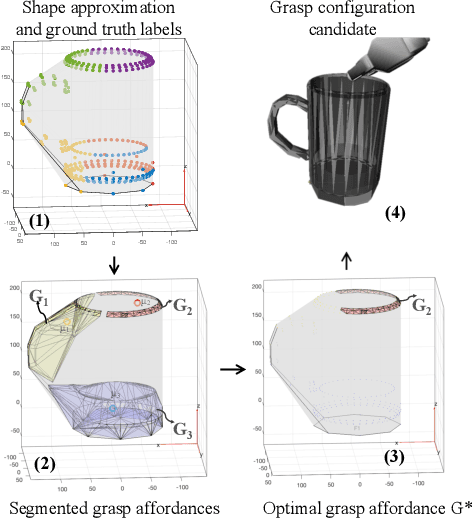

Self-Assessment of Grasp Affordance Transfer

Jul 04, 2020

Abstract: Reasoning about object grasp affordances allows an autonomous agent to estimate the most suitable grasp to execute a task. While current approaches for estimating grasp affordances are effective, their prediction is driven by hypotheses on visual features rather than an indicator of a proposal's suitability for an affordance task. Consequently, these works cannot guarantee any level of performance when executing a task and, in fact, cannot even ensure successful task completion. In this work, we present a pipeline for self-assessment of grasp affordance transfer (SAGAT) based on prior experiences. We visually detect a grasp affordance region to extract multiple grasp affordance configuration candidates. Using these candidates, we forward simulate the outcome of executing the affordance task to analyse the relation between task outcome and grasp candidates. The relations are ranked by performance success with a heuristic confidence function and used to build a library of affordance task experiences. The library is later queried to perform one-shot transfer estimation of the best grasp configuration on new objects. Experimental evaluation shows that our method exhibits a significant performance improvement of up to 11.7% over current state-of-the-art methods on grasp affordance detection. Experiments on a PR2 robotic platform demonstrate that our method can be deployed highly reliably on real-world task affordance problems.

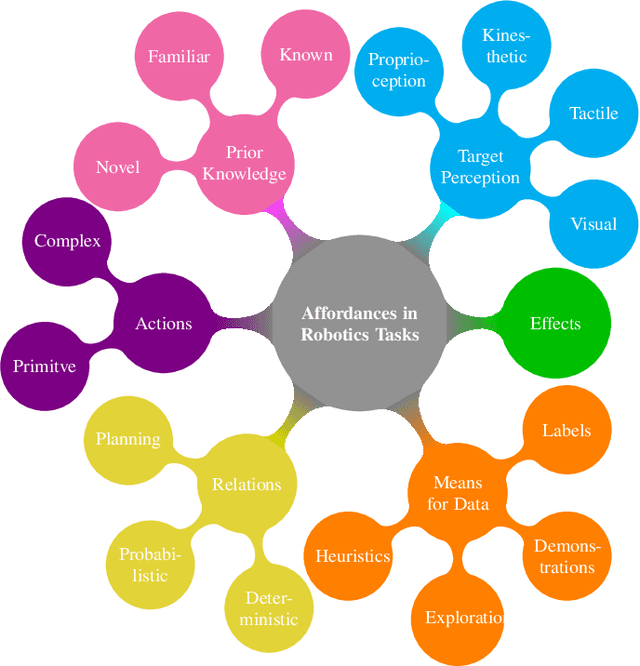

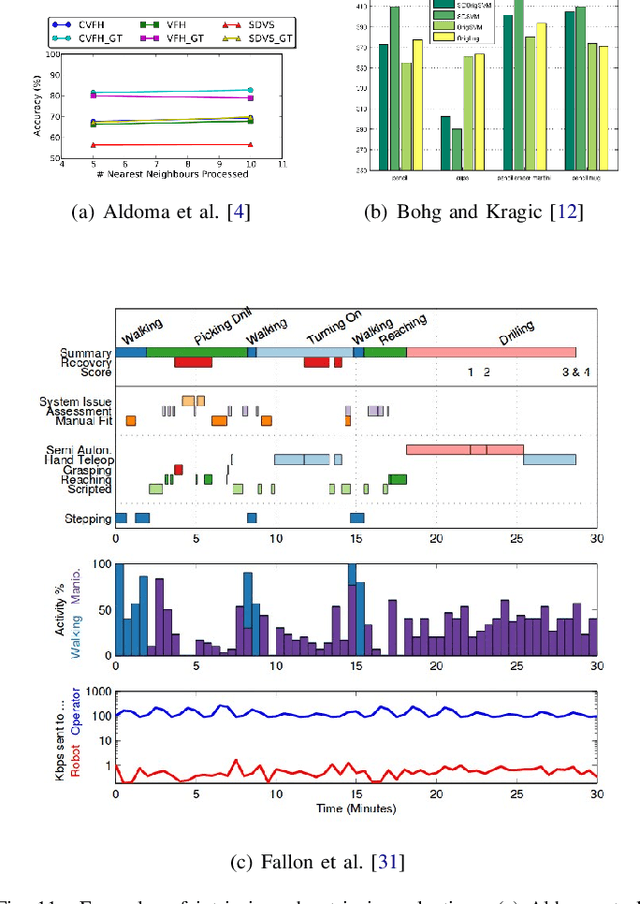

Affordances in Robotic Tasks -- A Survey

Apr 15, 2020

Abstract: Affordances are key attributes of what must be perceived by an autonomous robotic agent in order to effectively interact with novel objects. Historically, the concept derives from the literature in psychology and cognitive science, where affordances are discussed in a way that makes it hard for the definition to be directly transferred to computational specifications useful for robots. This review article is focused specifically on robotics, so we discuss the related literature from this perspective. In this survey, we classify the literature and try to find common ground amongst different approaches with a view to application in robotics. We propose a categorisation based on the level of prior knowledge that is assumed to build the relationship among different affordance components that matter for a particular robotic task. We also identify areas for future improvement and discuss possible directions that are likely to be fruitful in terms of impact on robotics practice.

Learning Generalisable Coupling Terms for Obstacle Avoidance via Low-dimensional Geometric Descriptors

Jun 24, 2019

Abstract: Unforeseen events are frequent in the real-world environments where robots are expected to assist, raising the need for fast replanning of the policy in execution to guarantee the safety of the system and the environment. Inspired by human behavioural studies of obstacle avoidance and route selection, this paper presents a hierarchical framework which generates reactive yet bounded obstacle avoidance behaviours through a multi-layered analysis. The framework leverages the strengths of learning techniques and the versatility of dynamic movement primitives to efficiently unify perception, decision, and action levels via low-dimensional geometric descriptors of the environment. Experimental evaluation on synthetic environments and a real anthropomorphic manipulator shows that the robustness and generalisation capabilities of the proposed approach, regardless of the obstacle avoidance scenario, make it suitable for robotic systems in real-world environments.

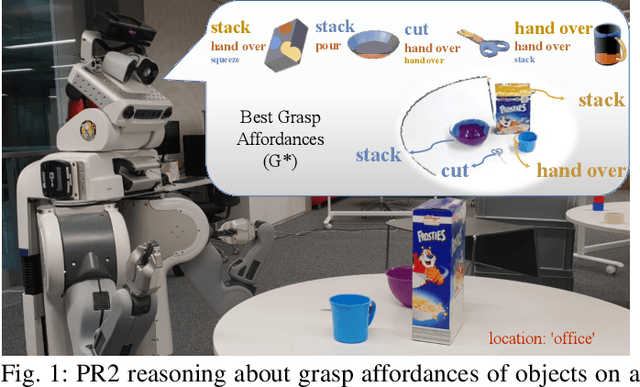

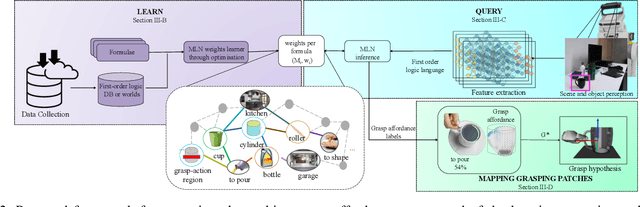

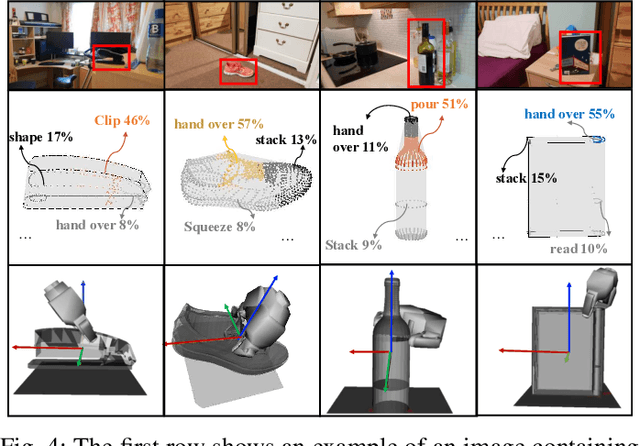

Learning Grasp Affordance Reasoning through Semantic Relations

Jun 24, 2019

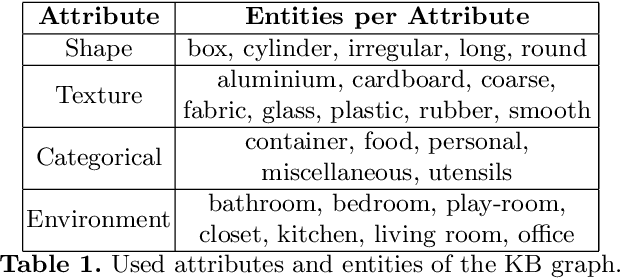

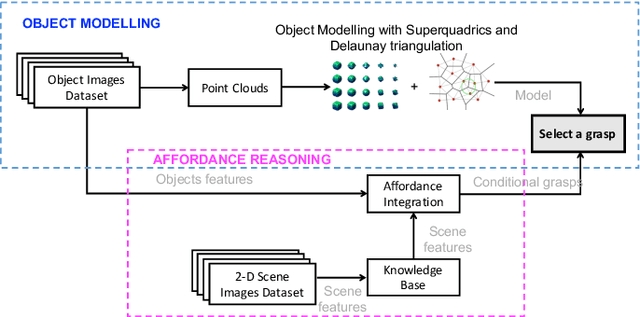

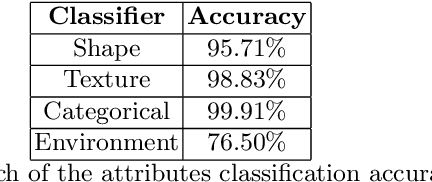

Abstract: Reasoning about object affordances allows an autonomous agent to perform generalised manipulation tasks among object instances. While current approaches to grasp affordance estimation are effective, they are limited to a single hypothesis. We present an approach for detection and extraction of multiple grasp affordances on an object via visual input. We define semantics as a combination of multiple attributes, which yields benefits in terms of generalisation for grasp affordance prediction. We use Markov Logic Networks to build a knowledge base graph representation to obtain a probability distribution of grasp affordances for an object. To harvest the knowledge base, we collect and make available a novel dataset that relates different semantic attributes. We achieve reliable mappings of the predicted grasp affordances on the object by learning prototypical grasping patches from several examples. We show our method's generalisation capabilities on grasp affordance prediction for novel instances and compare with similar methods in the literature. Moreover, using a robotic platform in simulated and real scenarios, we evaluate the success of the grasping task when conditioned on the grasp affordance prediction.

Reasoning on Grasp-Action Affordances

May 25, 2019

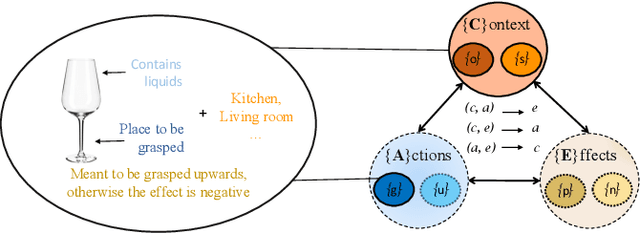

Abstract: Artificial intelligence is essential to succeed in challenging activities that involve dynamic environments, such as object manipulation tasks in indoor scenes. Most of the state-of-the-art literature explores robotic grasping methods by focusing exclusively on attributes of the target object. When it comes to human perceptual learning approaches, these physical qualities are not only inferred from the object, but also from the characteristics of the surroundings. This work proposes a method that includes environmental context to reason on an object affordance to then deduce its grasping regions. This affordance is reasoned using a ranked association of visual semantic attributes harvested in a knowledge base graph representation. The framework is assessed using standard learning evaluation metrics and the zero-shot affordance prediction scenario. The resulting grasping areas are compared with unseen labelled data to assess their accuracy matching percentage. The outcome of this evaluation suggests the autonomy capabilities of the proposed method for object interaction applications in indoor environments.

Learning and Composing Primitive Skills for Dual-arm Manipulation

May 25, 2019

Abstract: In an attempt to confer robots with complex manipulation capabilities, dual-arm anthropomorphic systems have become an important research topic in the robotics community. Most approaches in the literature rely upon a great understanding of the dynamics underlying the system's behaviour and yet offer limited autonomous generalisation capabilities. To address these limitations, this work proposes a modelisation for dual-arm manipulators based on dynamic movement primitives lying in two orthogonal spaces. The modularity and learning capabilities of this model are leveraged to formulate a novel end-to-end learning-based framework which (i) learns a library of primitive skills from human demonstrations, and (ii) composes such knowledge simultaneously and sequentially to confront novel scenarios. The feasibility of the proposal is evaluated by teaching the iCub humanoid the basic skills to succeed in simulated dual-arm pick-and-place tasks. The results suggest that the learning and generalisation capabilities of the proposed framework extend to autonomously conducting undemonstrated dual-arm manipulation tasks.
