Michael Mistry

Learning Long-Horizon Robot Manipulation Skills via Privileged Action

Feb 21, 2025

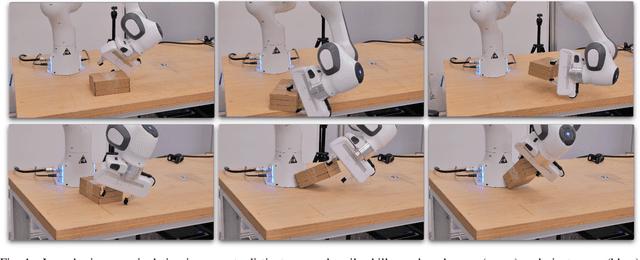

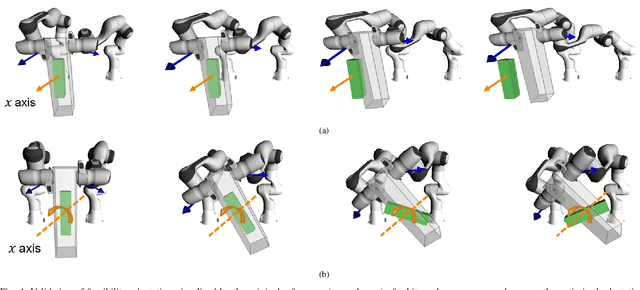

Abstract:Long-horizon contact-rich tasks are challenging to learn with reinforcement learning, due to ineffective exploration of high-dimensional state spaces with sparse rewards. The learning process often gets stuck in local optimum and demands task-specific reward fine-tuning for complex scenarios. In this work, we propose a structured framework that leverages privileged actions with curriculum learning, enabling the policy to efficiently acquire long-horizon skills without relying on extensive reward engineering or reference trajectories. Specifically, we use privileged actions in simulation with a general training procedure that would be infeasible to implement in real-world scenarios. These privileges include relaxed constraints and virtual forces that enhance interaction and exploration with objects. Our results successfully achieve complex multi-stage long-horizon tasks that naturally combine non-prehensile manipulation with grasping to lift objects from non-graspable poses. We demonstrate generality by maintaining a parsimonious reward structure and showing convergence to diverse and robust behaviors across various environments. Additionally, real-world experiments further confirm that the skills acquired using our approach are transferable to real-world environments, exhibiting robust and intricate performance. Our approach outperforms state-of-the-art methods in these tasks, converging to solutions where others fail.

Neural Lyapunov and Optimal Control

May 24, 2023

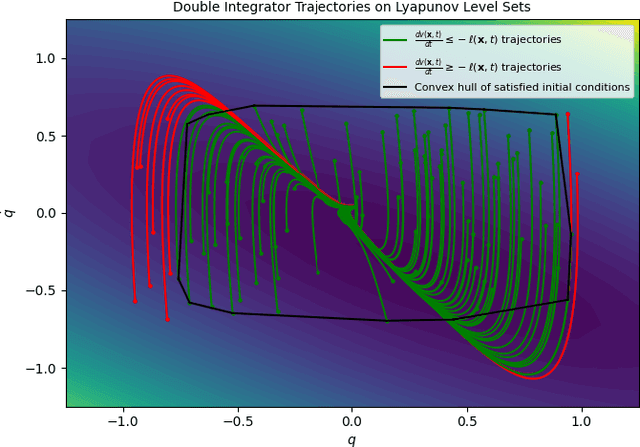

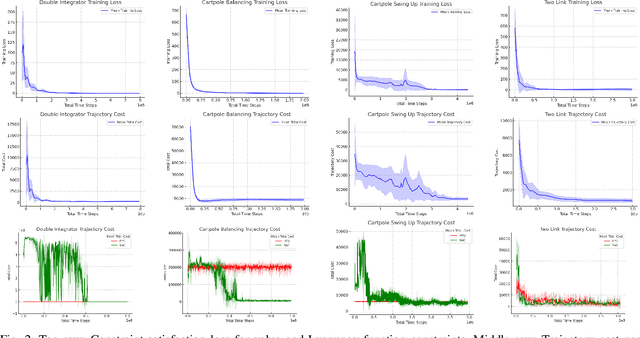

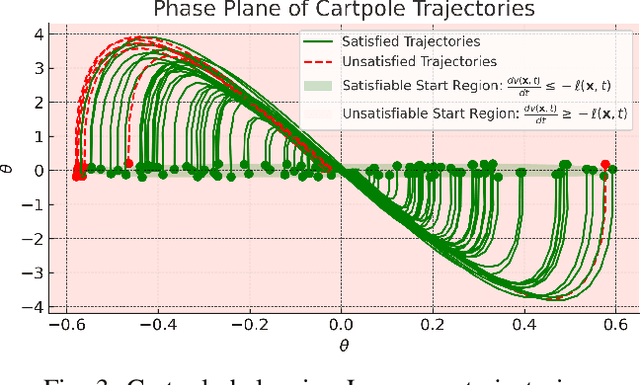

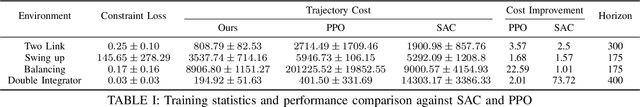

Abstract:Optimal control (OC) is an effective approach to controlling complex dynamical systems. However, traditional approaches to parameterising and learning controllers in optimal control have been ad-hoc, collecting data and fitting it to neural networks. However, this can lead to learnt controllers ignoring constraints like optimality and time variability. We introduce a unified framework that simultaneously solves control problems while learning corresponding Lyapunov or value functions. Our method formulates OC-like mathematical programs based on the Hamilton-Jacobi-Bellman (HJB) equation. We leverage the HJB optimality constraint and its relaxation to learn time-varying value and Lyapunov functions, implicitly ensuring the inclusion of constraints. We show the effectiveness of our approach on linear and nonlinear control-affine problems. Additionally, we demonstrate significant reductions in planning horizons (up to a factor of 25) when incorporating the learnt functions into Model Predictive Controllers.

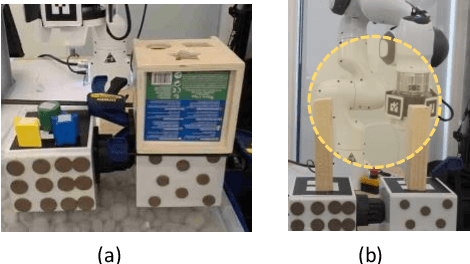

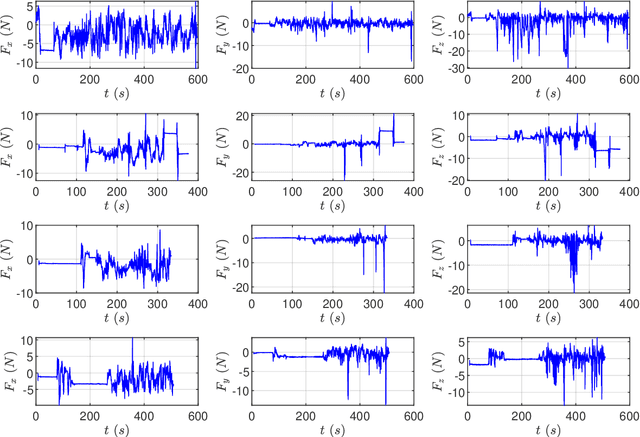

Achieving Dexterous Bidirectional Interaction in Uncertain Conditions for Medical Robotics

Jun 20, 2022

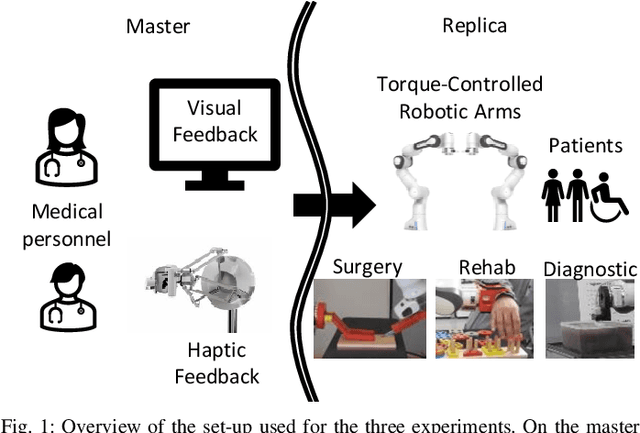

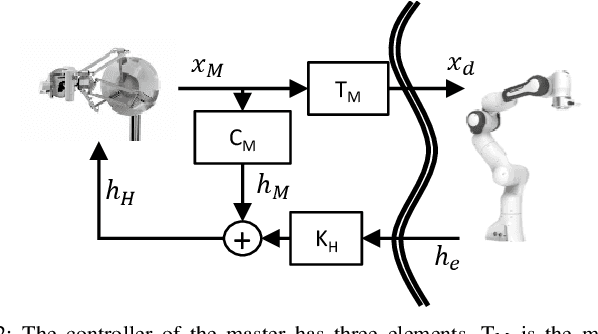

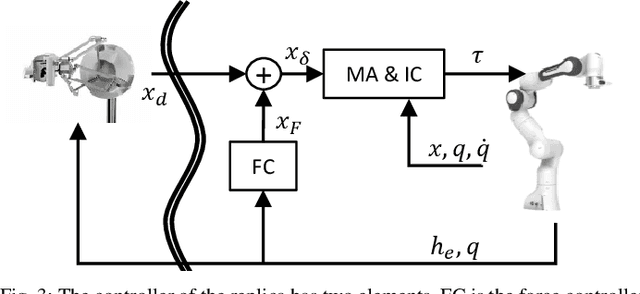

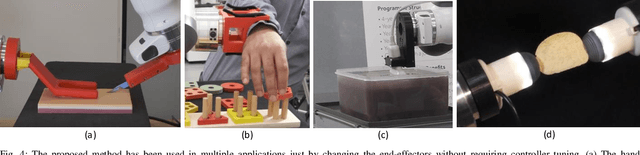

Abstract:Medical robotics can help improve and extend the reach of healthcare services. A major challenge for medical robots is the complex physical interaction between the robot and the patients which is required to be safe. This work presents the preliminary evaluation of a recently introduced control architecture based on the Fractal Impedance Control (FIC) in medical applications. The deployed FIC architecture is robust to delay between the master and the replica robots. It can switch online between an admittance and impedance behaviour, and it is robust to interaction with unstructured environments. Our experiments analyse three scenarios: teleoperated surgery, rehabilitation, and remote ultrasound scan. The experiments did not require any adjustment of the robot tuning, which is essential in medical applications where the operators do not have an engineering background required to tune the controller. Our results show that is possible to teleoperate the robot to cut using a scalpel, do an ultrasound scan, and perform remote occupational therapy. However, our experiments also highlighted the need for a better robots embodiment to precisely control the system in 3D dynamic tasks.

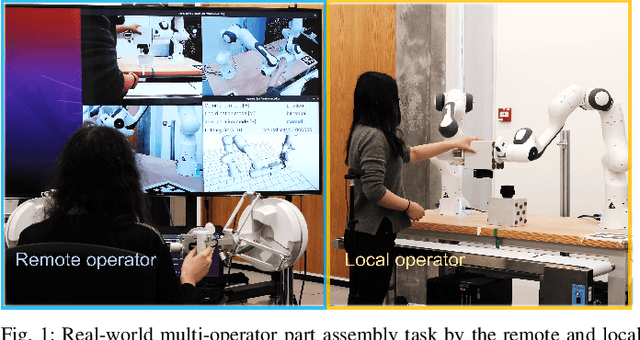

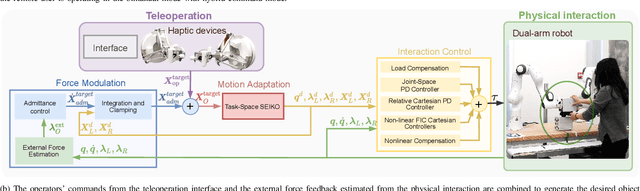

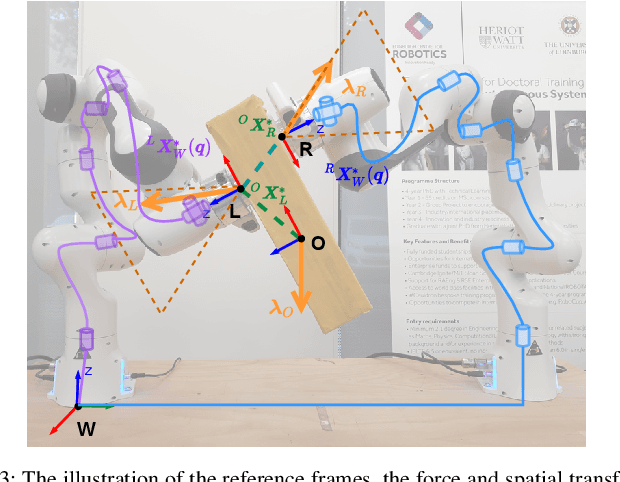

Collaborative Bimanual Manipulation Using Optimal Motion Adaptation and Interaction Control

Jun 01, 2022

Abstract:This work developed collaborative bimanual manipulation for reliable and safe human-robot collaboration, which allows remote and local human operators to work interactively for bimanual tasks. We proposed an optimal motion adaptation to retarget arbitrary commands from multiple human operators into feasible control references. The collaborative manipulation framework has three main modules: (1) contact force modulation for compliant physical interactions with objects via admittance control; (2) task-space sequential equilibrium and inverse kinematics optimization, which adapts interactive commands from multiple operators to feasible motions by satisfying the task constraints and physical limits of the robots; and (3) an interaction controller adopted from the fractal impedance control, which is robust to time delay and stable to superimpose multiple control efforts for generating desired joint torques and controlling the dual-arm robots. Extensive experiments demonstrated the capability of the collaborative bimanual framework, including (1) dual-arm teleoperation that adapts arbitrary infeasible commands that violate joint torque limits into continuous operations within safe boundaries, compared to failures without the proposed optimization; (2) robust maneuver of a stack of objects via physical interactions in presence of model inaccuracy; (3) collaborative multi-operator part assembly, and teleoperated industrial connector insertion, which validate the guaranteed stability of reliable human-robot co-manipulation.

Efficient Learning of Inverse Dynamics Models for Adaptive Computed Torque Control

May 10, 2022

Abstract:Modelling robot dynamics accurately is essential for control, motion optimisation and safe human-robot collaboration. Given the complexity of modern robotic systems, dynamics modelling remains non-trivial, mostly in the presence of compliant actuators, mechanical inaccuracies, friction and sensor noise. Recent efforts have focused on utilising data-driven methods such as Gaussian processes and neural networks to overcome these challenges, as they are capable of capturing these dynamics without requiring extensive knowledge beforehand. While Gaussian processes have shown to be an effective method for learning robotic dynamics with the ability to also represent the uncertainty in the learned model through its variance, they come at a cost of cubic time complexity rather than linear, as is the case for deep neural networks. In this work, we leverage the use of deep kernel models, which combine the computational efficiency of deep learning with the non-parametric flexibility of kernel methods (Gaussian processes), with the overarching goal of realising an accurate probabilistic framework for uncertainty quantification. Through using the predicted variance, we adapt the feedback gains as more accurate models are learned, leading to low-gain control without compromising tracking accuracy. Using simulated and real data recorded from a seven degree-of-freedom robotic manipulator, we illustrate how using stochastic variational inference with deep kernel models increases compliance in the computed torque controller, and retains tracking accuracy. We empirically show how our model outperforms current state-of-the-art methods with prediction uncertainty for online inverse dynamics model learning, and solidify its adaptation and generalisation capabilities across different setups.

Agile Maneuvers in Legged Robots: a Predictive Control Approach

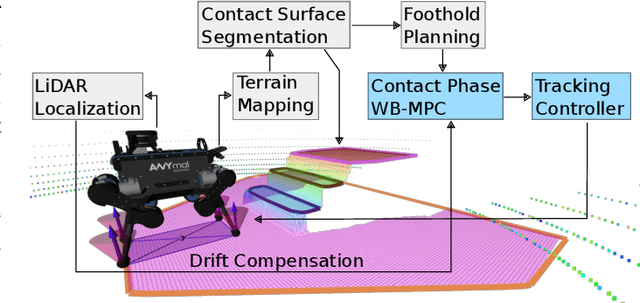

Mar 14, 2022

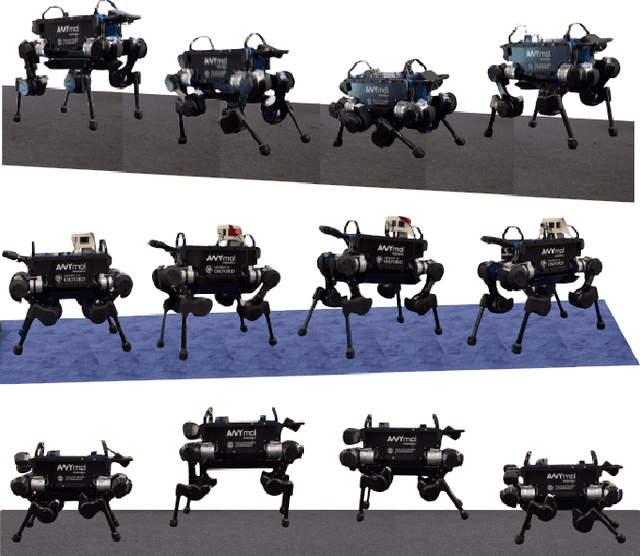

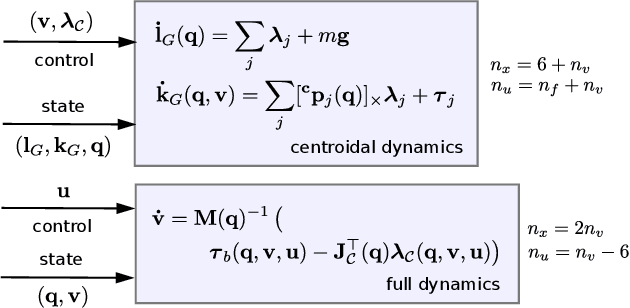

Abstract:Achieving agile maneuvers through multiple contact phases has been a longstanding challenge in legged robotics. It requires to derive motion plans and local control feedback policies in real-time to handle the nonholonomy of the kinetic momenta. While a few recent predictive control approaches based on centroidal momentum have been able to generate dynamic motions, they assume unlimited actuation capabilities. This assumption is quite restrictive and does not hold for agile maneuvers on most robots. In this work, we present a contact-phase predictive and state-feedback controllers that enables legged robots to plan and perform agile locomotion skills. Our predictive controller models the contact phases using a hybrid paradigm that considers the robot's actuation limits and full dynamics. We demonstrate the benefits of our approach on agile maneuvers on ANYmal robots in realistic scenarios. To the best of our knowledge, our work is the first to show that predictive control can handle actuation limits, generate agile locomotion maneuvers and execute locally optimal feedback policies on hardware without the use of a separate whole-body controller.

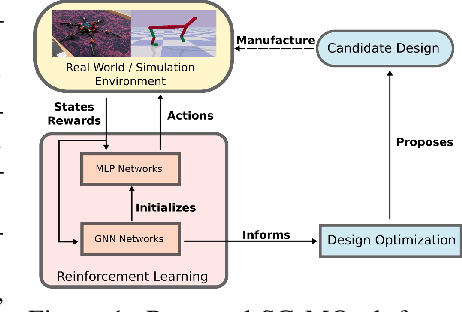

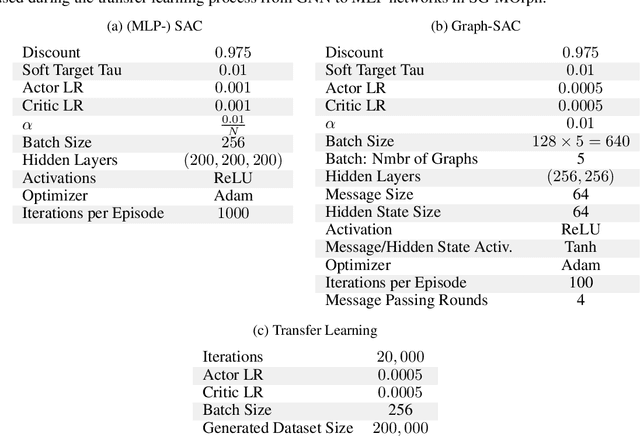

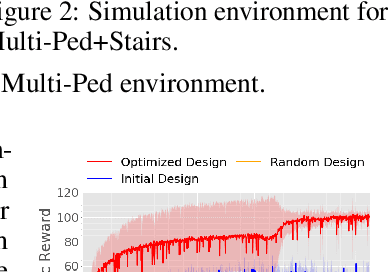

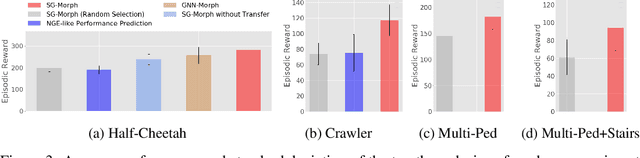

What Robot do I Need? Fast Co-Adaptation of Morphology and Control using Graph Neural Networks

Nov 03, 2021

Abstract:The co-adaptation of robot morphology and behaviour becomes increasingly important with the advent of fast 3D-manufacturing methods and efficient deep reinforcement learning algorithms. A major challenge for the application of co-adaptation methods to the real world is the simulation-to-reality-gap due to model and simulation inaccuracies. However, prior work focuses primarily on the study of evolutionary adaptation of morphologies exploiting analytical models and (differentiable) simulators with large population sizes, neglecting the existence of the simulation-to-reality-gap and the cost of manufacturing cycles in the real world. This paper presents a new approach combining classic high-frequency deep neural networks with computational expensive Graph Neural Networks for the data-efficient co-adaptation of agents with varying numbers of degrees-of-freedom. Evaluations in simulation show that the new method can co-adapt agents within such a limited number of production cycles by efficiently combining design optimization with offline reinforcement learning, that it allows for the direct application to real-world co-adaptation tasks in future work

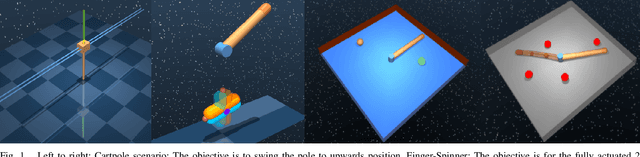

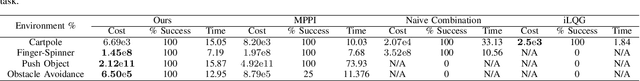

Optimal Control via Combined Inference and Numerical Optimization

Sep 23, 2021

Abstract:Derivative based optimization methods are efficient at solving optimal control problems near local optima. However, their ability to converge halts when derivative information vanishes. The inference approach to optimal control does not have strict requirements on the objective landscape. However, sampling, the primary tool for solving such problems, tends to be much slower in computation time. We propose a new method that combines second order methods with inference. We utilise the Kullback Leibler (KL) control framework to formulate an inference problem that computes the optimal controls from an adaptive distribution approximating the solution of the second order method. Our method allows for combining simple convex and non convex cost functions. This simplifies the process of cost function design and leverages the strengths of both inference and second order optimization. We compare our method to Model Predictive Path Integral (MPPI) and iterative Linear Quadratic Regulator (iLQG), outperforming both in sample efficiency and quality on manipulation and obstacle avoidance tasks.

A Unified Model with Inertia Shaping for Highly Dynamic Jumps of Legged Robots

Sep 09, 2021

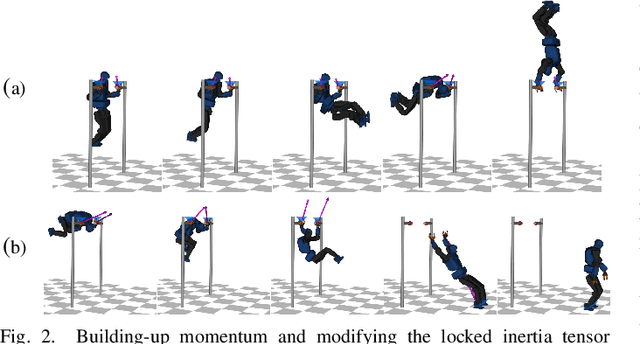

Abstract:To achieve highly dynamic jumps of legged robots, it is essential to control the rotational dynamics of the robot. In this paper, we aim to improve the jumping performance by proposing a unified model for planning highly dynamic jumps that can approximately model the centroidal inertia. This model abstracts the robot as a single rigid body for the base and point masses for the legs. The model is called the Lump Leg Single Rigid Body Model (LL-SRBM) and can be used to plan motions for both bipedal and quadrupedal robots. By taking the effects of leg dynamics into account, LL-SRBM provides a computationally efficient way for the motion planner to change the centroidal inertia of the robot with various leg configurations. Concurrently, we propose a novel contact detection method by using the norm of the average spatial velocity. After the contact is detected, the controller is switched to force control to achieve a soft landing. Twisting jump and forward jump experiments on the bipedal robot SLIDER and quadrupedal robot ANYmal demonstrate the improved jump performance by actively changing the centroidal inertia. These experiments also show the generalization and the robustness of the integrated planning and control framework.

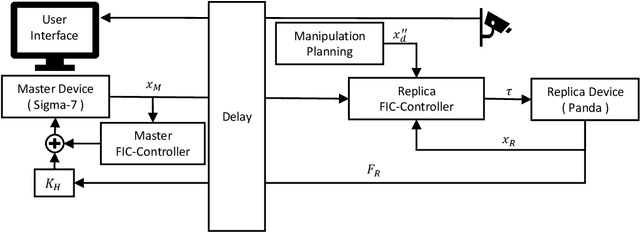

Fine Manipulation and Dynamic Interaction in Haptic Teleoperation

Sep 09, 2021

Abstract:Teleoperation of robots enables remote intervention in distant and dangerous tasks without putting the operator in harm's way. However, remote operation faces fundamental challenges due to limits in communication delay and bandwidth. The proposed work improves the performances of teleoperation architecture based on Fractal Impedance Controller (FIC), by integrating the most recent manipulation architecture in the haptic teleoperation pipeline. The updated controller takes advantage of the inverse kinematics optimisation in the manipulation, and hence improves dynamic interactions during fine manipulation without renouncing the robustness of the FIC controller. Additionally, the proposed method allows an online trade-off between the manipulation controller and the teleoperated behaviour, allowing a safe superimposition of these two behaviours. The validated experimental results show that the proposed method is robust to reduced communication bandwidth and delays. Moreover, we demonstrated that the remote teleoperated robot remains stable and safe to interact with, even when the communication with the master side is abruptly interrupted.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge