Maria E. Cabrera

Affordance-Aware Handovers with Human Arm Mobility Constraints

Oct 29, 2020

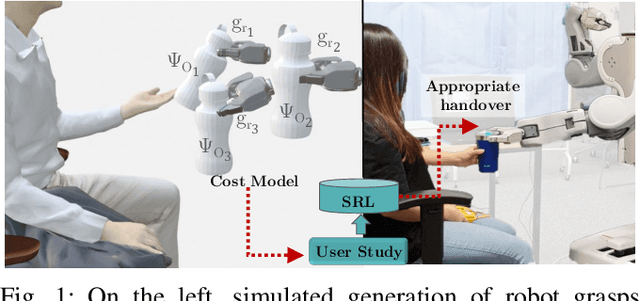

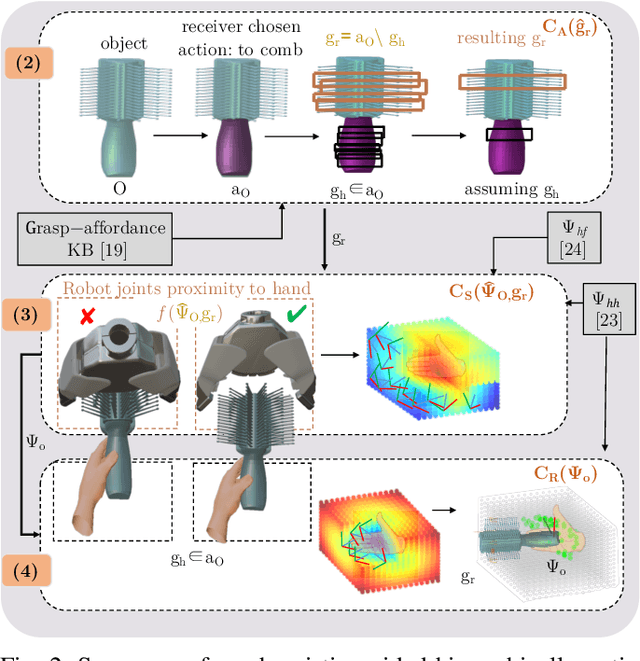

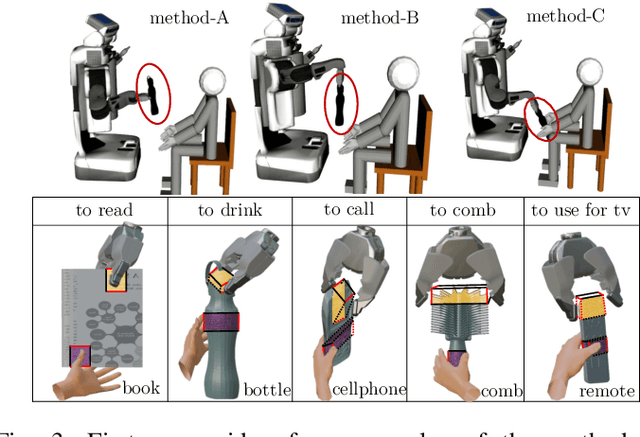

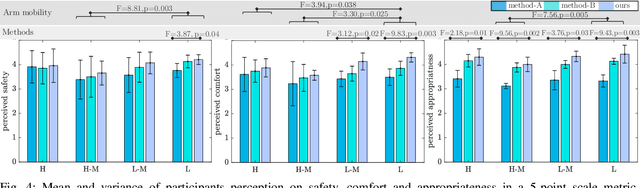

Abstract: Reasoning about object handover configurations allows an assistive agent to estimate the appropriateness of a handover for receivers with different arm mobility capacities. While there are existing approaches to estimating the effectiveness of handovers, their findings are limited to users without arm mobility impairments and to specific objects. Therefore, current state-of-the-art approaches are unable to hand over novel objects to receivers with different arm mobility capacities. We propose a method that generalises handover behaviours to previously unseen objects, subject to the constraint of a user's arm mobility levels and the task context. We propose a heuristic-guided hierarchically optimised cost whose optimisation adapts object configurations for receivers with low arm mobility. This also ensures that the robot's grasps consider the context of the user's upcoming task, i.e., the usage of the object. To understand preferences over handover configurations, we report on the findings of an online study, wherein we presented different handover methods, including ours, to $259$ users with different levels of arm mobility. We encapsulate these preferences in an SRL that is able to reason about the most suitable handover configuration given a receiver's arm mobility and upcoming task. We find that people's preferences over handover methods are correlated with their arm mobility capacities. In experiments with a PR2 robotic platform, we obtained an average handover accuracy of $90.8\%$ when generalising handovers to novel objects.

Communication Modalities for Supervised Teleoperation in Highly Dexterous Tasks - Does one size fit all?

Apr 17, 2017

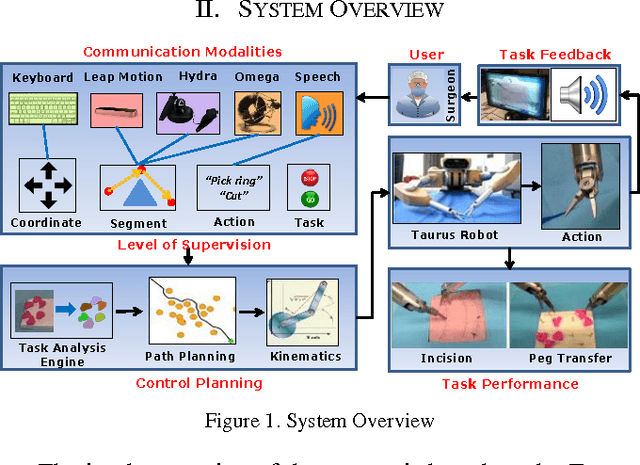

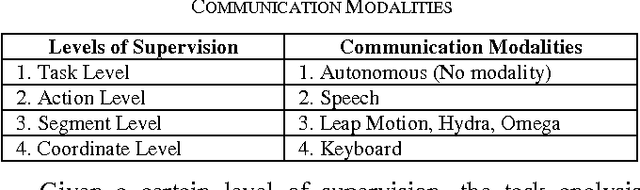

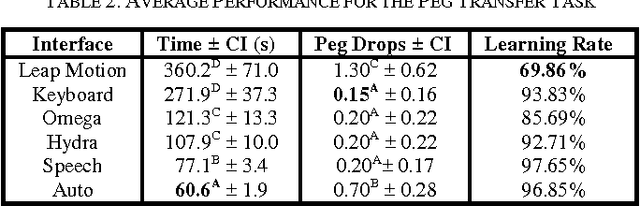

Abstract: This study tries to explain the connection between communication modalities and levels of supervision in teleoperation during a dexterous task, like surgery. This concept is applied to two surgery-related tasks: incision and peg transfer. It was found that as the complexity of the task escalates, the combination linking human supervision with a more expressive modality shows better performance than other combinations of modalities and control. More specifically, in the peg transfer task, the combination of speech modality and action-level supervision achieves a shorter task completion time (77.1 ± 3.4 s) with fewer mistakes (0.20 ± 0.17 pegs dropped).
