A Knowledge Driven Approach to Adaptive Assistance Using Preference Reasoning and Explanation

Dec 05, 2020

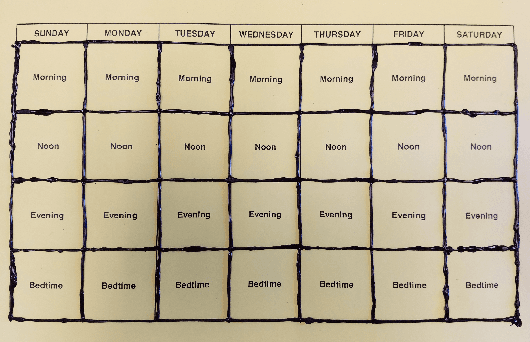

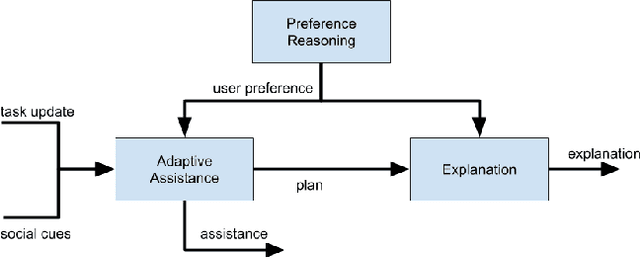

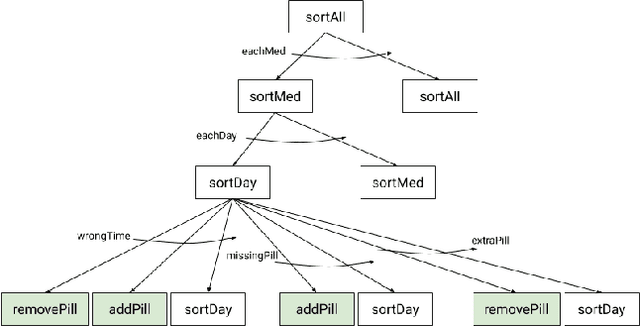

Socially assistive robots (SARs) need to make their behavior transparent by explaining their reasoning, and both the reasoning and the explanations should reflect the user's preferences and goals. To work towards this need for interpretable reasoning and representations, we propose that the robot use Analogical Theory of Mind to infer what the user is trying to do and the Hint Engine to find appropriate assistance based on that inferred goal. If the user is unsure or confused, the robot provides an explanation generated by the Explanation Synthesizer. The explanation helps the user understand what the robot inferred about the user's preferences and why it chose the assistance it gave. A knowledge-driven approach makes the reasoning about preferences, assistance, and explanations transparent, which facilitates the incorporation of user feedback and allows the robot to learn and adapt to the user.
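The three-stage flow described in the abstract (infer the goal, choose assistance, explain on request) can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the `KNOWN_GOALS` table, the function names, and the example actions are hypothetical. The goal inference is a simple set-overlap score standing in for Analogical Theory of Mind, not an implementation of it or of the paper's Hint Engine or Explanation Synthesizer.

```python
from dataclasses import dataclass

# Hypothetical knowledge base: each goal lists the actions that suggest it
# and one hint the robot could offer. None of this comes from the paper.
KNOWN_GOALS = {
    "make_tea": {
        "actions": {"fill_kettle", "get_mug", "get_teabag"},
        "hint": "The teabags are in the top drawer.",
    },
    "make_coffee": {
        "actions": {"fill_kettle", "get_mug", "get_filter"},
        "hint": "The coffee filters are next to the grinder.",
    },
}


@dataclass
class Assistance:
    goal: str        # goal the robot inferred
    hint: str        # assistance chosen for that goal
    evidence: list   # observed actions that supported the inference


def infer_goal(observed):
    """Stand-in for goal inference: pick the goal with the highest
    Jaccard overlap between its known actions and the observed ones."""
    obs = set(observed)

    def score(goal):
        actions = KNOWN_GOALS[goal]["actions"]
        return len(obs & actions) / len(obs | actions)

    return max(KNOWN_GOALS, key=score)


def assist(observed):
    """Stand-in for hint selection: look up the hint for the inferred goal
    and keep the supporting actions so the choice can be explained later."""
    goal = infer_goal(observed)
    entry = KNOWN_GOALS[goal]
    evidence = sorted(set(observed) & entry["actions"])
    return Assistance(goal=goal, hint=entry["hint"], evidence=evidence)


def explain(assistance):
    """Stand-in for explanation synthesis: state the inferred goal,
    the evidence for it, and the assistance that followed from it."""
    goal_text = assistance.goal.replace("_", " ")
    return (
        f"I inferred that you want to {goal_text} because you did: "
        f"{', '.join(assistance.evidence)}. "
        f"That is why I suggested: {assistance.hint}"
    )


if __name__ == "__main__":
    result = assist(["fill_kettle", "get_teabag"])
    print(result.hint)
    print(explain(result))
```

Because the inferred goal and its supporting evidence are stored explicitly, the explanation is read directly off the same structures that drove the decision, which is the kind of transparency a knowledge-driven design aims for.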
