Miguel Arduengo

Task-Adaptive Robot Learning from Demonstration under Replication with Gaussian Process Models

Oct 15, 2020

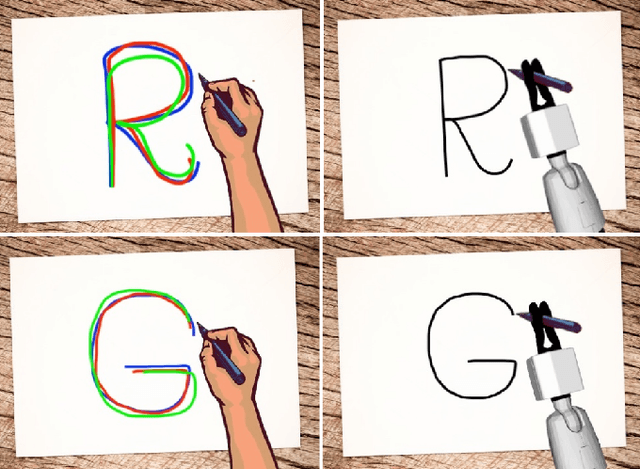

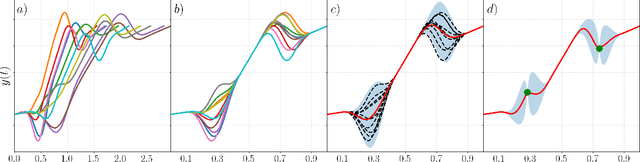

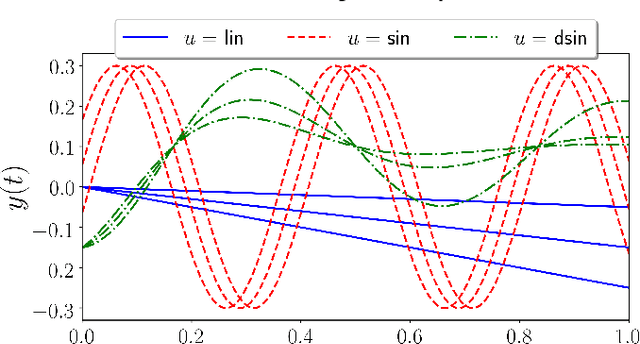

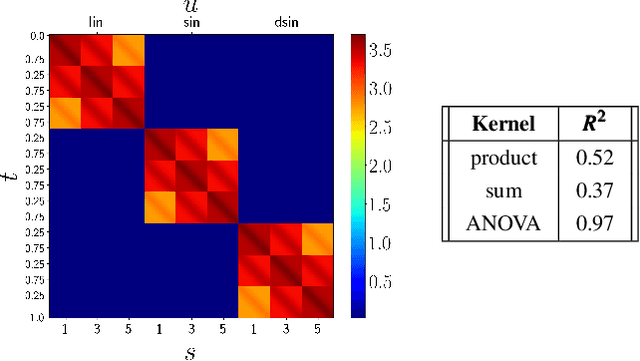

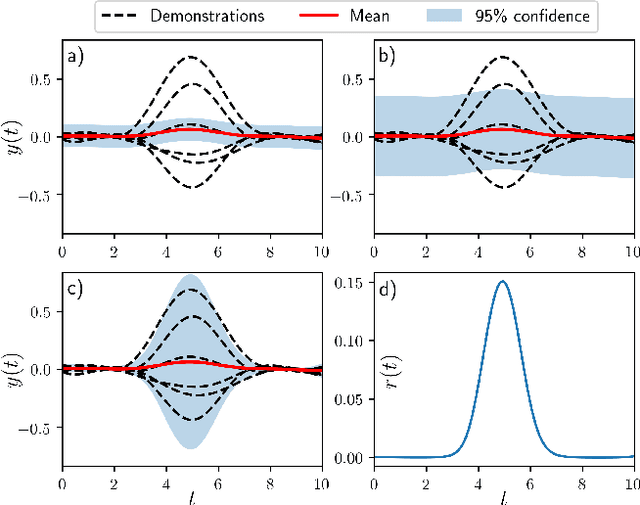

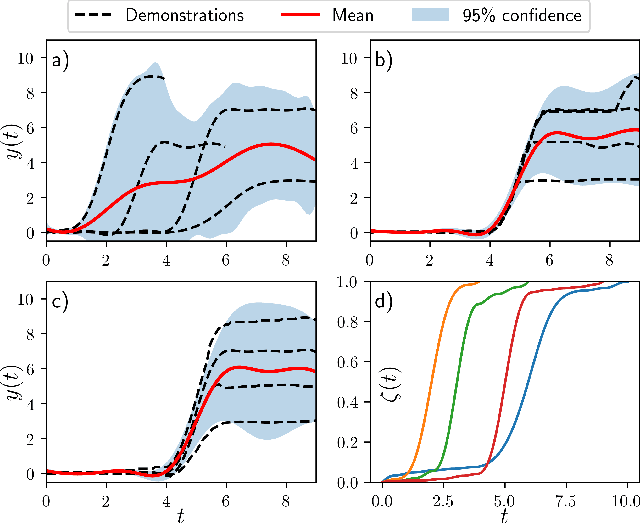

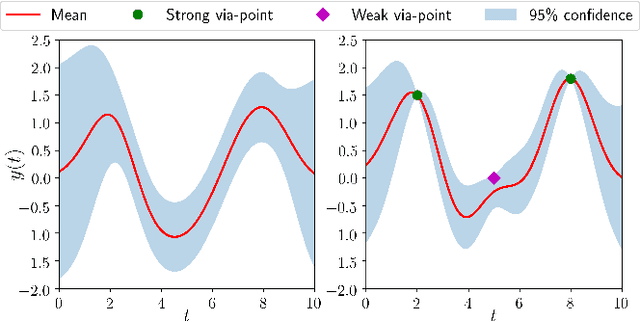

Abstract: Learning from Demonstration (LfD) is a paradigm that allows robots to learn complex manipulation tasks that cannot be easily scripted but can be demonstrated by a human teacher. One of the challenges of LfD is to enable robots to acquire skills that can be adapted to different scenarios. In this paper, we propose to achieve this by exploiting the variations in the demonstrations to retrieve an adaptive and robust policy, using Gaussian Process (GP) models. Adaptability is enhanced by incorporating task parameters into the model, which encode different specifications within the same task. With our formulation, these parameters can be real, integer, or categorical. Furthermore, we propose a GP design that exploits the structure of replications, i.e., repeated demonstrations under identical conditions within the data. Our method significantly reduces the computational cost of model fitting in complex tasks, where replications are essential to obtain a robust model. We illustrate our approach through several experiments on a handwritten letter demonstration dataset.
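Why replications can cut the cost of GP fitting can be illustrated with a standard identity for homoscedastic noise: averaging the replicated outputs at each unique input and dividing the noise variance by the replicate count gives exactly the same posterior mean and variance as the full dataset, while the Cholesky factorization shrinks from N x N to n x n (n unique inputs). The sketch below assumes a squared-exponential kernel with fixed hyperparameters and a scalar input; the helper names (`rbf`, `gp_posterior`, `collapse_replicates`) are hypothetical, and this is not necessarily the exact GP design proposed in the paper.

```python
import numpy as np

def rbf(a, b, ell=0.3, sf2=1.0):
    # Squared-exponential kernel between two 1-D input arrays
    d = a[:, None] - b[None, :]
    return sf2 * np.exp(-0.5 * (d / ell) ** 2)

def gp_posterior(x, y, noise_var, xs):
    # Exact GP posterior mean and latent variance at xs;
    # noise_var may be a scalar or one variance per training point
    K = rbf(x, x) + np.diag(np.broadcast_to(noise_var, x.shape))
    L = np.linalg.cholesky(K)
    Ks = rbf(xs, x)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    v = np.linalg.solve(L, Ks.T)
    var = np.diag(rbf(xs, xs)) - np.sum(v ** 2, axis=0)
    return Ks @ alpha, var

def collapse_replicates(x, y):
    # Unique inputs, averaged outputs, and replicate counts
    xu, inv, counts = np.unique(x, return_inverse=True, return_counts=True)
    ybar = np.bincount(inv, weights=y) / counts
    return xu, ybar, counts

rng = np.random.default_rng(0)
x = np.repeat(np.linspace(0.0, 1.0, 8), 5)   # N = 40 inputs: 8 unique, 5 replicates each
y = np.sin(2 * np.pi * x) + 0.1 * rng.standard_normal(x.size)
xs = np.linspace(0.0, 1.0, 50)
noise = 0.01

m_full, v_full = gp_posterior(x, y, noise, xs)           # 40 x 40 Cholesky
xu, ybar, n = collapse_replicates(x, y)
m_red, v_red = gp_posterior(xu, ybar, noise / n, xs)     # 8 x 8 Cholesky
print(np.allclose(m_full, m_red), np.allclose(v_full, v_red))
```

The saving grows with the number of replicates per condition. Note that for hyperparameter learning the full marginal likelihood additionally depends on the within-replicate scatter, which this prediction-only sketch omits.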

Gaussian-Process-based Robot Learning from Demonstration

Feb 23, 2020

Abstract: Endowed with higher levels of autonomy, robots are required to perform increasingly complex manipulation tasks. Learning from demonstration is emerging as a promising paradigm for easily extending robot capabilities so that they adapt to unseen scenarios. We present a novel Gaussian-Process-based approach for learning manipulation skills from observations of a human teacher. This probabilistic representation allows the robot to generalize over multiple demonstrations and to encode how uncertainty varies along the different phases of the task. In this paper, we address how Gaussian Processes can be used to effectively learn a policy from trajectories in task space. We also present a method to efficiently adapt the policy to fulfill new requirements and to modulate the robot behavior as a function of task uncertainty. This approach is illustrated through a real-world application using the TIAGo robot.
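To make "modulating behavior as a function of task uncertainty" concrete, the following sketch fits a GP over time to several synthetic 1-D demonstrations and maps the predictive standard deviation to a stiffness gain: stiff where the demonstrations agree, compliant where they spread out. Everything here is an assumption for illustration: the demonstrations are taken as already time-aligned on a shared grid, the per-time noise is the empirical spread across demonstrations (one simple heteroscedastic choice), and the linear map `stiffness_from_std` with bounds `k_min`/`k_max` is hypothetical, not the method used on TIAGo.

```python
import numpy as np

def rbf(a, b, ell=0.15, sf2=1.0):
    # Squared-exponential kernel on scalar time inputs
    d = a[:, None] - b[None, :]
    return sf2 * np.exp(-0.5 * (d / ell) ** 2)

def fit_demos(t, demos, jitter=1e-6):
    """GP over the demo mean with per-time noise taken from the demo spread.

    demos has shape (D, T) and is assumed time-aligned on grid t.
    Returns the predictive mean and std of a new demonstration at t.
    """
    D = demos.shape[0]
    mu = demos.mean(axis=0)
    s2 = demos.var(axis=0, ddof=1) + jitter         # empirical spread per time step
    Kff = rbf(t, t)
    L = np.linalg.cholesky(Kff + np.diag(s2 / D))   # noise on the mean is s2 / D
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, mu))
    v = np.linalg.solve(L, Kff)
    latent_var = np.maximum(np.diag(Kff) - np.sum(v ** 2, axis=0), 0.0)
    return Kff @ alpha, np.sqrt(latent_var + s2)    # add back demo-to-demo spread

def stiffness_from_std(std, k_min=50.0, k_max=500.0):
    # Hypothetical linear map: low uncertainty -> stiff, high uncertainty -> compliant
    span = std.max() - std.min()
    u = (std - std.min()) / span if span > 0 else np.zeros_like(std)
    return k_max - (k_max - k_min) * u

rng = np.random.default_rng(1)
t = np.linspace(0.0, 1.0, 40)
amp = 0.01 + 0.2 * np.exp(-((t - 0.5) / 0.1) ** 2)  # synthetic demos disagree mid-task
demos = np.sin(np.pi * t) + amp * rng.standard_normal((6, 1))
mean, std = fit_demos(t, demos)
k = stiffness_from_std(std)
print(k[0], k[len(t) // 2], k[-1])
```

With this synthetic data the gain stays near `k_max` at the start and end of the motion and drops toward `k_min` mid-task, where the demonstrations diverge.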

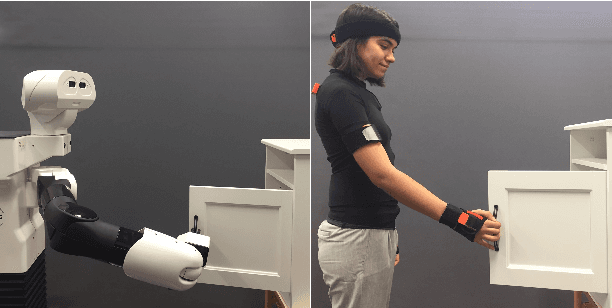

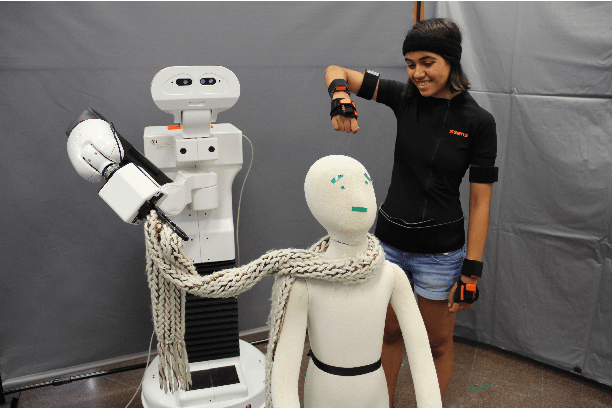

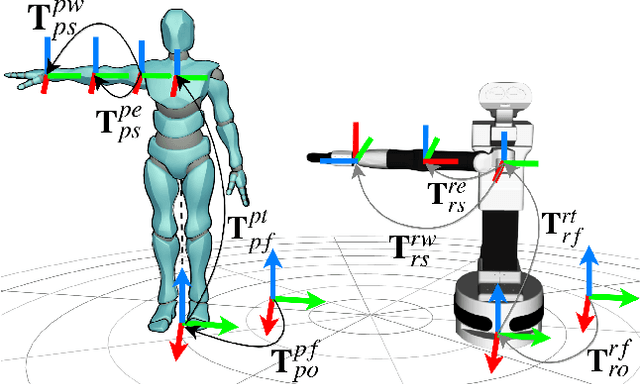

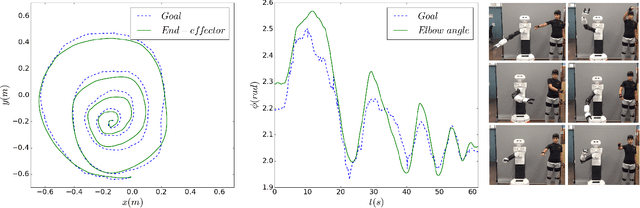

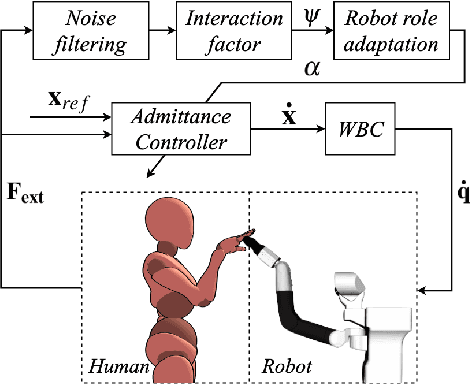

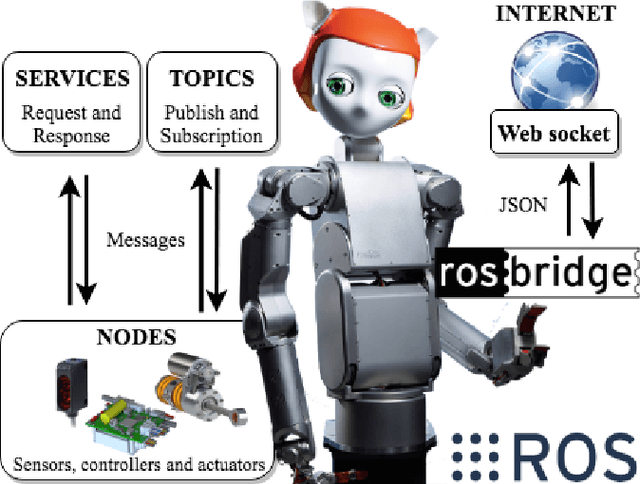

A Robot Teleoperation Framework for Human Motion Transfer

Sep 13, 2019

Abstract: Transferring human motion to a mobile robotic manipulator and ensuring safe physical human-robot interaction are crucial steps towards automating complex manipulation tasks in human-shared environments. In this work, we present a robot whole-body teleoperation framework for human motion transfer. We propose a general solution to the correspondence problem: a mapping that defines an equivalence between the robot posture and the observed human posture. To achieve real-time teleoperation and effective redundancy resolution, we make use of the whole-body paradigm with an adequate task hierarchy, and present a differential drive control algorithm for the wheeled robot base. To ensure safe physical human-robot interaction, we propose a variable admittance controller that stably adapts the dynamics of the end-effector to switch between stiff and compliant behaviors. We validate our approach through several experiments using the TIAGo robot. Results show effective real-time imitation and dynamic behavior adaptation. This could be an easy way for a non-expert to teach a rough manipulation skill to an assistive robot.
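The idea of an admittance controller switching between stiff and compliant behavior can be sketched in one dimension: the controller integrates m·ẍ + d·ẋ + k·(x − x_ref) = F_ext to turn a measured external force into a motion command, and lowering the damping and removing the stiffness lets a person push the end-effector freely. The gains, the force-threshold rule `select_gains`, and the semi-implicit Euler integration are hypothetical choices for illustration. In particular, this sketch switches gains abruptly and does not reproduce the stability guarantee the paper's variable admittance controller provides.

```python
from dataclasses import dataclass

@dataclass
class Admittance:
    m: float  # virtual mass [kg]
    d: float  # virtual damping [N s/m]
    k: float  # virtual stiffness [N/m]

STIFF = Admittance(m=2.0, d=80.0, k=400.0)
COMPLIANT = Admittance(m=2.0, d=20.0, k=0.0)

def select_gains(force, threshold=10.0):
    # Hypothetical rule: a push above the threshold switches to compliant mode
    return COMPLIANT if abs(force) > threshold else STIFF

def step(x, v, force, x_ref, p, dt):
    # m*a + d*v + k*(x - x_ref) = force, integrated with semi-implicit Euler
    a = (force - p.d * v - p.k * (x - x_ref)) / p.m
    v = v + a * dt
    x = x + v * dt
    return x, v

def simulate(forces, dt=0.002, x_ref=0.0):
    # Returns the commanded end-effector position at every control tick
    x, v, xs = x_ref, 0.0, []
    for f in forces:
        x, v = step(x, v, f, x_ref, select_gains(f), dt)
        xs.append(x)
    return xs

n = 1000                          # 2 s at 500 Hz
light = simulate([5.0] * n)       # below threshold: stiff, settles near F/k
heavy = simulate([15.0] * n)      # above threshold: compliant, keeps moving
print(light[-1], heavy[-1])
```

A light push only deflects the end-effector by about F/k = 5/400 m, while a firm push drives it along at roughly F/d, which is the qualitative stiff-versus-compliant behavior the abstract describes.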

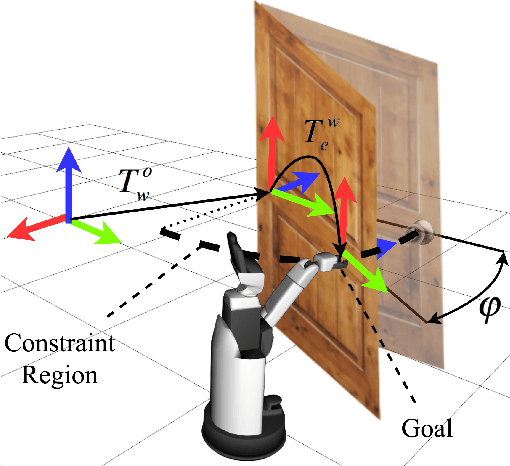

A Versatile Framework for Robust and Adaptive Door Operation with a Mobile Manipulator Robot

Feb 25, 2019

Abstract: The ability to deal with articulated objects is very important for robots assisting humans. In this work, a general framework for the robust operation of different types of doors using an autonomous robotic mobile manipulator is proposed. To push the state of the art in robustness and speed performance, we devise a novel algorithm that fuses a convolutional neural network with efficient point cloud processing. This advancement allows for real-time grasping pose estimation of single or multiple handles from RGB-D images, providing a speedup for assistive human-centered behaviors. In addition, we propose a versatile Bayesian framework that endows the robot with the ability to infer the door kinematic model from observations of its motion while opening it, and to learn from previous experiences or human demonstrations. Combining this probabilistic approach with a state-of-the-art motion planner, we achieve efficient door grasping and subsequent door operation regardless of the kinematic model using the Toyota Human Support Robot.
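Inferring a door's kinematic model from observed motion can be illustrated, under strong simplifying assumptions, as model selection between two hypotheses for the planar handle trajectory: a prismatic joint (straight line, e.g. a sliding door) and a revolute joint (circular arc, e.g. a hinged door). The sketch fits each model by least squares and picks the lower Bayesian information criterion (BIC) as a lightweight stand-in for full Bayesian model comparison. The Gaussian noise model, the algebraic (Kåsa) circle fit, the function names, and the synthetic data are all hypothetical; the paper's framework, which also learns from previous experiences, is not reproduced here.

```python
import numpy as np

def line_residuals(p):
    # Prismatic hypothesis: perpendicular distances to the total-least-squares line
    c = p.mean(axis=0)
    _, _, vt = np.linalg.svd(p - c, full_matrices=False)
    return (p - c) @ vt[1]

def circle_residuals(p):
    # Revolute hypothesis: algebraic (Kasa) circle fit, then geometric residuals
    x, y = p[:, 0], p[:, 1]
    A = np.column_stack([x, y, np.ones_like(x)])
    (D, E, F), *_ = np.linalg.lstsq(A, -(x ** 2 + y ** 2), rcond=None)
    center = np.array([-D / 2.0, -E / 2.0])
    radius = np.sqrt(center @ center - F)
    return np.linalg.norm(p - center, axis=1) - radius, center, radius

def bic(residuals, n_params):
    # Gaussian-noise BIC up to a shared constant: n*ln(RSS/n) + k*ln(n)
    n = residuals.size
    return n * np.log(np.sum(residuals ** 2) / n) + n_params * np.log(n)

def infer_joint(p):
    # Line: 2 parameters (angle, offset); circle: 3 (center x, center y, radius)
    r_line = line_residuals(p)
    r_circ, center, radius = circle_residuals(p)
    scores = {"prismatic": bic(r_line, 2), "revolute": bic(r_circ, 3)}
    return min(scores, key=scores.get), center, radius

rng = np.random.default_rng(2)
theta = np.linspace(0.0, np.pi / 2, 50)          # handle sweeps 90 degrees
hinge, r_true = np.array([1.0, 0.5]), 0.8
arc = hinge + r_true * np.column_stack([np.cos(theta), np.sin(theta)])
arc += 0.003 * rng.standard_normal(arc.shape)    # simulated perception noise
joint, center, radius = infer_joint(arc)
print(joint, center.round(3), round(radius, 3))
```

For a straight, sliding-door trajectory the circle's extra parameter usually buys too little residual reduction to pay the BIC penalty, so the prismatic model is typically selected instead; with noisy data this is a tendency, not a guarantee.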

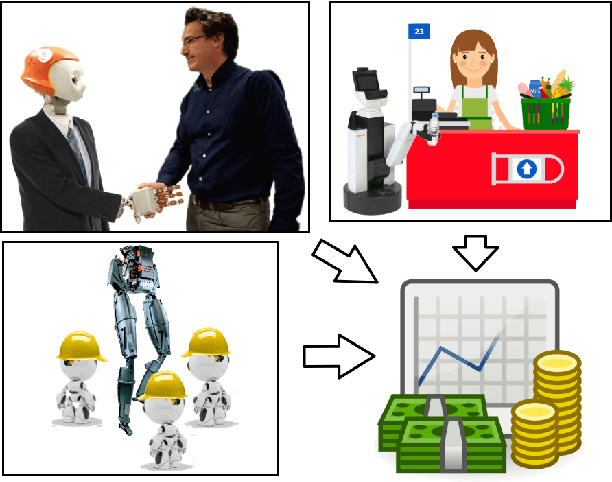

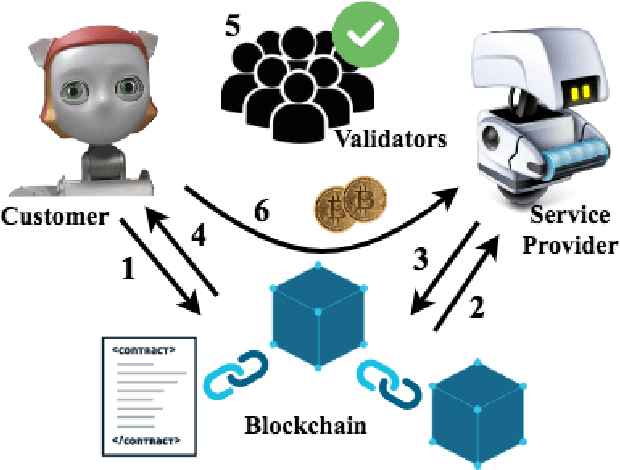

Robot Economy: Ready or Not, Here It Comes

Jan 20, 2019

Abstract: Automation is not a new phenomenon, and questions about its effects have long followed its advances. More than half a century ago, US President Lyndon B. Johnson established a national commission to examine the impact of technology on the economy, declaring that automation "can be the ally of our prosperity if we will just look ahead". In this paper, our premise is that we are at a technological inflection point in which robots are greatly increasing their cognitive and physical capabilities, thus raising questions about labor dynamics. With increasing levels of autonomy and human-robot interaction, intelligent robots could soon acquire new human-like capabilities, such as engaging in social activities. Therefore, an increase in automation and autonomy raises the question of robots directly participating in some economic activities as autonomous agents. Here, a technological framework describing a robot economy is outlined, and the challenges it might pose in the current socio-economic scenario are considered.

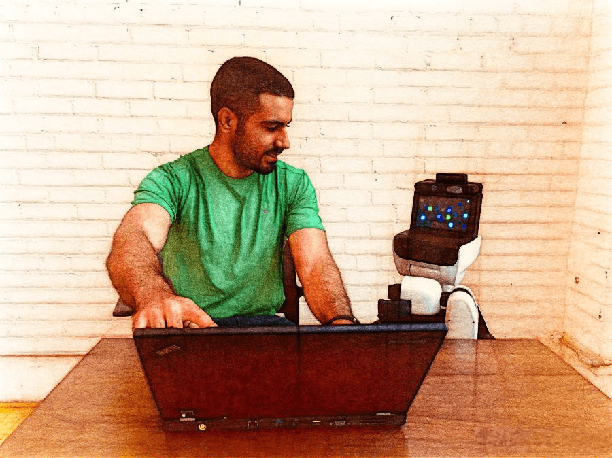

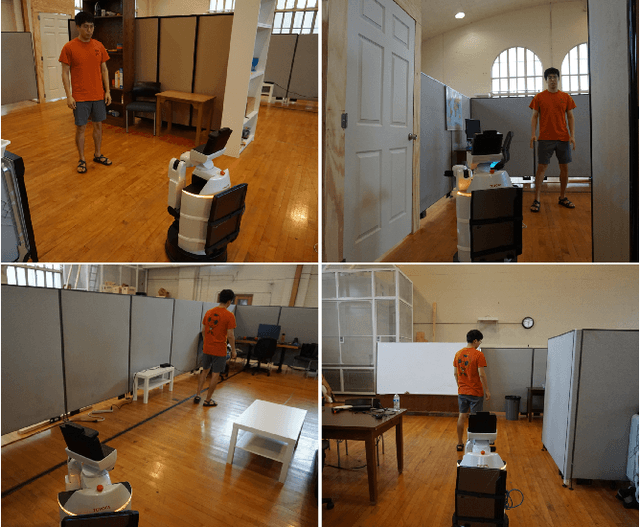

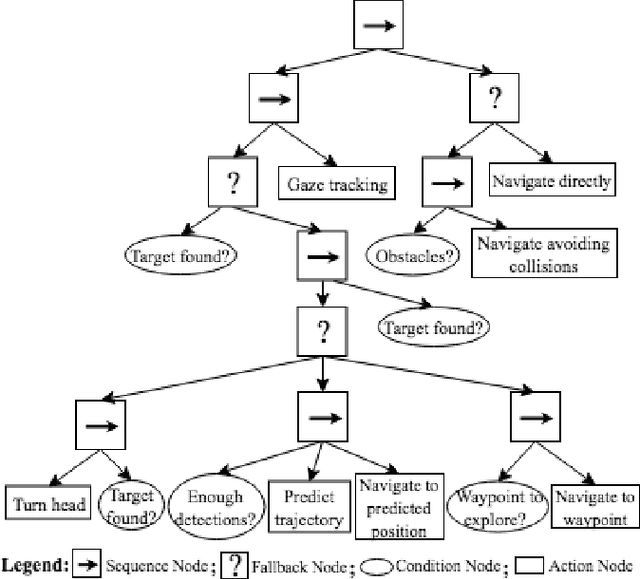

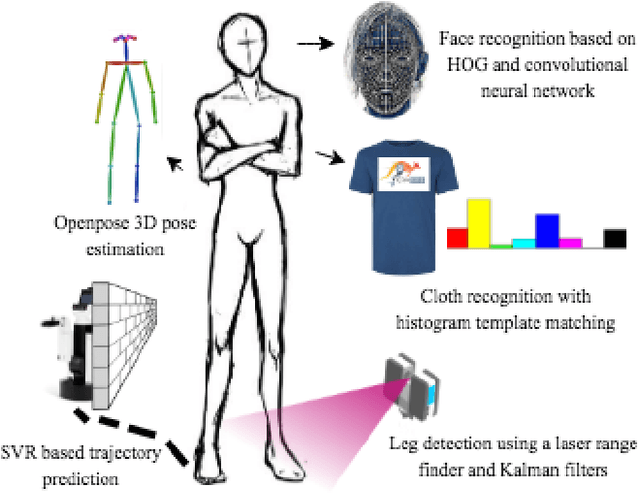

An Architecture for Person-Following using Active Target Search

Sep 24, 2018

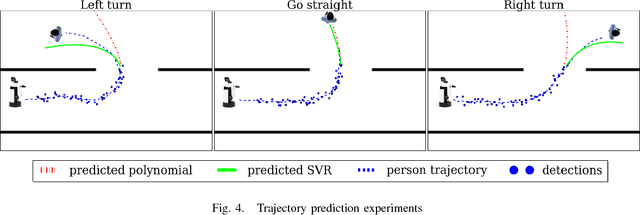

Abstract: This paper presents a novel architecture for person-following robots using active search. The proposed system can be applied in real time to general mobile robots for learning the features of a human, detecting and tracking that person, and finally navigating toward them. To succeed at person-following, perception, planning, and robot behavior need to be properly integrated. Toward this end, an active target search capability, including prediction and navigation toward vantage locations for finding human targets, is proposed. This capability aims to improve the robustness and efficiency of tracking and following people under dynamic conditions such as crowded environments. A multimodal sensor approach, fusing an RGB-D sensor and a laser scanner, is pursued to robustly track and identify human targets. Bayesian filtering for tracking humans and a regression algorithm for predicting people's trajectories are investigated. To make the robot autonomous, the proposed framework relies on a behavior-tree structure. Real-time experiments using the Toyota Human Support Robot (HSR) demonstrate that the proposed architecture can generate fast, efficient person-following behaviors.
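As one illustration of Bayesian filtering for keeping track of a person, here is a minimal constant-velocity Kalman filter over 2-D positions, such as fused detections from the RGB-D sensor and the laser scanner. The motion model, noise levels, and function names are assumptions for the sketch. For the short-horizon prediction it simply rolls the filter's motion model forward; the paper instead investigates a regression algorithm for trajectory prediction, which is not shown here.

```python
import numpy as np

def cv_model(dt, q=0.5, r=0.05):
    # State [x, y, vx, vy]; constant velocity with white-acceleration noise q [m/s^2]
    F = np.eye(4)
    F[0, 2] = F[1, 3] = dt
    G = np.array([[0.5 * dt**2, 0.0], [0.0, 0.5 * dt**2], [dt, 0.0], [0.0, dt]])
    Q = q**2 * G @ G.T
    H = np.hstack([np.eye(2), np.zeros((2, 2))])   # only position is measured
    R = r**2 * np.eye(2)                           # measurement noise std r [m]
    return F, Q, H, R

def kf_step(x, P, z, F, Q, H, R):
    # Predict with the motion model
    x = F @ x
    P = F @ P @ F.T + Q
    # Update with the position measurement z
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)
    x = x + K @ (z - H @ x)
    P = (np.eye(4) - K @ H) @ P
    return x, P

def predict_path(x, F, steps):
    # Extrapolate the motion model to anticipate where the person is heading
    out = []
    for _ in range(steps):
        x = F @ x
        out.append(x[:2].copy())
    return np.array(out)

dt = 0.1
F, Q, H, R = cv_model(dt)
rng = np.random.default_rng(3)
true_v = np.array([1.0, 0.5])                      # simulated walking velocity [m/s]
x, P = np.zeros(4), np.eye(4)
for k in range(1, 51):
    z = true_v * k * dt + 0.05 * rng.standard_normal(2)
    x, P = kf_step(x, P, z, F, Q, H, R)
future = predict_path(x, F, steps=10)              # next 1 s
print(x.round(2), future[-1].round(2))
```

The predicted path gives the robot a place to look or move toward when the person is briefly occluded, which is the kind of input an active target search can exploit.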
