A Versatile Framework for Robust and Adaptive Door Operation with a Mobile Manipulator Robot

Paper and Code

Feb 25, 2019

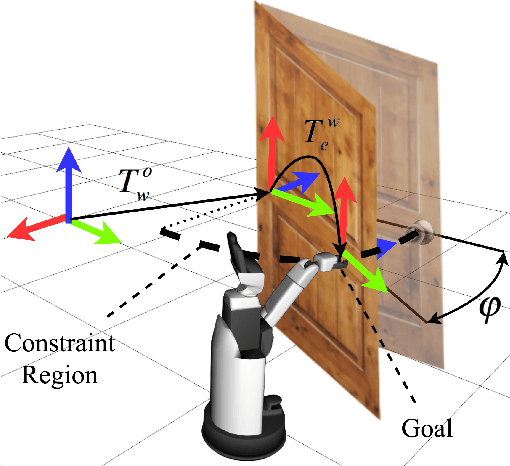

The ability to handle articulated objects is essential for robots that assist humans. In this work, we propose a general framework for the robust operation of different types of doors using an autonomous mobile manipulator. To push the state of the art in robustness and speed, we devise a novel algorithm that fuses a convolutional neural network with efficient point cloud processing. This allows real-time grasping pose estimation of single or multiple handles from RGB-D images, which speeds up assistive human-centered behaviors. In addition, we propose a versatile Bayesian framework that lets the robot infer the door's kinematic model from observations of its motion while opening it, and learn from previous experiences or human demonstrations. Combining this probabilistic approach with a state-of-the-art motion planner, we achieve efficient door grasping and subsequent door operation, regardless of the kinematic model, using the Toyota Human Support Robot.
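To make the idea of inferring a door's kinematic model from its observed motion concrete, here is a minimal sketch. It is not the paper's algorithm. It assumes the handle trajectory has been projected onto a 2D plane, that there are only two candidate models (prismatic, i.e. a sliding door, fitted as a line, and revolute, i.e. a hinged door, fitted as a circle), that the observation noise is Gaussian with a known `sigma`, and that the model evidence can be approximated with a BIC-style penalty. All function names and parameters are hypothetical. The optional `prior` argument stands in for knowledge carried over from earlier experiences or demonstrations.

```python
import math


def _centered(points):
    """Return the coordinates of the points relative to their centroid."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    return [x - mx for x, _ in points], [y - my for _, y in points]


def line_sse(points):
    """Sum of squared orthogonal distances to the best-fit line (prismatic)."""
    u, v = _centered(points)
    suu = sum(a * a for a in u)
    svv = sum(b * b for b in v)
    suv = sum(a * b for a, b in zip(u, v))
    # Smallest eigenvalue of the 2x2 scatter matrix; clamp rounding noise.
    lam_min = 0.5 * ((suu + svv) - math.hypot(suu - svv, 2.0 * suv))
    return max(lam_min, 0.0)


def circle_sse(points):
    """Sum of squared radial distances to an algebraic circle fit (revolute)."""
    u, v = _centered(points)
    n = len(points)
    suu = sum(a * a for a in u)
    svv = sum(b * b for b in v)
    suv = sum(a * b for a, b in zip(u, v))
    det = suu * svv - suv * suv
    if abs(det) <= 1e-12 * (suu + svv) ** 2:
        return math.inf  # collinear points: no finite circle can be fitted
    bu = 0.5 * sum(a * (a * a + b * b) for a, b in zip(u, v))
    bv = 0.5 * sum(b * (a * a + b * b) for a, b in zip(u, v))
    uc = (bu * svv - bv * suv) / det  # circle center, centered coordinates
    vc = (bv * suu - bu * suv) / det
    r = math.sqrt(uc * uc + vc * vc + (suu + svv) / n)
    return sum((math.hypot(a - uc, b - vc) - r) ** 2 for a, b in zip(u, v))


def log_evidence(sse, n, n_params, sigma):
    """Gaussian log-likelihood with a BIC-style complexity penalty."""
    log_lik = -sse / (2.0 * sigma**2) - n * math.log(sigma * math.sqrt(2.0 * math.pi))
    return log_lik - 0.5 * n_params * math.log(n)


def model_posterior(points, sigma=0.01, prior=None):
    """Posterior probability of each candidate kinematic model."""
    prior = prior or {"prismatic": 0.5, "revolute": 0.5}
    n = len(points)
    scores = {
        "prismatic": log_evidence(line_sse(points), n, 2, sigma),
        "revolute": log_evidence(circle_sse(points), n, 3, sigma),
    }
    log_post = {k: scores[k] + math.log(prior[k]) for k in scores}
    top = max(log_post.values())  # subtract the maximum for numerical stability
    unnorm = {k: math.exp(s - top) for k, s in log_post.items()}
    total = sum(unnorm.values())
    return {k: w / total for k, w in unnorm.items()}
```

Calling `model_posterior(trajectory)` on a list of `(x, y)` handle positions returns a dictionary of probabilities that sum to one. A robot could re-evaluate it as new observations arrive during opening, and pass a non-uniform `prior` when earlier doors of the same kind have already been seen.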
