Carlos Gonzalez

HARMONIC: A Content-Centric Cognitive Robotic Architecture

Sep 16, 2025

Abstract:This paper introduces HARMONIC, a cognitive-robotic architecture designed for robots in human-robotic teams. HARMONIC supports semantic perception interpretation, human-like decision-making, and intentional language communication. It addresses the issues of safety and quality of results; aims to solve problems of data scarcity, explainability, and safety; and promotes transparency and trust. Two proof-of-concept HARMONIC-based robotic systems are demonstrated, each implemented in both a high-fidelity simulation environment and on physical robotic platforms.

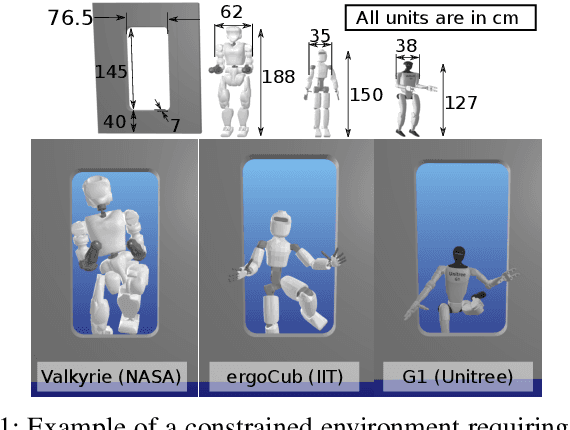

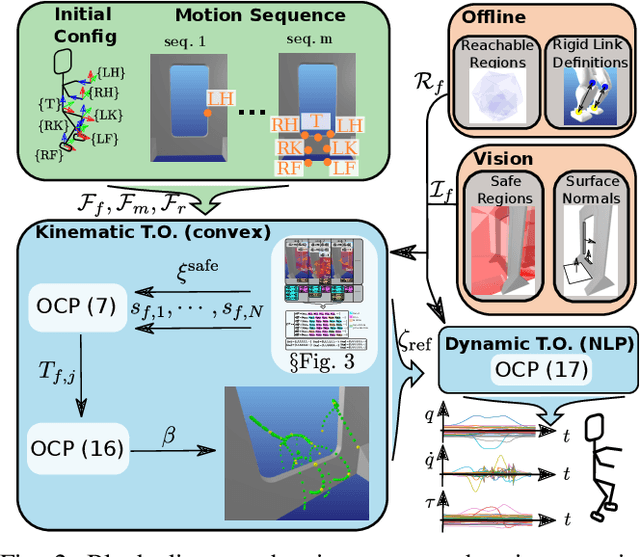

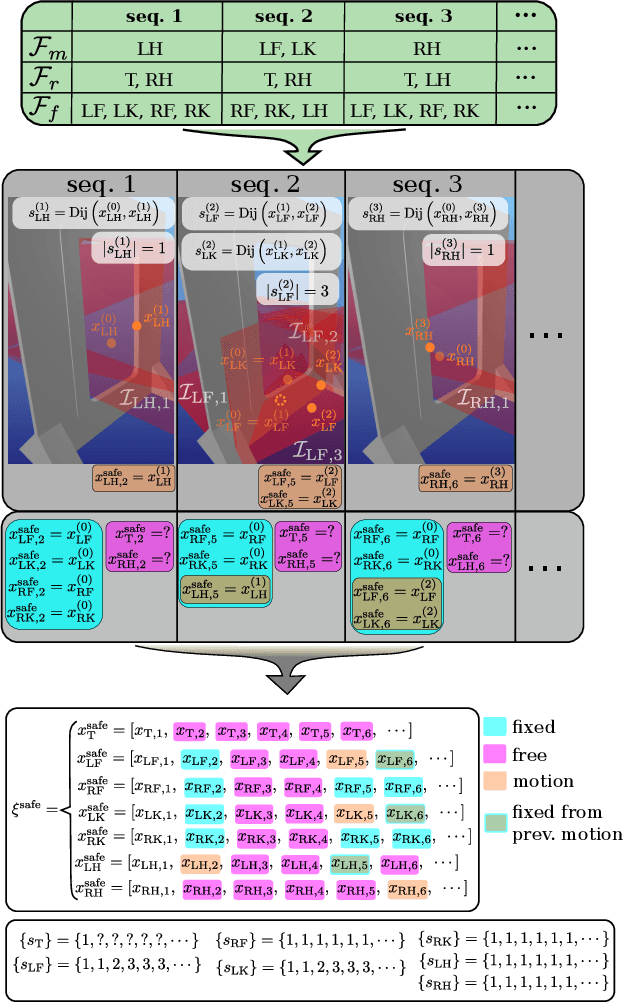

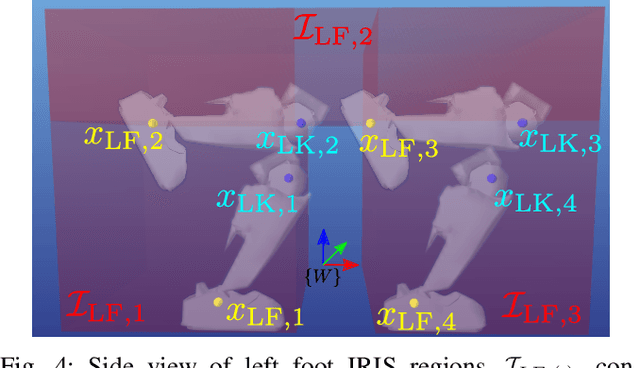

Guiding Collision-Free Humanoid Multi-Contact Locomotion using Convex Kinematic Relaxations and Dynamic Optimization

Oct 10, 2024

Abstract:Humanoid robots rely on multi-contact planners to navigate a diverse set of environments, including those that are unstructured and highly constrained. To synthesize stable multi-contact plans within a reasonable time frame, most planners assume statically stable motions or rely on reduced order models. However, these approaches can also render the problem infeasible in the presence of large obstacles or when operating near kinematic and dynamic limits. To that end, we propose a new multi-contact framework that leverages recent advancements in relaxing collision-free path planning into a convex optimization problem, extending it to be applicable to humanoid multi-contact navigation. Our approach generates near-feasible trajectories used as guides in a dynamic trajectory optimizer, altogether addressing the aforementioned limitations. We evaluate our computational approach showcasing three different-sized humanoid robots traversing a high-raised naval knee-knocker door using our proposed framework in simulation. Our approach can generate motion plans within a few seconds consisting of several multi-contact states, including dynamic feasibility in joint space.

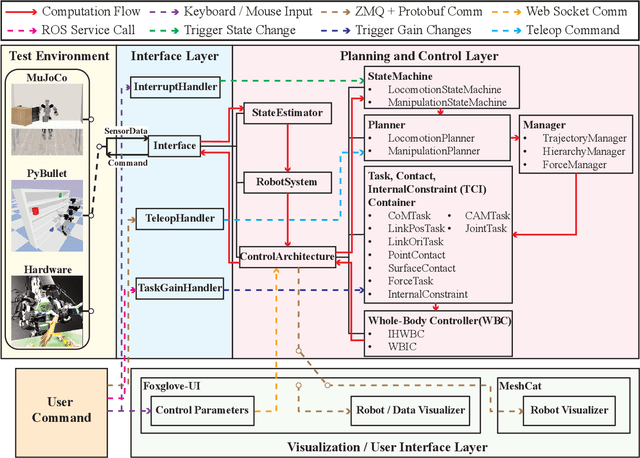

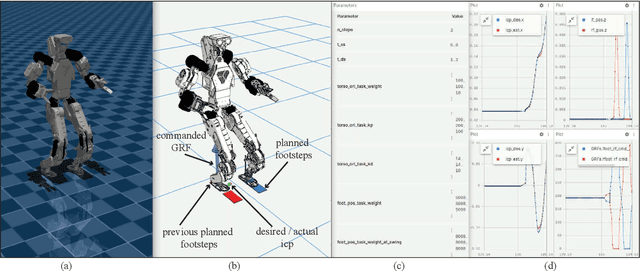

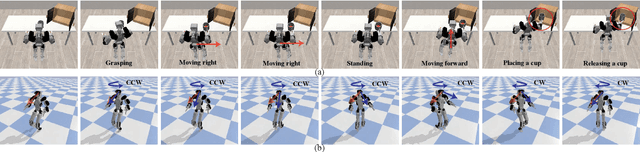

RPC: A Modular Framework for Robot Planning, Control, and Deployment

Sep 16, 2024

Abstract:This paper presents an open-source, lightweight, yet comprehensive software framework, named RPC, which integrates physics-based simulators, planning and control libraries, debugging tools, and a user-friendly operator interface. RPC enables users to thoroughly evaluate and develop control algorithms for robotic systems. While existing software frameworks provide some of these capabilities, integrating them into a cohesive system can be challenging and cumbersome. To overcome this challenge, we have modularized each component in RPC to ensure easy and seamless integration or replacement with new modules. Additionally, our framework currently supports a variety of model-based planning and control algorithms for robotic manipulators and legged robots, alongside essential debugging tools, making it easier for users to design and execute complex robotics tasks. The code and usage instructions of RPC are available at https://github.com/shbang91/rpc.

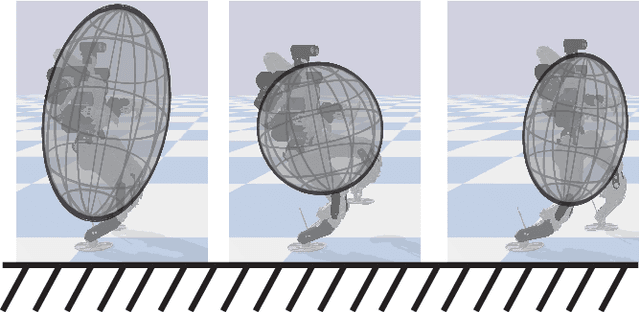

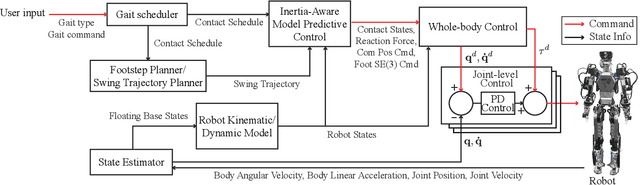

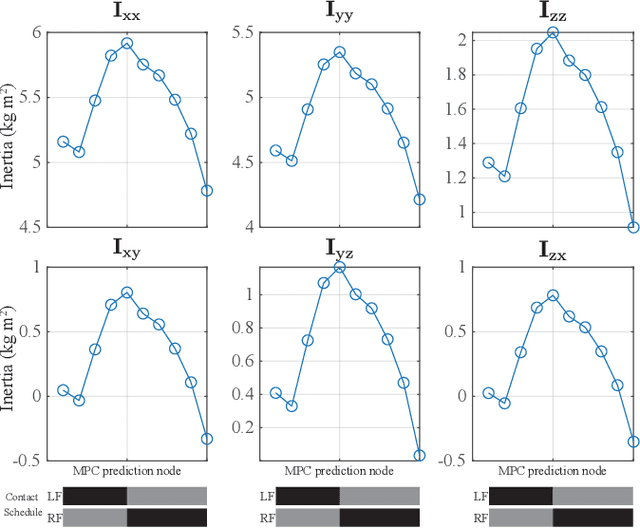

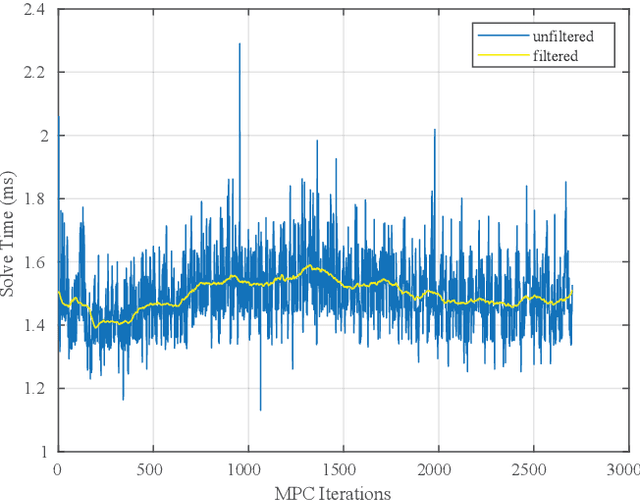

Variable Inertia Model Predictive Control for Fast Bipedal Maneuvers

Jul 23, 2024

Abstract:This paper proposes a novel control framework for agile and robust bipedal locomotion, addressing model discrepancies between full-body and reduced-order models. Specifically, assumptions such as constant centroidal inertia have introduced significant challenges and limitations in locomotion tasks. To enhance the agility and versatility of full-body humanoid robots, we formalize a Model Predictive Control (MPC) problem that accounts for the variable centroidal inertia of humanoid robots within a convex optimization framework, ensuring computational efficiency for real-time operations. In this formulation, we incorporate a centroidal inertia network designed to predict the variable centroidal inertia over the MPC horizon, taking into account the swing foot trajectories, an aspect often overlooked in ROM-based MPC frameworks. Moreover, we enhance the performance and stability of locomotion behaviors by synergizing the MPC-based approach with whole-body control (WBC). The effectiveness of our proposed framework is validated through simulations using our full-body humanoid robot, DRACO 3, demonstrating dynamic behaviors.

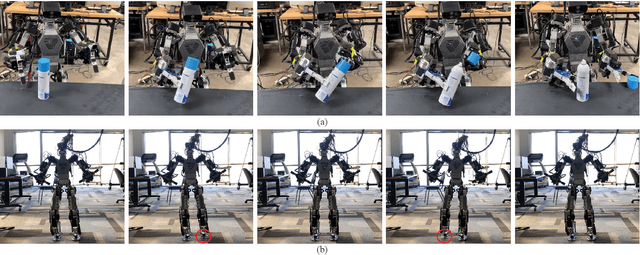

Deep Imitation Learning for Humanoid Loco-manipulation through Human Teleoperation

Sep 05, 2023

Abstract:We tackle the problem of developing humanoid loco-manipulation skills with deep imitation learning. The difficulty of collecting task demonstrations and training policies for humanoids with a high degree of freedom presents substantial challenges. We introduce TRILL, a data-efficient framework for training humanoid loco-manipulation policies from human demonstrations. In this framework, we collect human demonstration data through an intuitive Virtual Reality (VR) interface. We employ the whole-body control formulation to transform task-space commands by human operators into the robot's joint-torque actuation while stabilizing its dynamics. By employing high-level action abstractions tailored for humanoid loco-manipulation, our method can efficiently learn complex sensorimotor skills. We demonstrate the effectiveness of TRILL in simulation and on a real-world robot for performing various loco-manipulation tasks. Videos and additional materials can be found on the project page: https://ut-austin-rpl.github.io/TRILL.

Control and Evaluation of a Humanoid Robot with Rolling Contact Knees

Oct 03, 2022

Abstract:In this paper, we introduce the humanoid robot DRACO 3 by providing a high-level description of its design and control. This robot features proximal actuation and mechanical artifacts to provide a high range of hip, knee and ankle motion. Its versatile design brings interesting problems as it requires a more elaborate control system to perform its motions. For this reason, we introduce a whole body controller (WBC) with support for rolling contact joints and show how it can be easily integrated into our previously presented open-source Planning and Control (PnC) framework. We then validate our controller experimentally on DRACO 3 by showing preliminary results carrying out two postural tasks. Lastly, we analyze the impact of the proximal actuation design and show where it stands in comparison to other adult-size humanoids.

Dictionary Learning with Accumulator Neurons

May 30, 2022

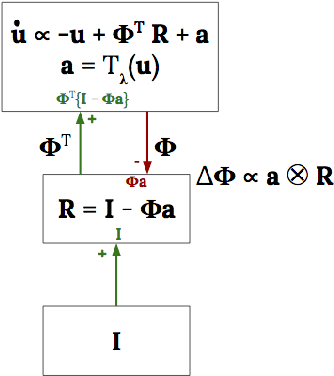

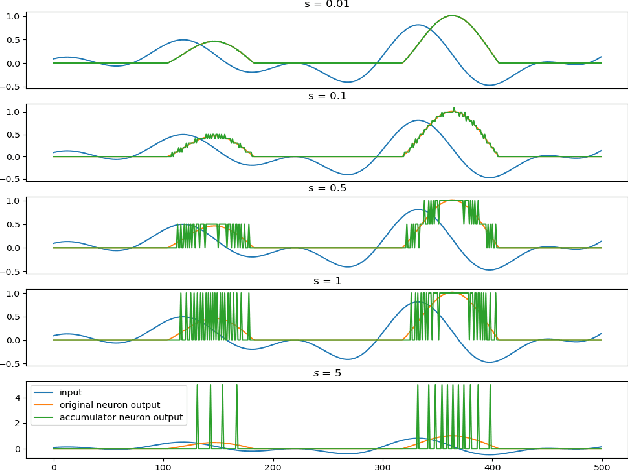

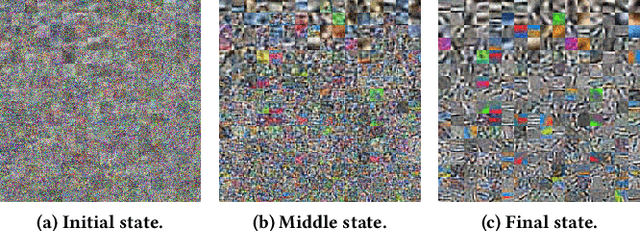

Abstract:The Locally Competitive Algorithm (LCA) uses local competition between non-spiking leaky integrator neurons to infer sparse representations, allowing for potentially real-time execution on massively parallel neuromorphic architectures such as Intel's Loihi processor. Here, we focus on the problem of inferring sparse representations from streaming video using dictionaries of spatiotemporal features optimized in an unsupervised manner for sparse reconstruction. Non-spiking LCA has previously been used to achieve unsupervised learning of spatiotemporal dictionaries composed of convolutional kernels from raw, unlabeled video. We demonstrate how unsupervised dictionary learning with spiking LCA (S-LCA) can be efficiently implemented using accumulator neurons, which combine a conventional leaky-integrate-and-fire (LIF) spike generator with an additional state variable that is used to minimize the difference between the integrated input and the spiking output. We demonstrate dictionary learning across a wide range of dynamical regimes, from graded to intermittent spiking, for inferring sparse representations of both static images drawn from the CIFAR database and video frames captured from a DVS camera. On a classification task that requires identification of the suit from a deck of cards being rapidly flipped through as viewed by a DVS camera, we find essentially no degradation in performance as the LCA model used to infer sparse spatiotemporal representations migrates from graded to spiking. We conclude that accumulator neurons are likely to provide a powerful enabling component of future neuromorphic hardware for implementing online unsupervised learning of spatiotemporal dictionaries optimized for sparse reconstruction of streaming video from event-based DVS cameras.
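The idea of keeping the spiking output close to the integrated input can be illustrated with a much simpler stand-in than the paper's accumulator neuron. The sketch below is an assumption, not the authors' model: it is a single-state integrate-and-fire unit with subtractive reset, where the hypothetical `residual` variable holds the integrated input not yet accounted for by emitted spikes. The names `accumulator_neuron`, `threshold`, and `leak` are illustrative only.

```python
def accumulator_neuron(inputs, threshold=1.0, leak=0.0):
    """Simplified stand-in for an accumulator-style spiking unit (assumption).

    Returns (spikes, residual), where spikes is a list of 0/1 values and
    residual is the integrated input not yet expressed as spikes.
    """
    residual = 0.0
    spikes = []
    for current in inputs:
        # Leaky integration of the input current.
        residual = (1.0 - leak) * residual + current
        if residual >= threshold:
            spikes.append(1)
            # Subtract the threshold instead of resetting to zero, so the
            # leftover charge carries over to later time steps.
            residual -= threshold
        else:
            spikes.append(0)
    return spikes, residual


if __name__ == "__main__":
    spikes, residual = accumulator_neuron([0.25] * 8)
    print(spikes, residual)
```

With `leak=0.0`, the total input always equals `threshold * sum(spikes) + residual`, which is one simple way to read "minimizing the difference between the integrated input and the spiking output". How the paper couples this state to the LIF spike generator is not specified in the abstract.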

Data-Driven Safety Verification for Legged Robots

Feb 24, 2022

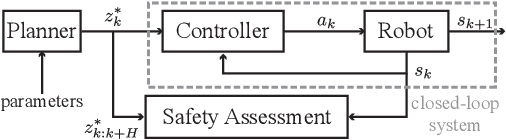

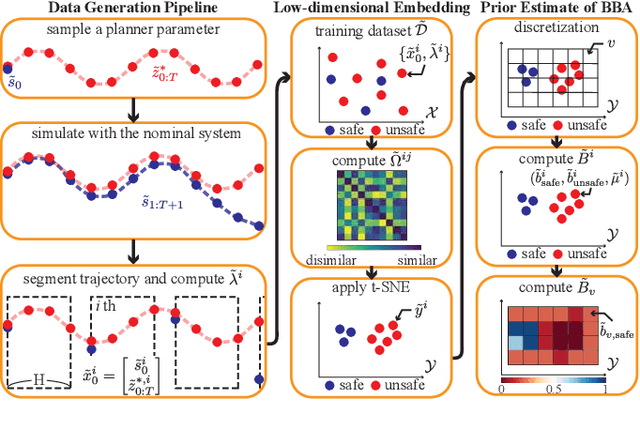

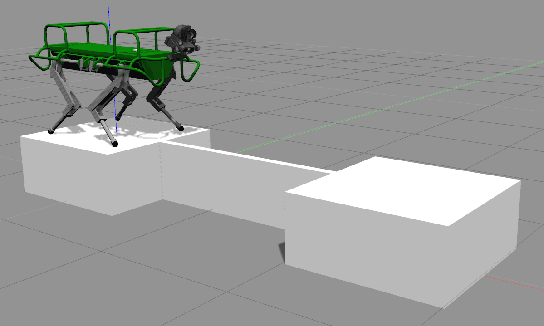

Abstract:Planning safe motions for legged robots requires sophisticated safety verification tools. However, designing such tools for such complex systems is challenging due to the nonlinear and high-dimensional nature of these systems' dynamics. In this letter, we present a probabilistic verification framework for legged systems, which evaluates the safety of planned trajectories by learning an assessment function from trajectories collected from a closed-loop system. Our approach does not require an analytic expression of the closed-loop dynamics, thus enabling safety verification of systems with complex models and controllers. Our framework consists of an offline stage that initializes a safety assessment function by simulating a nominal model and an online stage that adapts the function to address the sim-to-real gap. The performance of the proposed approach for safety verification is demonstrated using a quadruped balancing task and a humanoid reaching task. The results demonstrate that our framework accurately predicts the systems' safety both at the planning phase to generate robust trajectories and at execution phase to detect unexpected external disturbances.

Line Walking and Balancing for Legged Robots with Point Feet

Jul 02, 2020

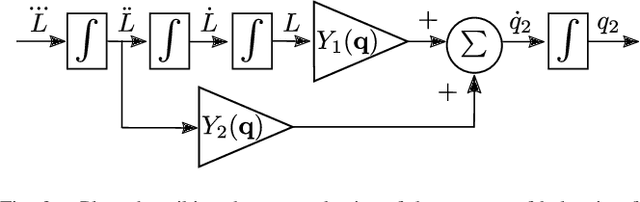

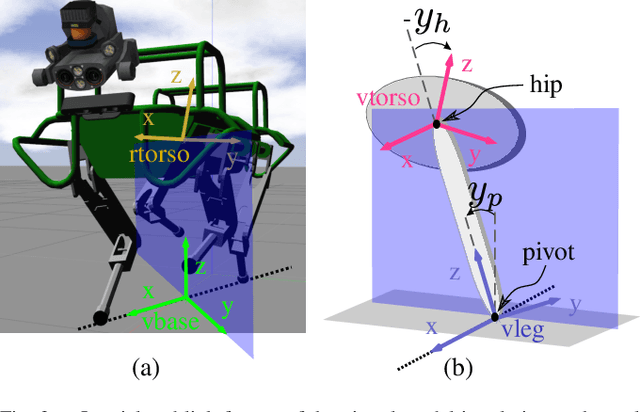

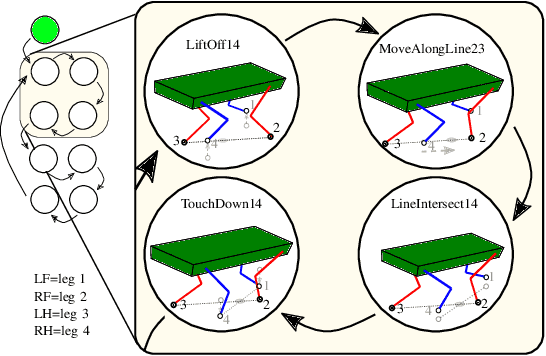

Abstract:The ability of legged systems to traverse highly-constrained environments depends by and large on the performance of their motion and balance controllers. This paper presents a controller that excels in a scenario that most state-of-the-art balance controllers have not yet addressed: line walking, or walking on nearly null support regions. Our approach uses a low-dimensional virtual model (2-DoF) to generate balancing actions through a previously derived four-term balance controller and transforms them to the robot through a derived kinematic mapping. The capabilities of this controller are tested in simulation, where we show the 90 kg quadruped robot HyQ crossing a bridge of only 6 cm width (compared to its 4 cm diameter foot sphere), by balancing on two feet at any time while moving along a line. Additional simulations are carried out to test the performance of the controller and the effect of external disturbances. The same controller is then used on the real robot to present for the first time a legged robot balancing on a contact line of nearly null support area.
