Marco Moletta

Real-Time Operator Takeover for Visuomotor Diffusion Policy Training

Feb 04, 2025

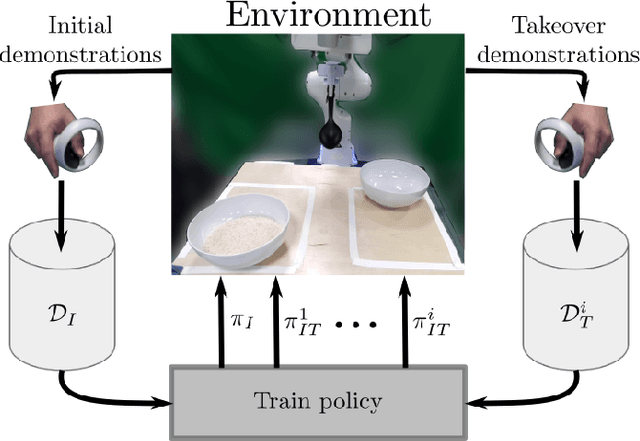

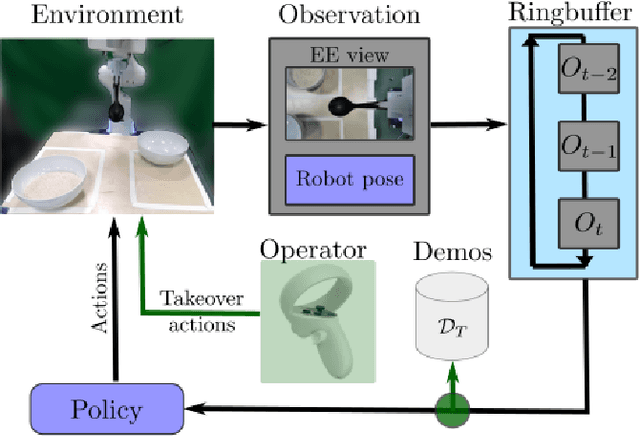

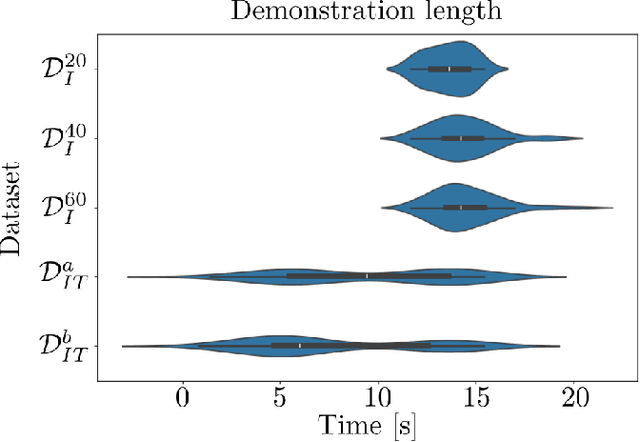

Abstract: We present a Real-Time Operator Takeover (RTOT) paradigm enabling operators to seamlessly take control of a live visuomotor diffusion policy, guiding the system back into desirable states or reinforcing specific demonstrations. We present new insights into using the Mahalanobis distance to automatically identify undesirable states. Once the operator has intervened and redirected the system, control is seamlessly returned to the policy, which resumes generating actions until further intervention is required. We demonstrate that incorporating the targeted takeover demonstrations significantly improves policy performance compared to training solely with an equivalent number of, but longer, initial demonstrations. We provide an in-depth analysis of using the Mahalanobis distance to detect out-of-distribution states, illustrating its utility for identifying critical failure points during execution. Supporting materials, including videos of initial and takeover demonstrations and all rice-scooping experiments, are available on the project website: https://operator-takeover.github.io/
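The out-of-distribution check mentioned in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the feature vectors, the Gaussian fit, the ridge term, and the 99% quantile threshold are all assumptions made for illustration, and the abstract does not say which features or threshold the paper uses.

```python
import numpy as np

def fit_gaussian(features):
    """Estimate the mean and inverse covariance of in-distribution features."""
    mean = features.mean(axis=0)
    cov = np.cov(features, rowvar=False)
    # Small ridge term keeps the covariance invertible (an assumption of this sketch).
    cov = cov + 1e-6 * np.eye(cov.shape[0])
    return mean, np.linalg.inv(cov)

def mahalanobis(x, mean, cov_inv):
    """Mahalanobis distance of a single feature vector x from the fitted Gaussian."""
    diff = x - mean
    return float(np.sqrt(diff @ cov_inv @ diff))

# Synthetic stand-in for features collected from demonstrations (hypothetical data).
rng = np.random.default_rng(0)
train = rng.normal(size=(500, 4))
mean, cov_inv = fit_gaussian(train)

# Flag a state as out-of-distribution when its distance exceeds the
# 99th percentile of the training distances (hypothetical threshold choice).
train_dists = [mahalanobis(f, mean, cov_inv) for f in train]
threshold = float(np.quantile(train_dists, 0.99))

in_dist = np.zeros(4)        # close to the training mean
out_dist = np.full(4, 6.0)   # far from anything seen in training

in_flag = mahalanobis(in_dist, mean, cov_inv) > threshold
out_flag = mahalanobis(out_dist, mean, cov_inv) > threshold
```

In a takeover setting, a flag like `out_flag` could serve as the cue for an operator to intervene; how the distance is actually used in the paper is described on the project website rather than here.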

Unfolding the Literature: A Review of Robotic Cloth Manipulation

Jul 01, 2024

Abstract: The realm of textiles spans clothing, households, healthcare, sports, and industrial applications. The deformable nature of these objects poses unique challenges that prior work on rigid objects cannot fully address. The increasing interest within the community in textile perception and manipulation has led to new methods that aim to address challenges in modeling, perception, and control, resulting in significant progress. However, this progress is often tailored to one specific textile or a subcategory of these textiles. To understand what restricts these methods and hinders current approaches from generalizing to a broader range of real-world textiles, this review provides an overview of the field, focusing specifically on how and to what extent textile variations are addressed in modeling, perception, benchmarking, and manipulation of textiles. We finally conclude by identifying key open problems and outlining grand challenges that will drive future advancements in the field.

Visual Action Planning with Multiple Heterogeneous Agents

Mar 25, 2024

Abstract: Visual planning methods are promising for handling complex settings where extracting the system state is challenging. However, none of the existing works tackles the case of multiple heterogeneous agents, which are characterized by different capabilities and/or embodiments. In this work, we propose a method to realize visual action planning in multi-agent settings by exploiting a roadmap built in a low-dimensional structured latent space and used for planning. To enable multi-agent settings, we infer possible parallel actions from a dataset composed of tuples associated with individual actions. Next, we evaluate their feasibility and cost based on the capabilities of the multi-agent system and endow the roadmap with this information, building a capability latent space roadmap (C-LSR). Additionally, a capability suggestion strategy is designed to inform the human operator about possible missing capabilities when no paths are found. The approach is validated in a simulated burger cooking task and a real-world box packing task.
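The idea of planning over a roadmap whose edges carry capability requirements, and of suggesting missing capabilities when no path exists, can be sketched roughly as follows. This is a toy illustration under stated assumptions, not the C-LSR method itself: the node names, edge costs, capability labels, and both function names (`plan`, `suggest_capabilities`) are hypothetical, and the roadmap here is a hand-written graph rather than one built in a learned latent space.

```python
import heapq

def plan(edges, start, goal, capabilities):
    """Dijkstra search that only uses edges whose required capabilities are available."""
    graph = {}
    for u, v, cost, required in edges:
        if required <= capabilities:  # subset test: every requirement is met
            graph.setdefault(u, []).append((v, cost))
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, step in graph.get(node, []):
            if nxt not in visited:
                heapq.heappush(queue, (cost + step, nxt, path + [nxt]))
    return None  # no feasible path with the given capabilities

def suggest_capabilities(edges, start, goal, capabilities):
    """List single missing capabilities that would make the goal reachable."""
    all_required = set().union(*(required for *_, required in edges))
    missing = all_required - capabilities
    return sorted(c for c in missing
                  if plan(edges, start, goal, capabilities | {c}) is not None)

# Hypothetical roadmap: (from, to, cost, required capabilities).
edges = [
    ("s0", "s1", 1.0, {"grasp"}),
    ("s1", "s2", 1.0, {"flip"}),
]

no_path = plan(edges, "s0", "s2", {"grasp"})
suggestion = suggest_capabilities(edges, "s0", "s2", {"grasp"})
full_path = plan(edges, "s0", "s2", {"grasp", "flip"})
```

With only `grasp` available, the search returns no path and the suggestion step reports `flip` as the single capability that would unlock one. Checking one missing capability at a time is a simplification chosen for brevity.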

A Virtual Reality Framework for Human-Robot Collaboration in Cloth Folding

May 12, 2023

Abstract: We present a virtual reality (VR) framework to automate the data collection process in cloth folding tasks. The framework uses skeleton representations to help the user define the folding plans for different classes of garments, allowing for replicating the folding on unseen items of the same class. We evaluate the framework in the context of automating garment folding tasks. A quantitative analysis is performed on 3 classes of garments, demonstrating that the framework reduces the need for intervention by the user. We also compare skeleton representations with RGB and binary images in a classification task on a large dataset of clothing items, motivating the use of the framework for other classes of garments.

EDO-Net: Learning Elastic Properties of Deformable Objects from Graph Dynamics

Sep 19, 2022

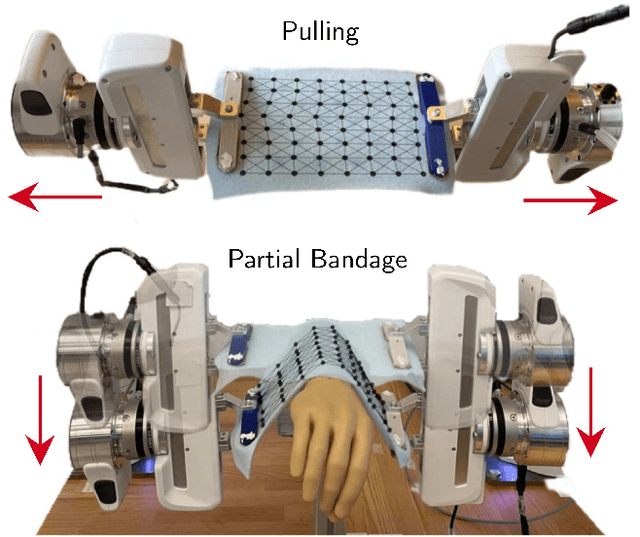

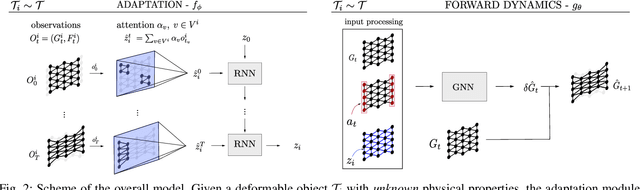

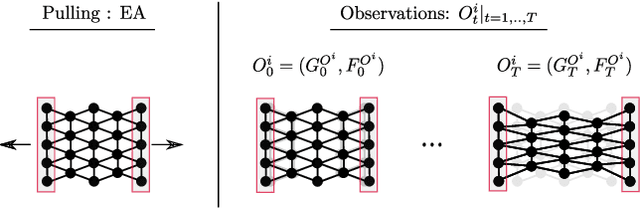

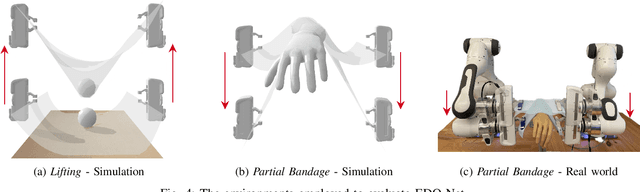

Abstract: We study the problem of learning graph dynamics of deformable objects that generalize to unknown physical properties. In particular, we leverage a latent representation of elastic physical properties of cloth-like deformable objects, which we explore through a pulling interaction. We propose EDO-Net (Elastic Deformable Object - Net), a model trained in a self-supervised fashion on a large variety of samples with different elastic properties. EDO-Net jointly learns an adaptation module, responsible for extracting a latent representation of the physical properties of the object, and a forward-dynamics module, which leverages the latent representation to predict future states of cloth-like objects, represented as graphs. We evaluate EDO-Net both in simulation and in the real world, assessing its capabilities of: 1) generalizing to unknown physical properties of cloth-like deformable objects, 2) transferring the learned representation to new downstream tasks.

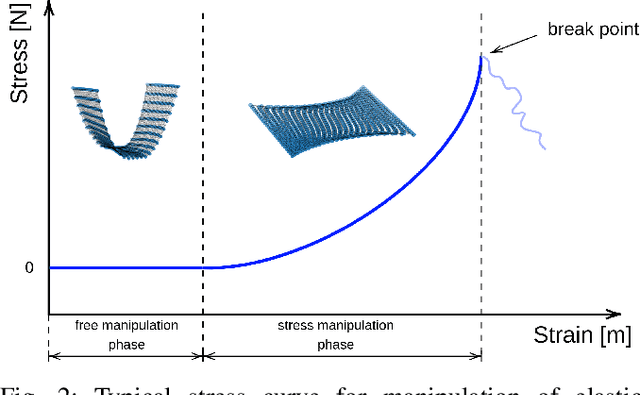

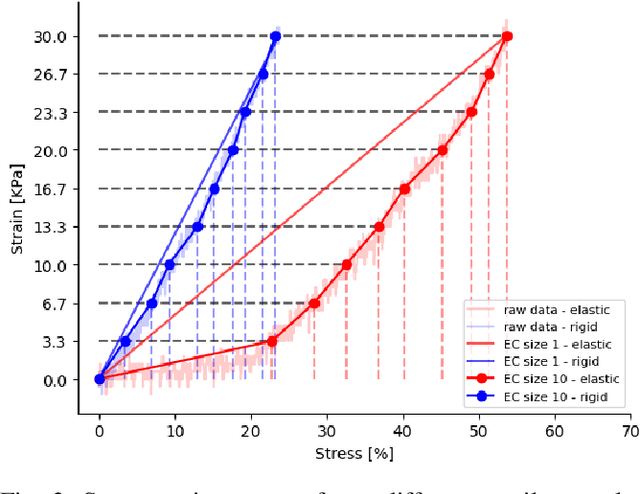

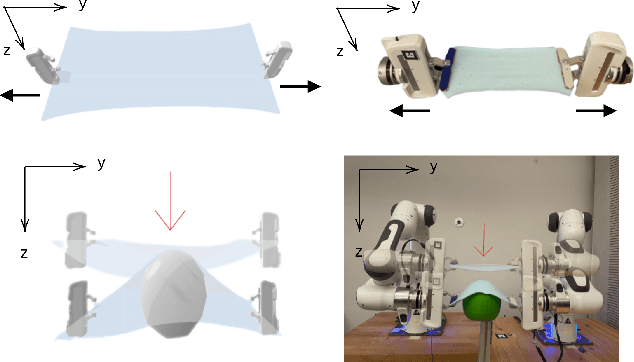

Elastic Context: Encoding Elasticity for Data-driven Models of Textiles

Sep 19, 2022

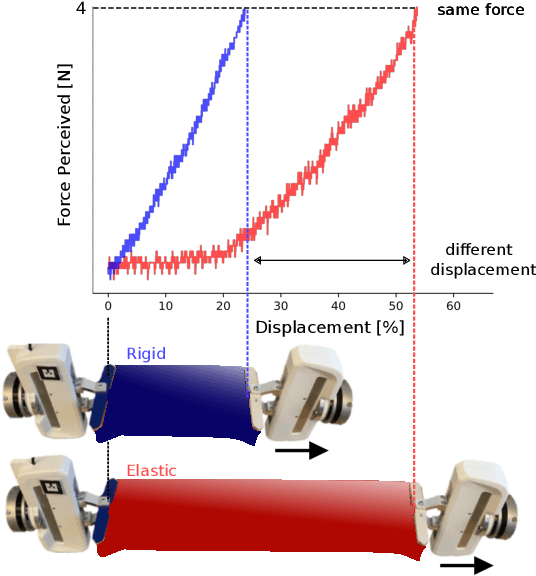

Abstract: Physical interaction with textiles, such as assistive dressing, relies on advanced dexterous capabilities. The underlying complexity in textile behavior when being pulled and stretched is due to both the yarn material properties and the textile construction technique. Today, there are no commonly adopted and annotated datasets on which the various interaction or property identification methods are assessed. One important property that affects the interaction is material elasticity, which results from both the yarn material and the construction technique: these two are intertwined and, if not known a priori, almost impossible to identify through sensing commonly available on robotic platforms. We introduce Elastic Context (EC), a concept that integrates various properties that affect elastic behavior, to enable a more effective physical interaction with textiles. The definition of EC relies on stress/strain curves commonly used in textile engineering, which we reformulated for robotic applications. We employ EC using a Graph Neural Network (GNN) to learn generalized elastic behaviors of textiles. Furthermore, we explore the effect the dimension of the EC has on accurate force modeling of non-linear real-world elastic behaviors, highlighting the challenges of current robotic setups to sense textile properties.
