Guillem Alenyà

HEXAR: a Hierarchical Explainability Architecture for Robots

Jan 06, 2026

Abstract: As robotic systems become increasingly complex, the need for explainable decision-making becomes critical. Existing explainability approaches in robotics typically either focus on individual modules, which can be difficult to query from the perspective of high-level behaviour, or employ monolithic approaches, which do not exploit the modularity of robotic architectures. We present HEXAR (Hierarchical EXplainability Architecture for Robots), a novel framework that provides a plug-in, hierarchical approach to generate explanations about robotic systems. HEXAR consists of specialised component explainers using diverse explanation techniques (e.g., LLM-based reasoning, causal models, feature importance) tailored to specific robot modules, orchestrated by an explainer selector that chooses the most appropriate one for a given query. We implement and evaluate HEXAR on a TIAGo robot performing assistive tasks in a home environment, comparing it against end-to-end and aggregated baseline approaches across 180 scenario-query variations. We observe that HEXAR significantly outperforms baselines in root cause identification, incorrect information exclusion, and runtime, offering a promising direction for transparent autonomous systems.

Multi-User Personalisation in Human-Robot Interaction: Using Quantitative Bipolar Argumentation Frameworks for Preferences Conflict Resolution

Nov 05, 2025

Abstract: While personalisation in Human-Robot Interaction (HRI) has advanced significantly, most existing approaches focus on single-user adaptation, overlooking scenarios involving multiple stakeholders with potentially conflicting preferences. To address this, we propose the Multi-User Preferences Quantitative Bipolar Argumentation Framework (MUP-QBAF), a novel multi-user personalisation framework based on Quantitative Bipolar Argumentation Frameworks (QBAFs) that explicitly models and resolves multi-user preference conflicts. Unlike prior work in Argumentation Frameworks, which typically assumes static inputs, our approach is tailored to robotics: it incorporates both users' arguments and the robot's dynamic observations of the environment, allowing the system to adapt over time and respond to changing contexts. Preferences, both positive and negative, are represented as arguments whose strength is recalculated iteratively based on new information. The framework's properties and capabilities are presented and validated through a realistic case study, where an assistive robot mediates between the conflicting preferences of a caregiver and a care recipient during a frailty assessment task. This evaluation further includes a sensitivity analysis of argument base scores, demonstrating how preference outcomes can be shaped by user input and contextual observations. By offering a transparent, structured, and context-sensitive approach to resolving competing user preferences, this work advances the field of multi-user HRI. It provides a principled alternative to data-driven methods, enabling robots to navigate conflicts in real-world environments.
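To make the idea of arguments whose strength is recalculated from new information more concrete, here is a minimal sketch of strength propagation in a small acyclic QBAF. The abstract does not say which gradual semantics MUP-QBAF uses, so the DF-QuAD-style aggregation below is an assumption, and the argument names (`assist_now`, `caregiver_pref`, `recipient_pref`) and base scores are hypothetical.

```python
from math import prod


def aggregate(strengths):
    """Probabilistic-sum aggregation; yields 0.0 when there are no strengths."""
    return 1.0 - prod(1.0 - s for s in strengths)


def combine(base, attack, support):
    """DF-QuAD-style influence (assumed semantics, not confirmed by the abstract)."""
    if attack >= support:
        return base - base * (attack - support)
    return base + (1.0 - base) * (support - attack)


def final_strengths(base_scores, attackers, supporters):
    """Final strength of every argument in an acyclic QBAF."""
    cache = {}

    def visit(arg):
        if arg not in cache:
            att = aggregate(visit(a) for a in attackers.get(arg, ()))
            sup = aggregate(visit(s) for s in supporters.get(arg, ()))
            cache[arg] = combine(base_scores[arg], att, sup)
        return cache[arg]

    return {arg: visit(arg) for arg in base_scores}


# Hypothetical scenario: one user supports an action, another opposes it.
base = {"assist_now": 0.5, "caregiver_pref": 0.8, "recipient_pref": 0.4}
attackers = {"assist_now": ["recipient_pref"]}
supporters = {"assist_now": ["caregiver_pref"]}

# Support outweighs attack, so the action ends up stronger than its base score.
before = final_strengths(base, attackers, supporters)["assist_now"]

# A new observation raises the opposing argument's base score; recomputing
# now leaves the action weaker than its base score.
base["recipient_pref"] = 0.9
after = final_strengths(base, attackers, supporters)["assist_now"]
```

Recomputing all strengths from scratch after each change keeps the sketch simple; it says nothing about how the paper schedules or bounds its iterative updates.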

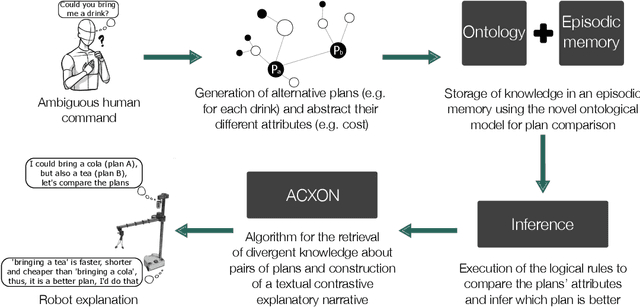

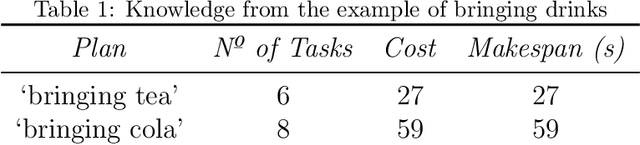

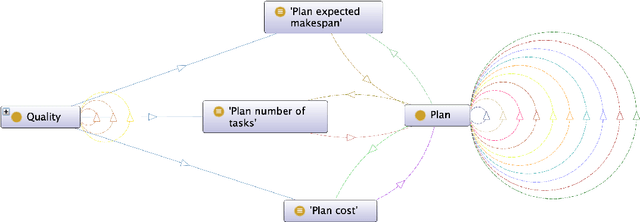

Ontological foundations for contrastive explanatory narration of robot plans

Sep 26, 2025

Abstract: Mutual understanding of artificial agents' decisions is key to ensuring a trustworthy and successful human-robot interaction. Hence, robots are expected to make reasonable decisions and communicate them to humans when needed. In this article, the focus is on an approach to modeling and reasoning about the comparison of two competing plans, so that robots can later explain the divergent result. First, a novel ontological model is proposed to formalize and reason about the differences between competing plans, enabling the classification of the most appropriate one (e.g., the shortest, the safest, or the closest to human preferences). This work also investigates the limitations of a baseline algorithm for ontology-based explanatory narration. To address these limitations, a novel algorithm is presented, leveraging divergent knowledge between plans and facilitating the construction of contrastive narratives. Through empirical evaluation, the resulting explanations are observed to outperform those of the baseline method.

Temporal Counterfactual Explanations of Behaviour Tree Decisions

Sep 09, 2025

Abstract: Explainability is a critical tool in helping stakeholders understand robots. In particular, the ability for robots to explain why they have made a particular decision or behaved in a certain way is useful in this regard. Behaviour trees are a popular framework for controlling the decision-making of robots and other software systems, and thus a natural question to ask is whether or not a system driven by a behaviour tree is capable of answering "why" questions. While explainability for behaviour trees has seen some prior attention, no existing methods are capable of generating causal, counterfactual explanations which detail the reasons for robot decisions and behaviour. Therefore, in this work, we introduce a novel approach which automatically generates counterfactual explanations in response to contrastive "why" questions. Our method achieves this by first automatically building a causal model from the structure of the behaviour tree as well as domain knowledge about the state and individual behaviour tree nodes. The resultant causal model is then queried and searched to find a set of diverse counterfactual explanations. We demonstrate that our approach is able to correctly explain the behaviour of a wide range of behaviour tree structures and states. By being able to answer a wide range of causal queries, our approach represents a step towards more transparent, understandable and ultimately trustworthy robotic systems.

Unfolding the Literature: A Review of Robotic Cloth Manipulation

Jul 01, 2024

Abstract: The realm of textiles spans clothing, households, healthcare, sports, and industrial applications. The deformable nature of these objects poses unique challenges that prior work on rigid objects cannot fully address. The increasing interest within the community in textile perception and manipulation has led to new methods that aim to address challenges in modeling, perception, and control, resulting in significant progress. However, this progress is often tailored to one specific textile or a subcategory of these textiles. To understand what restricts these methods and hinders current approaches from generalizing to a broader range of real-world textiles, this review provides an overview of the field, focusing specifically on how and to what extent textile variations are addressed in modeling, perception, benchmarking, and manipulation of textiles. We finally conclude by identifying key open problems and outlining grand challenges that will drive future advancements in the field.

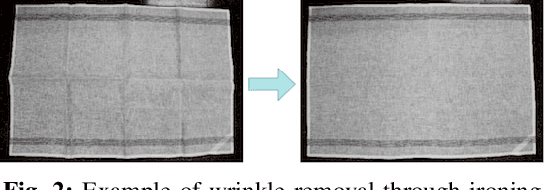

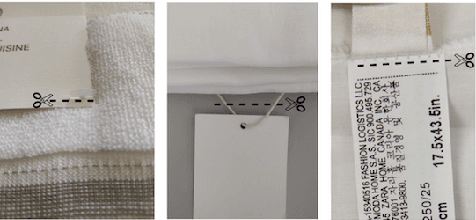

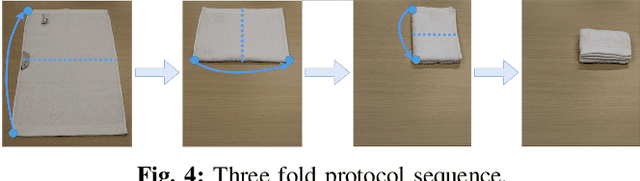

Standardization of Cloth Objects and its Relevance in Robotic Manipulation

Mar 07, 2024

Abstract: The field of robotics faces inherent challenges in manipulating deformable objects, particularly in understanding and standardising fabric properties like elasticity, stiffness, and friction. While the significance of these properties is evident in the realm of cloth manipulation, accurately categorising and comprehending them in real-world applications remains elusive. This study sets out to address two primary objectives: (1) to provide a framework suitable for robotics applications to characterise cloth objects, and (2) to study how these properties influence robotic manipulation tasks. Our preliminary results validate the framework's ability to characterise cloth properties and compare cloth sets, and reveal the influence that different properties have on the outcome of five manipulation primitives. We believe that, in general, results on the manipulation of clothes should be reported along with a better description of the garments used in the evaluation. This paper proposes a set of these measures.

* 2024 ICRA International Conference on Robotics and Automation (ICRA)

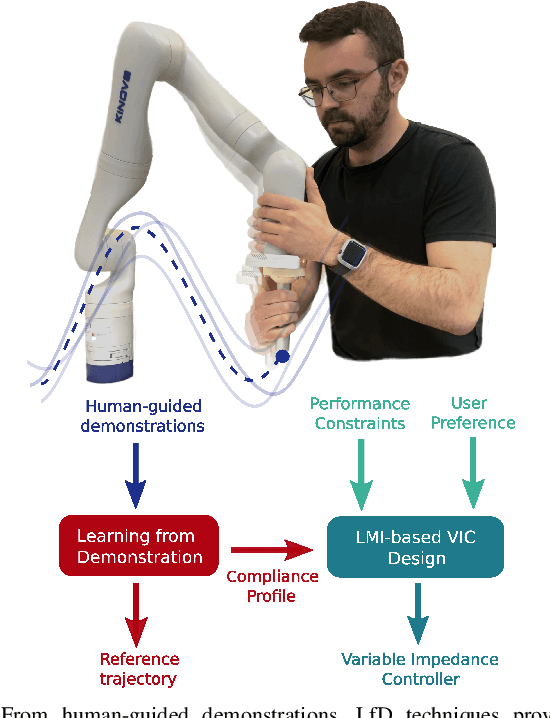

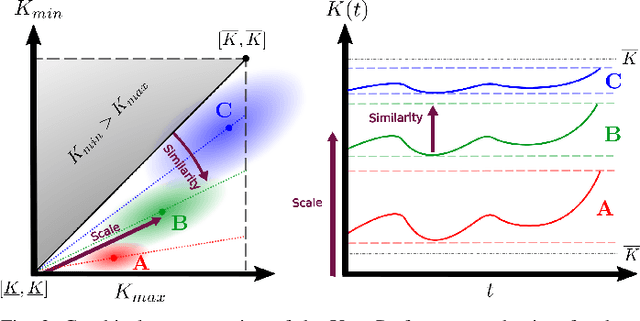

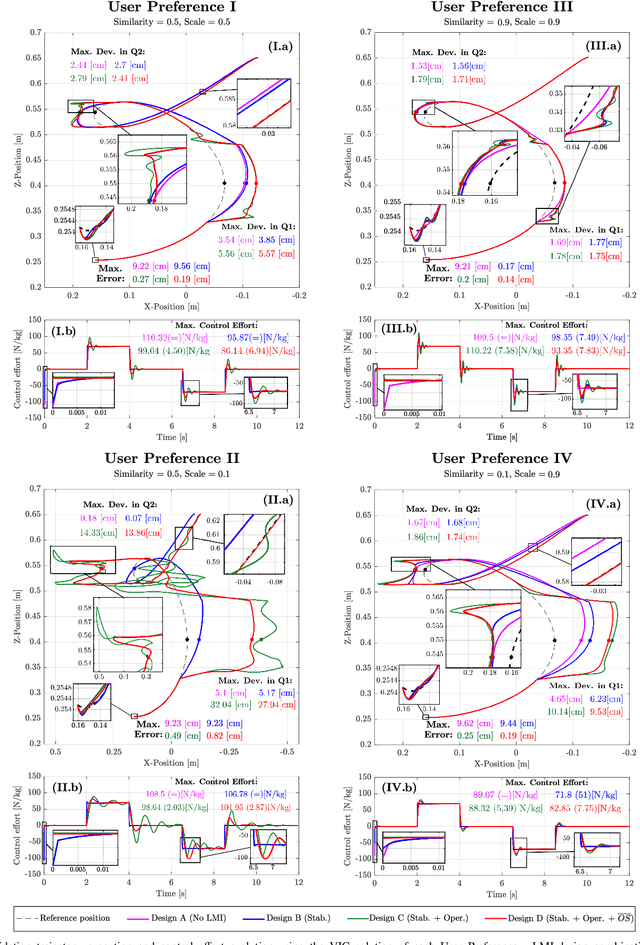

LMI-based Variable Impedance Controller design from User Demonstrations and Preferences

Sep 21, 2022

Abstract: In this paper, we introduce a new off-line method to find suitable parameters of a Variable Impedance Controller (VIC) using the Learning from Demonstrations (LfD) paradigm, fulfilling stability and performance constraints while taking into account the user's intuition about the task. Considering a compliance profile obtained from human demonstrations, a Linear Parameter Varying (LPV) description of the VIC is given, which allows the design problem, including stability and performance constraints, to be stated as Linear Matrix Inequalities (LMIs). Then, using a solution-search method, we find the optimal solutions in terms of performance according to user preferences on the desired behaviour for the task. The design problem is validated by comparing the execution from the obtained controller against solutions from designs with relaxed conditions for different user preference sets in a 2-D trajectory tracking task. A pulley looping task is presented as a case study to evaluate the performance of the variable impedance controller against a constant one and its agility and leanness on two one-off modifications of the setup using the user preference mechanism. All the experiments have been performed using a 7-DoF KINOVA Gen3 manipulator.

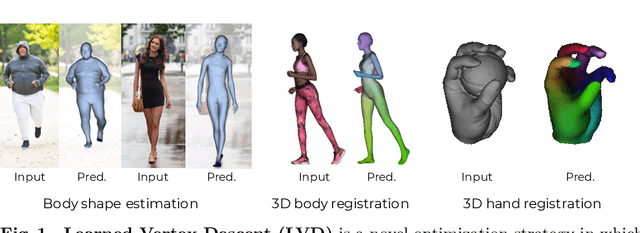

Learned Vertex Descent: A New Direction for 3D Human Model Fitting

May 12, 2022

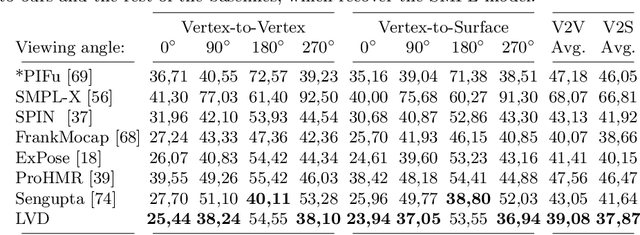

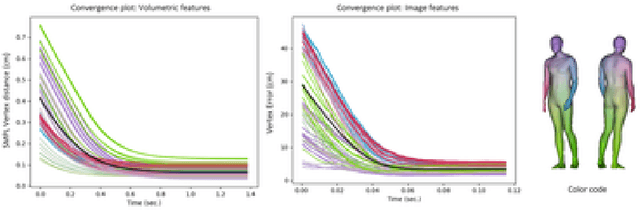

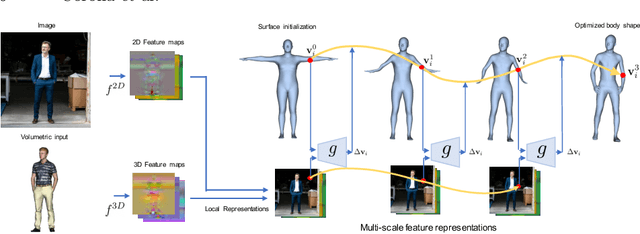

Abstract: We propose a novel optimization-based paradigm for 3D human model fitting on images and scans. In contrast to existing approaches that directly regress the parameters of a low-dimensional statistical body model (e.g. SMPL) from input images, we train an ensemble of per-vertex neural fields network. The network predicts, in a distributed manner, the vertex descent direction towards the ground truth, based on neural features extracted at the current vertex projection. At inference, we employ this network, dubbed LVD, within a gradient-descent optimization pipeline until its convergence, which typically occurs in a fraction of a second even when initializing all vertices into a single point. An exhaustive evaluation demonstrates that our approach is able to capture the underlying body of clothed people with very different body shapes, achieving a significant improvement compared to the state of the art. LVD is also applicable to 3D model fitting of humans and hands, for which we show a significant improvement over the state of the art with a much simpler and faster method.
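The inference loop described in this abstract, where a predicted per-vertex descent direction is applied repeatedly until convergence, can be sketched as follows. This is only an illustration under strong assumptions: `predict_descent` is a hypothetical stand-in that reads the target directly, whereas the trained network in the paper predicts the direction from image features; the step size, tolerance, and toy triangle are likewise invented for the example.

```python
def predict_descent(vertex, target):
    """Stand-in for the trained network: direction from the vertex to its target.

    Assumption: the real model never sees the target; it infers the direction
    from neural features at the current vertex projection.
    """
    return [t - v for v, t in zip(vertex, target)]


def fit(vertices, targets, step=0.5, tol=1e-6, max_iters=200):
    """Move every vertex along its predicted direction until updates are tiny."""
    vertices = [list(v) for v in vertices]  # copy so the input is not mutated
    for iteration in range(max_iters):
        largest_move = 0.0
        for vertex, target in zip(vertices, targets):
            direction = predict_descent(vertex, target)
            for axis, d in enumerate(direction):
                vertex[axis] += step * d
                largest_move = max(largest_move, abs(step * d))
        if largest_move < tol:
            return vertices, iteration + 1
    return vertices, max_iters


# Toy "mesh": three target vertices, all initialised at the same point.
targets = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
start = [[0.5, 0.5, 0.5] for _ in targets]
fitted, iterations = fit(start, targets)
```

With an exact oracle the remaining error halves at every iteration, so the loop stops well before `max_iters`; a learned predictor would give no such guarantee.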

Semantic State Estimation in Cloth Manipulation Tasks

Mar 22, 2022

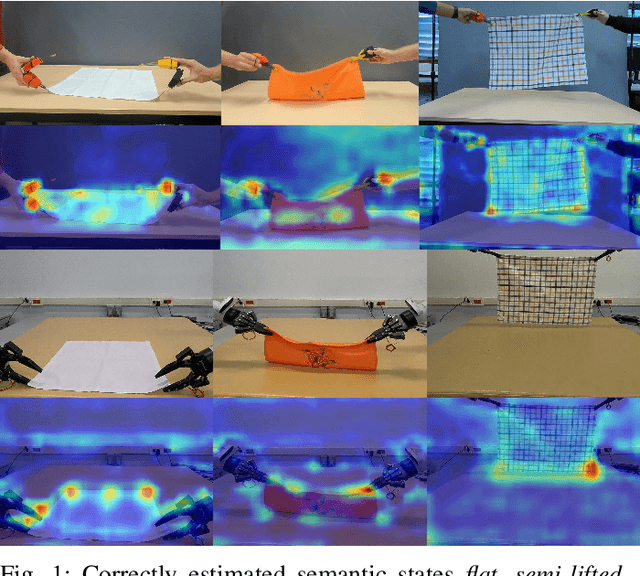

Abstract: Understanding the manipulation of deformable objects such as textiles is a challenge due to the complexity and high dimensionality of the problem. In particular, the lack of a generic representation of semantic states (e.g., *crumpled*, *diagonally folded*) during a continuous manipulation process is an obstacle to identifying the manipulation type. In this paper, we aim to solve the problem of semantic state estimation in cloth manipulation tasks. For this purpose, we introduce a new large-scale fully-annotated RGB image dataset showing various human demonstrations of different complicated cloth manipulations. We provide a set of baseline deep networks and benchmark them on the problem of semantic state estimation using our proposed dataset. Furthermore, we investigate the scalability of our semantic state estimation framework in robot monitoring tasks of long and complex cloth manipulations.

Household Cloth Object Set: Fostering Benchmarking in Deformable Object Manipulation

Nov 02, 2021

Abstract: Benchmarking of robotic manipulations is one of the open issues in robotic research. An important factor that has enabled progress in this area in the last decade is the existence of common object sets that have been shared among different research groups. However, the existing object sets are very limited when it comes to cloth-like objects that have unique particularities and challenges. This paper is a first step towards the design of a cloth object set to be distributed among research groups from the robotic cloth manipulation community. We present a set of household cloth objects and related tasks that serve to expose the challenges related to gathering such an object set and propose a roadmap to the design of common benchmarks in cloth manipulation tasks, with the intention to set the grounds for a future debate in the community that will be necessary to foster benchmarking for the manipulation of cloth-like objects. Some RGB-D and object scans are also collected as examples for the objects in relevant configurations. More details about the cloth set are available at http://www.iri.upc.edu/groups/perception/ClothObjectSet/HouseholdClothSet.html.
