Encoding cloth manipulations using a graph of states and transitions

Sep 30, 2020

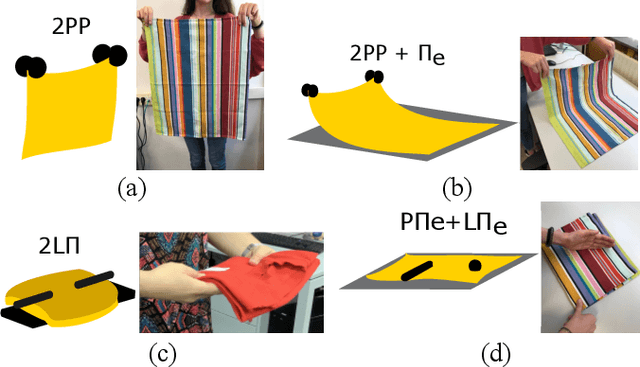

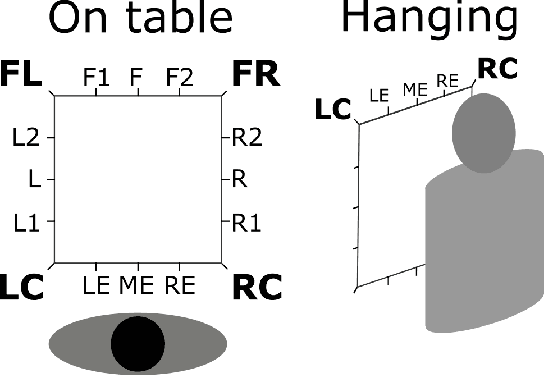

Cloth manipulation is highly relevant for domestic robotic tasks, but it presents many challenges due to the complexity of representing, recognizing and predicting the behaviour of cloth under manipulation. In this work, we propose a generic, compact and simplified representation of the states of cloth manipulation that allows tasks to be represented as sequences of states and transitions. We also define a graph of manipulation primitives that encodes all the strategies to accomplish a task. Our novel representation is used to encode the task of folding a napkin, learned from an experiment in which video and motion data were recorded from human subjects. We show how our simplified representation allows us to obtain a map of meaningful motion primitives and to segment the motion data into sets of trajectories, velocity profiles and acceleration profiles corresponding to each manipulation primitive in the graph.
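To make the idea of a graph of states and transitions concrete, the following is a minimal sketch and not the representation used in the paper. It assumes that cloth states are graph nodes, that manipulation primitives label the directed edges, and that a strategy is any cycle-free path from a start state to a goal state. The class `ManipulationGraph`, the state names and the primitive names are all hypothetical placeholders chosen for illustration.

```python
from collections import defaultdict


class ManipulationGraph:
    """Directed graph: nodes are cloth states, edges are manipulation primitives."""

    def __init__(self):
        # Maps a state to the list of (primitive, next_state) pairs leaving it.
        self._edges = defaultdict(list)

    def add_transition(self, source, primitive, target):
        self._edges[source].append((primitive, target))

    def strategies(self, start, goal):
        """Return every cycle-free sequence of primitives from start to goal."""
        results = []

        def walk(state, visited, path):
            if state == goal:
                results.append(list(path))
                return
            for primitive, next_state in self._edges.get(state, []):
                if next_state not in visited:
                    path.append(primitive)
                    walk(next_state, visited | {next_state}, path)
                    path.pop()

        walk(start, {start}, [])
        return results


# Hypothetical states and primitives for a napkin-folding task (illustrative only).
graph = ManipulationGraph()
graph.add_transition("flat", "grasp_one_corner", "one_corner_held")
graph.add_transition("flat", "grasp_two_corners", "two_corners_held")
graph.add_transition("one_corner_held", "grasp_second_corner", "two_corners_held")
graph.add_transition("two_corners_held", "fold_and_release", "folded")

napkin_strategies = graph.strategies("flat", "folded")
for strategy in napkin_strategies:
    print(" -> ".join(strategy))
```

In this toy graph, two strategies reach the folded state, one grasping the corners one at a time and one grasping both at once. Under the same assumption, recorded motion data could be segmented by assigning each time interval to the edge whose primitive was being executed.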
