Berk Calli

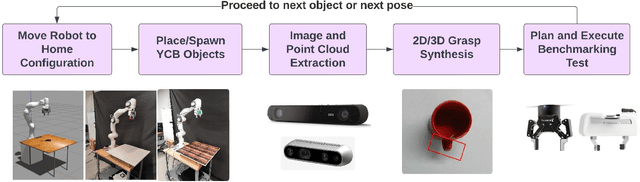

Developing Modular Grasping and Manipulation Pipeline Infrastructure to Streamline Performance Benchmarking

Apr 09, 2025

Abstract: The robot manipulation ecosystem currently faces issues with integrating open-source components and reproducing results. This limits the ability of the community to benchmark and compare the performance of different solutions to one another in an effective manner, instead relying on largely holistic evaluations. As part of the COMPARE Ecosystem project, we are developing modular grasping and manipulation pipeline infrastructure in order to streamline performance benchmarking. The infrastructure will be used towards the establishment of standards and guidelines for modularity and improved open-source development and benchmarking. This paper provides a high-level overview of the architecture of the pipeline infrastructure, experiments conducted to exercise it during development, and future work to expand its modularity.

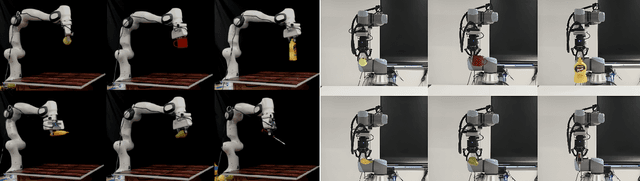

A Benchmarking Study of Vision-based Robotic Grasping Algorithms

Mar 14, 2025

Abstract: We present a benchmarking study of vision-based robotic grasping algorithms with distinct approaches, and provide a comparative analysis. In particular, we compare two machine-learning-based and two analytical algorithms using an existing benchmarking protocol from the literature and determine the algorithms' strengths and weaknesses under different experimental conditions. These conditions include variations in lighting, background textures, cameras with different noise levels, and grippers. We also run analogous experiments in simulations and with real robots and present the discrepancies. Some experiments are also run in two different laboratories using the same protocols to further analyze the repeatability of our results. We believe that this study, comprising 5040 experiments, provides important insights into the role and challenges of systematic experimentation in robotic manipulation, and guides the development of new algorithms by considering the factors that could impact the performance. The experiment recordings and our benchmarking software are publicly available.

Image-Based Roadmaps for Vision-Only Planning and Control of Robotic Manipulators

Feb 26, 2025

Abstract: This work presents a motion planning framework for robotic manipulators that computes collision-free paths directly in image space. The generated paths can then be tracked using vision-based control, eliminating the need for an explicit robot model or proprioceptive sensing. At the core of our approach is the construction of a roadmap entirely in image space. To achieve this, we explicitly define sampling, nearest-neighbor selection, and collision checking based on visual features rather than geometric models. We first collect a set of image-space samples by moving the robot within its workspace, capturing keypoints along its body at different configurations. These samples serve as nodes in the roadmap, which we construct using either learned or predefined distance metrics. At runtime, the roadmap generates collision-free paths directly in image space, removing the need for a robot model or joint encoders. We validate our approach through an experimental study in which a robotic arm follows planned paths using an adaptive vision-based control scheme to avoid obstacles. The results show that paths generated with the learned-distance roadmap achieved 100% success in control convergence, whereas the predefined image-space distance roadmap enabled faster transient responses but had a lower success rate in convergence.
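The roadmap idea in this abstract can be illustrated with a small sketch. It is not the authors' implementation: the function names, the mean-pixel-distance metric, the axis-aligned obstacle boxes, and the choice of Dijkstra's algorithm for the graph search are all assumptions made for illustration, and collisions are checked only at nodes, not along edges. Each sample is a list of keypoint pixel coordinates captured at one robot configuration.

```python
import heapq
import math

def keypoint_distance(a, b):
    """Assumed predefined metric: mean pixel distance between matching keypoints."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)

def in_collision(sample, obstacles):
    """Assumed visual collision check: any keypoint inside an obstacle pixel box."""
    return any(x0 <= u <= x1 and y0 <= v <= y1
               for (u, v) in sample
               for (x0, y0, x1, y1) in obstacles)

def build_roadmap(samples, k, distance=keypoint_distance):
    """Connect every sample to its k nearest neighbours under the given metric."""
    graph = {i: {} for i in range(len(samples))}
    for i, s in enumerate(samples):
        ranked = sorted((distance(s, t), j) for j, t in enumerate(samples) if j != i)
        for d, j in ranked[:k]:
            graph[i][j] = d
            graph[j][i] = d  # keep the roadmap undirected
    return graph

def plan(graph, samples, start, goal, obstacles):
    """Dijkstra over collision-free nodes; returns node indices or None."""
    free = {i for i in graph if not in_collision(samples[i], obstacles)}
    if start not in free or goal not in free:
        return None
    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            path = [node]
            while node in parent:
                node = parent[node]
                path.append(node)
            return path[::-1]
        if cost > best.get(node, math.inf):
            continue  # stale queue entry
        for nxt, w in graph[node].items():
            if nxt in free and cost + w < best.get(nxt, math.inf):
                best[nxt] = cost + w
                parent[nxt] = node
                heapq.heappush(queue, (cost + w, nxt))
    return None

# Hypothetical data: six configurations, two keypoints each, on a 3x2 pixel grid.
samples = [[(u, v), (u, v + 5)] for v in (0, 10) for u in (0, 10, 20)]
roadmap = build_roadmap(samples, k=2)
print(plan(roadmap, samples, 0, 2, obstacles=[]))
print(plan(roadmap, samples, 0, 2, obstacles=[(8, -2, 12, 7)]))
```

A learned metric would be passed through the `distance` argument in place of `keypoint_distance`; the rest of the sketch stays unchanged.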

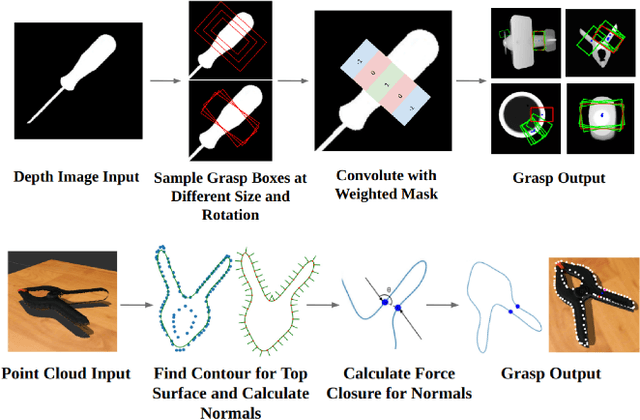

A Benchmarking Study on Vision-Based Grasp Synthesis Algorithms

Jul 21, 2023

Abstract: In this paper, we present a benchmarking study of vision-based grasp synthesis algorithms, each with distinct approaches, and provide a comparative analysis of their performance under different experimental conditions. In particular, we compare two machine-learning-based and two analytical algorithms to determine their strengths and weaknesses in different scenarios. In addition, we provide an open-source benchmarking tool developed from state-of-the-art benchmarking procedures and protocols to systematically evaluate different grasp synthesis algorithms. Our findings offer insights into the performance of the evaluated algorithms, which can aid in selecting the most appropriate algorithm for different scenarios.

Vision-based Oxy-fuel Torch Control for Robotic Metal Cutting

Jun 30, 2023

Abstract: The automation of key processes in metal cutting would substantially benefit many industries such as manufacturing and metal recycling. We present a vision-based control scheme for automated metal cutting with oxy-fuel torches, an established cutting medium in industry. The system consists of a robot equipped with a cutting torch and an eye-in-hand camera observing the scene behind a tinted visor. We develop a vision-based control algorithm to servo the torch's motion by visually observing its effects on the metal surface. To this end, the vision system processes the metal surface's heat pool and computes its associated features, specifically pool convexity and intensity, which are then used for control. We define the operating conditions of the control problem and prove stability within them. In addition, metal cutting experiments are performed using a physical 1-DOF robot and oxy-fuel cutting equipment. Our results demonstrate the successful cutting of metal plates across three different plate thicknesses, relying purely on visual information without a priori knowledge of the thicknesses.
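The abstract names pool convexity and intensity as the visual features but does not define them. The sketch below shows one plausible reading, stated here as an assumption: the pool is the set of pixels at or above a brightness threshold, convexity is the pool area divided by the area of its convex hull, and intensity is the mean brightness of the pool pixels. The function names and the threshold value are hypothetical.

```python
def cross(o, a, b):
    """Z-component of the cross product of vectors o->a and o->b."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def polygon_area(poly):
    """Shoelace formula for a simple polygon given as a vertex list."""
    pairs = zip(poly, poly[1:] + poly[:1])
    return abs(sum(x0 * y1 - x1 * y0 for (x0, y0), (x1, y1) in pairs)) / 2

def pool_features(image, threshold):
    """Return (convexity, mean intensity) of the thresholded pool, or None if empty.

    image is a list of rows of grayscale values. Each pool pixel is treated as a
    unit square, so the hull is taken over pixel corners rather than centres.
    """
    pool = [(r, c, v) for r, row in enumerate(image)
            for c, v in enumerate(row) if v >= threshold]
    if not pool:
        return None
    corners = [(c + dx, r + dy) for r, c, _ in pool
               for dx in (0, 1) for dy in (0, 1)]
    hull_area = polygon_area(convex_hull(corners))
    convexity = len(pool) / hull_area
    intensity = sum(v for _, _, v in pool) / len(pool)
    return convexity, intensity

# Hypothetical 2x2 frame: three bright pixels forming an L-shaped pool.
print(pool_features([[200, 0], [220, 240]], threshold=128))
```

Under this definition a perfectly convex pool scores 1.0 and the value drops as the pool outline becomes more irregular, which is the kind of scalar a servo loop could regulate.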

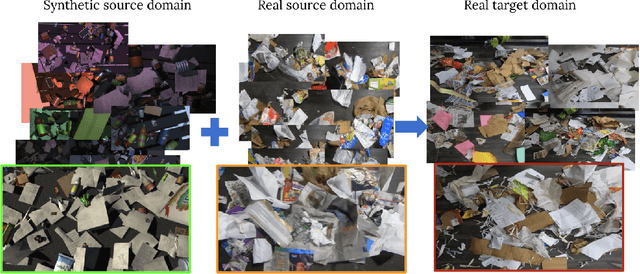

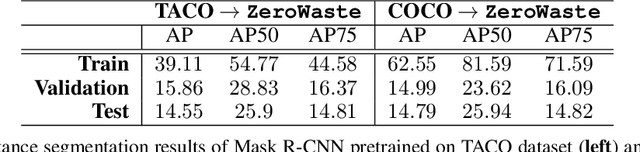

VisDA 2022 Challenge: Domain Adaptation for Industrial Waste Sorting

Mar 26, 2023

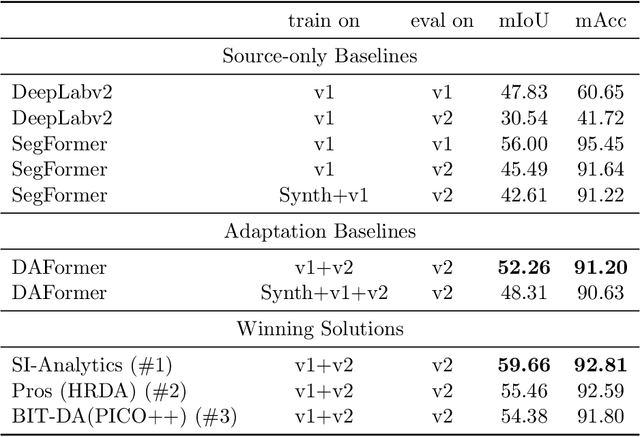

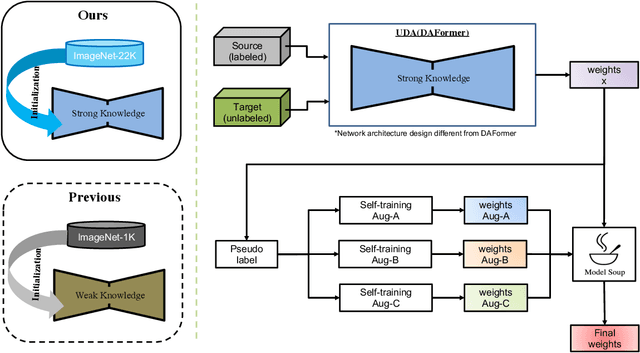

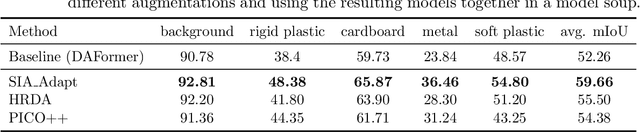

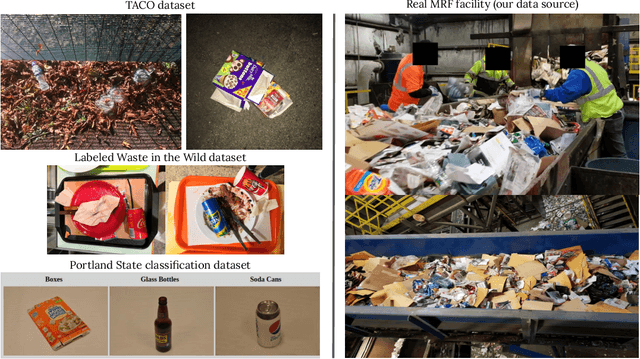

Abstract: Label-efficient and reliable semantic segmentation is essential for many real-life applications, especially for industrial settings with high visual diversity, such as waste sorting. In industrial waste sorting, one of the biggest challenges is the extreme diversity of the input stream depending on factors like the location of the sorting facility, the equipment available in the facility, and the time of year, all of which significantly impact the composition and visual appearance of the waste stream. These changes in the data are called "visual domains", and label-efficient adaptation of models to such domains is needed for successful semantic segmentation of industrial waste. To test the abilities of computer vision models on this task, we present the VisDA 2022 Challenge on Domain Adaptation for Industrial Waste Sorting. Our challenge incorporates a fully-annotated waste sorting dataset, ZeroWaste, collected from two real material recovery facilities in different locations and seasons, as well as a novel procedurally generated synthetic waste sorting dataset, SynthWaste. In this competition, we aim to answer two questions: 1) can we leverage domain adaptation techniques to minimize the domain gap? and 2) can synthetic data augmentation improve performance on this task and help adapt to changing data distributions? The results of the competition show that industrial waste detection poses a real domain adaptation problem, that domain generalization techniques such as augmentations, ensembling, etc., improve the overall performance on the unlabeled target domain examples, and that leveraging synthetic data effectively remains an open problem. See https://ai.bu.edu/visda-2022/

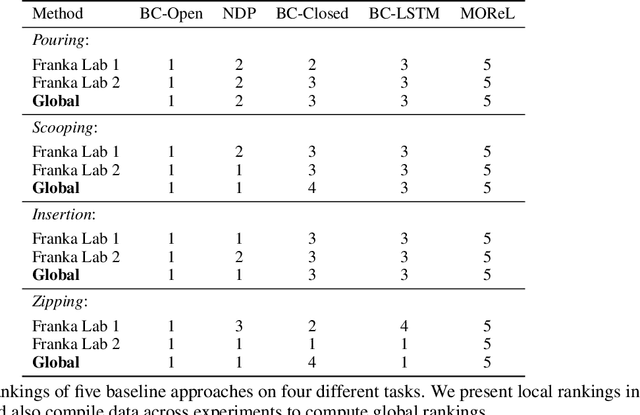

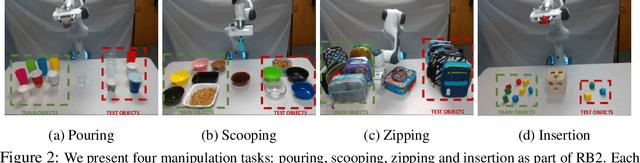

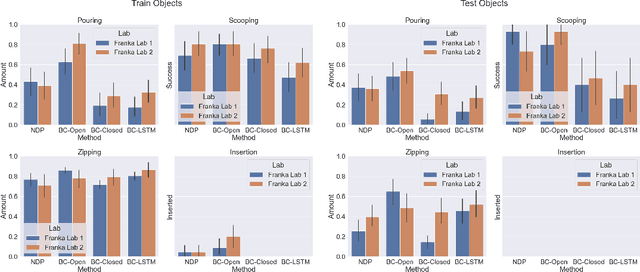

RB2: Robotic Manipulation Benchmarking with a Twist

Mar 15, 2022

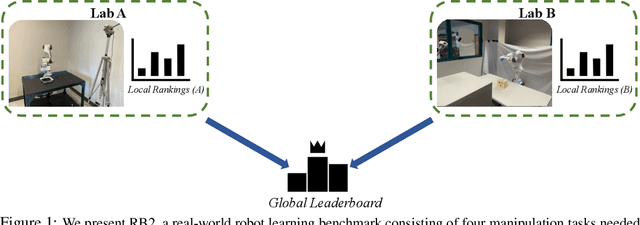

Abstract: Benchmarks offer a scientific way to compare algorithms using objective performance metrics. Good benchmarks have two features: (a) they should be widely useful for many research groups; and (b) they should produce reproducible findings. In robotic manipulation research, there is a trade-off between reproducibility and broad accessibility. If the benchmark is kept restrictive (fixed hardware, objects), the numbers are reproducible but the setup becomes less general. On the other hand, a benchmark could be a loose set of protocols (e.g. object sets), but the underlying variation in setups makes the results non-reproducible. In this paper, we re-imagine benchmarking for robotic manipulation as state-of-the-art algorithmic implementations, alongside the usual set of tasks and experimental protocols. The added baseline implementations will provide a way to easily recreate SOTA numbers in a new local robotic setup, thus providing credible relative rankings between existing approaches and new work. However, these local rankings could vary between different setups. To resolve this issue, we build a mechanism for pooling experimental data between labs, and thus we establish a single global ranking for existing (and proposed) SOTA algorithms. Our benchmark, called Ranking-Based Robotics Benchmark (RB2), is evaluated on tasks that are inspired by the clinically validated Southampton Hand Assessment Procedures. Our benchmark was run across two different labs and reveals several surprising findings. For example, extremely simple baselines like open-loop behavior cloning outperform more complicated models (e.g. closed loop, RNN, Offline-RL, etc.) that are preferred by the field. We hope our fellow researchers will use RB2 to improve their research's quality and rigor.
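The abstract does not say how RB2 pools data from different labs into one global ranking. As a rough illustration of the general idea only, the sketch below merges per-lab rankings with a normalized Borda count, a standard rank-aggregation rule used here as a stand-in and not as RB2's actual mechanism. The function name, lab names, and algorithm names are hypothetical placeholders, not reported results.

```python
from collections import defaultdict

def pooled_ranking(local_rankings):
    """Merge per-lab rankings (best first) into one global ranking.

    Each lab awards 1.0 to its top algorithm and 0.0 to its last, linearly in
    between; an algorithm's global score is its mean over the labs that ran it.
    Ties are broken alphabetically so the output is deterministic.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for ranking in local_rankings.values():
        n = len(ranking)
        for position, algo in enumerate(ranking):
            totals[algo] += 1.0 if n == 1 else (n - 1 - position) / (n - 1)
            counts[algo] += 1
    mean_score = {a: totals[a] / counts[a] for a in totals}
    return sorted(mean_score, key=lambda a: (-mean_score[a], a))

# Placeholder local rankings from three hypothetical labs.
labs = {
    "lab_1": ["algo_a", "algo_b", "algo_c"],
    "lab_2": ["algo_b", "algo_a", "algo_c"],
    "lab_3": ["algo_a", "algo_c", "algo_b"],
}
print(pooled_ranking(labs))
```

Averaging over only the labs that ran an algorithm keeps a method from being penalized for being absent from some setups, at the cost of trusting scores that rest on fewer labs.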

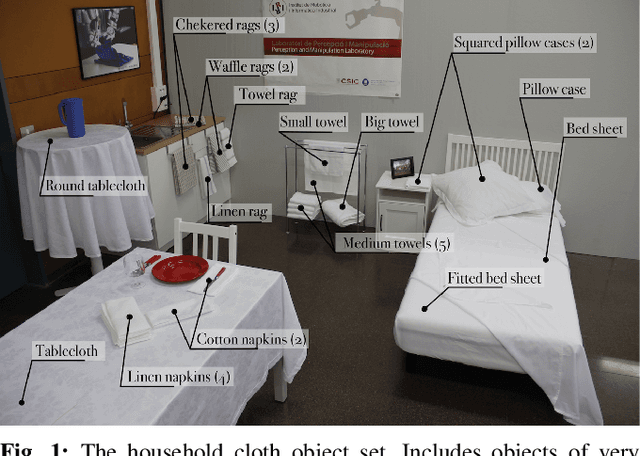

Household Cloth Object Set: Fostering Benchmarking in Deformable Object Manipulation

Nov 02, 2021

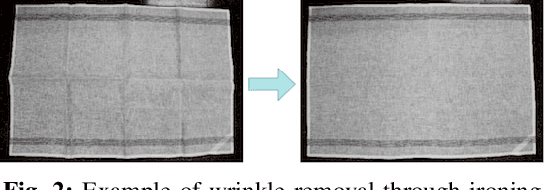

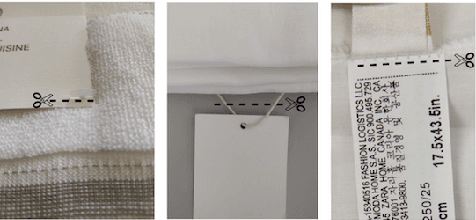

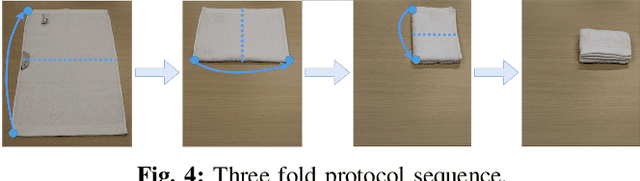

Abstract: Benchmarking of robotic manipulation is one of the open issues in robotics research. An important factor that has enabled progress in this area in the last decade is the existence of common object sets that have been shared among different research groups. However, the existing object sets are very limited when it comes to cloth-like objects, which have unique particularities and challenges. This paper is a first step towards the design of a cloth object set to be distributed among research groups from the robotics cloth manipulation community. We present a set of household cloth objects and related tasks that serve to expose the challenges related to gathering such an object set, and we propose a roadmap to the design of common benchmarks in cloth manipulation tasks. Our intention is to set the grounds for a future debate in the community that will be necessary to foster benchmarking for the manipulation of cloth-like objects. Some RGB-D and object scans are also collected as examples for the objects in relevant configurations. More details about the cloth set are shared at http://www.iri.upc.edu/groups/perception/ClothObjectSet/HouseholdClothSet.html.

Research Challenges and Progress in Robotic Grasping and Manipulation Competitions

Aug 03, 2021

Abstract: This paper discusses recent research progress in robotic grasping and manipulation in light of the latest Robotic Grasping and Manipulation Competitions (RGMCs). We first provide an overview of past benchmarks and competitions related to the robotic manipulation field. Then, we discuss the methodology behind designing the manipulation tasks in RGMCs. We provide a detailed analysis of key challenges for each task and identify the most difficult aspects based on the competing teams' performance in recent years. We believe that such an analysis offers insight into future research directions for the robotic manipulation domain.

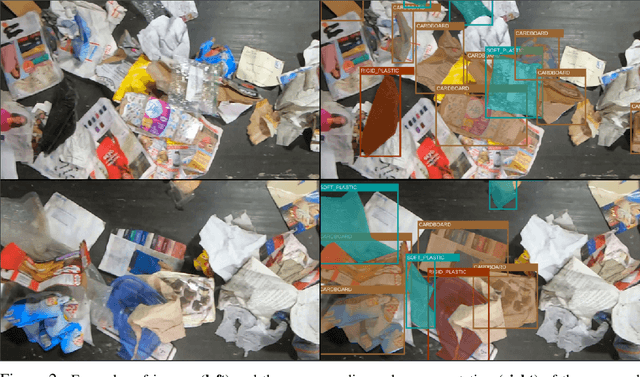

ZeroWaste Dataset: Towards Automated Waste Recycling

Jun 04, 2021

Abstract: Less than 35% of recyclable waste is actually recycled in the US, which leads to increased soil and sea pollution and is one of the major concerns of environmental researchers as well as the general public. At the heart of the problem are the inefficiencies of the waste sorting process (separating paper, plastic, metal, glass, etc.) due to the extremely complex and cluttered nature of the waste stream. Automated waste detection strategies have great potential to enable more efficient, reliable, and safer waste sorting practices, but the literature lacks comprehensive datasets and methodology for industrial waste sorting solutions. In this paper, we take a step towards computer-aided waste detection and present the first in-the-wild industrial-grade waste detection and segmentation dataset, ZeroWaste. This dataset contains over 1800 fully segmented video frames collected from a real waste sorting plant along with waste material labels for training and evaluation of the segmentation methods, as well as over 6000 unlabeled frames that can be further used for semi-supervised and self-supervised learning techniques. ZeroWaste also provides frames of the conveyor belt before and after the sorting process, comprising a novel setup that can be used for weakly-supervised segmentation. We present baselines for fully-, semi- and weakly-supervised segmentation methods. Our experimental results demonstrate that state-of-the-art segmentation methods struggle to correctly detect and classify target objects, which suggests the challenging nature of our proposed in-the-wild dataset. We believe that ZeroWaste will catalyze research in object detection and semantic segmentation in extreme clutter as well as applications in the recycling domain. Our project page can be found at http://ai.bu.edu/zerowaste/.
