Bharath K Rameshbabu

A Benchmarking Study of Vision-based Robotic Grasping Algorithms

Mar 14, 2025

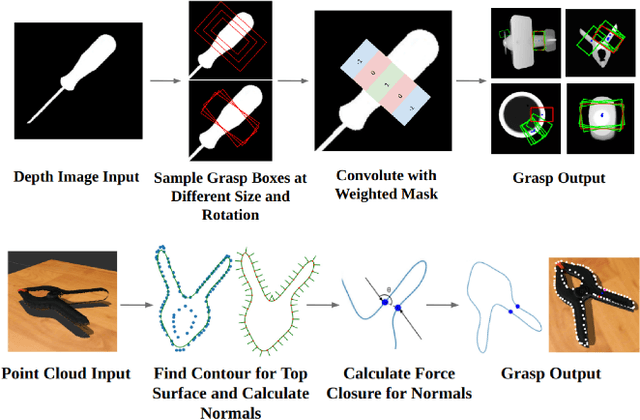

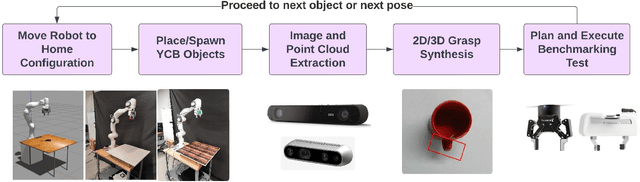

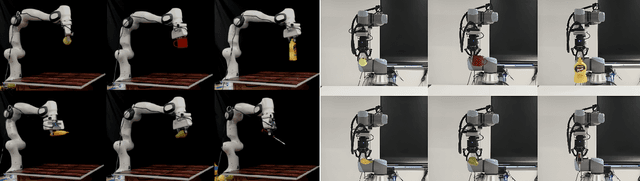

Abstract: We present a benchmarking study of vision-based robotic grasping algorithms with distinct approaches and provide a comparative analysis. In particular, we compare two machine-learning-based and two analytical algorithms using an existing benchmarking protocol from the literature, and determine the algorithms' strengths and weaknesses under different experimental conditions. These conditions include variations in lighting, background textures, cameras with different noise levels, and grippers. We also run analogous experiments in simulation and with real robots and present the discrepancies between them. Some experiments are also run in two different laboratories using the same protocols to further analyze the repeatability of our results. We believe that this study, comprising 5040 experiments, provides important insights into the role and challenges of systematic experimentation in robotic manipulation, and guides the development of new algorithms by identifying the factors that can affect performance. The experiment recordings and our benchmarking software are publicly available.

A Benchmarking Study on Vision-Based Grasp Synthesis Algorithms

Jul 21, 2023

Abstract: In this paper, we present a benchmarking study of vision-based grasp synthesis algorithms, each taking a distinct approach, and provide a comparative analysis of their performance under different experimental conditions. In particular, we compare two machine-learning-based and two analytical algorithms to determine their strengths and weaknesses in different scenarios. In addition, we provide an open-source benchmarking tool, built on state-of-the-art benchmarking procedures and protocols, for systematically evaluating grasp synthesis algorithms. Our findings offer insights into the performance of the evaluated algorithms and can help in selecting the most appropriate algorithm for a given scenario.
