Holly Yanco

University of Massachusetts Lowell

Threading the Needle: Test and Evaluation of Early Stage UAS Capabilities to Autonomously Navigate GPS-Denied Environments in the DARPA Fast Lightweight Autonomy (FLA) Program

Apr 10, 2025

Abstract:The DARPA Fast Lightweight Autonomy (FLA) program (2015 - 2018) served as a significant milestone in the development of UAS, particularly for autonomous navigation through unknown GPS-denied environments. Three performing teams developed UAS using a common hardware platform, focusing their contributions on autonomy algorithms and sensing. Several experiments were conducted that spanned indoor and outdoor environments, increasing in complexity over time. This paper reviews the testing methodology developed in order to benchmark and compare the performance of each team, each of the FLA Phase 1 experiments that were conducted, and a summary of the Phase 1 results.

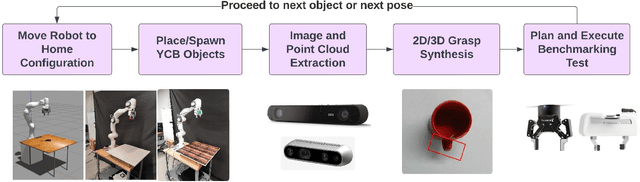

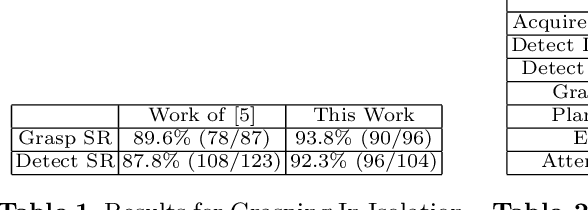

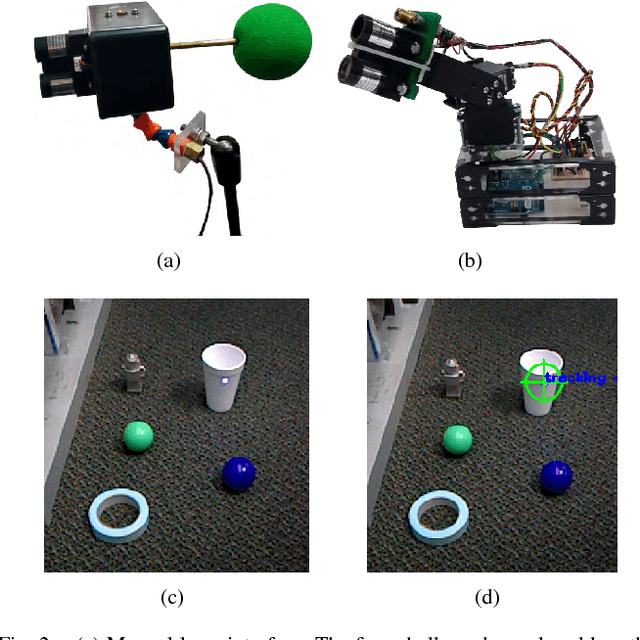

Developing Modular Grasping and Manipulation Pipeline Infrastructure to Streamline Performance Benchmarking

Apr 09, 2025

Abstract:The robot manipulation ecosystem currently faces issues with integrating open-source components and reproducing results. This limits the ability of the community to benchmark and compare the performance of different solutions to one another in an effective manner, instead relying on largely holistic evaluations. As part of the COMPARE Ecosystem project, we are developing modular grasping and manipulation pipeline infrastructure in order to streamline performance benchmarking. The infrastructure will be used towards the establishment of standards and guidelines for modularity and improved open-source development and benchmarking. This paper provides a high-level overview of the architecture of the pipeline infrastructure, experiments conducted to exercise it during development, and future work to expand its modularity.

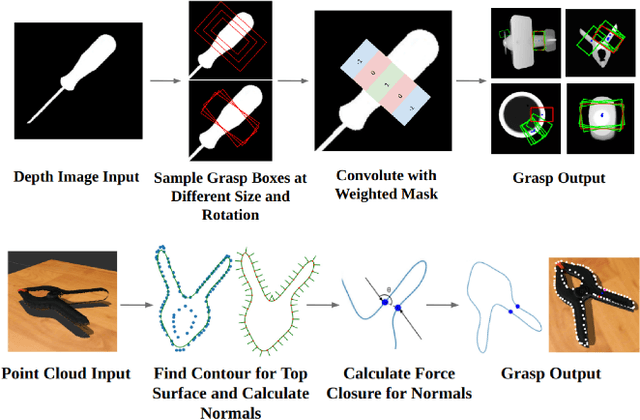

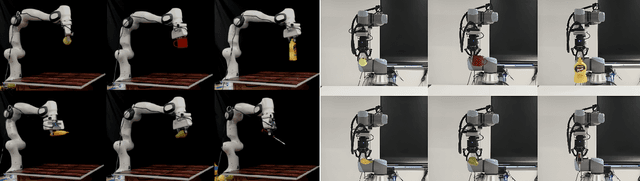

A Benchmarking Study of Vision-based Robotic Grasping Algorithms

Mar 14, 2025

Abstract:We present a benchmarking study of vision-based robotic grasping algorithms with distinct approaches, and provide a comparative analysis. In particular, we compare two machine-learning-based and two analytical algorithms using an existing benchmarking protocol from the literature and determine the algorithms' strengths and weaknesses under different experimental conditions. These conditions include variations in lighting, background textures, cameras with different noise levels, and grippers. We also run analogous experiments in simulations and with real robots and present the discrepancies. Some experiments are also run in two different laboratories using the same protocols to further analyze the repeatability of our results. We believe that this study, comprising 5040 experiments, provides important insights into the role and challenges of systematic experimentation in robotic manipulation, and guides the development of new algorithms by considering the factors that could impact the performance. The experiment recordings and our benchmarking software are publicly available.

DECISIVE Benchmarking Data Report: sUAS Performance Results from Phase I

Jan 20, 2023

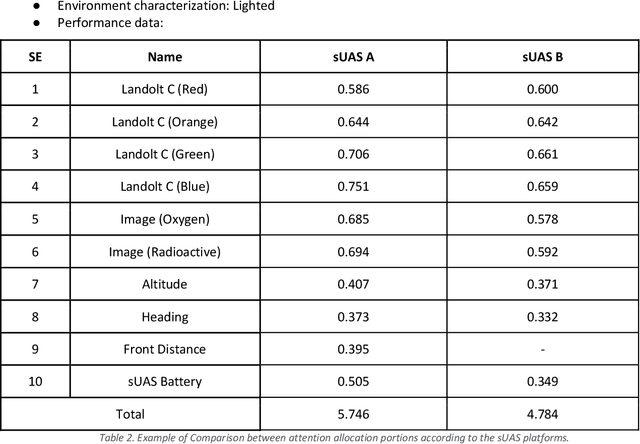

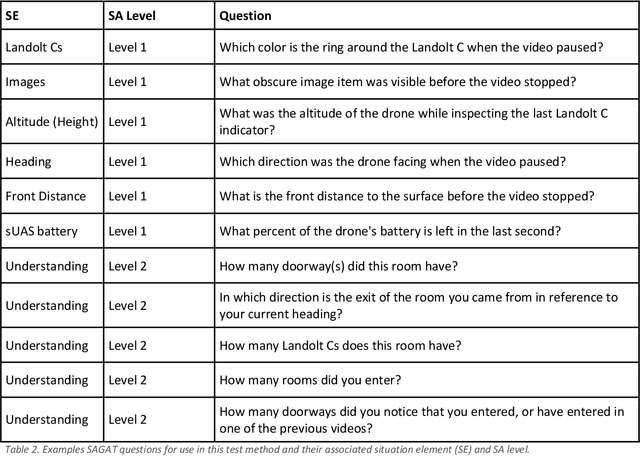

Abstract:This report reviews all results derived from performance benchmarking conducted during Phase I of the Development and Execution of Comprehensive and Integrated Subterranean Intelligent Vehicle Evaluations (DECISIVE) project by the University of Massachusetts Lowell, using the test methods specified in the DECISIVE Test Methods Handbook v1.1 for evaluating small unmanned aerial systems (sUAS) performance in subterranean and constrained indoor environments, spanning communications, field readiness, interface, obstacle avoidance, navigation, mapping, autonomy, trust, and situation awareness. Using those 20 test methods, over 230 tests were conducted across 8 sUAS platforms: Cleo Robotics Dronut X1P (P = prototype), FLIR Black Hornet PRS, Flyability Elios 2 GOV, Lumenier Nighthawk V3, Parrot ANAFI USA GOV, Skydio X2D, Teal Golden Eagle, and Vantage Robotics Vesper. Best-in-class criteria are specified for each applicable test method, and the sUAS that meet these criteria are named for each test method, including a high-level executive summary of their performance.

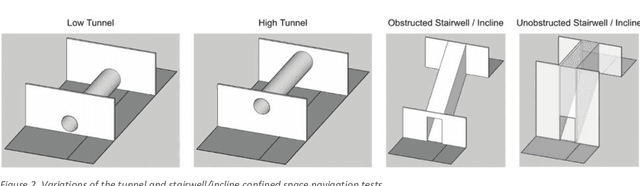

DECISIVE Test Methods Handbook: Test Methods for Evaluating sUAS in Subterranean and Constrained Indoor Environments, Version 1.1

Nov 01, 2022

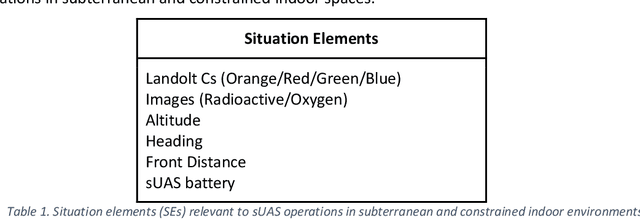

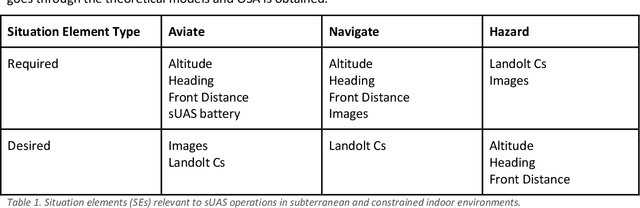

Abstract:This handbook outlines all test methods developed under the Development and Execution of Comprehensive and Integrated Subterranean Intelligent Vehicle Evaluations (DECISIVE) project by the University of Massachusetts Lowell for evaluating small unmanned aerial systems (sUAS) performance in subterranean and constrained indoor environments, spanning communications, field readiness, interface, obstacle avoidance, navigation, mapping, autonomy, trust, and situation awareness. For sUAS deployment in subterranean and constrained indoor environments, this handbook puts forth two assumptions about applicable sUAS to be evaluated using these test methods: (1) able to operate without access to GPS signal, and (2) width from prop tip to prop tip does not exceed 91 cm (36 in) (i.e., can physically fit through a typical doorway, although successful navigation through is not guaranteed). All test methods are specified using a common format: Purpose, Summary of Test Method, Apparatus and Artifacts, Equipment, Metrics, Procedure, and Example Data. All test methods are designed to be run in real-world environments (e.g., MOUT sites) or using fabricated apparatuses (e.g., test bays built from wood, or contained inside of one or more shipping containers).

AAAI SSS-22 Symposium on Closing the Assessment Loop: Communicating Proficiency and Intent in Human-Robot Teaming

Apr 05, 2022

Abstract:The proposed symposium focuses on understanding, modeling, and improving the efficacy of (a) communicating proficiency from a robot to a human and (b) communicating intent from a human to a robot. For example, how should a robot convey predicted ability on a new task? How should it report performance on a task that was just completed? How should a robot adapt its proficiency criteria based on human intentions and values? Communities in AI, robotics, HRI, and cognitive science have addressed related questions, but there are no agreed upon standards for evaluating proficiency and intent-based interactions. This is a pressing challenge for human-robot interaction for a variety of reasons. Prior work has shown that a robot that can assess its performance can alter human perception of the robot and decisions on control allocation. There is also significant evidence in robotics that accurately setting human expectations is critical, especially when proficiency is below human expectations. Moreover, proficiency assessment depends on context and intent, and a human teammate might increase or decrease performance standards, adapt tolerance for risk and uncertainty, demand predictive assessments that affect attention allocation, or otherwise reassess or adapt intent.

Implementing Virtual Reality for Teleoperation of a Humanoid Robot

Apr 23, 2021

Abstract:Our research explores the potential of a humanoid robot for work in unpredictable environments, but controlling a humanoid robot remains a very difficult problem. In our previous work, we designed a prototype virtual reality (VR) interface to allow an operator to command a humanoid robot. However, while usable, the initial interface was not sufficient for commanding the robot to perform the tasks; for example, in some cases, there was a lack of precision available for robot control. The interface was overly cumbersome in some areas as well. In this paper, we discuss numerous additions, inspired by traditional interfaces and virtual reality video games, to our prior implementation, providing additional ways to visualize and command a humanoid robot to perform difficult tasks within a virtual world.

Robotics Enabling the Workforce

Dec 16, 2020

Abstract:Robotics has the potential to magnify the skilled workforce of the nation by complementing our workforce with automation: teams of people and robots will be able to do more than either could alone. The economic engine of the U.S. runs on the productivity of our people. The rise of automation offers new opportunities to enhance the work of our citizens and drive the innovation and prosperity of our industries. Most critically, we need research to understand how future robot technologies can best complement our workforce to get the best of both human and automated labor in a collaborative team. Investments made in robotics research and workforce development will lead to increased GDP, an increased export-import ratio, a growing middle class of skilled workers, and a U.S.-based supply chain that can withstand global pandemics and other disruptions. In order to make the United States a leader in robotics, we need to invest in basic research, technology development, K-16 education, and lifelong learning.

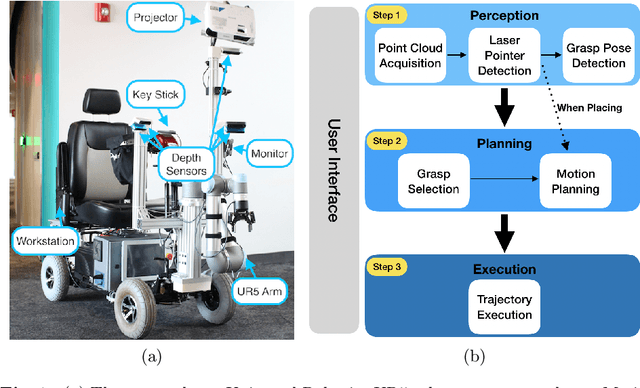

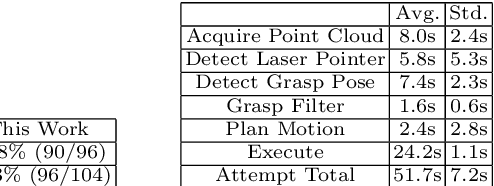

A Scooter-Mounted Robot Arm to Assist with Activities of Daily Life

Sep 25, 2018

Abstract:Many people with motor disabilities struggle with activities of daily life (ADLs), limiting their ability to live independently. This paper details a robotic mobility scooter developed to assist with manipulation-based ADLs to increase independence. We present a system comprised of a Universal Robots UR5 robotic arm, a mobility scooter, five depth sensors, and a user interface which utilizes laser pointers. The system provides pick-and-drop and pick-and-place functionality in open world environments without modeling the objects or environment. We evaluate our system over several experimental scenarios and show an improvement relative to a baseline established for a similar system.

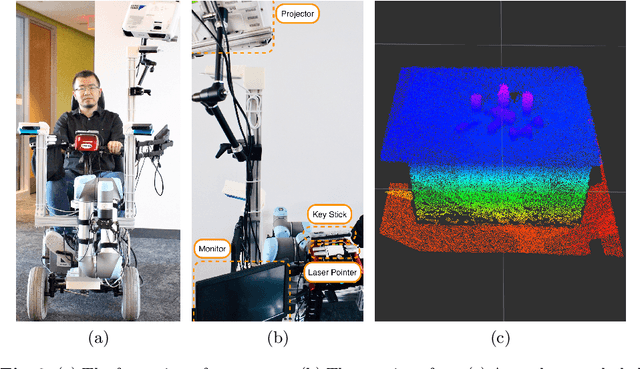

Open World Assistive Grasping Using Laser Selection

Jun 21, 2017

Abstract:Many people with motor disabilities are unable to complete activities of daily living (ADLs) without assistance. This paper describes a complete robotic system developed to provide mobile grasping assistance for ADLs. The system is comprised of a robot arm from a Rethink Robotics Baxter robot mounted to an assistive mobility device, a control system for that arm, and a user interface with a variety of access methods for selecting desired objects. The system uses grasp detection to allow previously unseen objects to be picked up by the system. The grasp detection algorithms also allow for objects to be grasped in cluttered environments. We evaluate our system in a number of experiments on a large variety of objects. Overall, we achieve an object selection success rate of 88% and a grasp detection success rate of 90% in a non-mobile scenario, and success rates of 89% and 72% in a mobile scenario.
