Andreas ten Pas

Efficient and Accurate Candidate Generation for Grasp Pose Detection in SE(3)

Apr 03, 2022

Abstract: Grasp detection of novel objects in unstructured environments is a key capability in robotic manipulation. For 2D grasp detection problems where grasps are assumed to lie in the plane, it is common to design a fully convolutional neural network that predicts grasps over an entire image in one step. However, this is not possible for grasp pose detection where grasp poses are assumed to exist in SE(3). In this case, it is common to approach the problem in two steps: grasp candidate generation and candidate classification. Since grasp candidate classification is typically expensive, the problem becomes one of efficiently identifying high-quality candidate grasps. This paper proposes a new grasp candidate generation method that significantly outperforms major 3D grasp detection baselines. Supplementary material is available at https://atenpas.github.io/psn/.

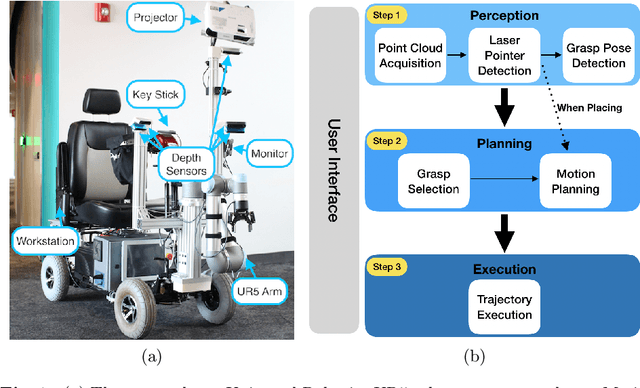

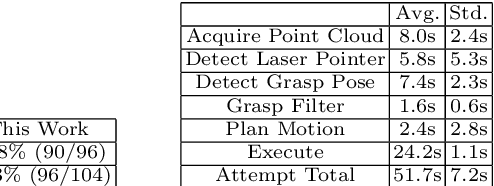

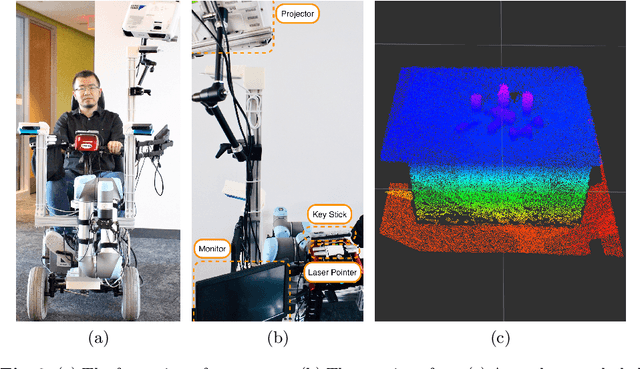

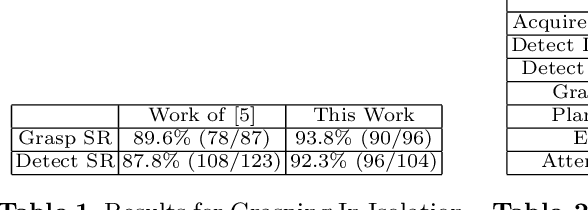

A Scooter-Mounted Robot Arm to Assist with Activities of Daily Life

Sep 25, 2018

Abstract: Many people with motor disabilities struggle with activities of daily life (ADLs), limiting their ability to live independently. This paper details a robotic mobility scooter developed to assist with manipulation-based ADLs to increase independence. We present a system composed of a Universal Robots UR5 robotic arm, a mobility scooter, five depth sensors, and a user interface that uses laser pointers. The system provides pick-and-drop and pick-and-place functionality in open world environments without modeling the objects or environment. We evaluate our system over several experimental scenarios and show an improvement relative to a baseline established for a similar system.

Pick and Place Without Geometric Object Models

Feb 22, 2018

Abstract: We propose a novel formulation of robotic pick and place as a deep reinforcement learning (RL) problem. Whereas most deep RL approaches to robotic manipulation frame the problem in terms of low level states and actions, we propose a more abstract formulation. In this formulation, actions are target reach poses for the hand and states are a history of such reaches. We show this approach can solve a challenging class of pick-place and regrasping problems where the exact geometry of the objects to be handled is unknown. The only information our method requires is: 1) the sensor perception available to the robot at test time; 2) prior knowledge of the general class of objects for which the system was trained. We evaluate our method using objects belonging to two different categories, mugs and bottles, both in simulation and on real hardware. Results show a major improvement relative to a shape primitives baseline.

Learning a visuomotor controller for real world robotic grasping using simulated depth images

Nov 17, 2017

Abstract: We want to build robots that are useful in unstructured real world applications, such as doing work in the household. Grasping in particular is an important skill in this domain, yet it remains a challenge. One of the key hurdles is handling unexpected changes or motion in the objects being grasped and kinematic noise or other errors in the robot. This paper proposes an approach to learning a closed-loop controller for robotic grasping that dynamically guides the gripper to the object. We use a wrist-mounted sensor to acquire depth images in front of the gripper and train a convolutional neural network to learn a distance function to true grasps for grasp configurations over an image. The training sensor data is generated in simulation, a major advantage over previous work that uses real robot experience, which is costly to obtain. Despite being trained in simulation, our approach works well on real noisy sensor images. We compare our controller in simulated and real robot experiments to a strong baseline for grasp pose detection, and find that our approach significantly outperforms the baseline in the presence of kinematic noise, perceptual errors and disturbances of the object during grasping.
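The closed-loop idea in this abstract can be illustrated with a minimal Python sketch. It is not the paper's controller: `predict_distance` is a hypothetical stand-in for the trained network, the gripper pose is reduced to a planar position, and the candidate `offsets`, `step_size`, and `tolerance` are illustrative assumptions. At each step the controller re-evaluates the predicted distance for a set of candidate displacements and moves part of the way toward the best one, so a changed target is picked up on the next iteration.

```python
from typing import Callable, Sequence, Tuple

# Planar gripper position; a simplification of a full gripper pose (assumption).
Pose = Tuple[float, float]


def control_step(
    pose: Pose,
    predict_distance: Callable[[Pose], float],
    offsets: Sequence[Pose],
    step_size: float = 0.5,
) -> Pose:
    """Move a fraction of the way toward the offset with the lowest predicted distance."""
    best = min(
        offsets,
        key=lambda d: predict_distance((pose[0] + d[0], pose[1] + d[1])),
    )
    return (pose[0] + step_size * best[0], pose[1] + step_size * best[1])


def run_controller(
    start: Pose,
    predict_distance: Callable[[Pose], float],
    offsets: Sequence[Pose],
    max_steps: int = 50,
    tolerance: float = 0.05,
) -> Pose:
    """Repeat sense-and-move steps until the predicted distance is small enough."""
    pose = start
    for _ in range(max_steps):
        # Stand-in for re-sensing: the predictor is queried again on every step.
        if predict_distance(pose) <= tolerance:
            break
        pose = control_step(pose, predict_distance, offsets)
    return pose
```

Because the predictor is queried again on every iteration, errors in earlier motions or a shifted object only affect the next step rather than the whole trajectory.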

Grasp Pose Detection in Point Clouds

Jun 29, 2017

Abstract: Recently, a number of grasp detection methods have been proposed that can be used to localize robotic grasp configurations directly from sensor data without estimating object pose. The underlying idea is to treat grasp perception analogously to object detection in computer vision. These methods take as input a noisy and partially occluded RGBD image or point cloud and produce as output pose estimates of viable grasps, without assuming a known CAD model of the object. Although these methods generalize grasp knowledge to new objects well, they have not yet been demonstrated to be reliable enough for wide use. Many grasp detection methods achieve grasp success rates (grasp successes as a fraction of the total number of grasp attempts) between 75% and 95% for novel objects presented in isolation or in light clutter. Not only are these success rates too low for practical grasping applications, but the light clutter scenarios that are evaluated often do not reflect the realities of real world grasping. This paper proposes a number of innovations that together result in a significant improvement in grasp detection performance. The specific improvement in performance due to each of our contributions is quantitatively measured either in simulation or on robotic hardware. Ultimately, we report a series of robotic experiments that average a 93% end-to-end grasp success rate for novel objects presented in dense clutter.

High precision grasp pose detection in dense clutter

Jun 22, 2017

Abstract: This paper considers the problem of grasp pose detection in point clouds. We follow a general algorithmic structure that first generates a large set of 6-DOF grasp candidates and then classifies each of them as a good or a bad grasp. Our focus in this paper is on improving the second step by using depth sensor scans from large online datasets to train a convolutional neural network. We propose two new representations of grasp candidates, and we quantify the effect of using prior knowledge of two forms: instance or category knowledge of the object to be grasped, and pretraining the network on simulated depth data obtained from idealized CAD models. Our analysis shows that a more informative grasp candidate representation as well as pretraining and prior knowledge significantly improve grasp detection. We evaluate our approach on a Baxter Research Robot and demonstrate an average grasp success rate of 93% in dense clutter. This is a 20% improvement compared to our prior work.
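The generate-then-classify structure described in this abstract can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the paper's implementation: `GraspCandidate`, `generate_candidates`, and `detect_grasps` are hypothetical names, candidates are sampled uniformly at random rather than with any geometric heuristic, and `score_fn` stands in for the trained classifier.

```python
import math
import random
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class GraspCandidate:
    """A 6-DOF hand pose: 3-D position plus roll, pitch, yaw (illustrative encoding)."""
    position: Point
    orientation: Tuple[float, float, float]


def generate_candidates(
    cloud: Sequence[Point], num_samples: int, rng: random.Random
) -> List[GraspCandidate]:
    """Step 1: sample poses centered on randomly chosen points of the cloud."""
    candidates = []
    for _ in range(num_samples):
        position = rng.choice(cloud)
        orientation = tuple(rng.uniform(-math.pi, math.pi) for _ in range(3))
        candidates.append(GraspCandidate(position, orientation))
    return candidates


def detect_grasps(
    cloud: Sequence[Point],
    score_fn: Callable[[GraspCandidate], float],
    num_samples: int = 100,
    threshold: float = 0.5,
    seed: int = 0,
) -> List[Tuple[float, GraspCandidate]]:
    """Step 2: score every candidate and keep those at or above the threshold, best first."""
    rng = random.Random(seed)
    scored = [(score_fn(c), c) for c in generate_candidates(cloud, num_samples, rng)]
    kept = [(s, c) for s, c in scored if s >= threshold]
    kept.sort(key=lambda sc: sc[0], reverse=True)
    return kept
```

Since every sampled candidate is passed to `score_fn`, the cost of the second step grows with the number of candidates, which is why the quality of the first step matters.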

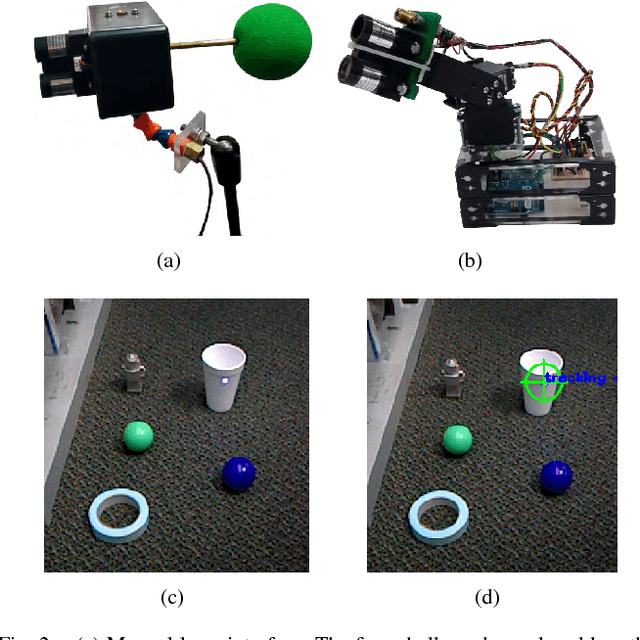

Open World Assistive Grasping Using Laser Selection

Jun 21, 2017

Abstract: Many people with motor disabilities are unable to complete activities of daily living (ADLs) without assistance. This paper describes a complete robotic system developed to provide mobile grasping assistance for ADLs. The system comprises a robot arm from a Rethink Robotics Baxter robot mounted to an assistive mobility device, a control system for that arm, and a user interface with a variety of access methods for selecting desired objects. The system uses grasp detection to pick up previously unseen objects. The grasp detection algorithms also allow objects to be grasped in cluttered environments. We evaluate our system in a number of experiments on a large variety of objects. Overall, we achieve an object selection success rate of 88% and a grasp detection success rate of 90% in a non-mobile scenario, and success rates of 89% and 72% in a mobile scenario.

Using Geometry to Detect Grasps in 3D Point Clouds

Apr 29, 2015

Abstract: This paper proposes a new approach to detecting grasp points on novel objects presented in clutter. The input to our algorithm is a point cloud and the geometric parameters of the robot hand. The output is a set of hand configurations that are expected to be good grasps. Our key idea is to use knowledge of the geometry of a good grasp to improve detection. First, we use a geometrically necessary condition to sample a large set of high quality grasp hypotheses. We were surprised to find that using simple geometric conditions for detection can result in a relatively high grasp success rate. Second, we use the notion of an antipodal grasp (a standard characterization of a good two-fingered grasp) to help us classify these grasp hypotheses. In particular, we generate a large automatically labeled training set that gives us high classification accuracy. Overall, our method achieves an average grasp success rate of 88% when grasping novel objects presented in isolation and an average success rate of 73% when grasping novel objects presented in dense clutter. This system is available as a ROS package at http://wiki.ros.org/agile_grasp.
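For readers unfamiliar with the antipodal condition mentioned in this abstract, the sketch below shows the textbook test for two point contacts with friction: the line connecting the contacts must lie inside the friction cone at each contact. It is an illustration only, not the paper's labeling procedure. The function name `is_antipodal`, the outward-normal convention, and the default friction coefficient are assumptions.

```python
import math
from typing import Sequence


def _sub(a: Sequence[float], b: Sequence[float]) -> tuple:
    return tuple(x - y for x, y in zip(a, b))


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def _unit(v: Sequence[float]) -> tuple:
    norm = math.sqrt(_dot(v, v))
    if norm == 0.0:
        raise ValueError("zero-length vector")
    return tuple(x / norm for x in v)


def _angle(u: Sequence[float], v: Sequence[float]) -> float:
    """Angle in radians between two non-zero vectors."""
    cosine = max(-1.0, min(1.0, _dot(_unit(u), _unit(v))))
    return math.acos(cosine)


def is_antipodal(p1, n1, p2, n2, friction_coeff: float = 0.5) -> bool:
    """Check whether the contact line lies inside both friction cones.

    p1, p2: contact points; n1, n2: outward surface normals at those points.
    The friction cone half-angle is atan(friction_coeff).
    """
    half_angle = math.atan(friction_coeff)
    axis = _sub(p2, p1)  # direction from contact 1 to contact 2
    inward1 = tuple(-x for x in n1)  # finger 1 pushes into the object along -n1
    # At contact 2 the inward normal (-n2) is compared with (-axis),
    # which gives the same angle as comparing n2 with axis.
    return _angle(inward1, axis) <= half_angle and _angle(n2, axis) <= half_angle
```

For example, two contacts on opposite parallel faces of a box satisfy the test for any positive friction coefficient, while a contact pair whose second normal is perpendicular to the contact line does not.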

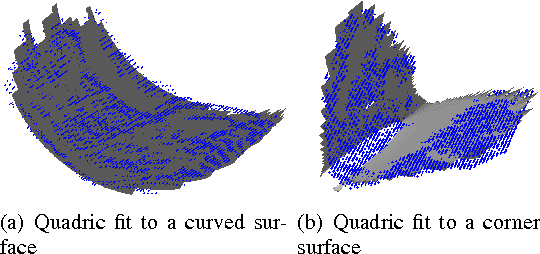

Localizing Grasp Affordances in 3-D Points Clouds Using Taubin Quadric Fitting

Nov 13, 2013

Abstract: Perception-for-grasping is a challenging problem in robotics. Inexpensive range sensors such as the Microsoft Kinect provide sensing capabilities that have given new life to the effort of developing robust and accurate perception methods for robot grasping. This paper proposes a new approach to localizing enveloping grasp affordances in 3-D point clouds efficiently. The approach is based on modeling enveloping grasp affordances as cylindrical shells that correspond to the geometry of the robot hand. A fast and accurate fitting method for quadratic surfaces is the core of our approach. An evaluation on a set of cluttered environments shows high precision and recall statistics. Our results also show that the approach compares favorably with some alternatives, and that it is efficient enough to be employed for robot grasping in real time.
