Jordan Allspaw

Semi-Autonomous Planning and Visualization in Virtual Reality

Apr 23, 2021

Abstract: Virtual reality (VR) interfaces for robots provide a three-dimensional (3D) view of the robot in its environment, which allows people to better plan complex robot movements in tight or cluttered spaces. In our prior work, we created a VR interface to allow for the teleoperation of a humanoid robot. As detailed in this paper, we now focus on a human-in-the-loop planner in which the operator sends higher-level manipulation and navigation goals in VR through functional waypoints, visualizes the results of a robot planner in the 3D virtual space, and then denies, alters, or confirms the plan before it is sent to the robot. We have also adapted our interface to work with a mobile manipulation robot as well as the humanoid robot. For a video demonstration, see https://youtu.be/wEHZug_fxrA.

Implementing Virtual Reality for Teleoperation of a Humanoid Robot

Apr 23, 2021

Abstract: Our research explores the potential of a humanoid robot for work in unpredictable environments, but controlling a humanoid robot remains a very difficult problem. In our previous work, we designed a prototype virtual reality (VR) interface to allow an operator to command a humanoid robot. However, while usable, the initial interface was not sufficient for commanding the robot to perform the tasks. For example, in some cases it lacked the precision needed for robot control, and some parts of the interface were overly cumbersome. In this paper, we discuss numerous additions to our prior implementation, inspired by traditional interfaces and virtual reality video games, that provide additional ways to visualize and command a humanoid robot to perform difficult tasks within a virtual world.

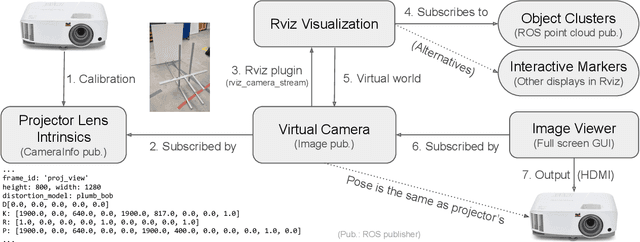

Projection Mapping Implementation: Enabling Direct Externalization of Perception Results and Action Intent to Improve Robot Explainability

Oct 05, 2020

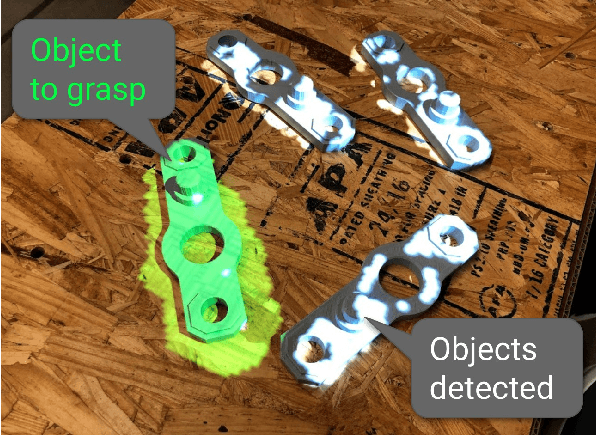

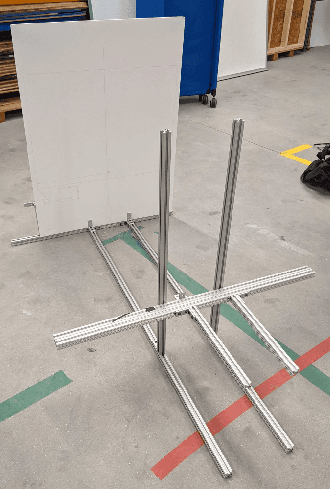

Abstract: Existing research on non-verbal cues, e.g., eye gaze or arm movement, may not accurately convey a robot's internal states, such as perception results and action intent. Projecting these states directly onto a robot's operating environment has the advantages of being direct, accurate, and more salient, eliminating the need for people to infer the robot's intention. However, projection mapping in robotics lacks the kind of tooling available for established tasks such as motion planning (e.g., MoveIt). In this paper, we detail the implementation of projection mapping to enable researchers and practitioners to push the boundaries for better interaction between robots and humans. We also provide practical documentation and code for a sample projection mapping of a manipulation task on GitHub: github.com/uml-robotics/projection_mapping.

Towards Mobile Multi-Task Manipulation in a Confined and Integrated Environment with Irregular Objects

Mar 03, 2020

Abstract: The FetchIt! Mobile Manipulation Challenge, held at the IEEE International Conference on Robotics and Automation (ICRA) in May 2019, offered an environment with complex and integrated task sets, irregular objects, confined space, and machining, introducing new challenges in the mobile manipulation domain. Here we describe our efforts to address these challenges by demonstrating the assembly of a kit of mechanical parts in a caddy. In addition to implementation details, we examine the issues in this task set extensively and discuss our software architecture in the hope of providing a base for other researchers. To evaluate performance and consistency, we conducted 20 full runs and then examined the failure cases along with possible solutions. We conclude by identifying future research directions to address the open challenges.

Towards A Robot Explanation System: A Survey and Our Approach to State Summarization, Storage and Querying, and Human Interface

Sep 13, 2019

Abstract: As robot systems become more ubiquitous, developing understandable robot systems becomes increasingly important for building trust. In this paper, we present an approach to developing a holistic robot explanation system, which consists of three interconnected components: state summarization, storage and querying, and human interface. To identify trends and gaps in the development of such an integrated system, we performed a literature review, categorized around those three components, with a focus on robotics applications. After reviewing each component, we discuss our proposed approach to robot explanation. Finally, we summarize the system as a whole and review its functionality.
