Constantinos Chamzas

Learning Discrete Abstractions for Visual Rearrangement Tasks Using Vision-Guided Graph Coloring

Sep 17, 2025

Abstract:Learning abstractions directly from data is a core challenge in robotics. Humans naturally operate at an abstract level, reasoning over high-level subgoals while delegating execution to low-level motor skills -- an ability that enables efficient problem solving in complex environments. In robotics, abstractions and hierarchical reasoning have long been central to planning, yet they are typically hand-engineered, demanding significant human effort and limiting scalability. Automating the discovery of useful abstractions directly from visual data would make planning frameworks more scalable and more applicable to real-world robotic domains. In this work, we focus on rearrangement tasks where the state is represented with raw images, and propose a method to induce discrete, graph-structured abstractions by combining structural constraints with an attention-guided visual distance. Our approach leverages the inherent bipartite structure of rearrangement problems, integrating structural constraints and visual embeddings into a unified framework. This enables the autonomous discovery of abstractions from vision alone, which can subsequently support high-level planning. We evaluate our method on two rearrangement tasks in simulation and show that it consistently identifies meaningful abstractions that facilitate effective planning and outperform existing approaches.

ActivePusher: Active Learning and Planning with Residual Physics for Nonprehensile Manipulation

Jun 05, 2025

Abstract:Planning with learned dynamics models offers a promising approach toward real-world, long-horizon manipulation, particularly in nonprehensile settings such as pushing or rolling, where accurate analytical models are difficult to obtain. Although learning-based methods hold promise, collecting training data can be costly and inefficient, as it often relies on randomly sampled interactions that are not necessarily the most informative. To address this challenge, we propose ActivePusher, a novel framework that combines residual-physics modeling with kernel-based uncertainty-driven active learning to focus data acquisition on the most informative skill parameters. Additionally, ActivePusher seamlessly integrates with model-based kinodynamic planners, leveraging uncertainty estimates to bias control sampling toward more reliable actions. We evaluate our approach in both simulation and real-world environments and demonstrate that it improves data efficiency and planning success rates compared to baseline methods.

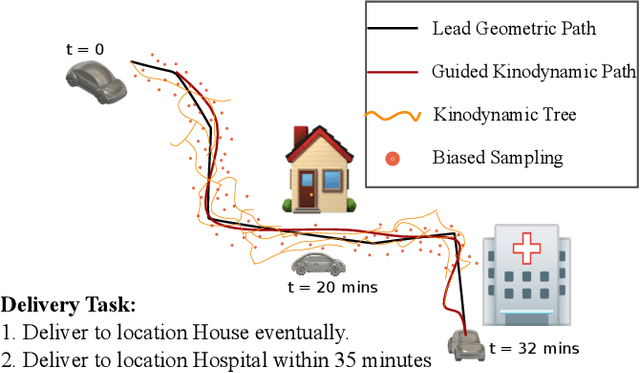

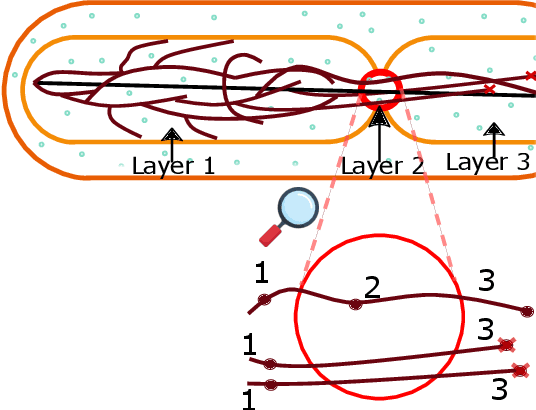

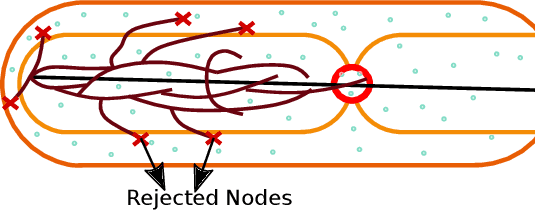

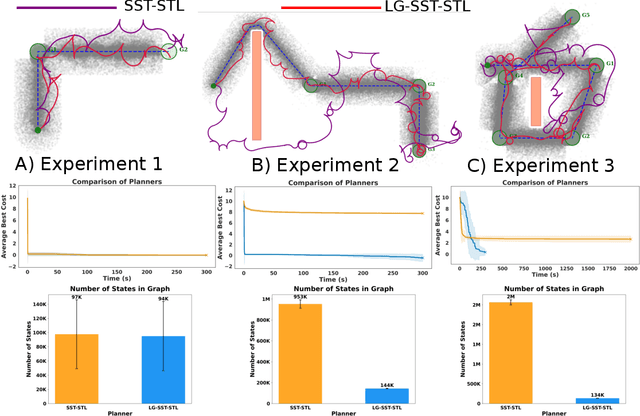

Multi-layer Motion Planning with Kinodynamic and Spatio-Temporal Constraints

Mar 10, 2025

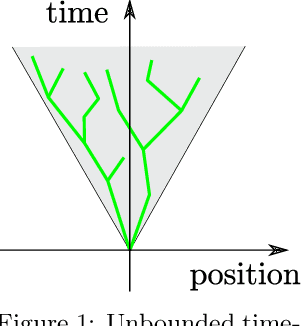

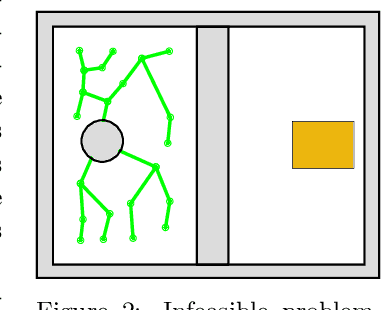

Abstract:We propose a novel, multi-layered planning approach for computing paths that satisfy both kinodynamic and spatiotemporal constraints. Our three-part framework first establishes potential sequences to meet spatial constraints, using them to calculate a geometric lead path. This path then guides an asymptotically optimal sampling-based kinodynamic planner, which minimizes an STL-robustness cost to jointly satisfy spatiotemporal and kinodynamic constraints. In our experiments, we test our method with a velocity-controlled Ackermann-car model and demonstrate significant efficiency gains compared to prior art. Additionally, our method is able to generate complex path maneuvers, such as crossovers, something that previous methods had not demonstrated.

Image-Based Roadmaps for Vision-Only Planning and Control of Robotic Manipulators

Feb 26, 2025

Abstract:This work presents a motion planning framework for robotic manipulators that computes collision-free paths directly in image space. The generated paths can then be tracked using vision-based control, eliminating the need for an explicit robot model or proprioceptive sensing. At the core of our approach is the construction of a roadmap entirely in image space. To achieve this, we explicitly define sampling, nearest-neighbor selection, and collision checking based on visual features rather than geometric models. We first collect a set of image-space samples by moving the robot within its workspace, capturing keypoints along its body at different configurations. These samples serve as nodes in the roadmap, which we construct using either learned or predefined distance metrics. At runtime, the roadmap generates collision-free paths directly in image space, removing the need for a robot model or joint encoders. We validate our approach through an experimental study in which a robotic arm follows planned paths using an adaptive vision-based control scheme to avoid obstacles. The results show that paths generated with the learned-distance roadmap achieved 100% success in control convergence, whereas the predefined image-space distance roadmap enabled faster transient responses but had a lower success rate in convergence.

Expansion-GRR: Efficient Generation of Smooth Global Redundancy Resolution Roadmaps

May 22, 2024

Abstract:The global redundancy resolution (GRR) roadmap is a novel concept in robotics that facilitates the mapping from task space paths to configuration space paths in a legible, predictable, and repeatable way. Such roadmaps could find widespread utility in applications such as safe teleoperation, consistent path planning, and factory workcell design. However, previous methods for computing GRR roadmaps often require lengthy computation and produce non-smooth paths, limiting their practical efficacy. To address this challenge, we introduce a novel method, Expansion-GRR, that leverages efficient configuration space projections and enables rapid generation of smooth roadmaps that satisfy the task constraints. Additionally, we propose a simple multi-seed strategy that further enhances the final quality. We conducted experiments in simulation with a 5-link planar manipulator and a Kinova arm. We were able to generate the GRR roadmaps up to 2 orders of magnitude faster while achieving higher smoothness. We also demonstrate the utility of the GRR roadmaps in teleoperation tasks where our method outperformed prior methods and reactive IK solvers in terms of success rate and solution quality.

Sampling-Based Motion Planning: A Comparative Review

Sep 22, 2023

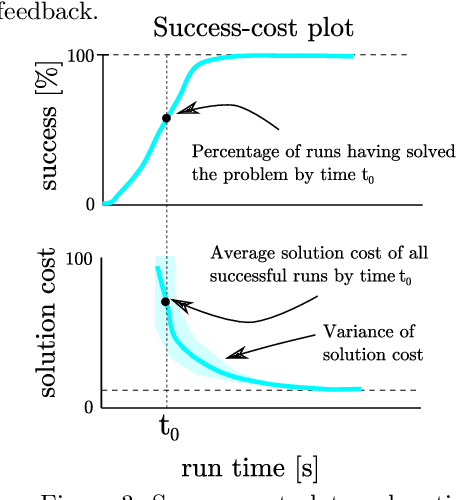

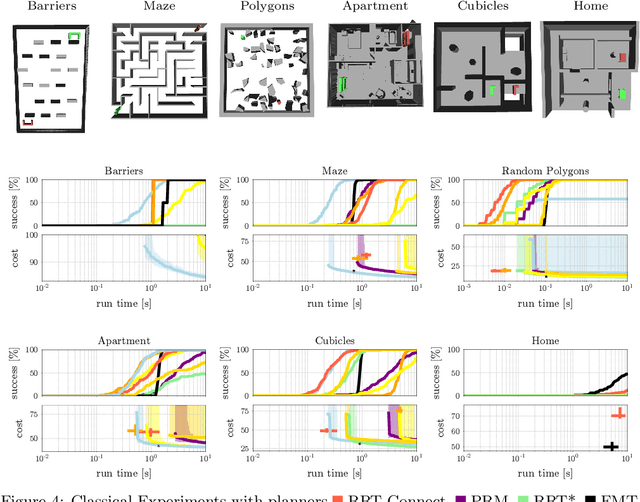

Abstract:Sampling-based motion planning is one of the fundamental paradigms to generate robot motions, and a cornerstone of robotics research. This comparative review provides an up-to-date guideline and reference manual for the use of sampling-based motion planning algorithms. This includes a history of motion planning, an overview of the most successful planners, and a discussion of their properties. It is also shown how planners can handle special cases and how extensions of motion planning can be accommodated. To put sampling-based motion planning into a larger context, a discussion of alternative motion generation frameworks is presented which highlights their respective differences from sampling-based motion planning. Finally, a set of sampling-based motion planners is compared on 24 challenging planning problems. This evaluation gives insights into which planners perform well in which situations and where future research would be required. This comparative review thereby provides not only a useful reference manual for researchers in the field, but also a guideline for practitioners to make informed algorithmic decisions.

Meta-Policy Learning over Plan Ensembles for Robust Articulated Object Manipulation

Jul 08, 2023

Abstract:Recent work has shown that complex manipulation skills, such as pushing or pouring, can be learned through state-of-the-art learning-based techniques, such as Reinforcement Learning (RL). However, these methods often have high sample complexity, are susceptible to domain changes, and produce unsafe motions that a robot should not perform. On the other hand, purely geometric model-based planning can produce complex behaviors that satisfy all the geometric constraints of the robot but might not be dynamically feasible for a given environment. In this work, we leverage a geometric model-based planner to build a mixture of path-policies on which a task-specific meta-policy can be learned to complete the task. In our results, we demonstrate that a successful meta-policy can be learned to push a door, while requiring little data and being robust to model uncertainty of the environment. We tested our method on a 7-DOF Franka-Emika Robot pushing a cabinet door in simulation.

Learning to Retrieve Relevant Experiences for Motion Planning

Apr 18, 2022

Abstract:Recent work has demonstrated that motion planners' performance can be significantly improved by retrieving past experiences from a database. Typically, the experience database is queried for past similar problems using a similarity function defined over the motion planning problems. However, to date, most works rely on simple hand-crafted similarity functions and fail to generalize outside their corresponding training dataset. To address this limitation, we propose FIRE, a framework that extracts local representations of planning problems and learns a similarity function over them. To generate the training data, we introduce a novel self-supervised method that identifies similar and dissimilar pairs of local primitives from past solution paths. With these pairs, a Siamese network is trained with the contrastive loss and the similarity function is realized in the network's latent space. We evaluate FIRE on an 8-DOF manipulator in five categories of motion planning problems with sensed environments. Our experiments show that FIRE retrieves relevant experiences which can informatively guide sampling-based planners even in problems outside its training distribution, outperforming other baselines.

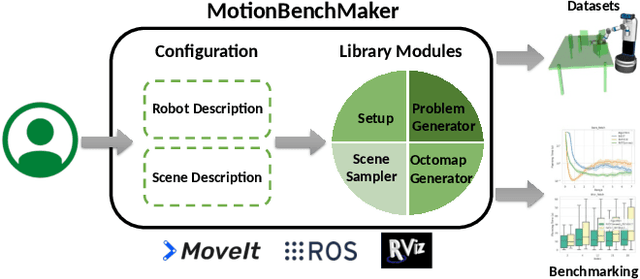

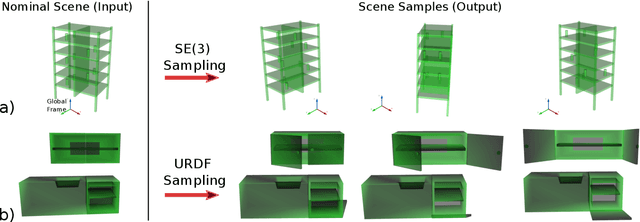

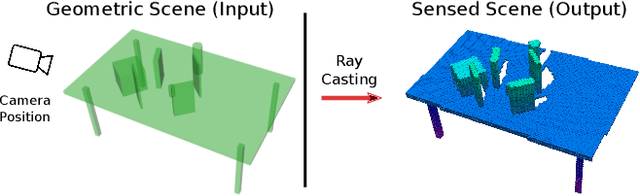

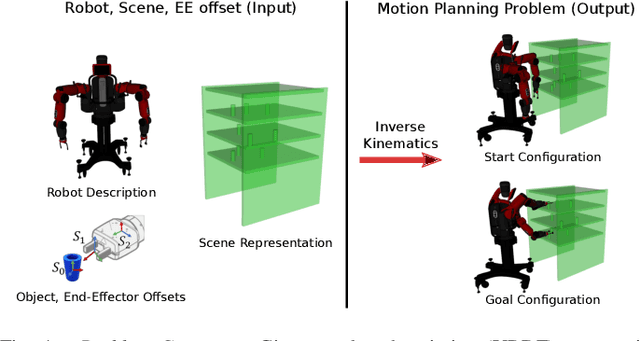

MotionBenchMaker: A Tool to Generate and Benchmark Motion Planning Datasets

Dec 13, 2021

Abstract:Recently, there has been a wealth of development in motion planning for robotic manipulation: new motion planners are continuously proposed, each with its own unique strengths and weaknesses. However, evaluating new planners is challenging, and researchers often create their own ad-hoc problems for benchmarking, which is time-consuming, prone to bias, and does not directly compare against other state-of-the-art planners. We present MotionBenchMaker, an open-source tool to generate benchmarking datasets for realistic robot manipulation problems. MotionBenchMaker is designed to be an extensible, easy-to-use tool that allows users to both generate datasets and benchmark them by comparing motion planning algorithms. Empirically, we show the benefit of using MotionBenchMaker as a tool to procedurally generate datasets, which helps in the fair evaluation of planners. We also present a suite of 40 prefabricated datasets, with 5 different commonly used robots in 8 environments, to serve as a common ground to accelerate motion planning research.

Comparing Reconstruction- and Contrastive-based Models for Visual Task Planning

Sep 14, 2021

Abstract:Learning state representations enables robotic planning directly from raw observations such as images. Most methods learn state representations by utilizing losses based on the reconstruction of the raw observations from a lower-dimensional latent space. The similarity between observations in the space of images is often assumed and used as a proxy for estimating similarity between the underlying states of the system. However, observations commonly contain task-irrelevant factors of variation which are nonetheless important for reconstruction, such as varying lighting and different camera viewpoints. In this work, we define relevant evaluation metrics and perform a thorough study of different loss functions for state representation learning. We show that models exploiting task priors, such as Siamese networks with a simple contrastive loss, outperform reconstruction-based representations in visual task planning.
