Séverin Lemaignan

Socially Pertinent Robots in Gerontological Healthcare

Apr 11, 2024

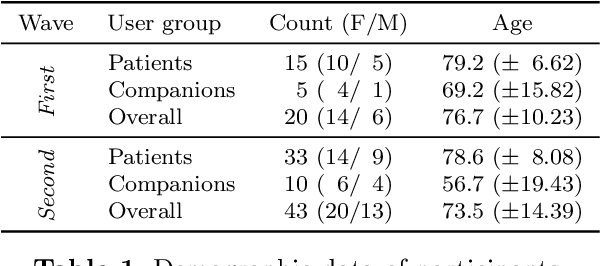

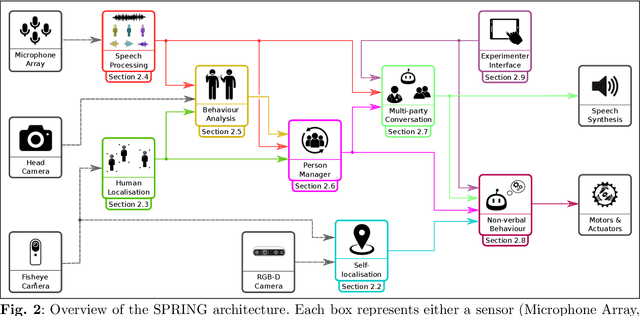

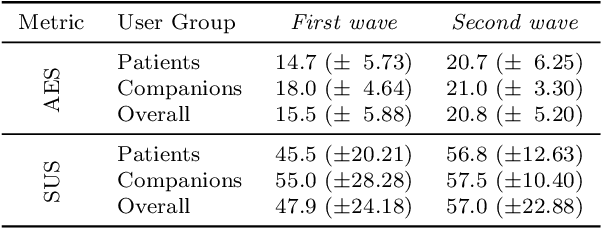

Abstract: Despite the many recent achievements in developing and deploying social robotics, there are still many underexplored environments and applications for which systematic evaluation of such systems by end-users is necessary. While several robotic platforms have been used in gerontological healthcare, the question of whether or not a social interactive robot with multi-modal conversational capabilities will be useful and accepted in real-life facilities is yet to be answered. This paper is an attempt to partially answer this question, via two waves of experiments with patients and companions in a day-care gerontological facility in Paris with a full-sized humanoid robot endowed with social and conversational interaction capabilities. The software architecture, developed during the H2020 SPRING project, together with the experimental protocol, allowed us to evaluate the acceptability (AES) and usability (SUS) with more than 60 end-users. Overall, the users are receptive to this technology, especially when the robot perception and action skills are robust to environmental clutter and flexible to handle a plethora of different interactions.

End-User Development for Human-Robot Interaction

Feb 27, 2024

Abstract: End-user development (EUD) represents a key step towards making robotics accessible for experts and nonexperts alike. Within academia, researchers investigate novel ways that EUD tools can capture, represent, visualize, analyze, and test developer intent. At the same time, industry researchers increasingly build and ship programming tools that enable customers to interact with their robots. However, despite this growing interest, the role of EUD within HRI is not well defined. EUD struggles to situate itself within a growing array of alternative approaches to application development, such as robot learning and teleoperation. EUD further struggles due to the wide range of individuals who can be considered end users, such as independent third-party application developers, consumers, hobbyists, or even employees of the robot manufacturer. Key questions remain such as how EUD is justified over alternate approaches to application development, which contexts EUD is most suited for, who the target users of an EUD system are, and where interaction between a human and a robot takes place, amongst many other questions. We seek to address these challenges and questions by organizing the first End-User Development for Human-Robot Interaction (EUD4HRI) workshop at the 2024 International Conference of Human-Robot Interaction. The workshop will bring together researchers with a wide range of expertise across academia and industry, spanning perspectives from multiple subfields of robotics, with the primary goal being a consensus of perspectives about the role that EUD must play within human-robot interaction.

Bio-Inspired Grasping Controller for Sensorized 2-DoF Grippers

Nov 13, 2023

Abstract: We present a holistic grasping controller, combining free-space position control and in-contact force-control for reliable grasping given uncertain object pose estimates. Employing tactile fingertip sensors, undesired object displacement during grasping is minimized by pausing the finger closing motion for individual joints on first contact until force-closure is established. While holding an object, the controller is compliant with external forces to avoid high internal object forces and prevent object damage. Gravity as an external force is explicitly considered and compensated for, thus preventing gravity-induced object drift. We evaluate the controller in two experiments on the TIAGo robot and its parallel-jaw gripper proving the effectiveness of the approach for robust grasping and minimizing object displacement. In a series of ablation studies, we demonstrate the utility of the individual controller components.

UNICEF Guidance on AI for Children: Application to the Design of a Social Robot For and With Autistic Children

Aug 27, 2021

Abstract: For a period of three weeks in June 2021, we embedded a social robot (Softbank Pepper) in a Special Educational Needs (SEN) school, with a focus on supporting the well-being of autistic children. Our methodology to design and embed the robot among this vulnerable population follows a comprehensive participatory approach. We used the research project as a test-bed to demonstrate in a complex real-world environment the importance and suitability of the nine UNICEF guidelines on AI for Children. The UNICEF guidelines on AI for Children closely align with several of the UN goals for sustainable development, and, as such, we report here our contribution to these goals.

LEADOR: A Method for End-to-End Participatory Design of Autonomous Social Robots

May 14, 2021

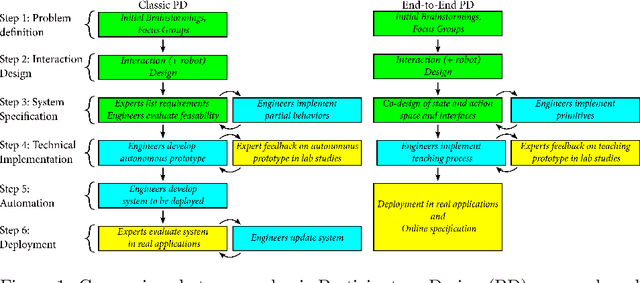

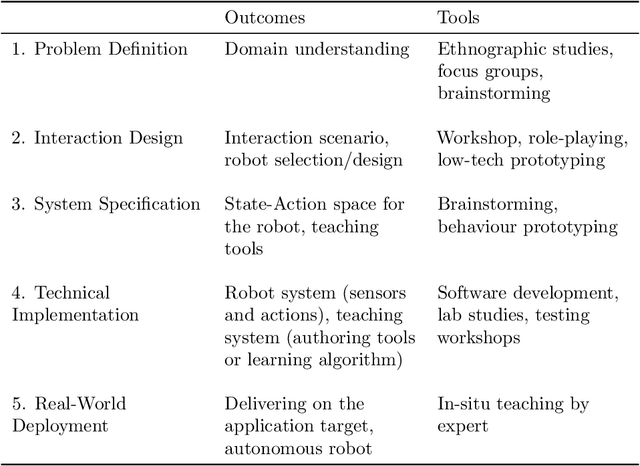

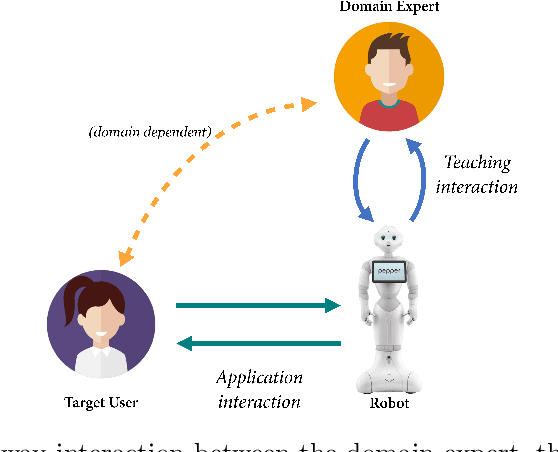

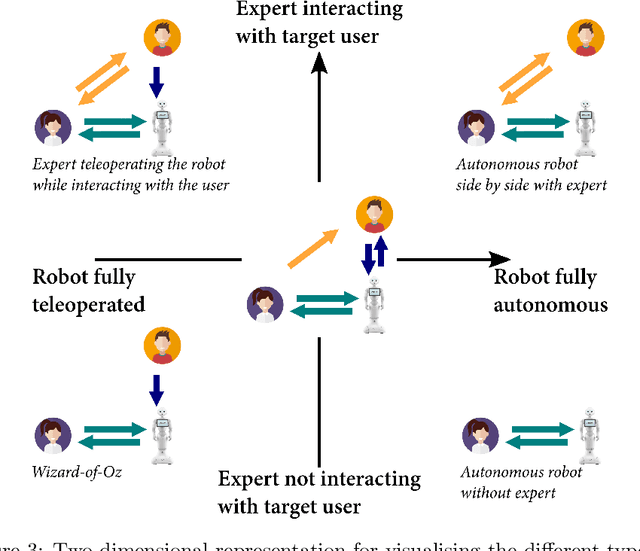

Abstract: Participatory Design (PD) in Human-Robot Interaction (HRI) typically remains limited to the early phases of development, with subsequent robot behaviours then being hardcoded by engineers or utilised in Wizard-of-Oz (WoZ) systems that rarely achieve autonomy. We present LEADOR (Led-by-Experts Automation and Design Of Robots), an end-to-end PD methodology for domain expert co-design, automation and evaluation of social robots. LEADOR starts with typical PD to co-design the interaction specifications and state and action space of the robot. It then replaces traditional offline programming or WoZ by an in-situ, online teaching phase where the domain expert can live-program or teach the robot how to behave while being embedded in the interaction context. We believe that this live teaching can be best achieved by adding a learning component to a WoZ setup, to capture experts' implicit knowledge, as they intuitively respond to the dynamics of the situation. The robot progressively learns an appropriate, expert-approved policy, ultimately leading to full autonomy, even in sensitive and/or ill-defined environments. However, LEADOR is agnostic to the exact technical approach used to facilitate this learning process. The extensive inclusion of the domain expert(s) in robot design represents established responsible innovation practice, lending credibility to the system both during the teaching phase and when operating autonomously. The combination of this expert inclusion with the focus on in-situ development also means LEADOR supports a mutual shaping approach to social robotics. We draw on two previously published, foundational works from which this (generalisable) methodology has been derived in order to demonstrate the feasibility and worth of this approach, provide concrete examples in its application and identify limitations and opportunities when applying this framework in new environments.

ROS for Human-Robot Interaction

Dec 27, 2020

Abstract: Integrating real-time, complex social signal processing into robotic systems -- especially in real-world, multi-party interaction situations -- is a challenge faced by many in the Human-Robot Interaction (HRI) community. The difficulty is compounded by the lack of any standard model for human representation that would facilitate the development and interoperability of social perception components and pipelines. We introduce in this paper a set of conventions and standard interfaces for HRI scenarios, designed to be used with the Robot Operating System (ROS). It directly aims at promoting interoperability and re-usability of core functionality between the many HRI-related software tools, from skeleton tracking, to face recognition, to natural language processing. Importantly, these interfaces are designed to be relevant to a broad range of HRI applications, from high-level crowd simulation, to group-level social interaction modelling, to detailed modelling of human kinematics. We demonstrate these interfaces by providing a reference pipeline implementation, packaged to be easily downloaded and evaluated by the community.

The Free-play Sandbox: a Methodology for the Evaluation of Social Robotics and a Dataset of Social Interactions

Dec 06, 2017

Abstract: Evaluating human-robot social interactions in a rigorous manner is notoriously difficult: studies are either conducted in labs with constrained protocols to allow for robust measurements and a degree of replicability, but at the cost of ecological validity; or in the wild, which leads to superior experimental realism, but often with limited replicability and at the expense of rigorous interaction metrics. We introduce a novel interaction paradigm, designed to elicit rich and varied social interactions while having desirable scientific properties (replicability, clear metrics, possibility of either autonomous or Wizard-of-Oz robot behaviours). This paradigm focuses on child-robot interactions, and builds on a sandboxed free-play environment. We present the rationale and design of the interaction paradigm, its methodological and technical aspects (including the open-source implementation of the software platform), as well as two large open datasets acquired with this paradigm, and meant to act as experimental baselines for future research.
