Bilge Mutlu

Robot-Assisted Group Tours for Blind People

Feb 04, 2026

Abstract:Group interactions are essential to social functioning, yet effective engagement relies on the ability to recognize and interpret visual cues, making such engagement a significant challenge for blind people. In this paper, we investigate how a mobile robot can support group interactions for blind people. We used the scenario of a guided tour with mixed-visual groups involving blind and sighted visitors. Based on insights from an interview study with blind people (n=5) and museum experts (n=5), we designed and prototyped a robotic system that supported blind visitors in joining group tours. We conducted a field study in a science museum where each blind participant (n=8) joined a group tour with one guide and two sighted participants (n=8). Findings indicated users' sense of safety from the robot's navigational support, concerns about group participation, and preferences for obtaining environmental information. We present design implications for future robotic systems to support blind people's mixed-visual group participation.

MAP: Multi-user Personalization with Collaborative LLM-powered Agents

Mar 17, 2025

Abstract:The widespread adoption of Large Language Models (LLMs) and LLM-powered agents in multi-user settings underscores the need for reliable, usable methods to accommodate diverse preferences and resolve conflicting directives. Drawing on conflict resolution theory, we introduce a user-centered workflow for multi-user personalization comprising three stages: Reflection, Analysis, and Feedback. We then present MAP -- a Multi-Agent system for multi-user Personalization -- to operationalize this workflow. By delegating subtasks to specialized agents, MAP (1) retrieves and reflects on relevant user information, while enhancing reliability through agent-to-agent interactions, (2) provides detailed analysis for improved transparency and usability, and (3) integrates user feedback to iteratively refine results. Our user study findings (n=12) highlight MAP's effectiveness and usability for conflict resolution while emphasizing the importance of user involvement in resolution verification and failure management. This work highlights the potential of multi-agent systems to implement user-centered, multi-user personalization workflows and concludes by offering insights for personalization in multi-user contexts.

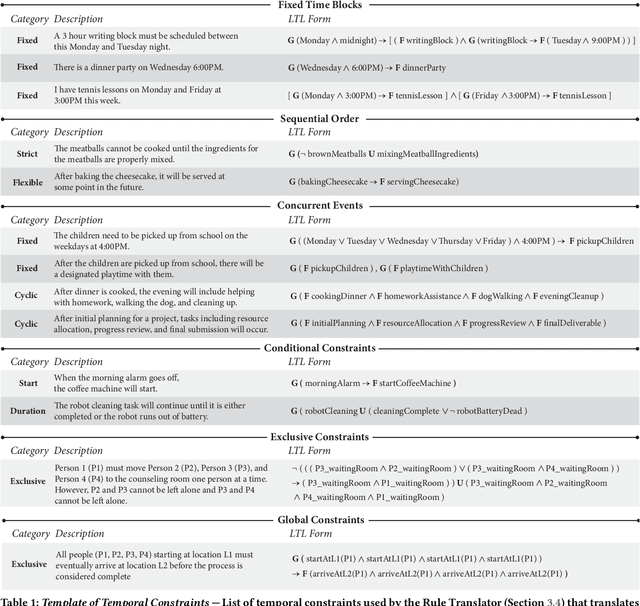

VeriPlan: Integrating Formal Verification and LLMs into End-User Planning

Feb 25, 2025

Abstract:Automated planning is traditionally the domain of experts, utilized in fields like manufacturing and healthcare with the aid of expert planning tools. Recent advancements in LLMs have made planning more accessible to everyday users due to their potential to assist users with complex planning tasks. However, LLMs face several application challenges within end-user planning, including consistency, accuracy, and user trust issues. This paper introduces VeriPlan, a system that applies formal verification techniques, specifically model checking, to enhance the reliability and flexibility of LLMs for end-user planning. In addition to the LLM planner, VeriPlan includes three additional core features -- a rule translator, flexibility sliders, and a model checker -- that engage users in the verification process. Through a user study (n=12), we evaluate VeriPlan, demonstrating improvements in the perceived quality, usability, and user satisfaction of LLMs. Our work shows the effective integration of formal verification and user-control features with LLMs for end-user planning tasks.

SET-PAiREd: Designing for Parental Involvement in Learning with an AI-Assisted Educational Robot

Feb 24, 2025

Abstract:AI-assisted learning companion robots are increasingly used in early education. Many parents express concerns about content appropriateness, while they also value how AI and robots could supplement their limited skill, time, and energy to support their children's learning. We designed a card-based kit, SET, to systematically capture scenarios that have different extents of parental involvement. We developed a prototype interface, PAiREd, with a learning companion robot to deliver LLM-generated educational content that can be reviewed and revised by parents. Parents can flexibly adjust their involvement in the activity by determining what they want the robot to help with. We conducted an in-home field study involving 20 families with children aged 3-5. Our work contributes to an empirical understanding of the level of support parents with different expectations may need from AI and robots and a prototype that demonstrates an innovative interaction paradigm for flexibly including parents in supporting their children.

Connection-Coordination Rapport (CCR) Scale: A Dual-Factor Scale to Measure Human-Robot Rapport

Jan 21, 2025

Abstract:Robots, particularly in service and companionship roles, must develop positive relationships with people they interact with regularly to be successful. These positive human-robot relationships can be characterized as establishing "rapport," which indicates mutual understanding and interpersonal connection that form the groundwork for successful long-term human-robot interaction. However, the human-robot interaction research literature lacks scale instruments to assess human-robot rapport in a variety of situations. In this work, we developed the 18-item Connection-Coordination Rapport (CCR) Scale to measure human-robot rapport. We first ran Study 1 (N = 288) where online participants rated videos of human-robot interactions using a set of candidate items. Our Study 1 results showed the discovery of two factors in our scale, which we named "Connection" and "Coordination." We then evaluated this scale by running Study 2 (N = 201) where online participants rated a new set of human-robot interaction videos with our scale and an existing rapport scale from virtual agents research for comparison. We also validated our scale by replicating a prior in-person human-robot interaction study, Study 3 (N = 44), and found that rapport is rated significantly greater when participants interacted with a responsive robot (responsive condition) as opposed to an unresponsive robot (unresponsive condition). Results from these studies demonstrate high reliability and validity for the CCR scale, which can be used to measure rapport in both first-person and third-person perspectives. We encourage the adoption of this scale in future studies to measure rapport in a variety of human-robot interactions.

Exploring the Use of Robots for Diary Studies

Jan 10, 2025

Abstract:As interest in studying in-the-wild human-robot interaction grows, there is a need for methods to collect data over time and in naturalistic or potentially private environments. HRI researchers have increasingly used the diary method for these studies, asking study participants to self-administer a structured data collection instrument, i.e., a diary, over a period of time. Although the diary method offers a unique window into settings that researchers may not have access to, it also lacks the interactivity and probing that interview-based methods offer. In this paper, we explore a novel data collection method in which a robot plays the role of an interactive diary. We developed the Diary Robot system and performed in-home deployments for a week to evaluate the feasibility and effectiveness of this approach. Using traditional text-based and audio-based diaries as benchmarks, we found that robots are able to effectively elicit the intended information. We reflect on our findings and describe scenarios where the use of robots as a data collection instrument in diary studies may be especially applicable.

Designing Telepresence Robots to Support Place Attachment

Jan 06, 2025

Abstract:People feel attached to places that are meaningful to them, which psychological research calls "place attachment." Place attachment is associated with self-identity, self-continuity, and psychological well-being. Even small cues, including videos, images, sounds, and scents, can facilitate feelings of connection and belonging to a place. Telepresence robots that allow people to see, hear, and interact with a remote place have the potential to establish and maintain a connection with places and support place attachment. In this paper, we explore the design space of robotic telepresence to promote place attachment, including how users might be guided in a remote place and whether they experience the environment individually or with others. We prototyped a telepresence robot that allows one or more remote users to visit a place and be guided by a local human guide or a conversational agent. Participants were 38 university alumni who visited their alma mater via the telepresence robot. Our findings uncovered four distinct user personas in the remote experience and highlighted the need for social participation to enhance place attachment. We generated design implications for future telepresence robot design to support people's connections with places of personal significance.

Understanding Generative AI in Robot Logic Parametrization

Nov 06, 2024

Abstract:Leveraging generative AI (for example, Large Language Models) for language understanding within robotics opens up possibilities for LLM-driven robot end-user development (EUD). Despite the numerous design opportunities it provides, little is understood about how this technology can be utilized when constructing robot program logic. In this paper, we outline the background in capturing natural language end-user intent and summarize previous use cases of LLMs within EUD. Taking the context of filmmaking as an example, we explore how a cinematography practitioner's intent to film a certain scene can be articulated using natural language, captured by an LLM, and further parametrized as low-level robot arm movement. We explore the capabilities of an LLM interpreting end-user intent and mapping natural language to predefined, cross-modal data in the process of iterative program development. We conclude by suggesting future opportunities for domain exploration beyond cinematography to support language-driven robotic camera navigation.

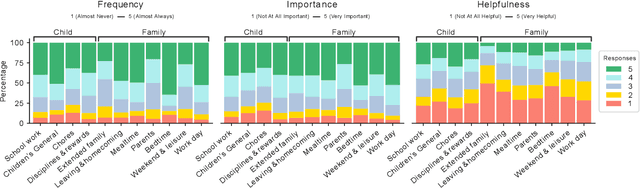

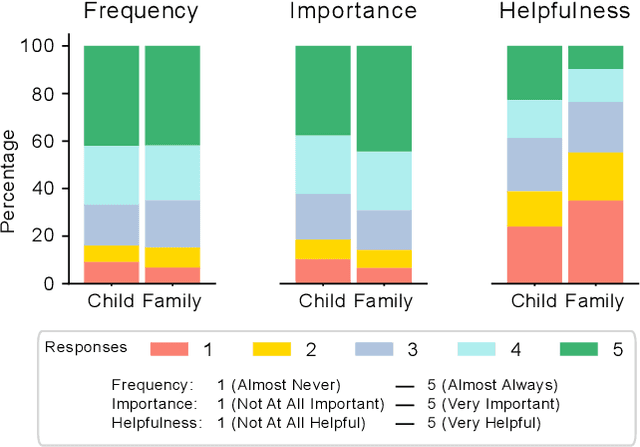

Robots in Family Routines: Development of and Initial Insights from the Family-Robot Routines Inventory

Jun 17, 2024

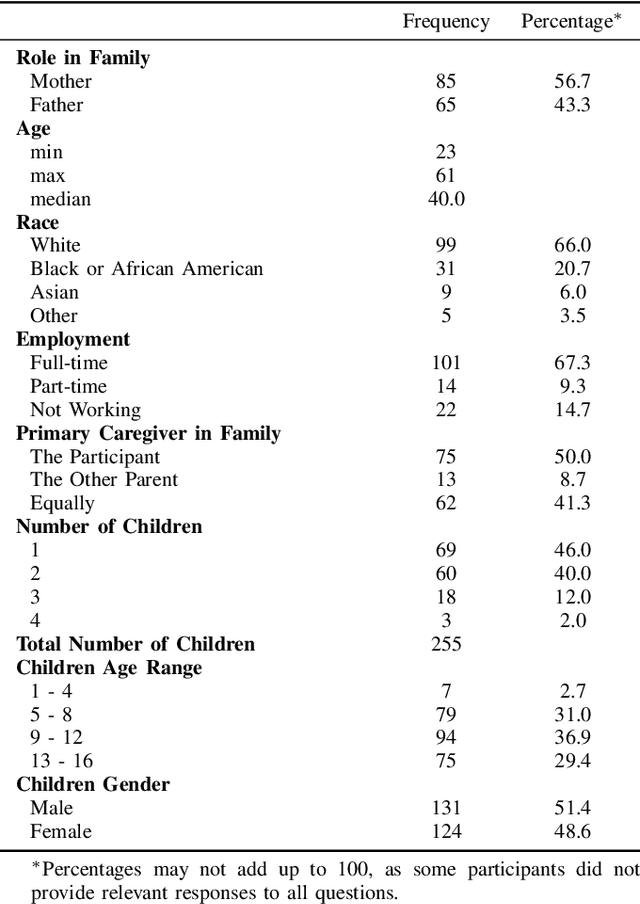

Abstract:Despite advances in areas such as the personalization of robots, sustaining adoption of robots for long-term use in families remains a challenge. Recent studies have identified integrating robots into families' routines and rituals as a promising approach to support long-term adoption. However, few studies have explored the integration of robots into family routines, and there is a gap in systematic measures to capture family preferences for robot integration. Building upon existing routine inventories, we developed the Family-Robot Routines Inventory (FRRI), with 24 family routines and 24 child routine items, to capture parents' attitudes toward and expectations from the integration of robotic technology into their family routines. Using this inventory, we collected data from 150 parents through an online survey. Our analysis indicates that parents had varying perceptions of the utility of integrating robots into their routines. For example, parents found robot integration to be more helpful in children's individual routines than in the collective routines of their families. We discuss the design implications of these preliminary findings and how they may serve as a first step toward understanding the diverse challenges and demands of designing and integrating household robots for families.

Understanding On-the-Fly End-User Robot Programming

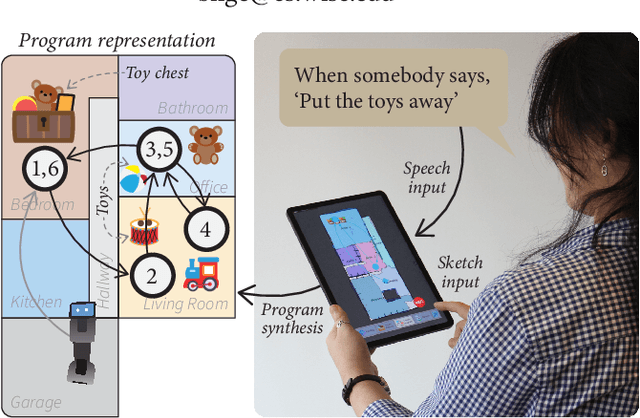

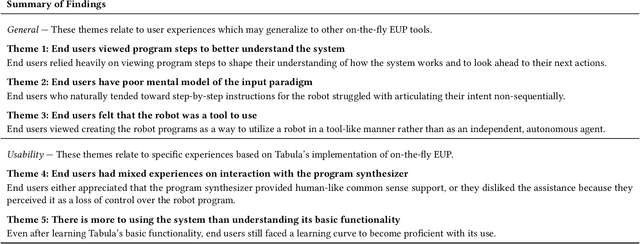

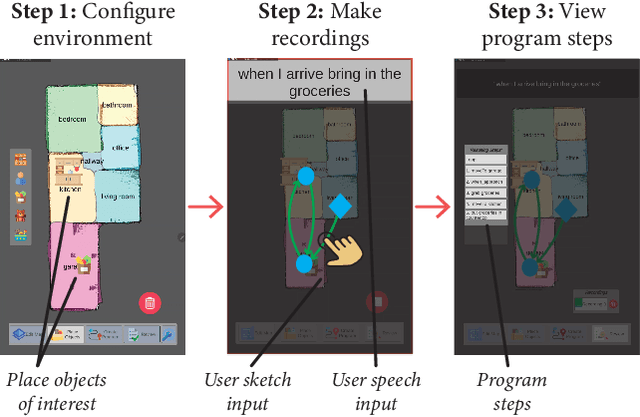

Jun 02, 2024

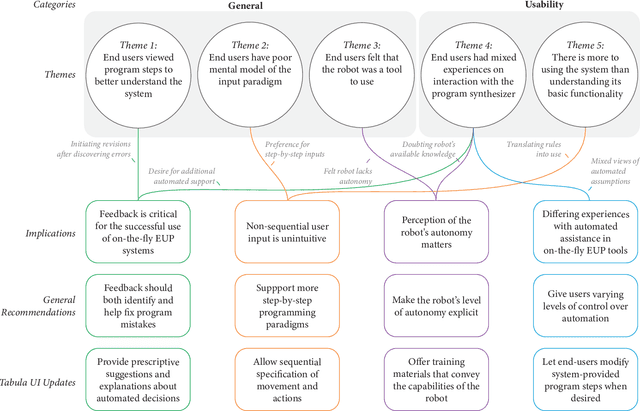

Abstract:Novel end-user programming (EUP) tools enable on-the-fly (i.e., spontaneous, easy, and rapid) creation of interactions with robotic systems. These tools are expected to empower users in determining system behavior, although very little is understood about how end users perceive, experience, and use these systems. In this paper, we seek to address this gap by investigating end-user experience with on-the-fly robot EUP. We trained 21 end users to use an existing on-the-fly EUP tool, asked them to create robot interactions for four scenarios, and assessed their overall experience. Our findings provide insight into how these systems should be designed to better support end-user experience with on-the-fly EUP, focusing on user interaction with an automatic program synthesizer that resolves imprecise user input, the use of multimodal inputs to express user intent, and the general process of programming a robot.
