Katie Winkle

"Who Should I Believe?": User Interpretation and Decision-Making When a Family Healthcare Robot Contradicts Human Memory

Jun 26, 2025

Abstract: Advancements in robotic capabilities for providing physical assistance, psychological support, and daily health management are making the deployment of intelligent healthcare robots in home environments increasingly feasible in the near future. However, challenges arise when the information provided by these robots contradicts users' memory, raising concerns about user trust and decision-making. This paper presents a study that examines how varying a robot's level of transparency and sociability influences user interpretation, decision-making and perceived trust when users are faced with conflicting information from a robot. In a 2 × 2 between-subjects online study, 176 participants watched videos of a Furhat robot acting as a family healthcare assistant and suggesting that a fictional user take medication at a different time from the one the user remembered. Results indicate that robot transparency influenced users' interpretation of information discrepancies: with a low-transparency robot, the most frequent assumption was that the user had not remembered the time correctly, while with the high-transparency robot, participants were more likely to attribute the discrepancy to external factors, such as a partner or another household member modifying the robot's information. Additionally, participants exhibited a tendency toward overtrust, often prioritizing the robot's recommendations over the user's memory, even when suspecting system malfunctions or third-party interference. These findings highlight the impact of transparency mechanisms in robotic systems, the complexity and importance of system access control for multi-user robots deployed in home environments, and the potential risks of users' over-reliance on robots in sensitive domains such as healthcare.

Ice-Breakers, Turn-Takers and Fun-Makers: Exploring Robots for Groups with Teenagers

Apr 09, 2025

Abstract: Successful, enjoyable group interactions are important in public and personal contexts, especially for teenagers, whose peer groups are important for self-identity and self-esteem. Social robots seemingly have the potential to positively shape group interactions, but it seems difficult to achieve such an impact by designing robot behaviors solely on the basis of related (human interaction) literature. In this article, we take a user-centered approach to explore how teenagers envisage a social robot "group assistant". We engaged 16 teenagers in focus groups, interviews, and robot testing to capture their views and reflections about robots for groups. Over the course of a two-week summer school, participants co-designed the action space for such a robot and spent 10+ hours working with and wizarding it. This experience further altered and deepened their insights into using robots as group assistants. We report results regarding teenagers' views on the applicability and use of a robot group assistant, how these expectations evolved throughout the study, and their repeat interactions with the robot. Our results indicate that each group moves along a spectrum of need for the robot, which is reflected in the group using the robot more (or less) for ice-breaking, turn-taking, and fun-making as the situation demanded.

Visualising Policy-Reward Interplay to Inform Zeroth-Order Preference Optimisation of Large Language Models

Mar 05, 2025

Abstract: Fine-tuning LLMs with first-order methods like back-propagation is computationally intensive. Zeroth-Order (ZO) optimisation, using function evaluations instead of gradients, reduces memory usage but suffers from slow convergence in high-dimensional models. As a result, ZO research in LLMs has mostly focused on classification, overlooking more complex generative tasks. In this paper, we introduce ZOPrO, a novel ZO algorithm designed for Preference Optimisation in LLMs. We begin by analysing the interplay between policy and reward models during traditional (first-order) Preference Optimisation, uncovering patterns in their relative updates. Guided by these insights, we adapt Simultaneous Perturbation Stochastic Approximation (SPSA) with a targeted sampling strategy to accelerate convergence. Through experiments on summarisation, machine translation, and conversational assistants, we demonstrate that our method consistently enhances reward signals while achieving convergence times comparable to first-order methods. While it falls short of some state-of-the-art methods, our work is the first to apply Zeroth-Order methods to Preference Optimisation in LLMs, going beyond classification tasks and paving the way for a largely unexplored research direction. Code and visualisations are available at https://github.com/alessioGalatolo/VisZOPrO
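
The abstract names SPSA as the starting point for ZOPrO. As a rough illustration only, the sketch below shows plain two-point SPSA with random ±1 (Rademacher) perturbations: two evaluations of the objective give a gradient estimate for every parameter at once, so no back-propagation is needed. It does not reproduce ZOPrO's targeted sampling strategy or its policy/reward setup, which the abstract does not detail. The function names `spsa_gradient` and `spsa_step`, the step sizes, and the toy quadratic objective (standing in for a loss derived from a reward signal) are all hypothetical.

```python
# Hypothetical illustration of generic two-point SPSA; not the ZOPrO implementation.
import random
from typing import Callable, List, Optional

Vector = List[float]


def spsa_gradient(
    f: Callable[[Vector], float],
    theta: Vector,
    c: float = 1e-3,
    rng: Optional[random.Random] = None,
) -> Vector:
    """Two-point SPSA estimate of the gradient of f at theta.

    Only two evaluations of f are needed, whatever the dimension of theta.
    """
    if rng is None:
        rng = random.Random()
    # Rademacher perturbation: every coordinate is +1 or -1 with equal probability.
    delta = [rng.choice((-1.0, 1.0)) for _ in theta]
    theta_plus = [t + c * d for t, d in zip(theta, delta)]
    theta_minus = [t - c * d for t, d in zip(theta, delta)]
    # Central finite difference along the single random direction delta.
    slope = (f(theta_plus) - f(theta_minus)) / (2.0 * c)
    # Standard SPSA estimate: g_i = slope / delta_i.
    return [slope / d for d in delta]


def spsa_step(
    f: Callable[[Vector], float],
    theta: Vector,
    lr: float = 0.05,
    c: float = 1e-3,
    rng: Optional[random.Random] = None,
) -> Vector:
    """One descent step that uses the SPSA estimate in place of a true gradient."""
    g = spsa_gradient(f, theta, c, rng)
    return [t - lr * gi for t, gi in zip(theta, g)]


if __name__ == "__main__":
    # Toy objective (hypothetical stand-in for a loss); its true gradient is 2 * theta.
    def quadratic(x: Vector) -> float:
        return sum(xi * xi for xi in x)

    rng = random.Random(0)
    theta = [1.0, -2.0, 0.5]
    for _ in range(300):
        theta = spsa_step(quadratic, theta, rng=rng)
    print(quadratic(theta))
```

Running the block should print a value very close to zero. Each individual estimate is noisy, but it is unbiased for smooth objectives like this one, which is why averaging over many steps still drives the parameters toward the minimum; the slow convergence this noise causes in high-dimensional models is the problem the abstract says ZOPrO's targeted sampling is meant to address.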

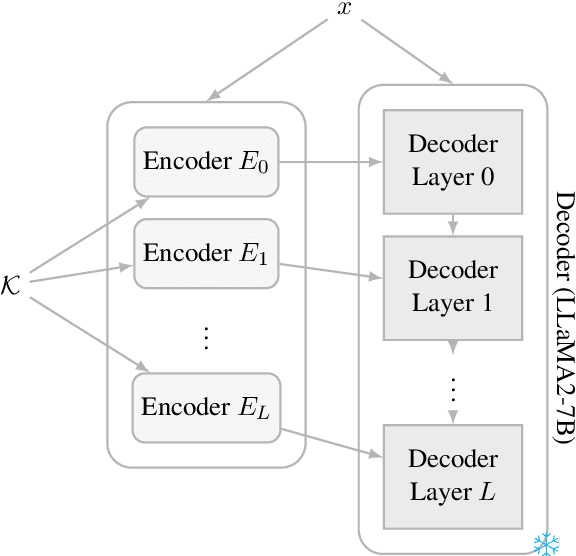

DIEKAE: Difference Injection for Efficient Knowledge Augmentation and Editing of Large Language Models

Jun 15, 2024

Abstract: Pretrained Language Models (PLMs) store extensive knowledge within their weights, enabling them to recall a vast amount of information. However, relying on this parametric knowledge brings some limitations, such as outdated information or gaps in the training data. This work addresses these problems by distinguishing between two separate solutions: knowledge editing and knowledge augmentation. We introduce Difference Injection for Efficient Knowledge Augmentation and Editing (DIEKÆ), a new method that decouples knowledge processing from the PLM (LLaMA2-7B, in particular) by adopting a series of encoders. These encoders handle external knowledge and inject it into the PLM layers, significantly reducing computational costs and improving the PLM's performance. We propose a novel training technique for these encoders that does not require back-propagation through the PLM, thus greatly reducing the memory and time required to train them. Our findings demonstrate that our method is faster and more efficient than multiple baselines in knowledge augmentation and editing during both training and inference. We have released our code and data at https://github.com/alessioGalatolo/DIEKAE.

Love, Joy, and Autism Robots: A Metareview and Provocatype

Mar 08, 2024

Abstract: Previous work has observed how Neurodivergence is often harmfully pathologized in Human-Computer Interaction (HCI) and Human-Robot Interaction (HRI) research. We conduct a review of autism robot reviews and find that the dominant research direction is Autistic people's second-lowest (24 of 25) research priority: interventions and treatments purporting to 'help' neurodivergent individuals to conform to neurotypical social norms, become better behaved, improve social and emotional skills, and otherwise 'fix' us, while rarely prioritizing the internal experiences that might lead to such differences. Furthermore, a growing body of evidence indicates that many of the most popular current approaches risk inflicting lasting trauma and damage on Autistic people. We draw on the principles and findings of the latest Autism research, Feminist HRI, and Robotics to imagine a role reversal, analyze the implications, and then conclude with actionable guidance on Autistic-led scientific methods and research directions.

Enactive Artificial Intelligence: Subverting Gender Norms in Robot-Human Interaction

Jan 24, 2023

Abstract: Enactive Artificial Intelligence (eAI) motivates new directions towards gender-inclusive AI. Beyond being a mirror reflecting our values, AI design has a profound impact on shaping the enaction of cultural identities. The traditionally dominant narratives, which are unrepresentative, white, cisgender and heterosexual, are partial and thereby active vehicles of social marginalisation. Drawing from enactivism, the paper first characterises AI design as a cultural practice, which is then specified in feminist technoscience principles, i.e., how gender and other embodied identity markers are entangled in AI. These principles are then discussed in the specific case of feminist human-robot interaction. The paper then stipulates the conditions for eAI: an eAI robot is a robot that (1) plays a cultural role in individual and social identity, (2) enacts this role through dynamical human-robot interaction, and (3) interacts in an embodied way. Finally, drawing from eAI, the paper offers guidelines for (I) eAI gender-inclusive AI and (II) subverting existing gender norms of robot design.

PD/EUP Workshop Proceedings

Jul 15, 2022

Abstract: People who need robots are often not the same as people who can program them. This key observation in human-robot interaction (HRI) has led to a number of challenges when developing robotic applications, since developers must understand the exact needs of end users. Participatory Design (PD), the process of including stakeholders such as end users early in the robot design process, has been used with noteworthy success in HRI but typically remains limited to the early phases of development. The resulting robot behaviors are then often hardcoded by engineers or utilized in Wizard-of-Oz (WoZ) systems that rarely achieve autonomy. End-User Programming (EUP), i.e., the study of tools that allow end users with limited computer knowledge to program systems, has been widely applied to the design of robot behaviors for interaction with humans, but these tools risk being used solely as research demonstrations that exist only for as long as it takes to evaluate and publish them. In the PD/EUP Workshop, we aim to facilitate mutual learning between these communities and to create communication opportunities that could help the larger HRI community work towards end-user personalized and adaptable interactions. Both PD and EUP will be key requirements if we want robots to be useful for wider society. From this workshop, we expect new collaboration opportunities to emerge, and we aim to formalize new methodologies that integrate PD and EUP approaches.

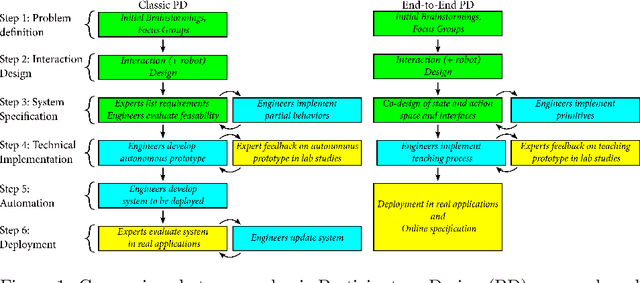

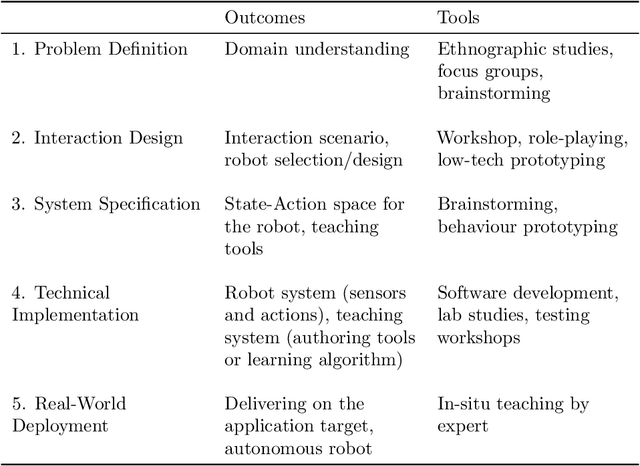

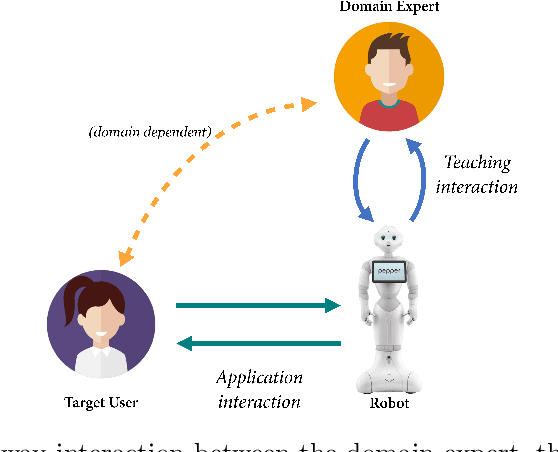

LEADOR: A Method for End-to-End Participatory Design of Autonomous Social Robots

May 14, 2021

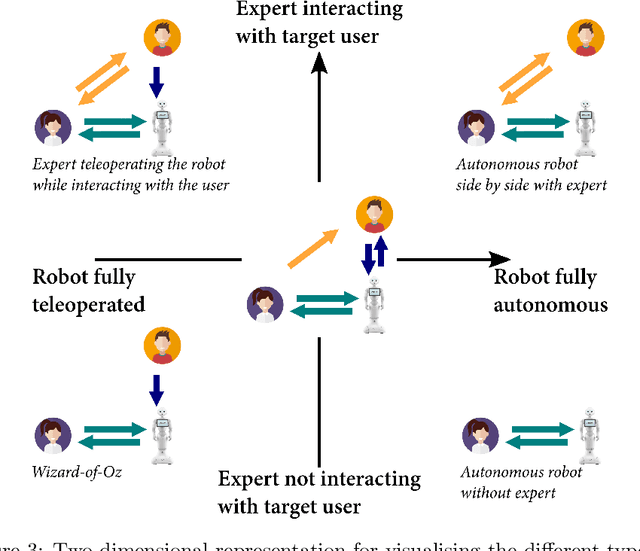

Abstract: Participatory Design (PD) in Human-Robot Interaction (HRI) typically remains limited to the early phases of development, with subsequent robot behaviours then being hardcoded by engineers or utilised in Wizard-of-Oz (WoZ) systems that rarely achieve autonomy. We present LEADOR (Led-by-Experts Automation and Design Of Robots), an end-to-end PD methodology for domain expert co-design, automation and evaluation of social robots. LEADOR starts with typical PD to co-design the interaction specifications and the state and action space of the robot. It then replaces traditional offline programming or WoZ with an in-situ, online teaching phase in which the domain expert can live-program or teach the robot how to behave while being embedded in the interaction context. We believe that this live teaching can be best achieved by adding a learning component to a WoZ setup, to capture experts' implicit knowledge as they intuitively respond to the dynamics of the situation. The robot progressively learns an appropriate, expert-approved policy, ultimately leading to full autonomy, even in sensitive and/or ill-defined environments. However, LEADOR is agnostic to the exact technical approach used to facilitate this learning process. The extensive inclusion of the domain expert(s) in robot design represents established responsible innovation practice, lending credibility to the system both during the teaching phase and when operating autonomously. The combination of this expert inclusion with the focus on in-situ development also means LEADOR supports a mutual shaping approach to social robotics. We draw on two previously published, foundational works, from which this (generalisable) methodology was derived, to demonstrate the feasibility and worth of this approach, provide concrete examples of its application, and identify limitations and opportunities when applying this framework in new environments.

RoboTed: a case study in Ethical Risk Assessment

Jul 31, 2020

Abstract: Risk Assessment is a well-known and powerful method for discovering and mitigating risks, and hence improving safety. Ethical Risk Assessment uses the same approach but extends the envelope of risk to cover ethical risks in addition to safety risks. In this paper we outline Ethical Risk Assessment (ERA) and set ERA within the broader framework of Responsible Robotics. We then illustrate ERA with a case study of a hypothetical smart robot toy teddy bear: RoboTed. The case study shows the value of ERA and how consideration of ethical risks can prompt design changes, resulting in a more ethical and sustainable robot.

Robot Accident Investigation: a case study in Responsible Robotics

May 15, 2020

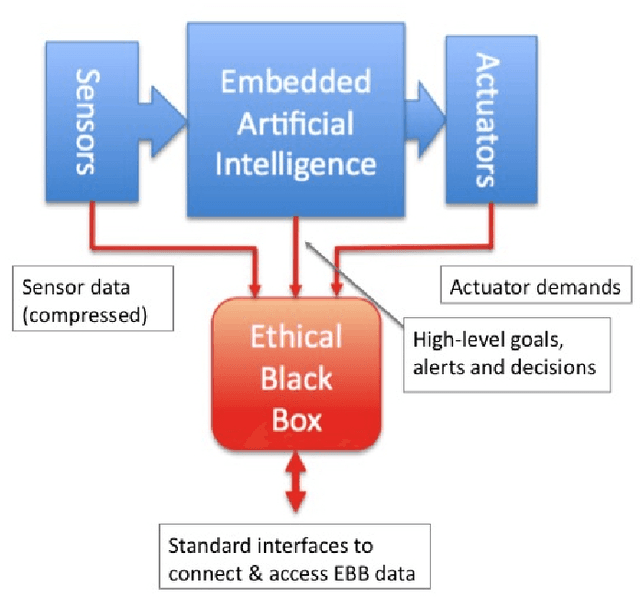

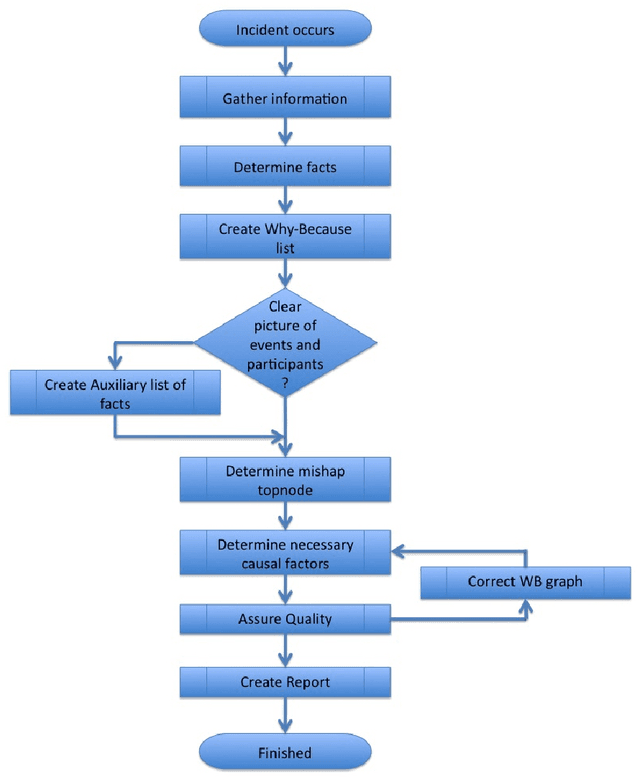

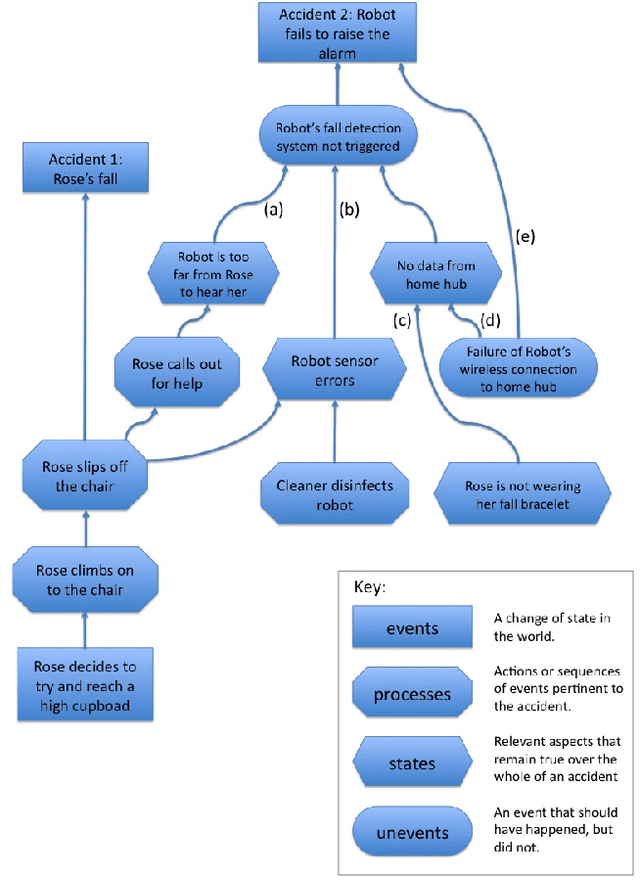

Abstract: Robot accidents are inevitable. Although rare, they have been happening since assembly-line robots were first introduced in the 1960s. But a new generation of social robots is now becoming commonplace. Often equipped with sophisticated embedded artificial intelligence (AI), social robots might be deployed as care robots to assist elderly or disabled people to live independently. Smart robot toys offer a compelling interactive play experience for children, and increasingly capable autonomous vehicles (AVs) offer the promise of hands-free personal transport and fully autonomous taxis. Unlike industrial robots, which are deployed in safety cages, social robots are designed to operate in human environments and interact closely with humans; the likelihood of robot accidents is therefore much greater for social robots than for industrial robots. This paper sets out a draft framework for social robot accident investigation, a framework that proposes both the technology and the processes that would allow social robot accidents to be investigated with no less rigour than we expect of air or rail accident investigations. The paper also places accident investigation within the practice of responsible robotics and makes the case that social robotics without accident investigation would be no less irresponsible than aviation without air accident investigation.
