Giulia Belgiovine

Ice-Breakers, Turn-Takers and Fun-Makers: Exploring Robots for Groups with Teenagers

Apr 09, 2025

Abstract: Successful, enjoyable group interactions are important in public and personal contexts, especially for teenagers whose peer groups are important for self-identity and self-esteem. Social robots seemingly have the potential to positively shape group interactions, but it seems difficult to effect such impact by designing robot behaviors solely based on related (human interaction) literature. In this article, we take a user-centered approach to explore how teenagers envisage a social robot "group assistant". We engaged 16 teenagers in focus groups, interviews, and robot testing to capture their views and reflections about robots for groups. Over the course of a two-week summer school, participants co-designed the action space for such a robot and experienced working with/wizarding it for 10+ hours. This experience further altered and deepened their insights into using robots as group assistants. We report results regarding teenagers' views on the applicability and use of a robot group assistant, how these expectations evolved throughout the study, and their repeat interactions with the robot. Our results indicate that each group moves on a spectrum of need for the robot, reflected in use of the robot more (or less) for ice-breaking, turn-taking, and fun-making as the situation demanded.

Building Knowledge from Interactions: An LLM-Based Architecture for Adaptive Tutoring and Social Reasoning

Apr 02, 2025

Abstract: Integrating robotics into everyday scenarios like tutoring or physical training requires robots capable of adaptive, socially engaging, and goal-oriented interactions. While Large Language Models show promise in human-like communication, their standalone use is hindered by memory constraints and contextual incoherence. This work presents a multimodal, cognitively inspired framework that enhances LLM-based autonomous decision-making in social and task-oriented Human-Robot Interaction. Specifically, we develop an LLM-based agent for a robot trainer, balancing social conversation with task guidance and goal-driven motivation. To further enhance autonomy and personalization, we introduce a memory system for selecting, storing and retrieving experiences, facilitating generalized reasoning based on knowledge built across different interactions. A preliminary HRI user study and offline experiments with a synthetic dataset validate our approach, demonstrating the system's ability to manage complex interactions, autonomously drive training tasks, and build and retrieve contextual memories, advancing socially intelligent robotics.

A Roadmap for Embodied and Social Grounding in LLMs

Sep 25, 2024

Abstract: The fusion of Large Language Models (LLMs) and robotic systems has led to a transformative paradigm in the robotic field, offering unparalleled capabilities not only in the communication domain but also in skills like multimodal input handling, high-level reasoning, and plan generation. The grounding of LLMs' knowledge into the empirical world has been considered a crucial pathway to exploit the efficiency of LLMs in robotics. Nevertheless, connecting LLMs' representations to the external world with multimodal approaches or with robots' bodies is not enough to let them understand the meaning of the language they are manipulating. Taking inspiration from humans, this work draws attention to three necessary elements for an agent to grasp and experience the world. The roadmap for LLMs grounding is envisaged in an active bodily system as the reference point for experiencing the environment, a temporally structured experience for a coherent, self-related interaction with the external world, and social skills to acquire a common-grounded shared experience.

Robots Can Multitask Too: Integrating a Memory Architecture and LLMs for Enhanced Cross-Task Robot Action Generation

Jul 18, 2024

Abstract: Large Language Models (LLMs) have recently been used in robot applications for grounding LLM common-sense reasoning with the robot's perception and physical abilities. In humanoid robots, memory also plays a critical role in fostering real-world embodiment and facilitating long-term interactive capabilities, especially in multi-task setups where the robot must remember previous task states, environment states, and executed actions. In this paper, we address incorporating memory processes with LLMs for generating cross-task robot actions, while the robot effectively switches between tasks. Our proposed dual-layered architecture features two LLMs, utilizing their complementary skills of reasoning and following instructions, combined with a memory model inspired by human cognition. Our results show a significant improvement in performance over a baseline of five robotic tasks, demonstrating the potential of integrating memory with LLMs for combining the robot's action and perception for adaptive task execution.

"iCub, We Forgive You!" Investigating Trust in a Game Scenario with Kids

Sep 04, 2022

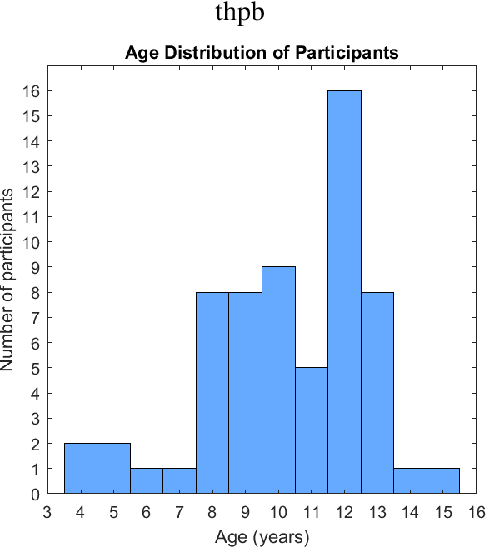

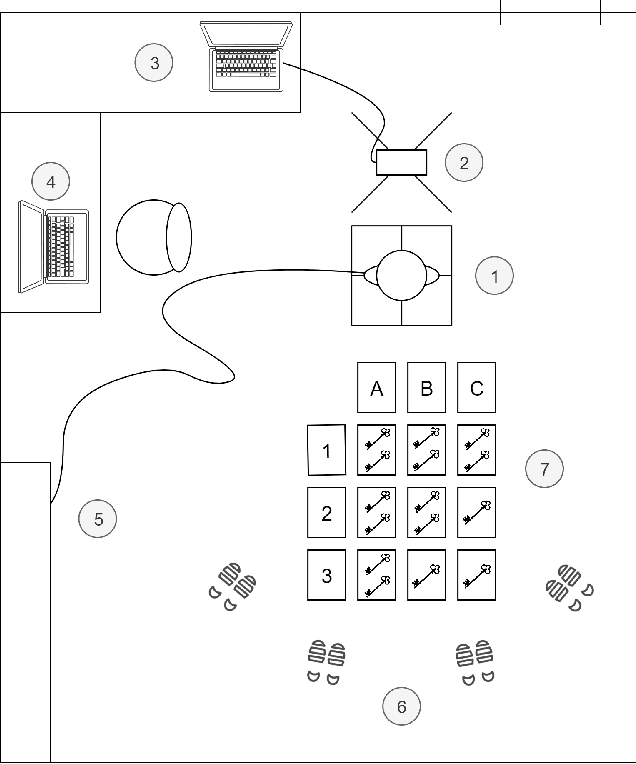

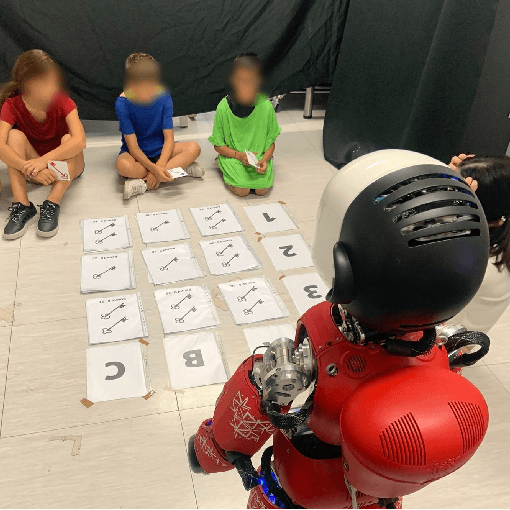

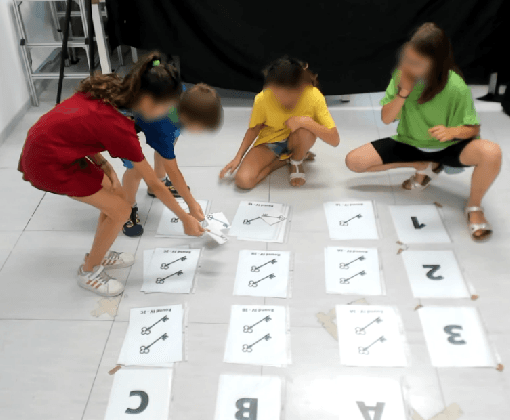

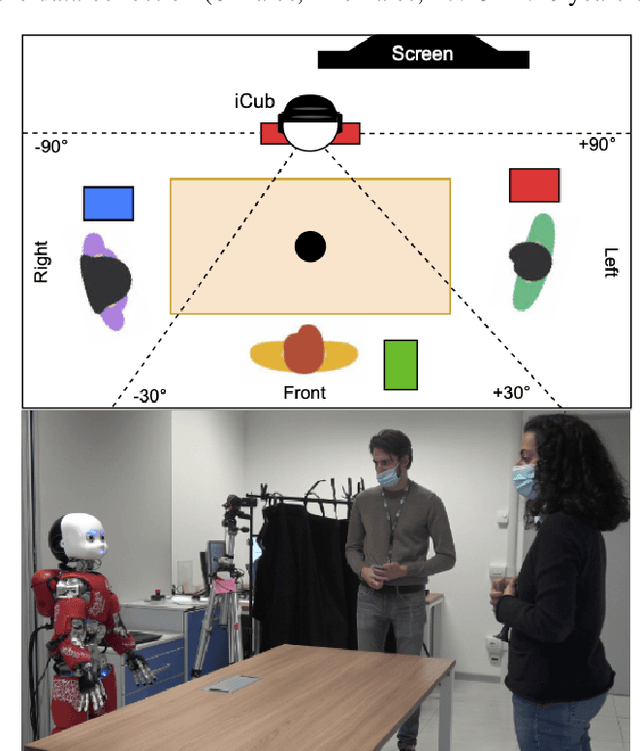

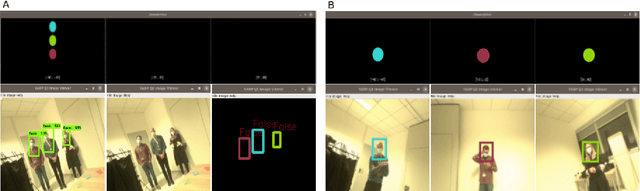

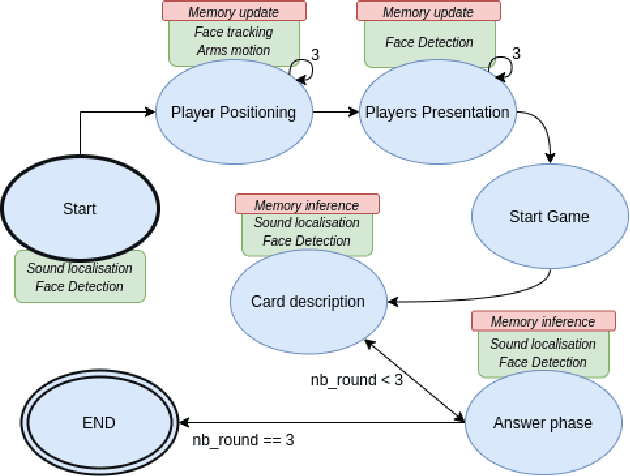

Abstract: This study presents novel strategies to investigate the mutual influence of trust and group dynamics in child-robot interaction. We implemented a game-like experimental activity with the humanoid robot iCub and designed a questionnaire to assess how the children perceived the interaction. We also aim to verify if the sensors, setups, and tasks are suitable for studying such aspects. The questionnaires' results demonstrate that youths perceive iCub as a friend and, typically, in a positive way. Other preliminary results suggest that, generally, children trusted iCub during the activity and, after its mistakes, they tried to reassure it with sentences such as: "Don't worry iCub, we forgive you". Furthermore, trust towards the robot in group cognitive activity appears to change according to gender: after two consecutive mistakes by the robot, girls tended to trust iCub more than boys. Finally, no significant difference was found between different age groups across points computed from the game and the self-reported scales. The tool we proposed is suitable for studying trust in human-robot interaction (HRI) across different ages and seems appropriate to understand the mechanism of trust in group interactions.

Cognitive architecture aided by working-memory for self-supervised multi-modal humans recognition

Mar 16, 2021

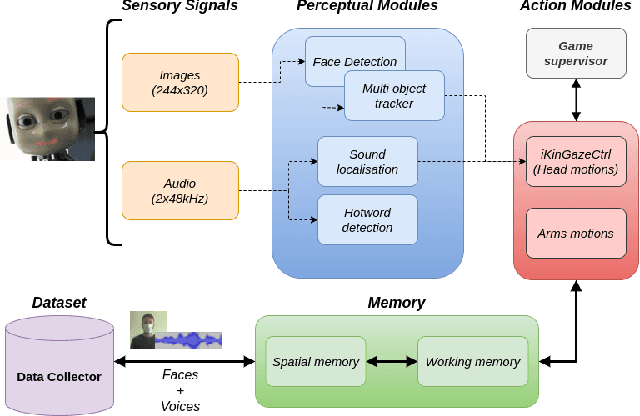

Abstract: The ability to recognize human partners is an important social skill to build personalized and long-term human-robot interactions, especially in scenarios like education, care-giving, and rehabilitation. Faces and voices constitute two important sources of information to enable artificial systems to reliably recognize individuals. Deep learning networks have achieved state-of-the-art results and have proven to be suitable tools to address such a task. However, when those networks are applied to different and unprecedented scenarios not included in the training set, they can suffer a drop in performance. For example, with robotic platforms in ever-changing and realistic environments, where new sensory evidence is constantly acquired, the performance of those models degrades. One solution is to make robots learn from their first-hand sensory data with self-supervision. This allows coping with the inherent variability of the data gathered in realistic and interactive contexts. To this aim, we propose a cognitive architecture integrating low-level perceptual processes with a spatial working memory mechanism. The architecture autonomously organizes the robot's sensory experience into a structured dataset suitable for human recognition. Our results demonstrate the effectiveness of our architecture and show that it is a promising solution in the quest to make robots more autonomous in their learning process.

A Humanoid Social Agent Embodying Physical Assistance Enhances Motor Training Experience

Jul 12, 2020

Abstract: Skilled motor behavior is critical in many human daily life activities and professions. The design of robots that can effectively teach motor skills is an important challenge in the robotics field. In particular, it is important to understand whether involving a robot that exhibits social behaviors in the training affects the learning and the experience of the human pupils. In this study, we addressed this question by asking participants to learn a complex task - stabilizing an inverted pendulum - by training with physical assistance provided by a robotic manipulandum, the Wristbot. One group of participants performed the training only using the Wristbot, whereas for another group the same physical assistance was attributed to the humanoid robot iCub, which played the role of an expert trainer and also exhibited some social behaviors. The results show that participants of both groups effectively acquired the skill by leveraging the physical assistance, as they significantly improved their stabilization performance even when the assistance was removed. Moreover, learning in a context of interaction with a humanoid robot assistant led subjects to increased motivation and a more enjoyable training experience, without negative effects on attention and perceived effort. With the experimental approach presented in this study, it is possible to investigate the relative contribution of haptic and social signals in the context of motor learning mediated by human-robot interaction, with the aim of developing effective robot trainers.
