Carlo Mazzola

Examining the legibility of humanoid robot arm movements in a pointing task

Aug 07, 2025

Abstract: Human-robot interaction requires robots whose actions are legible, allowing humans to interpret, predict, and feel safe around them. This study investigates the legibility of humanoid robot arm movements in a pointing task, aiming to understand how humans predict robot intentions from truncated movements and bodily cues. We designed an experiment using the NICO humanoid robot, where participants observed its arm movements towards targets on a touchscreen. Robot cues varied across conditions: gaze, pointing, and pointing with congruent or incongruent gaze. Arm trajectories were stopped at 60% or 80% of their full length, and participants predicted the final target. We tested the multimodal superiority and ocular primacy hypotheses, both of which were supported by the experiment.

A Roadmap for Embodied and Social Grounding in LLMs

Sep 25, 2024

Abstract: The fusion of Large Language Models (LLMs) and robotic systems has led to a transformative paradigm in the robotic field, offering unparalleled capabilities not only in the communication domain but also in skills like multimodal input handling, high-level reasoning, and plan generation. The grounding of LLMs' knowledge into the empirical world has been considered a crucial pathway to exploit the efficiency of LLMs in robotics. Nevertheless, connecting LLMs' representations to the external world with multimodal approaches or with robots' bodies is not enough to let them understand the meaning of the language they are manipulating. Taking inspiration from humans, this work draws attention to three necessary elements for an agent to grasp and experience the world. The roadmap for LLMs grounding is envisaged in an active bodily system as the reference point for experiencing the environment, a temporally structured experience for a coherent, self-related interaction with the external world, and social skills to acquire a common-grounded shared experience.

Robots Can Multitask Too: Integrating a Memory Architecture and LLMs for Enhanced Cross-Task Robot Action Generation

Jul 18, 2024

Abstract: Large Language Models (LLMs) have been recently used in robot applications for grounding LLM common-sense reasoning with the robot's perception and physical abilities. In humanoid robots, memory also plays a critical role in fostering real-world embodiment and facilitating long-term interactive capabilities, especially in multi-task setups where the robot must remember previous task states, environment states, and executed actions. In this paper, we address incorporating memory processes with LLMs for generating cross-task robot actions, while the robot effectively switches between tasks. Our proposed dual-layered architecture features two LLMs, utilizing their complementary skills of reasoning and following instructions, combined with a memory model inspired by human cognition. Our results show a significant improvement in performance over a baseline of five robotic tasks, demonstrating the potential of integrating memory with LLMs for combining the robot's action and perception for adaptive task execution.

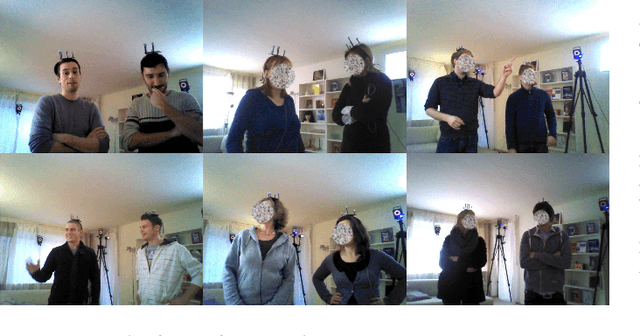

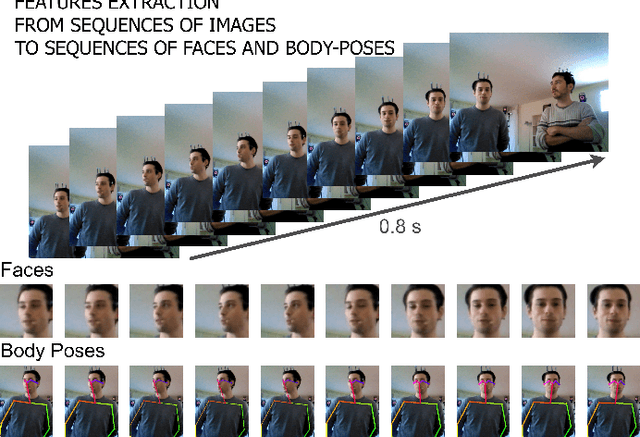

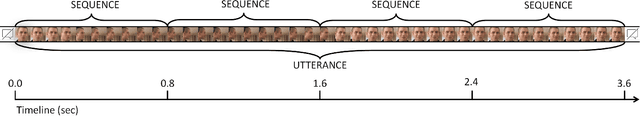

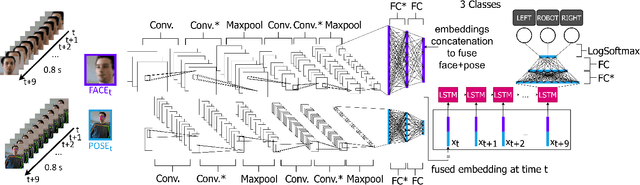

Real-time Addressee Estimation: Deployment of a Deep-Learning Model on the iCub Robot

Nov 09, 2023

Abstract: Addressee Estimation is the ability to understand to whom a person is talking, a skill essential for social robots to interact smoothly with humans. In this sense, it is one of the problems that must be tackled to develop effective conversational agents in multi-party and unstructured scenarios. As humans, one of the channels that mainly lead us to such estimation is the non-verbal behavior of speakers: first of all, their gaze and body pose. Inspired by human perceptual skills, in the present work, a deep-learning model for Addressee Estimation relying on these two non-verbal features is designed, trained, and deployed on an iCub robot. The study presents the procedure of such implementation and the performance of the model deployed in real-time human-robot interaction compared to previous tests on the dataset used for the training.

To Whom are You Talking? A Deep Learning Model to Endow Social Robots with Addressee Estimation Skills

Aug 21, 2023

Abstract: Communicating shapes our social world. For a robot to be considered social and consequently be integrated in our social environment, it is fundamental that it understands some of the dynamics that rule human-human communication. In this work, we tackle the problem of Addressee Estimation, the ability to understand an utterance's addressee, by interpreting and exploiting non-verbal bodily cues from the speaker. We do so by implementing a hybrid deep learning model composed of convolutional layers and LSTM cells taking as input images portraying the face of the speaker and 2D vectors of the speaker's body posture. Our implementation choices were guided by the aim to develop a model that could be deployed on social robots and be efficient in ecological scenarios. We demonstrate that our model is able to solve the Addressee Estimation problem in terms of addressee localisation in space, from a robot ego-centric point of view.

* Accepted version of a paper published at 2023 International Joint Conference on Neural Networks (IJCNN). Please find the published version and info to cite the paper at https://doi.org/10.1109/IJCNN54540.2023.10191452 . 10 pages, 8 Figures, 3 Tables

Shared perception is different from individual perception: a new look on context dependency

Jul 14, 2022

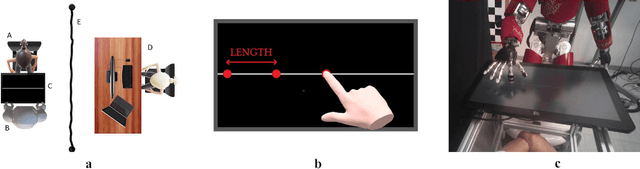

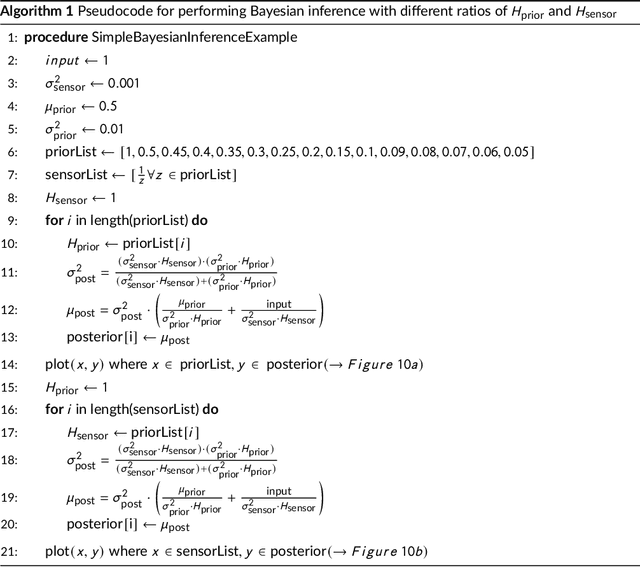

Abstract: Human perception is based on unconscious inference, where sensory input integrates with prior information. This phenomenon, known as context dependency, helps in facing the uncertainty of the external world with predictions built upon previous experience. On the other hand, human perceptual processes are inherently shaped by social interactions. However, how the mechanisms of context dependency are affected is to date unknown. If using previous experience (priors) is beneficial in individual settings, it could represent a problem in social scenarios where other agents might not have the same priors, causing a perceptual misalignment on the shared environment. The present study addresses this question. We studied context dependency in an interactive setting with the humanoid robot iCub, which acted as a stimuli demonstrator. Participants reproduced the lengths shown by the robot in two conditions: one with iCub behaving socially and another with iCub acting as a mechanical arm. The different behavior of the robot significantly affected the use of priors in perception. Moreover, the social robot positively impacted perceptual performance by enhancing accuracy and reducing participants' overall perceptual errors. Finally, the observed phenomenon has been modelled following a Bayesian approach to deepen and explore a new concept of shared perception.

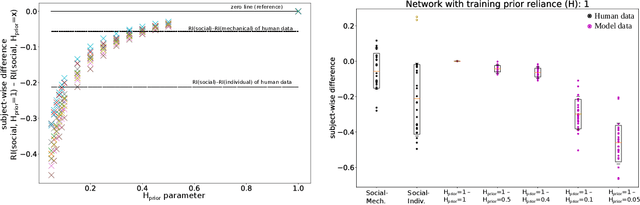

The world seems different in a social context: a neural network analysis of human experimental data

Mar 03, 2022

Abstract: Human perception and behavior are affected by the situational context, in particular during social interactions. A recent study demonstrated that humans perceive visual stimuli differently depending on whether they do the task by themselves or together with a robot. Specifically, it was found that the central tendency effect is stronger in social than in non-social task settings. The particular nature of such behavioral changes induced by social interaction, and their underlying cognitive processes in the human brain are, however, still not well understood. In this paper, we address this question by training an artificial neural network inspired by the predictive coding theory on the above behavioral data set. Using this computational model, we investigate whether the change in behavior that was caused by the situational context in the human experiment could be explained by continuous modifications of a parameter expressing how strongly sensory and prior information affect perception. We demonstrate that it is possible to replicate human behavioral data in both individual and social task settings by modifying the precision of prior and sensory signals, indicating that social and non-social task settings might in fact exist on a continuum. At the same time, an analysis of the neural activation traces of the trained networks provides evidence that information is coded in fundamentally different ways in the network in the individual and in the social conditions. Our results emphasize the importance of computational replications of behavioral data for generating hypotheses on the underlying cognitive mechanisms of shared perception and may provide inspiration for follow-up studies in the field of neuroscience.
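
As a rough illustration of what "modifying the precision of prior and sensory signals" can mean, the textbook Gaussian case is sketched here. It is not necessarily the network model used in the paper, and the symbols are assumptions introduced for this note: $x$ is the sensory observation with precision $\pi_s$, and the prior has mean $\mu_p$ and precision $\pi_p$. The resulting estimate is the precision-weighted average:

$$
\hat{x} \;=\; \frac{\pi_s\, x + \pi_p\, \mu_p}{\pi_s + \pi_p} \;=\; x + \frac{\pi_p}{\pi_s + \pi_p}\,(\mu_p - x)
$$

Under these assumptions, raising $\pi_p$ relative to $\pi_s$ pulls the estimate toward the prior mean, which corresponds to a stronger central tendency effect; varying the ratio continuously moves the estimate smoothly between the observation and the prior mean.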
