Marta Romeo

Attributes-aware Visual Emotion Representation Learning

Apr 09, 2025

Abstract:Visual emotion analysis or recognition has gained considerable attention due to the growing interest in understanding how images can convey rich semantics and evoke emotions in human perception. However, visual emotion analysis poses distinctive challenges compared to traditional vision tasks, especially due to the intricate relationship between general visual features and the different affective states they evoke, known as the affective gap. Researchers have used deep representation learning methods to address this challenge of extracting generalized features from entire images. However, most existing methods overlook the importance of specific emotional attributes such as brightness, colorfulness, scene understanding, and facial expressions. Through this paper, we introduce A4Net, a deep representation network to bridge the affective gap by leveraging four key attributes: brightness (Attribute 1), colorfulness (Attribute 2), scene context (Attribute 3), and facial expressions (Attribute 4). By fusing and jointly training all aspects of attribute recognition and visual emotion analysis, A4Net aims to provide a better insight into emotional content in images. Experimental results show the effectiveness of A4Net, showcasing competitive performance compared to state-of-the-art methods across diverse visual emotion datasets. Furthermore, visualizations of activation maps generated by A4Net offer insights into its ability to generalize across different visual emotion datasets.

Socially Pertinent Robots in Gerontological Healthcare

Apr 11, 2024

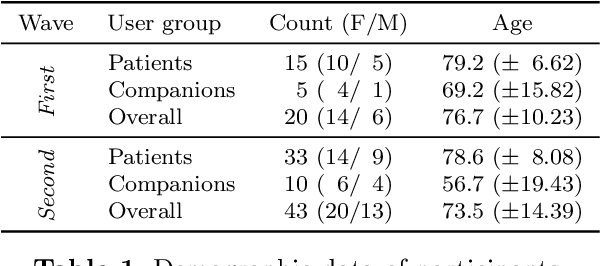

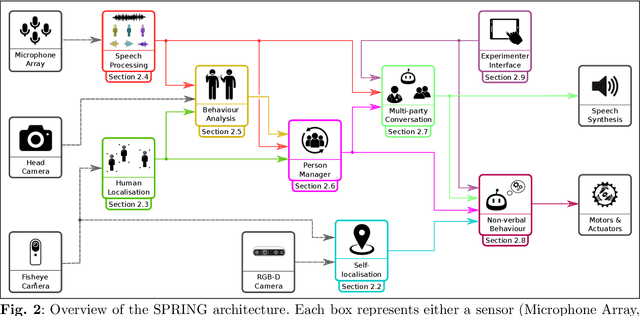

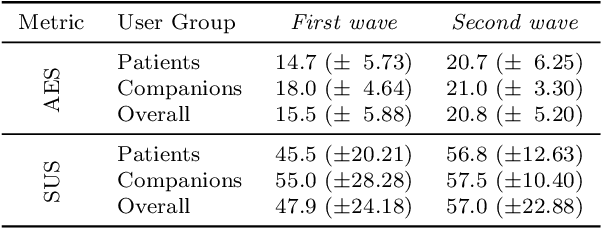

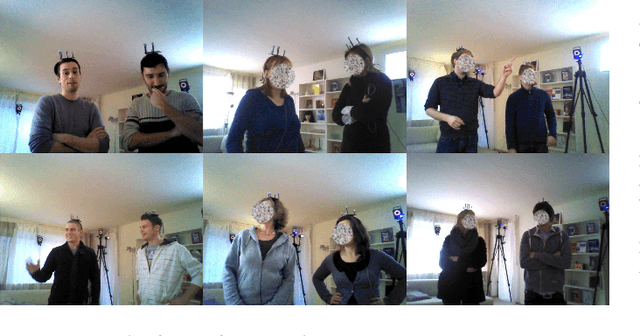

Abstract:Despite the many recent achievements in developing and deploying social robotics, there are still many underexplored environments and applications for which systematic evaluation of such systems by end-users is necessary. While several robotic platforms have been used in gerontological healthcare, the question of whether or not a social interactive robot with multi-modal conversational capabilities will be useful and accepted in real-life facilities is yet to be answered. This paper is an attempt to partially answer this question, via two waves of experiments with patients and companions in a day-care gerontological facility in Paris with a full-sized humanoid robot endowed with social and conversational interaction capabilities. The software architecture, developed during the H2020 SPRING project, together with the experimental protocol, allowed us to evaluate the acceptability (AES) and usability (SUS) with more than 60 end-users. Overall, the users are receptive to this technology, especially when the robot perception and action skills are robust to environmental clutter and flexible to handle a plethora of different interactions.

To Whom are You Talking? A Deep Learning Model to Endow Social Robots with Addressee Estimation Skills

Aug 21, 2023

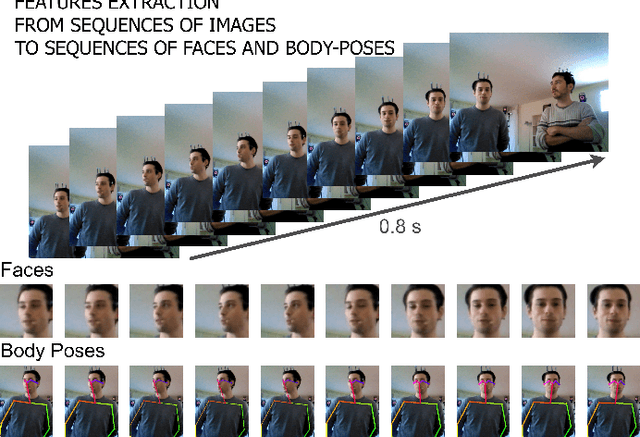

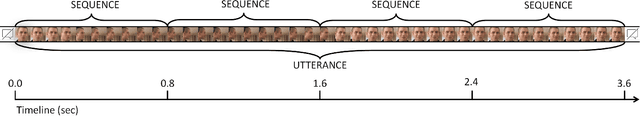

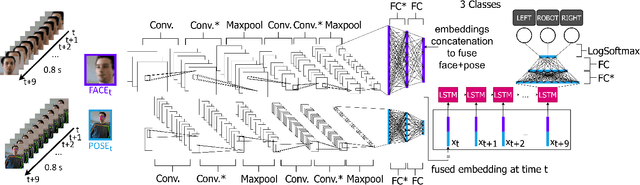

Abstract:Communicating shapes our social world. For a robot to be considered social and consequently be integrated in our social environment, it is fundamental that it understands some of the dynamics that rule human-human communication. In this work, we tackle the problem of Addressee Estimation, the ability to understand an utterance's addressee, by interpreting and exploiting non-verbal bodily cues from the speaker. We do so by implementing a hybrid deep learning model composed of convolutional layers and LSTM cells, taking as input images portraying the face of the speaker and 2D vectors of the speaker's body posture. Our implementation choices were guided by the aim of developing a model that could be deployed on social robots and be efficient in ecological scenarios. We demonstrate that our model is able to solve the Addressee Estimation problem in terms of addressee localisation in space, from a robot ego-centric point of view.

* Accepted version of a paper published at 2023 International Joint Conference on Neural Networks (IJCNN). Please find the published version and info to cite the paper at https://doi.org/10.1109/IJCNN54540.2023.10191452 . 10 pages, 8 Figures, 3 Tables

WLASL-LEX: a Dataset for Recognising Phonological Properties in American Sign Language

Mar 11, 2022

Abstract:Signed Language Processing (SLP) concerns the automated processing of signed languages, the main means of communication of Deaf and hearing-impaired individuals. SLP features many different tasks, ranging from sign recognition to translation and production of signed speech, but has been overlooked by the NLP community thus far. In this paper, we bring to attention the task of modelling the phonology of sign languages. We leverage existing resources to construct a large-scale dataset of American Sign Language signs annotated with six different phonological properties. We then conduct an extensive empirical study to investigate whether data-driven end-to-end and feature-based approaches can be optimised to automatically recognise these properties. We find that, despite the inherent challenges of the task, graph-based neural networks that operate over skeleton features extracted from raw videos are able to succeed at the task to a varying degree. Most importantly, we show that this performance holds even on signs unobserved during training.

Proceedings of the SREC Workshop at HRI 2019

Sep 05, 2019

Abstract:Robot-Assisted Therapy (RAT) has successfully been used in Human-Robot Interaction (HRI) research by including social robots in health-care interventions by virtue of their ability to engage human users in both social and emotional dimensions. Robots used for these tasks must be designed with several user groups in mind, including both individuals receiving therapy and care professionals responsible for the treatment. These robots must also be able to perceive their context of use, recognize human actions and intentions, and follow the therapeutic goals to perform meaningful and personalized treatment. Effective interactions require robots to be capable of coordinated, timely behavior in response to social cues. This means being able to estimate and predict levels of engagement, attention, intentionality and emotional state during human-robot interactions. An additional challenge for social robots in therapy and care is the wide range of needs and conditions that different users can have during their interventions: even if they share the same pathologies, their current requirements and the objectives of their therapies can vary extensively. Therefore, it becomes crucial for robots to adapt their behaviors and interaction scenarios to the specific needs, preferences and requirements of the patients they interact with. This personalization should be considered in terms of the robot behavior and the intervention scenario and must reflect the needs, preferences and requirements of the user.
