Linda Lastrico

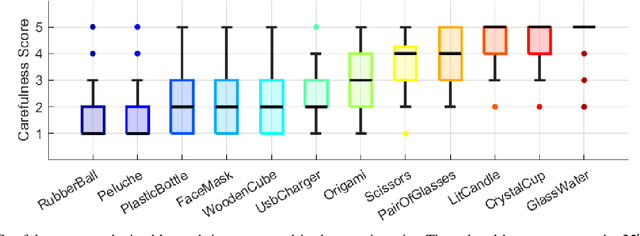

The Effects of Selected Object Features on a Pick-and-Place Task: a Human Multimodal Dataset

Jul 18, 2024

Abstract:We propose a dataset to study the influence of object-specific characteristics on human pick-and-place movements and compare the quality of the motion kinematics extracted by various sensors. This dataset is also suitable for promoting a broader discussion on general learning problems in the hand-object interaction domain, such as intention recognition or motion generation with applications in the Robotics field. The dataset consists of the recordings of 15 subjects performing 80 repetitions of a pick-and-place action under various experimental conditions, for a total of 1200 pick-and-places. The data has been collected thanks to a multimodal setup composed of multiple cameras, observing the actions from different perspectives, a motion capture system, and a wrist-worn inertial measurement unit. All the objects manipulated in the experiments are identical in shape, size, and appearance but differ in weight and liquid filling, which influences the carefulness required for their handling.

* Camera-ready version. Full paper available open access at https://doi.org/10.1177/02783649231210965. Dataset available on Kaggle (DOI: 10.34740/KAGGLE/DS/2319925, https://www.kaggle.com/datasets/alessandrocarf/effects-of-object-characteristics-on-manipulations).

A Modular Framework for Flexible Planning in Human-Robot Collaboration

Jun 07, 2024

Abstract:This paper presents a comprehensive framework to enhance Human-Robot Collaboration (HRC) in real-world scenarios. It introduces a formalism to model articulated tasks, requiring cooperation between two agents, through a smaller set of primitives. Our implementation leverages Hierarchical Task Networks (HTN) planning and a modular multisensory perception pipeline, which includes vision, human activity recognition, and tactile sensing. To showcase the system's scalability, we present an experimental scenario where two humans alternate in collaborating with a Baxter robot to assemble four pieces of furniture with variable components. This integration highlights promising advancements in HRC, suggesting a scalable approach for complex, cooperative tasks across diverse applications.

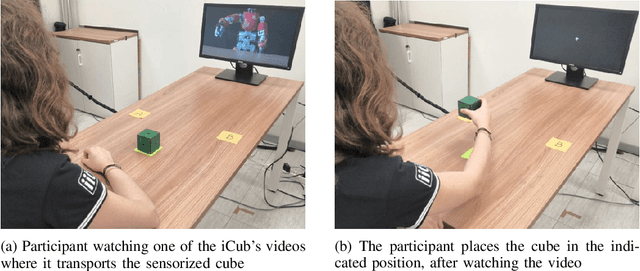

Expressing and Inferring Action Carefulness in Human-to-Robot Handovers

Sep 30, 2023

Abstract:Implicit communication plays such a crucial role during social exchanges that it must be considered for a good experience in human-robot interaction. This work addresses implicit communication associated with the detection of physical properties, transport, and manipulation of objects. We propose an ecological approach to infer object characteristics from subtle modulations of the natural kinematics occurring during human object manipulation. Similarly, we take inspiration from human strategies to shape robot movements to be communicative of the object properties while pursuing the action goals. In a realistic HRI scenario, participants handed over cups - filled with water or empty - to a robotic manipulator that sorted them. We implemented an online classifier to differentiate careful/not careful human movements, associated with the cups' content. We compared our proposed "expressive" controller, which modulates the movements according to the cup filling, against a neutral motion controller. Results show that human kinematics is adjusted during the task, as a function of the cup content, even in reach-to-grasp motion. Moreover, the carefulness during the handover of full cups can be reliably inferred online, well before action completion. Finally, although questionnaires did not reveal explicit preferences from participants, the expressive robot condition improved task efficiency.

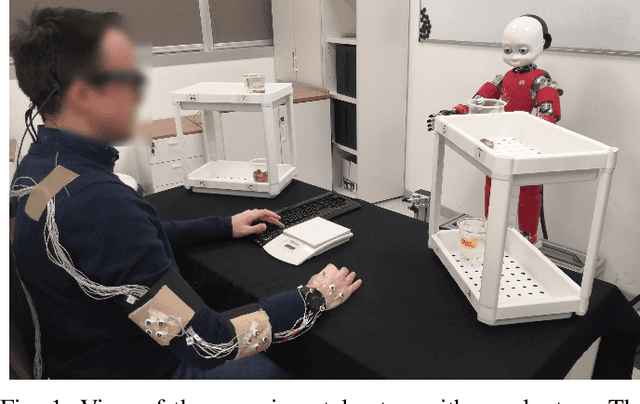

If You Are Careful, So Am I! How Robot Communicative Motions Can Influence Human Approach in a Joint Task

Oct 24, 2022

Abstract:As humans, we have a remarkable capacity for reading the characteristics of objects only by observing how another person carries them. Indeed, how we perform our actions naturally embeds information on the item features. Collaborative robots can achieve the same ability by modulating the strategy used to transport objects with their end-effector. A contribution in this sense would promote spontaneous interactions by making an implicit yet effective communication channel available. This work investigates if humans correctly perceive the implicit information shared by a robotic manipulator through its movements during a dyadic collaboration task. Exploiting a generative approach, we designed robot actions to convey virtual properties of the transported objects, particularly to inform the partner if any caution is required to handle the carried item. We found that carefulness is correctly interpreted when observed through the robot movements. In the experiment, we used identical empty plastic cups; nevertheless, participants approached them differently depending on the attitude shown by the robot: humans change how they reach for the object, being more careful whenever the robot does the same. This emerging form of motor contagion is entirely spontaneous and happens even if the task does not require it.

"iCub, We Forgive You!" Investigating Trust in a Game Scenario with Kids

Sep 04, 2022

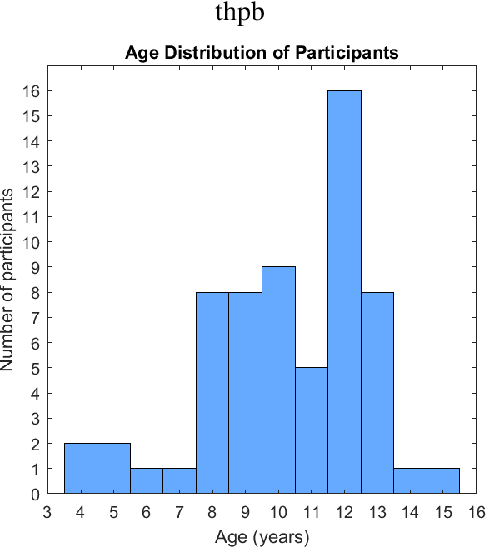

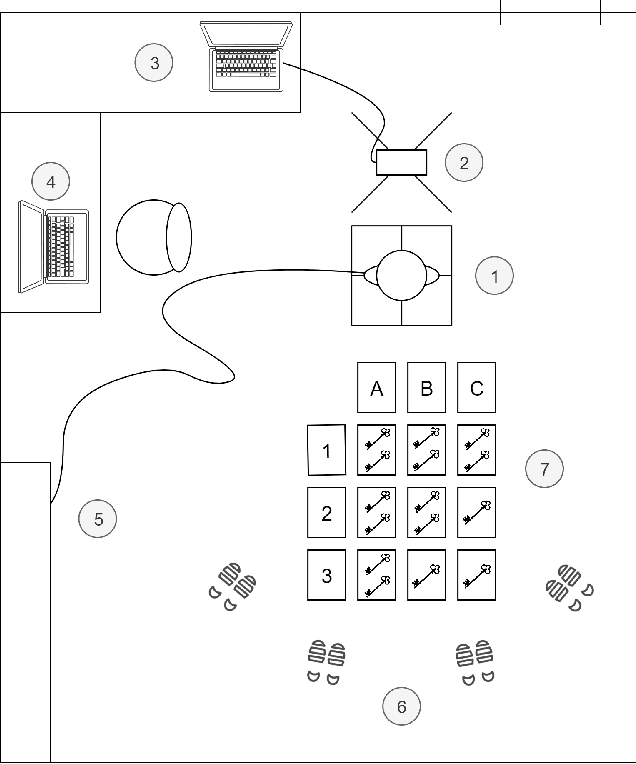

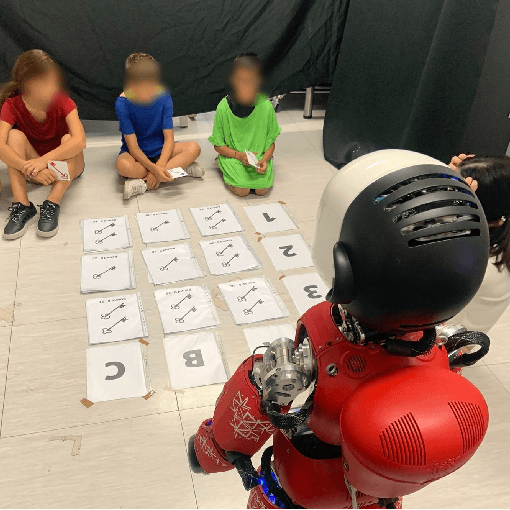

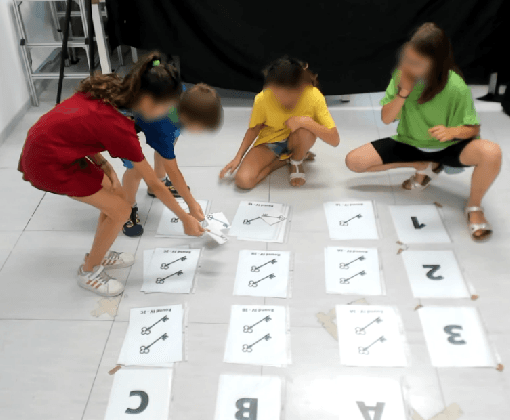

Abstract:This study presents novel strategies to investigate the mutual influence of trust and group dynamics in child-robot interaction. We implemented a game-like experimental activity with the humanoid robot iCub and designed a questionnaire to assess how the children perceived the interaction. We also aim to verify if the sensors, setups, and tasks are suitable for studying such aspects. The questionnaires' results demonstrate that youths perceive iCub as a friend and, typically, in a positive way. Other preliminary results suggest that, generally, children trusted iCub during the activity and, after its mistakes, they tried to reassure it with sentences such as: "Don't worry iCub, we forgive you". Furthermore, trust towards the robot in group cognitive activity appears to change according to gender: after two consecutive mistakes by the robot, girls tended to trust iCub more than boys. Finally, no significant difference was found between age groups in either the points computed from the game or the self-reported scales. The tool we proposed is suitable for studying trust in human-robot interaction (HRI) across different ages and seems appropriate to understand the mechanism of trust in group interactions.

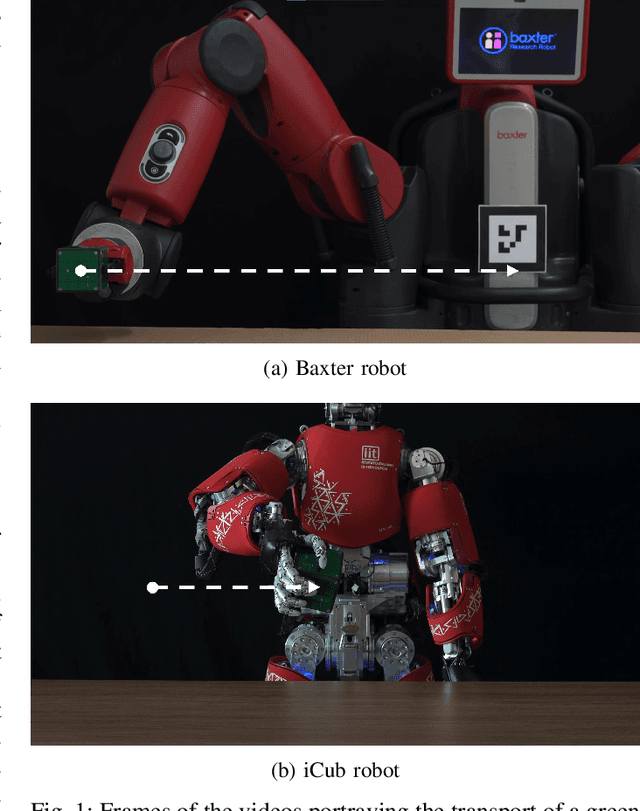

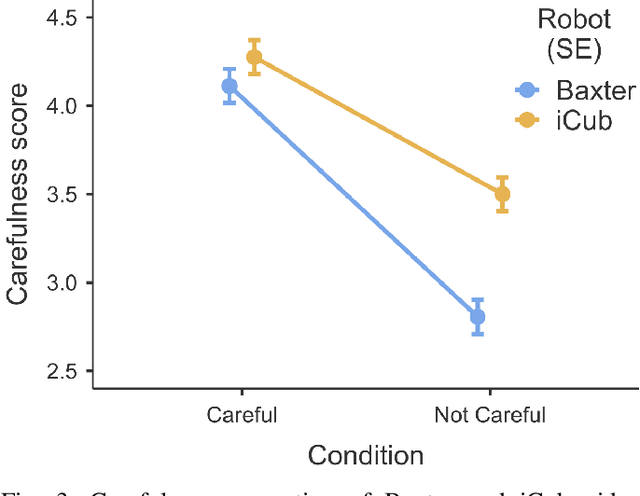

Robots with Different Embodiments Can Express and Influence Carefulness in Object Manipulation

Aug 03, 2022

Abstract:Humans have an extraordinary ability to communicate and read the properties of objects by simply watching them being carried by someone else. This level of communicative skills and interpretation, available to humans, is essential for collaborative robots if they are to interact naturally and effectively. For example, suppose a robot is handing over a fragile object. In that case, the human who receives it should be informed of its fragility in advance, through an immediate and implicit message, i.e., by the direct modulation of the robot's action. This work investigates the perception of object manipulations performed with a communicative intent by two robots with different embodiments (an iCub humanoid robot and a Baxter robot). We designed the robots' movements to communicate carefulness or not during the transportation of objects. We found that this feature is not only correctly perceived by human observers, but can also elicit a form of motor adaptation in subsequent human object manipulations. In addition, we gain insight into which motion features may induce people to manipulate an object more or less carefully.

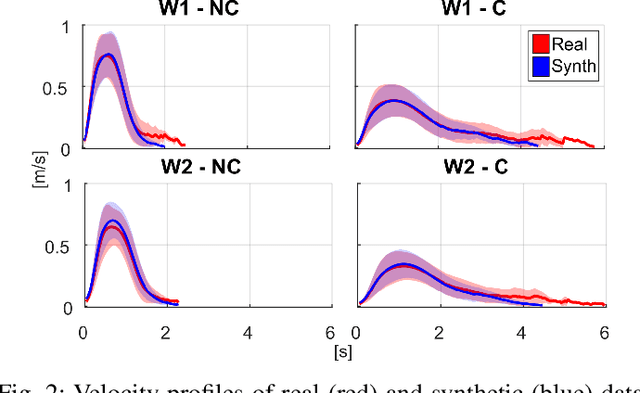

Synthesis and Execution of Communicative Robotic Movements with Generative Adversarial Networks

Mar 31, 2022

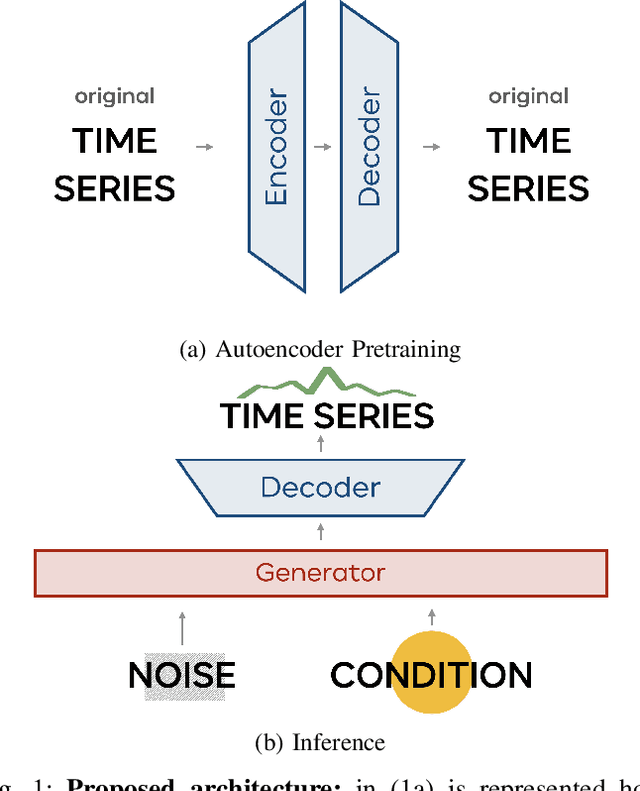

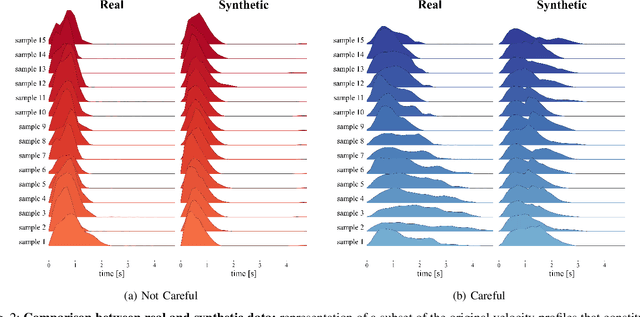

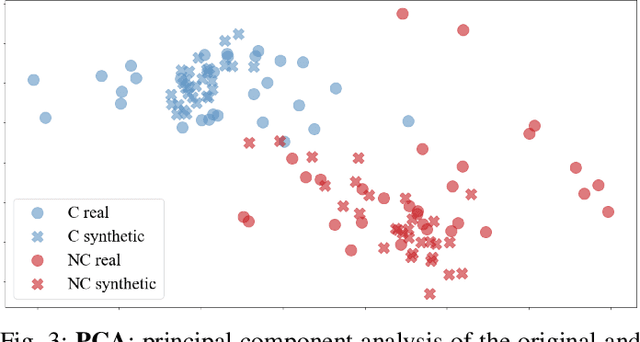

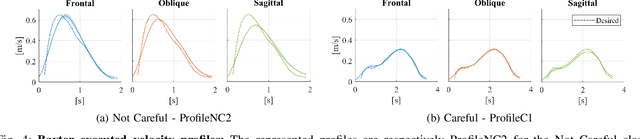

Abstract:Object manipulation is a natural activity we perform every day. How humans handle objects can communicate not only the willfulness of the action, or key aspects of the context where we operate, but also the properties of the objects involved, without any need for explicit verbal description. Since human intelligence comprises the ability to read the context, allowing robots to perform actions that intuitively convey this kind of information would greatly facilitate collaboration. In this work, we focus on how to transfer to two different robotic platforms the same kinematics modulation that humans adopt when manipulating delicate objects, aiming to endow robots with the capability to show carefulness in their movements. We choose to modulate the velocity profile adopted by the robots' end-effector, inspired by what humans do when transporting objects with different characteristics. We exploit a novel Generative Adversarial Network architecture, trained with human kinematics examples, to generalize over them and generate new and meaningful velocity profiles, associated with either careful or not careful attitudes. This approach would allow next-generation robots to select the most appropriate style of movement, depending on the perceived context, and autonomously generate their motor action execution.

From Movement Kinematics to Object Properties: Online Recognition of Human Carefulness

Sep 01, 2021

Abstract:When manipulating objects, humans finely adapt their motions to the characteristics of what they are handling. Thus, an attentive observer can foresee hidden properties of the manipulated object, such as its weight, temperature, and even whether it requires special care in manipulation. This study is a step towards endowing a humanoid robot with this last capability. Specifically, we study how a robot can infer online, from vision alone, whether or not the human partner is careful when moving an object. We demonstrated that a humanoid robot could perform this inference with high accuracy (up to 81.3%) even with a low-resolution camera. Carefulness recognition was insufficient only for short movements without obstacles. The prompt recognition of movement carefulness from observing the partner's action will allow robots to adapt their actions on the object to show the same degree of care as their human partners.

Property-Aware Robot Object Manipulation: a Generative Approach

Jun 08, 2021

Abstract:When transporting an object, we unconsciously adapt our movement to its properties, for instance by slowing down when the item is fragile. The most relevant features of an object are immediately revealed to a human observer by the way the handling occurs, without any need for verbal description. It would greatly facilitate collaboration to enable humanoid robots to perform movements that convey similar intuitive cues to the observers. In this work, we focus on how to generate robot motion adapted to the hidden properties of the manipulated objects, such as their weight and fragility. We explore the possibility of leveraging Generative Adversarial Networks to synthesize new actions coherent with the properties of the object. The use of a generative approach allows us to create new and consistent motion patterns, without the need to collect a large number of recorded human-led demonstrations. Besides, the informative content of the actions is preserved. Our results show that Generative Adversarial Nets can be a powerful tool for the generation of novel and meaningful transportation actions, which are effectively modulated as a function of the object weight and the carefulness required in its handling.

Careful with That! Observation of Human Movements to Estimate Objects Properties

Mar 10, 2021

Abstract:Humans are very effective at interpreting subtle properties of the partner's movement and use this skill to promote smooth interactions. Therefore, robotic platforms that support human partners in daily activities should acquire similar abilities. In this work we focused on the features of human motor actions that communicate insights on the weight of an object and the carefulness required in its manipulation. Our final goal is to enable a robot to autonomously infer the degree of care required in object handling and to discriminate whether the item is light or heavy, just by observing a human manipulation. This preliminary study represents a promising step towards the implementation of those abilities on a robot observing the scene with its camera. Indeed, we succeeded in demonstrating that it is possible to reliably deduce whether the human operator is careful when handling an object, through machine learning algorithms relying on the stream of visual acquisition from either a robot camera or a motion capture system. On the other hand, we observed that the same approach is inadequate to discriminate between light and heavy objects.

* Preprint version - 13th International Workshop of Human-Friendly Robotics (HFR 2020)
