Alessandro Carfì

Dept. of Informatics, Bioengineering, Robotics, and Systems Engineering, University of Genoa, Genoa, Italy

Factored Reasoning with Inner Speech and Persistent Memory for Evidence-Grounded Human-Robot Interaction

Jan 31, 2026

Abstract:Dialogue-based human-robot interaction requires robot cognitive assistants to maintain persistent user context, recover from underspecified requests, and ground responses in external evidence, while keeping intermediate decisions verifiable. In this paper we introduce JANUS, a cognitive architecture for assistive robots that models interaction as a partially observable Markov decision process and realizes control as a factored controller with typed interfaces. To this end, JANUS (i) decomposes the overall behavior into specialized modules for scope detection, intent recognition, memory, inner speech, query generation, and outer speech, and (ii) exposes explicit policies for information sufficiency, execution readiness, and tool grounding. A dedicated memory agent maintains a bounded recent-history buffer, a compact core memory, and an archival store with semantic retrieval, coupled through controlled consolidation and revision policies. Models inspired by the notion of inner speech in cognitive theories provide a control-oriented internal textual flow that validates parameter completeness and triggers clarification before grounding, while a faithfulness constraint ties robot-to-human claims to an evidence bundle combining working context and retrieved tool outputs. We evaluate JANUS through module-level unit tests in a dietary assistance domain grounded on a knowledge graph, reporting high agreement with curated references and practical latency profiles. These results support factored reasoning as a promising path to scalable, auditable, and evidence-grounded robot assistance over extended interaction horizons.
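The three-tier memory described in this abstract (bounded recent-history buffer, compact core memory, archival store with retrieval) can be illustrated with a minimal sketch. This is not the JANUS implementation: the class name `MemoryAgent`, its methods, the consolidation rule (archive a turn just before it is evicted from the buffer), and the word-overlap score standing in for semantic retrieval are all assumptions made for illustration.

```python
from collections import deque


def _tokens(text):
    """Lowercased word set; a crude stand-in for a semantic embedding."""
    return set(text.lower().split())


class MemoryAgent:
    """Hypothetical three-tier memory: recent buffer, core facts, archive."""

    def __init__(self, history_size=3):
        self.recent = deque(maxlen=history_size)  # bounded recent-history buffer
        self.core = {}                            # compact core memory (key -> fact)
        self.archive = []                         # archival store

    def observe(self, utterance):
        # Assumed consolidation policy: copy the oldest turn to the archive
        # right before the bounded buffer evicts it.
        if self.recent.maxlen and len(self.recent) == self.recent.maxlen:
            self.archive.append(self.recent[0])
        self.recent.append(utterance)

    def revise_core(self, key, value):
        # Assumed revision policy: the newest value for a key overwrites the old one.
        self.core[key] = value

    def retrieve(self, query, k=1):
        # Rank archived turns by word overlap with the query (toy retrieval).
        q = _tokens(query)
        ranked = sorted(self.archive, key=lambda t: len(q & _tokens(t)), reverse=True)
        return ranked[:k]


agent = MemoryAgent(history_size=2)
for turn in ["I am allergic to peanuts", "I like pasta", "What can I eat tonight"]:
    agent.observe(turn)
agent.revise_core("allergy", "peanuts")

print(list(agent.recent))
print(agent.retrieve("peanuts allergic"))
```

With a buffer of size 2, the third turn pushes the first one into the archive, where the toy retrieval can still find it. A real system would replace the word-overlap score with embedding similarity and use a more selective consolidation rule.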

A Comparative Study of Human Activity Recognition: Motion, Tactile, and multi-modal Approaches

May 13, 2025

Abstract:Human activity recognition (HAR) is essential for effective Human-Robot Collaboration (HRC), enabling robots to interpret and respond to human actions. This study evaluates the ability of a vision-based tactile sensor to classify 15 activities, comparing its performance to an IMU-based data glove. Additionally, we propose a multi-modal framework combining tactile and motion data to leverage their complementary strengths. We examined three approaches: motion-based classification (MBC) using IMU data, tactile-based classification (TBC) with single or dual video streams, and multi-modal classification (MMC) integrating both. Offline validation on segmented datasets assessed each configuration's accuracy under controlled conditions, while online validation on continuous action sequences tested online performance. Results showed the multi-modal approach consistently outperformed single-modality methods, highlighting the potential of integrating tactile and motion sensing to enhance HAR systems for collaborative robotics.

A Social Robot with Inner Speech for Dietary Guidance

May 13, 2025

Abstract:We explore the use of inner speech as a mechanism to enhance transparency and trust in social robots for dietary advice. In humans, inner speech structures thought processes and decision-making; in robotics, it improves explainability by making reasoning explicit. This is crucial in healthcare scenarios, where trust in robotic assistants depends on both accurate recommendations and human-like dialogue, which make interactions more natural and engaging. Building on this, we developed a social robot that provides dietary advice, and we provided the architecture with inner speech capabilities to validate user input, refine reasoning, and generate clear justifications. The system integrates large language models for natural language understanding and a knowledge graph for structured dietary information. By making decisions more transparent, our approach strengthens trust and improves human-robot interaction in healthcare. We validated this by measuring the computational efficiency of our architecture and conducting a small user study, which assessed the reliability of inner speech in explaining the robot's behavior.

IFRA: a machine learning-based Instrumented Fall Risk Assessment Scale derived from Instrumented Timed Up and Go test in stroke patients

Jan 16, 2025

Abstract:Effective fall risk assessment is critical for post-stroke patients. The present study proposes a novel, data-informed fall risk assessment method based on the instrumented Timed Up and Go (ITUG) test data, bringing in many mobility measures that traditional clinical scales fail to capture. IFRA, which stands for Instrumented Fall Risk Assessment, has been developed using a two-step process: first, features with the highest predictive power among those collected in an ITUG test have been identified using machine learning techniques; then, a strategy is proposed to stratify patients into low, medium, or high-risk strata. The dataset used in our analysis consists of 142 participants, of whom 93 were used for training (15 synthetically generated), 17 for validation, and 32 to test the resulting IFRA scale (22 non-fallers and 10 fallers). Features considered in the IFRA scale include gait speed, vertical acceleration during sit-to-walk transition, and turning angular velocity, which align well with established literature on the risk of fall in neurological patients. In a comparison with traditional clinical scales such as the traditional Timed Up & Go and the Mini-BESTest, IFRA demonstrates competitive performance, being the only scale to correctly assign more than half of the fallers to the high-risk stratum (Fisher's exact test p = 0.004). Despite the dataset's limited size, this is the first proof-of-concept study to pave the way for future evidence regarding the use of the IFRA tool for continuous patient monitoring and fall prevention both in clinical stroke rehabilitation and at home post-discharge.

The Effects of Selected Object Features on a Pick-and-Place Task: a Human Multimodal Dataset

Jul 18, 2024

Abstract:We propose a dataset to study the influence of object-specific characteristics on human pick-and-place movements and compare the quality of the motion kinematics extracted by various sensors. This dataset is also suitable for promoting a broader discussion on general learning problems in the hand-object interaction domain, such as intention recognition or motion generation with applications in the Robotics field. The dataset consists of the recordings of 15 subjects performing 80 repetitions of a pick-and-place action under various experimental conditions, for a total of 1200 pick-and-places. The data has been collected thanks to a multimodal setup composed of multiple cameras, observing the actions from different perspectives, a motion capture system, and a wrist-worn inertial measurement unit. All the objects manipulated in the experiments are identical in shape, size, and appearance but differ in weight and liquid filling, which influences the carefulness required for their handling.

* Camera ready version. Full paper available in open-access at https://doi.org/10.1177/02783649231210965 Dataset available on Kaggle (DOI: 10.34740/KAGGLE/DS/2319925, https://www.kaggle.com/datasets/alessandrocarf/effects-of-object-characteristics-on-manipulations)

A Modular Framework for Flexible Planning in Human-Robot Collaboration

Jun 07, 2024

Abstract:This paper presents a comprehensive framework to enhance Human-Robot Collaboration (HRC) in real-world scenarios. It introduces a formalism to model articulated tasks, requiring cooperation between two agents, through a smaller set of primitives. Our implementation leverages Hierarchical Task Networks (HTN) planning and a modular multisensory perception pipeline, which includes vision, human activity recognition, and tactile sensing. To showcase the system's scalability, we present an experimental scenario where two humans alternate in collaborating with a Baxter robot to assemble four pieces of furniture with variable components. This integration highlights promising advancements in HRC, suggesting a scalable approach for complex, cooperative tasks across diverse applications.

Robotic in-hand manipulation with relaxed optimization

Jun 07, 2024

Abstract:Dexterous in-hand manipulation is a unique and valuable human skill requiring sophisticated sensorimotor interaction with the environment while respecting stability constraints. Satisfying these constraints with generated motions is essential for a robotic platform to achieve reliable in-hand manipulation skills. Explicitly modelling these constraints can be challenging, but they can be implicitly modelled and learned through experience or human demonstrations. We propose a learning and control approach based on dictionaries of motion primitives generated from human demonstrations. To achieve this, we defined an optimization process that combines motion primitives to generate robot fingertip trajectories for moving an object from an initial to a desired final pose. Based on our experiments, our approach allows a robotic hand to handle objects like humans, adhering to stability constraints without requiring explicit formalization. In other words, the proposed motion primitive dictionaries learn and implicitly embed the constraints crucial to the in-hand manipulation task.

A Systematic Review on Custom Data Gloves

May 24, 2024

Abstract:Hands are a fundamental tool humans use to interact with the environment and objects. Through hand motions, we can obtain information about the shape and materials of the surfaces we touch, modify our surroundings by interacting with objects, manipulate objects and tools, or communicate with other people by leveraging the power of gestures. For these reasons, sensorized gloves, which can collect information about hand motions and interactions, have been of interest since the 1980s in various fields, such as Human-Machine Interaction (HMI) and the analysis and control of human motions. Over the last 40 years, research in this field has explored different technological approaches and contributed to the popularity of wearable custom and commercial products targeting hand sensorization. Despite a positive research trend, these instruments are not yet widespread outside research environments, and devices aimed at research are often ad hoc solutions with a low chance of being reused. This paper aims to provide a systematic literature review of custom gloves to analyze their main characteristics and critical issues, from the type and number of sensors to the limitations due to device encumbrance. The collection of this information lays the foundation for a standardization process necessary for future breakthroughs in this research field.

Gaze-Based Intention Recognition for Human-Robot Collaboration

May 13, 2024

Abstract:This work aims to tackle the intent recognition problem in Human-Robot Collaborative assembly scenarios. Specifically, we consider an interactive assembly of a wooden stool where the robot fetches the pieces in the correct order and the human builds the parts following the instruction manual. The intent recognition is limited to the idle state estimation and it is needed to ensure a better synchronization between the two agents. We carried out a comparison between two distinct solutions involving wearable sensors and eye tracking integrated into the perception pipeline of a flexible planning architecture based on Hierarchical Task Networks. At runtime, the wearable sensing module exploits the raw measurements from four 9-axis Inertial Measurement Units positioned on the wrists and hands of the user as an input for a Long Short-Term Memory Network. On the other hand, the eye tracking relies on a Head Mounted Display and Unreal Engine. We tested the effectiveness of the two approaches with 10 participants, each of whom explored both options in alternate order. We collected explicit metrics about the attractiveness and efficiency of the two techniques through User Experience Questionnaires as well as implicit criteria regarding the classification time and the overall assembly time. The results of our work show that the two methods can reach comparable performance both in terms of effectiveness and user preference. Future development could aim at joining the two approaches to allow the recognition of more complex activities and to anticipate the user actions.

Digital Twins for Human-Robot Collaboration: A Future Perspective

Nov 04, 2023

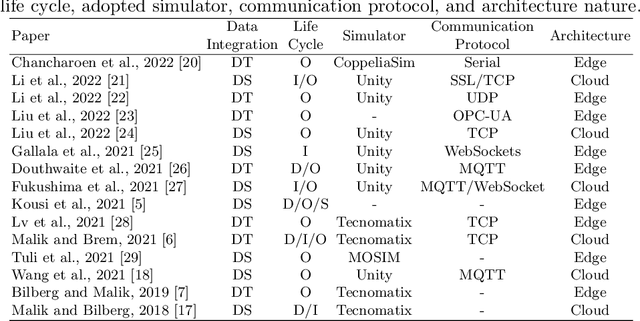

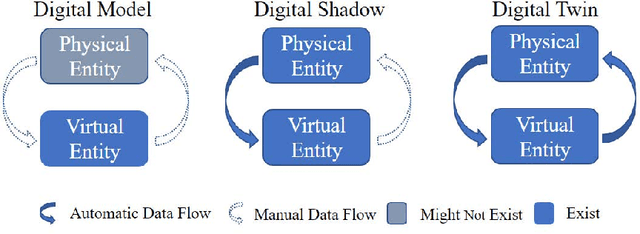

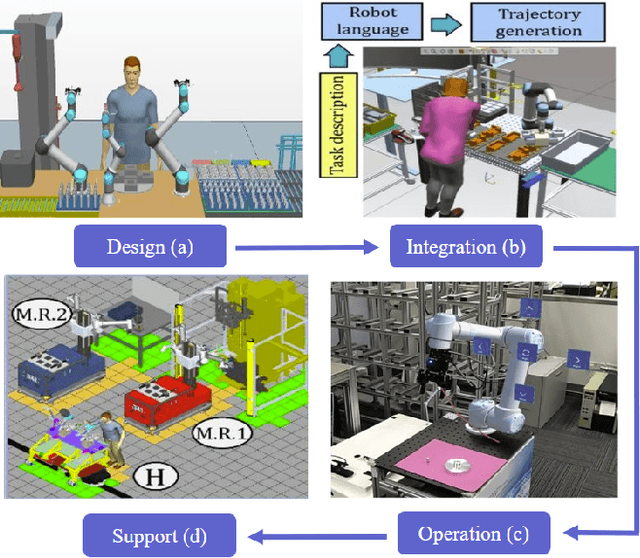

Abstract:As collaborative robot (Cobot) adoption in many sectors grows, so does the interest in integrating digital twins in human-robot collaboration (HRC). Virtual representations of physical systems (PT) and assets, known as digital twins, can revolutionize human-robot collaboration by enabling real-time simulation, monitoring, and control. In this article, we present a review of the state-of-the-art and our perspective on the future of digital twins (DT) in human-robot collaboration. We argue that DT will be crucial in increasing the efficiency and effectiveness of these systems by presenting compelling evidence and a concise vision of the future of DT in human-robot collaboration, as well as insights into the possible advantages and challenges associated with their integration.
