Alessio Capitanelli

On the Generalization Gap in LLM Planning: Tests and Verifier-Reward RL

Jan 20, 2026

Abstract:Recent work shows that fine-tuned Large Language Models (LLMs) can achieve high valid plan rates on PDDL planning tasks. However, it remains unclear whether this reflects transferable planning competence or domain-specific memorization. In this work, we fine-tune a 1.7B-parameter LLM on 40,000 domain-problem-plan tuples from 10 IPC 2023 domains, and evaluate both in-domain and cross-domain generalization. While the model reaches 82.9% valid plan rate in in-domain conditions, it achieves 0% on two unseen domains. To analyze this failure, we introduce three diagnostic interventions, namely (i) instance-wise symbol anonymization, (ii) compact plan serialization, and (iii) verifier-reward fine-tuning using the VAL validator as a success-focused reinforcement signal. Symbol anonymization and compact serialization cause significant performance drops despite preserving plan semantics, thus revealing strong sensitivity to surface representations. Verifier-reward fine-tuning reaches performance saturation in half the supervised training epochs, but does not improve cross-domain generalization. For the explored configurations, in-domain performance plateaus around 80%, while cross-domain performance collapses, suggesting that our fine-tuned model relies heavily on domain-specific patterns rather than transferable planning competence in this setting. Our results highlight a persistent generalization gap in LLM-based planning and provide diagnostic tools for studying its causes.
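As an illustration of what instance-wise symbol anonymization could look like, the following is a minimal sketch and not the authors' implementation. It assumes that the symbols to rename are supplied explicitly and that each one is replaced by an opaque token (`sym0`, `sym1`, ...) consistently across the problem and its plan; the function name, the token scheme, and the seeding are all hypothetical.

```python
import random
import re


def anonymize_instance(texts, symbols, seed=0):
    """Rename each symbol to an opaque token, consistently across all texts.

    texts:   strings belonging to one instance (e.g. problem and plan)
    symbols: names to anonymize (which symbols are renamed is an assumption)
    seed:    per-instance seed, so each instance gets its own mapping
    """
    rng = random.Random(seed)
    ids = list(range(len(symbols)))
    rng.shuffle(ids)
    mapping = {s: f"sym{i}" for s, i in zip(symbols, ids)}

    # Longest names first, and match whole PDDL tokens only
    # (names may contain letters, digits, underscores, and hyphens).
    alternatives = "|".join(
        re.escape(s) for s in sorted(symbols, key=len, reverse=True)
    )
    pattern = re.compile(r"(?<![\w-])(" + alternatives + r")(?![\w-])")

    renamed = [pattern.sub(lambda m: mapping[m.group(1)], t) for t in texts]
    return renamed, mapping


problem = "(on block-a block-b) (clear block-a)"
plan = "(unstack block-a block-b)"
renamed, mapping = anonymize_instance([problem, plan], ["block-a", "block-b"], seed=1)
```

Because the renaming is a bijection applied to the problem and the plan together, the plan stays valid for the renamed problem; only the surface form changes.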

Achieving Scalable Robot Autonomy via neurosymbolic planning using lightweight local LLM

May 13, 2025

Abstract:PDDL-based symbolic task planning remains pivotal for robot autonomy yet struggles with dynamic human-robot collaboration due to scalability, re-planning demands, and delayed plan availability. Although a few neurosymbolic frameworks have previously leveraged LLMs such as GPT-3 to address these challenges, reliance on closed-source, remote models with limited context introduced critical constraints: third-party dependency, inconsistent response times, restricted plan length and complexity, and multi-domain scalability issues. We present Gideon, a novel framework that enables the transition to modern, smaller, local LLMs with extended context length. Gideon integrates a novel problem generator to systematically generate large-scale datasets of realistic domain-problem-plan tuples for any domain, and adapts neurosymbolic planning for local LLMs, enabling on-device execution and extended context for multi-domain support. Preliminary experiments in single-domain scenarios, performed with Qwen-2.5 1.5B trained on 8k-32k samples, demonstrate a valid plan percentage of 66.1% (32k model) and show that the figure can be further scaled through additional data. Multi-domain tests on 16k samples yield an even higher 70.6% planning validity rate, proving extensibility across domains and signaling that data variety can have a positive effect on learning efficiency. Although long-horizon planning and reduced model size make Gideon training much less efficient than baseline models based on larger LLMs, the results are still significant considering that the trained model is about 120x smaller than the baseline and that significant advantages can be achieved in inference efficiency, scalability, and multi-domain adaptability, all critical factors in human-robot collaboration. Training inefficiency can be mitigated by Gideon's streamlined data generation pipeline.

IFRA: a machine learning-based Instrumented Fall Risk Assessment Scale derived from Instrumented Timed Up and Go test in stroke patients

Jan 16, 2025

Abstract:Effective fall risk assessment is critical for post-stroke patients. The present study proposes a novel, data-informed fall risk assessment method based on the instrumented Timed Up and Go (ITUG) test data, bringing in many mobility measures that traditional clinical scales fail to capture. IFRA, which stands for Instrumented Fall Risk Assessment, has been developed using a two-step process: first, features with the highest predictive power among those collected in an ITUG test have been identified using machine learning techniques; then, a strategy is proposed to stratify patients into low, medium, or high-risk strata. The dataset used in our analysis consists of 142 participants, out of which 93 were used for training (15 synthetically generated), 17 for validation and 32 to test the resulting IFRA scale (22 non-fallers and 10 fallers). Features considered in the IFRA scale include gait speed, vertical acceleration during sit-to-walk transition, and turning angular velocity, which align well with established literature on the risk of falls in neurological patients. In a comparison with traditional clinical scales such as the traditional Timed Up & Go and the Mini-BESTest, IFRA demonstrates competitive performance, being the only scale to correctly assign more than half of the fallers to the high-risk stratum (Fisher's exact test p = 0.004). Despite the dataset's limited size, this is the first proof-of-concept study to pave the way for future evidence regarding the use of the IFRA tool for continuous patient monitoring and fall prevention both in clinical stroke rehabilitation and at home post-discharge.

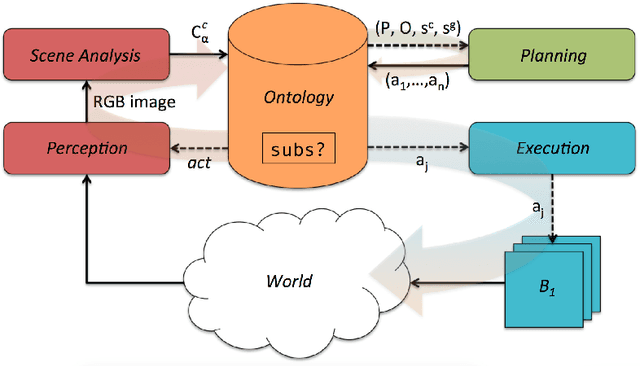

A Framework to Generate Neurosymbolic PDDL-compliant Planners

Mar 01, 2023

Abstract:The problem of integrating high-level task planning in the execution loop of a real-world robot architecture remains challenging, as the planning times of traditional symbolic planners explode combinatorially with the number of symbols to plan upon. In this paper, we present Teriyaki, a framework for training Large Language Models (LLMs), and in particular the now well-known GPT-3 model, into neurosymbolic planners compatible with the Planning Domain Definition Language (PDDL). Unlike symbolic approaches, LLMs require a training process. However, their response time scales with the combined length of the input and the output. Hence, LLM-based planners can potentially provide significant performance gains on complex planning problems as the technology matures and becomes more accessible. In this preliminary work, which to our knowledge is the first using LLMs for planning in robotics, we (i) outline a methodology for training LLMs as PDDL solvers, (ii) generate PDDL-compliant planners for two challenging PDDL domains, and (iii) test the planning times and the plan quality associated with the obtained planners, while also comparing them to a state-of-the-art PDDL planner, namely Probe. Results confirm the viability of the approach, with Teriyaki-based planners being able to solve 95.5% of problems in a test data set of 1000 samples, and even generating plans up to 13.5% shorter on average than the employed traditional planner, depending on the domain.

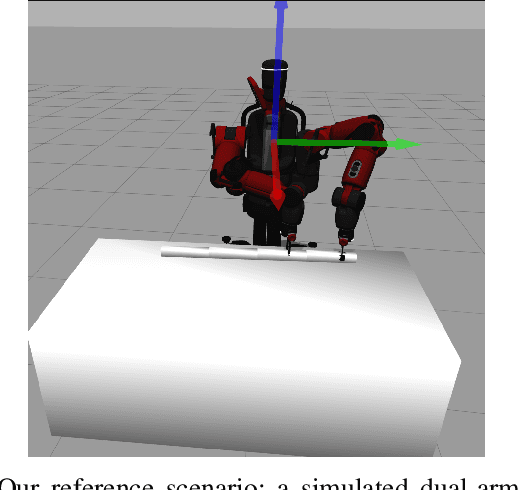

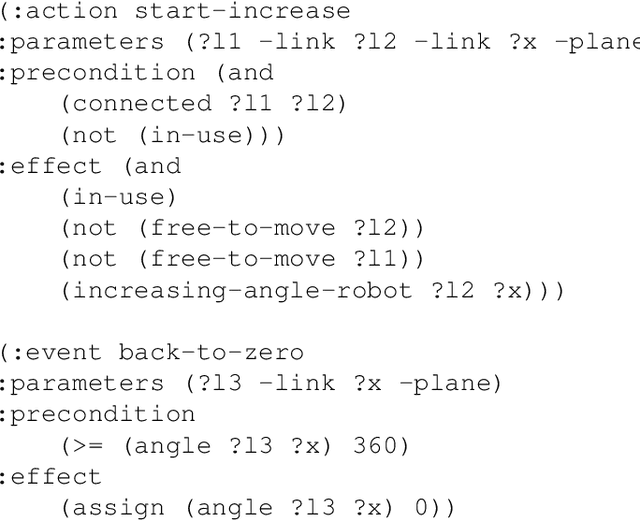

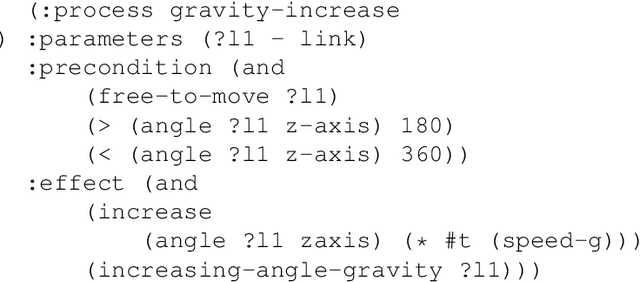

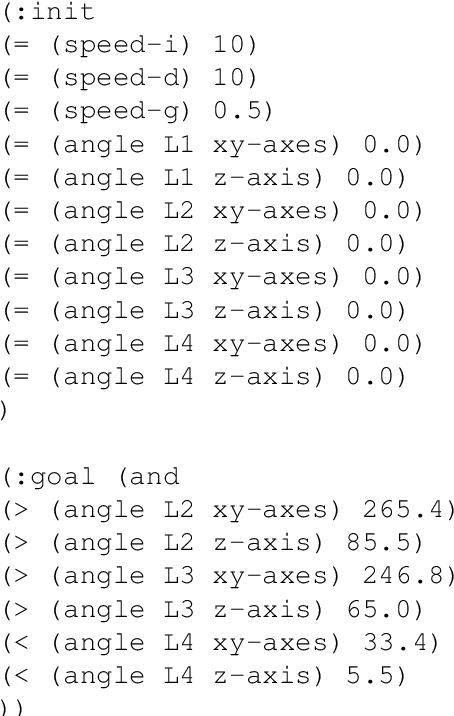

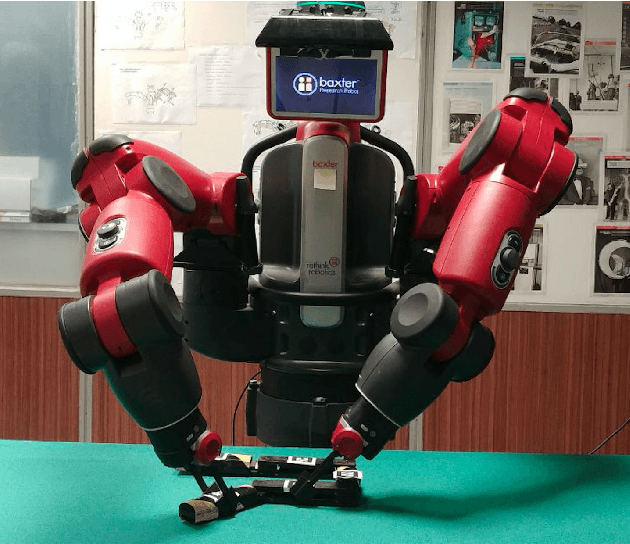

Collaborative Robotic Manipulation: A Use Case of Articulated Objects in Three-dimensions with Gravity

Nov 13, 2020

Abstract:This paper addresses two intertwined needs for collaborative robots operating in shop-floor environments. The first is the ability to perform complex manipulation operations, such as those on articulated or even flexible objects, in a way robust to a high degree of variability in the actions possibly carried out by human operators during collaborative tasks. The second is encoding in such operations a basic knowledge about physical laws (e.g., gravity), and their effects on the models used by the robot to plan its actions, to generate more robust plans. We adopt the manipulation in three-dimensional space of articulated objects as an effective use case to ground both needs, and we use a variant of the Planning Domain Definition Language to integrate the planning process with a notion of gravity. Different complexity levels in modelling gravity are evaluated, which trade off model faithfulness against performance. A thorough validation of the framework is done in simulation using a dual-arm Baxter manipulator.

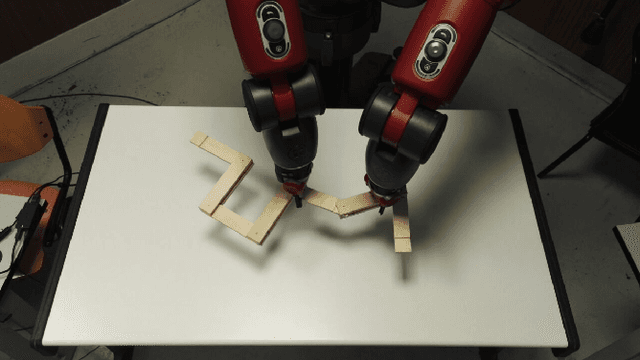

Manipulation of Articulated Objects using Dual-arm Robots via Answer Set Programming

Oct 02, 2020

Abstract:The manipulation of articulated objects is of primary importance in Robotics, and can be considered as one of the most complex manipulation tasks. Traditionally, this problem has been tackled by developing ad-hoc approaches, which lack flexibility and portability. In this paper we present a framework based on Answer Set Programming (ASP) for the automated manipulation of articulated objects in a robot control architecture. In particular, ASP is employed for representing the configuration of the articulated object, for checking the consistency of such representation in the knowledge base, and for generating the sequence of manipulation actions. The framework is exemplified and validated on the Baxter dual-arm manipulator in a first, simple scenario. Then, we extend this scenario to improve the overall setup accuracy, and to introduce a few constraints on the execution of robot actions to enforce their feasibility. The extended scenario entails a high number of possible actions that can be fruitfully combined together. Therefore, we exploit macro actions from automated planning in order to provide more effective plans. We validate the overall framework in the extended scenario, thereby confirming the applicability of ASP also in more realistic Robotics settings, and showing the usefulness of macro actions for the robot-based manipulation of articulated objects. Under consideration in Theory and Practice of Logic Programming (TPLP).

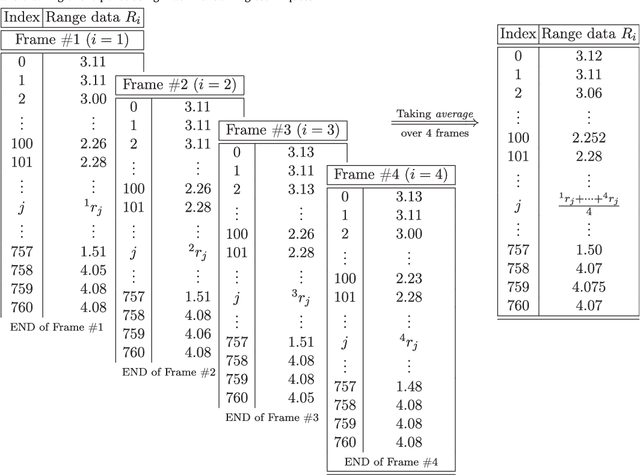

A 2D laser rangefinder scans dataset of standard EUR pallets

Mar 13, 2019

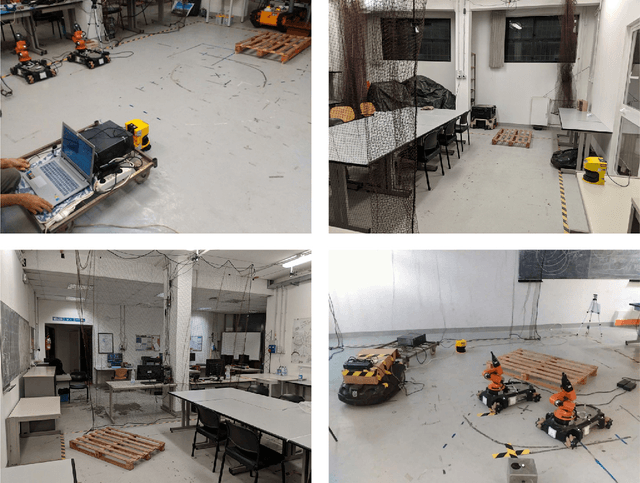

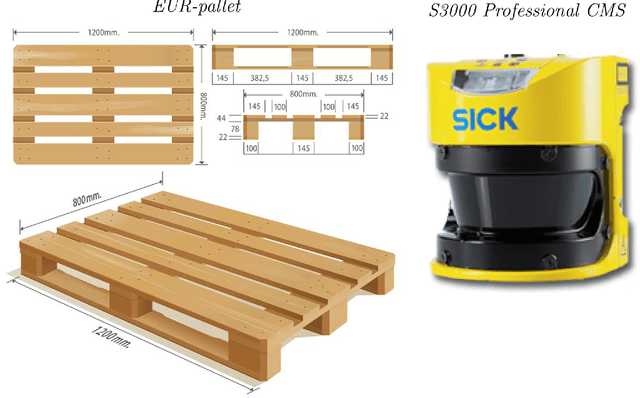

Abstract:In the past few years, the technology of automated guided vehicles (AGVs) has notably advanced. In particular, in the context of factory and warehouse automation, different approaches have been presented for detecting and localizing pallets inside warehouses and shop-floor environments. In a related research paper [1], we show that an AGV can detect, localize, and track pallets using machine learning techniques based only on the data of an on-board 2D laser rangefinder. Such a sensor is very common in industrial scenarios due to its simplicity and robustness, but it can only provide a limited amount of data. Therefore, it has been neglected in the past in favor of more complex solutions. In this paper, we release to the community the data we collected in [1] for further research activities in the field of pallet localization and tracking. The dataset comprises a collection of 565 2D scans from real-world environments, which are divided into 340 samples where pallets are present, and 225 samples where they are not. The data have been manually labelled and are provided in different formats.

Detection, localisation and tracking of pallets using machine learning techniques and 2D range data

Feb 22, 2019

Abstract:The problem of autonomous transportation in industrial scenarios is receiving a renewed interest due to the way it can revolutionise internal logistics, especially in unstructured environments. This paper presents a novel architecture allowing a robot to detect, localise, and track (possibly multiple) pallets using machine learning techniques based on an on-board 2D laser rangefinder only. The architecture is composed of two main components: the first stage is a pallet detector employing a Faster Region-based Convolutional Neural Network (Faster R-CNN) detector cascaded with a CNN-based classifier; the second stage is a Kalman filter for localising and tracking detected pallets, which we also use to defer commitment to a pallet detected in the first stage until sufficient confidence has been acquired via a sequential data acquisition process. For fine-tuning the CNNs, the architecture has been systematically evaluated using a real-world dataset containing 340 labeled 2D scans, which have been made freely available in an online repository. Detection performance has been assessed on the basis of the average accuracy over k-fold cross-validation, and it scored 99.58% in our tests. Concerning pallet localisation and tracking, experiments have been performed in a scenario where the robot is approaching the pallet to fork. Although data have been originally acquired by considering only one pallet as per specification of the use case we consider, artificial data have been generated as well to mimic the presence of multiple pallets in the robot workspace. Our experimental results confirm that the system is capable of identifying, localising and tracking pallets with a high success rate while being robust to false positives.
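The second stage described above, a Kalman filter that also defers commitment to a detection until enough confidence has accumulated, can be illustrated with a scalar toy version. This is a sketch under stated assumptions, not the paper's architecture: the state is a single pallet coordinate, the motion model is static, and the commitment rule (estimate variance at or below a threshold `p_commit`) is a hypothetical stand-in for the paper's sequential data acquisition process. All names and numeric values are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Track:
    x: float  # estimated pallet position along one axis (metres)
    p: float  # variance of the estimate


def kalman_update(track, z, r, q=0.0):
    """One predict + update step of a scalar Kalman filter (static model)."""
    p_pred = track.p + q                 # predict: position unchanged, variance grows by q
    k = p_pred / (p_pred + r)            # Kalman gain
    x_new = track.x + k * (z - track.x)  # correct the estimate with measurement z
    p_new = (1.0 - k) * p_pred           # the update shrinks the variance
    return Track(x_new, p_new)


def track_until_confident(measurements, r, p_commit):
    """Fuse measurements until the variance drops to p_commit or below.

    Returns the final track and the number of measurements used,
    or None as the count if commitment was never reached.
    """
    # Initialise from the first measurement, with its variance r.
    track = Track(measurements[0], r)
    for n, z in enumerate(measurements[1:], start=2):
        track = kalman_update(track, z, r)
        if track.p <= p_commit:
            return track, n
    return track, None


# Hypothetical range readings of one candidate pallet (metres).
track, used = track_until_confident([2.0, 2.2, 1.9, 2.1, 2.0], r=0.2, p_commit=0.06)
```

With a static model and `q = 0`, the filter reduces to a running average whose variance after `n` measurements is `r / n`, so the threshold directly sets how many consistent detections are needed before the robot commits to a pallet.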

Long-term area coverage and radio relay positioning using swarms of UAVs

Oct 29, 2018

Abstract:Unmanned Aerial Vehicles (UAVs) are becoming increasingly useful for tasks which require the acquisition of data over large areas. The coverage problem, i.e., the problem of periodically visiting all subregions of an area at a desired frequency, is especially interesting because of its practical applications, both in industry and long-term monitoring of areas hit by a natural disaster. We focus here on the latter scenario, and take into consideration its peculiar characteristic, i.e., that a coverage system should be resilient to a changing environment and not be dependent on pre-existing infrastructures for communication. To this purpose, we designed a novel algorithm for online area coverage and simultaneous signal relay that allows a UAV to cover an area freely, while a variable number of other UAVs provide stable communication with the base and support the coverage process at the same time. Finally, a test architecture based on the algorithm has been developed and tests have been performed. Compared with a simple relay chain system, our approach takes up to 64% less time to reach a given number of coverage iterations over the map, with only 17% worse average communication cost and no impact on the worst-case communication cost.
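The coverage requirement stated above (every subregion visited periodically at a desired frequency) can be made concrete with a small check. The sketch below is illustrative only and is not the algorithm from the paper: it assumes visits are logged as timestamps per subregion and that the desired frequency is expressed as a maximum allowed gap between consecutive visits; the function names and the example log are hypothetical.

```python
def max_revisit_gap(visit_times, horizon):
    """Longest interval without a visit within [0, horizon] for one subregion."""
    times = [0.0] + sorted(visit_times) + [horizon]
    return max(later - earlier for earlier, later in zip(times, times[1:]))


def coverage_satisfied(visits_by_region, horizon, max_gap):
    """True if no subregion is left unvisited for longer than max_gap."""
    return all(
        max_revisit_gap(times, horizon) <= max_gap
        for times in visits_by_region.values()
    )


# Hypothetical visit log: subregion name -> visit timestamps (seconds).
visits = {"A": [2, 5, 8], "B": [3, 9]}
ok_strict = coverage_satisfied(visits, horizon=10, max_gap=4)
ok_loose = coverage_satisfied(visits, horizon=10, max_gap=6)
```

Here subregion "B" goes unvisited between t = 3 and t = 9, so the log meets a 6-second requirement but not a 4-second one.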

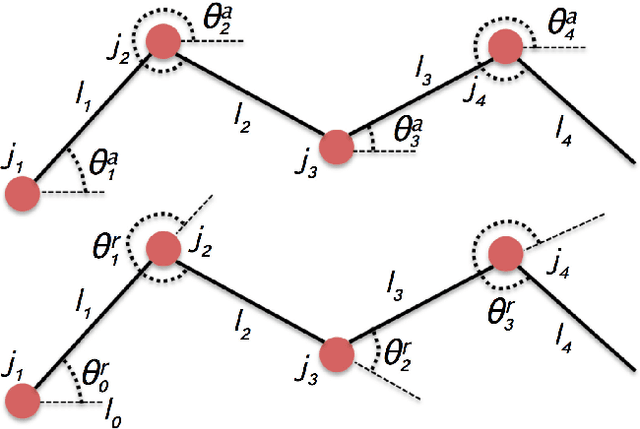

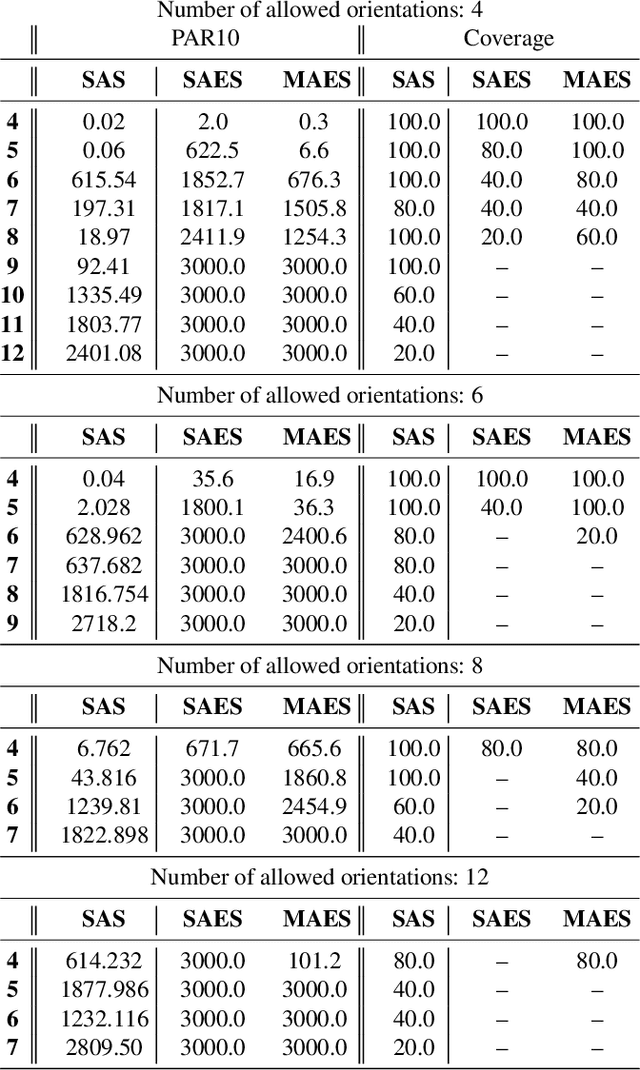

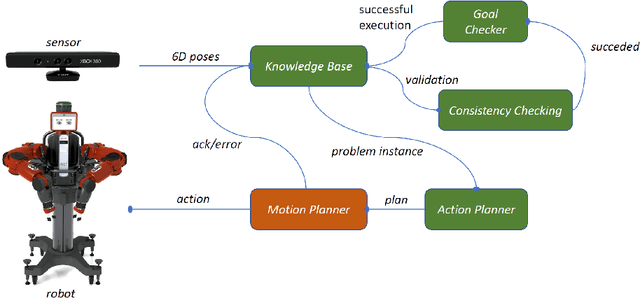

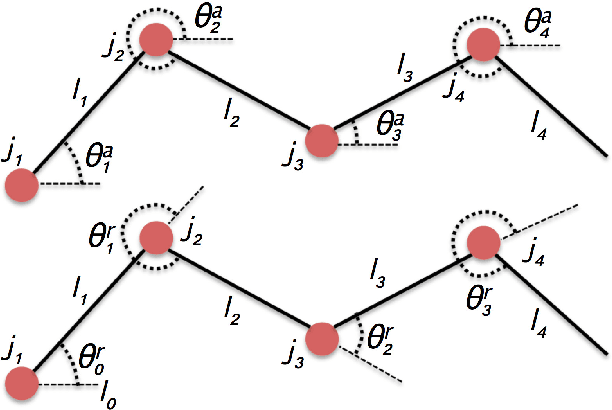

On the manipulation of articulated objects in human-robot cooperation scenarios

Jan 13, 2018

Abstract:Articulated and flexible objects constitute a challenge for robot manipulation tasks but are present in different real-world settings, including home and industrial environments. Current approaches to the manipulation of articulated and flexible objects employ ad hoc strategies to sequence and perform actions on them depending on a number of physical or geometrical characteristics related to those objects, as well as on an a priori classification of target object configurations. In this paper, we propose an action planning and execution framework, which (i) considers abstract representations of articulated or flexible objects, (ii) integrates action planning to reason upon such configurations and to sequence an appropriate set of actions with the aim of obtaining a target configuration provided as a goal, and (iii) is able to cooperate with humans to collaboratively carry out the plan. On the one hand, we show that a trade-off exists between the way articulated or flexible objects are perceived and how the system represents them. Such a trade-off greatly impacts on the complexity of the planning process. On the other hand, we demonstrate the system's capabilities in allowing humans to interrupt robot action execution, and - in general - to contribute to the whole manipulation process. Results related to planning performance are discussed, and examples of a Baxter dual-arm manipulator performing actions collaboratively with humans are shown.
