Giulio Sandini

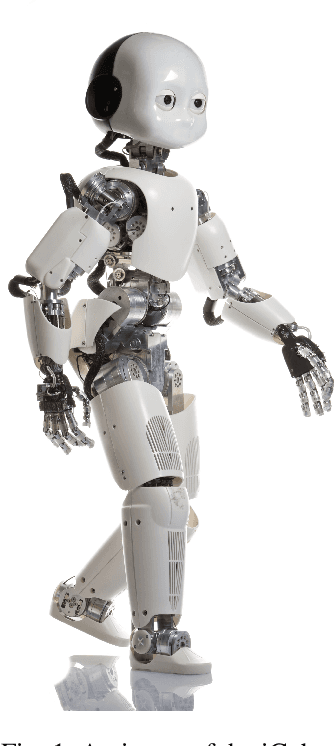

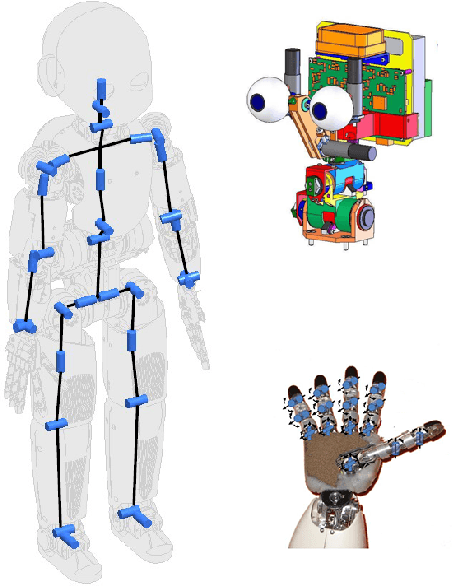

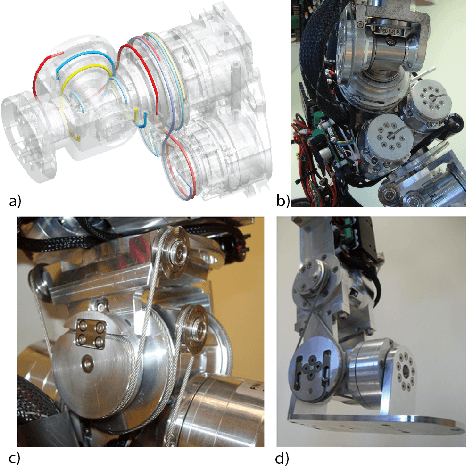

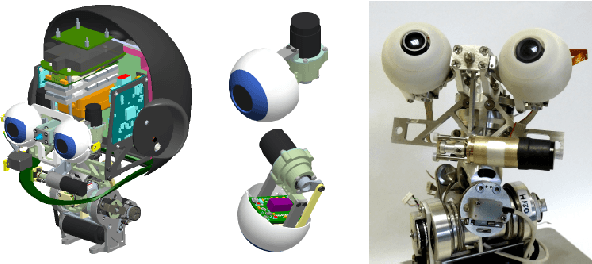

iCub

May 07, 2021

Abstract: In this chapter we describe the history and evolution of the iCub humanoid platform. We start by describing the first version as it was designed during the RobotCub EU project and illustrate how it evolved to become the platform that is adopted by more than 30 laboratories worldwide. We complete the chapter by illustrating some of the research activities that are currently carried out on the iCub robot, i.e., visual perception, event-driven sensing, and dynamic control. We conclude the chapter with a discussion of the lessons we learned and a preview of the upcoming release of the robot, iCub 3.0.

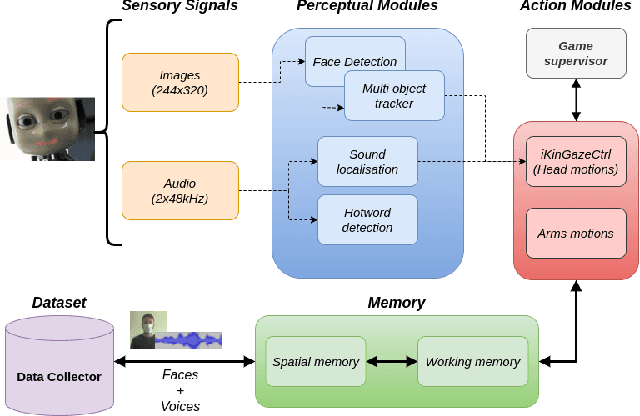

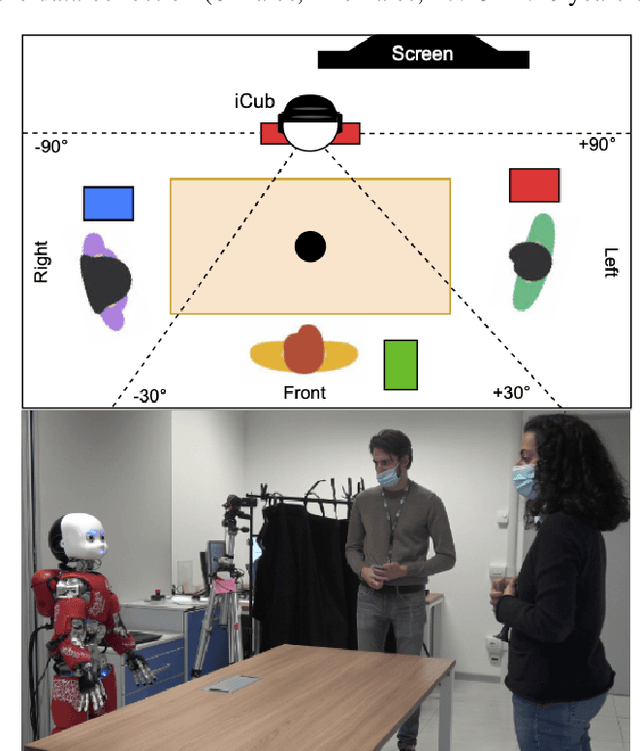

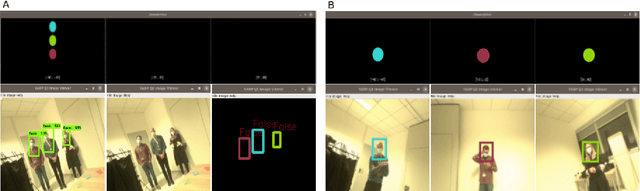

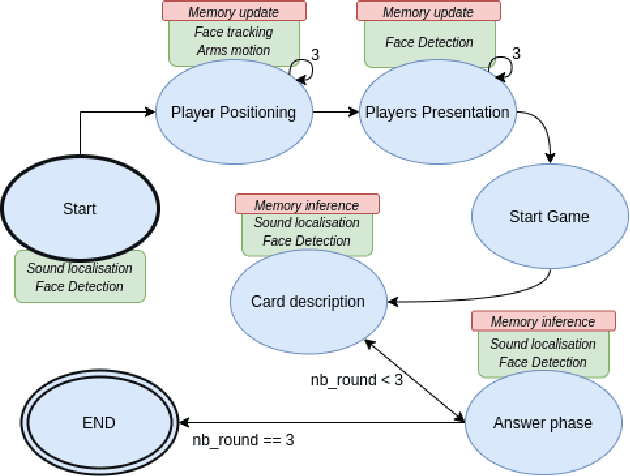

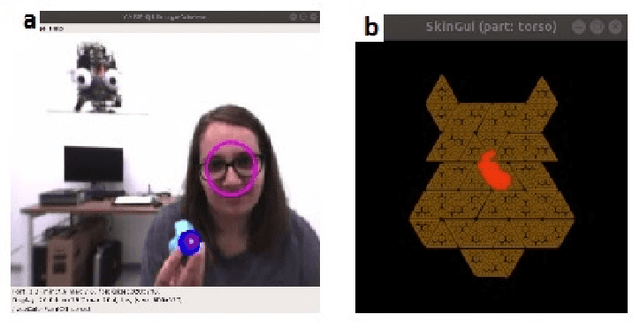

Cognitive architecture aided by working-memory for self-supervised multi-modal humans recognition

Mar 16, 2021

Abstract: The ability to recognize human partners is an important social skill for building personalized and long-term human-robot interactions, especially in scenarios like education, care-giving, and rehabilitation. Faces and voices constitute two important sources of information that enable artificial systems to reliably recognize individuals. Deep learning networks have achieved state-of-the-art results and have proven to be suitable tools for this task. However, when those networks are applied to new scenarios not included in the training set, they can suffer a drop in performance. For example, on robotic platforms operating in ever-changing and realistic environments, where new sensory evidence is continually acquired, the performance of those models degrades. One solution is to make robots learn from their first-hand sensory data with self-supervision. This allows coping with the inherent variability of the data gathered in realistic and interactive contexts. To this aim, we propose a cognitive architecture integrating low-level perceptual processes with a spatial working memory mechanism. The architecture autonomously organizes the robot's sensory experience into a structured dataset suitable for human recognition. Our results demonstrate the effectiveness of our architecture and show that it is a promising solution in the quest to make robots more autonomous in their learning process.

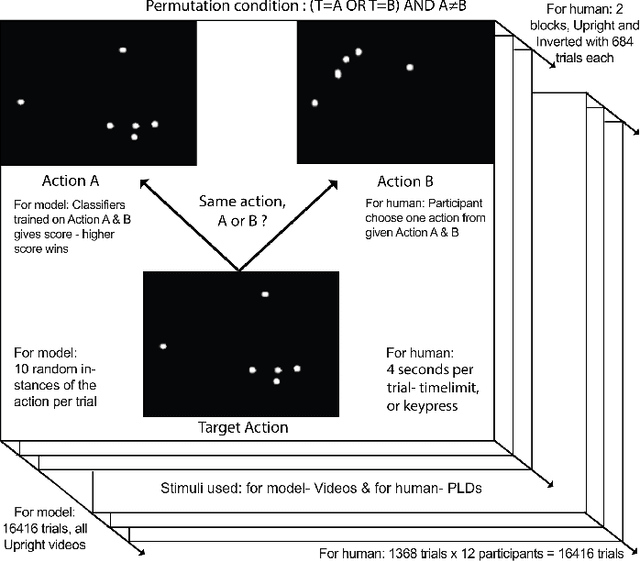

Action similarity judgment based on kinematic primitives

Aug 30, 2020

Abstract: Understanding which features humans rely on in visually recognizing action similarity is a crucial step towards a clearer picture of human action perception from a learning and developmental perspective. In the present work, we investigate to what extent a computational model based on kinematics can determine action similarity and how its performance relates to human similarity judgments of the same actions. To this aim, twelve participants perform an action similarity task, and their performance is compared to that of a computational model solving the same task. The chosen model has its roots in developmental robotics and performs action classification based on learned kinematic primitives. The comparative experiment results show that both the model and human participants can reliably identify whether two actions are the same or not. However, the model produces more false hits and has a greater selection bias than human participants. A possible reason for this is the particular sensitivity of the model towards kinematic primitives of the presented actions. In a second experiment, human participants' performance on an action identification task indicated that they relied solely on kinematic information rather than on action semantics. The results show that both the model and human performance are highly accurate in an action similarity task based on kinematic-level features, which can provide an essential basis for classifying human actions.

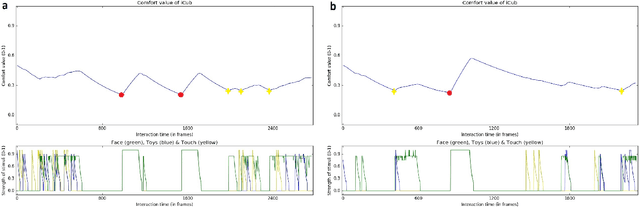

A Socially Adaptable Framework for Human-Robot Interaction

Mar 25, 2020

Abstract: In our everyday lives we are accustomed to partaking in complex, personalized, adaptive interactions with our peers. For a social robot to be able to recreate this same kind of rich, human-like interaction, it should be aware of our needs and affective states and be capable of continuously adapting its behavior to them. One proposed solution to this problem would involve the robot learning how to select the behaviors that would maximize the pleasantness of the interaction for its peers, guided by an internal motivation system that would provide autonomy to its decision-making process. We are interested in studying how an adaptive robotic framework of this kind would function and personalize to different users. In addition, we explore whether including the element of adaptability and personalization in a cognitive framework will bring any additional richness to the human-robot interaction (HRI), or if it will instead bring uncertainty and unpredictability that would not be accepted by the robot's human peers. To this end, we designed a socially-adaptive framework for the humanoid robot iCub which allows it to perceive and reuse the affective and interactive signals from the person as input for the adaptation based on internal social motivation. We propose a comparative interaction study with iCub where users act as the robot's caretaker, and iCub's social adaptation is guided by an internal comfort level that varies with the amount of stimuli iCub receives from its caretaker. We investigate and compare how the internal dynamics of the robot would be perceived by people in a condition where the robot does not personalize its interaction, and in a condition where it is adaptive. Finally, we establish the potential benefits that an adaptive framework could bring to the context of having repeated interactions with a humanoid robot.

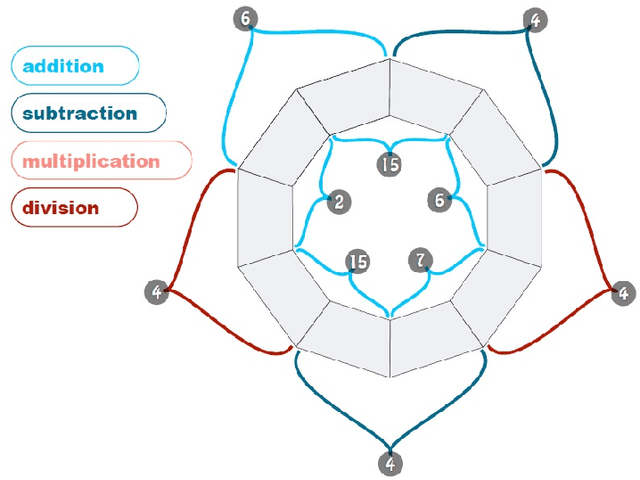

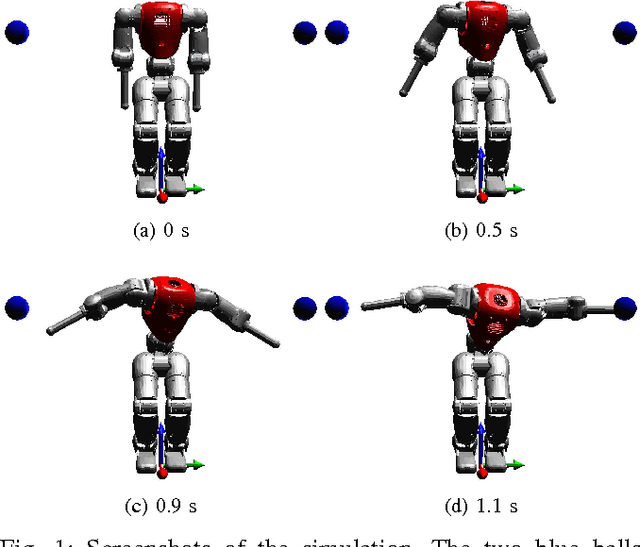

Prioritized Optimal Control

Oct 16, 2014

Abstract: This paper presents a new technique to control highly redundant mechanical systems, such as humanoid robots. We take inspiration from two approaches. Prioritized control is a widespread multi-task technique in robotics and animation: tasks have strict priorities and they are satisfied only as long as they do not conflict with any higher-priority task. Optimal control instead formulates an optimization problem whose solution is either a feedback control policy or a feedforward trajectory of control inputs. We introduce strict priorities in multi-task optimal control problems, as an alternative to weighting task errors proportionally to their importance. This ensures the respect of the specified priorities, while avoiding numerical conditioning issues. We compared our approach with both prioritized control and optimal control with tests on a simulated robot with 11 degrees of freedom.
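
The contrast between strict priorities and weighted task errors can be illustrated on a static toy problem. The sketch below is not the method of the paper: it uses the classical null-space projection scheme for prioritized least squares, applied to linear tasks `A x = b`, and compares it with a single weighted least-squares solve. The function names `prioritized_solve` and `weighted_solve`, the two example tasks, and the weights are all assumptions made for illustration.

```python
import numpy as np

def prioritized_solve(tasks, n):
    """Solve linear tasks A_i x = b_i in strict priority order (first = highest).

    Each task is solved only within the null space of all higher-priority
    tasks, so a lower-priority task can never disturb a higher-priority one.
    """
    x = np.zeros(n)
    N = np.eye(n)  # projector onto the null space of the tasks handled so far
    for A, b in tasks:
        AN = A @ N
        AN_pinv = np.linalg.pinv(AN)
        x = x + N @ AN_pinv @ (b - A @ x)   # correct x without leaving the null space
        N = N @ (np.eye(n) - AN_pinv @ AN)  # shrink the remaining freedom
    return x

def weighted_solve(tasks, weights):
    """Soft alternative: minimize the weighted sum of squared task errors."""
    A = np.vstack([w * A_i for (A_i, _), w in zip(tasks, weights)])
    b = np.concatenate([w * b_i for (_, b_i), w in zip(tasks, weights)])
    return np.linalg.lstsq(A, b, rcond=None)[0]

# Hypothetical example with two conflicting tasks on x = (x0, x1):
#   task 1 (high priority): x0 + x1 = 1
#   task 2 (low priority):  x = (2, 0)
A1, b1 = np.array([[1.0, 1.0]]), np.array([1.0])
A2, b2 = np.eye(2), np.array([2.0, 0.0])
tasks = [(A1, b1), (A2, b2)]

x_prio = prioritized_solve(tasks, n=2)
x_weighted = weighted_solve(tasks, weights=[1.0, 1.0])

print("prioritized:", x_prio, "task-1 error:", abs(A1 @ x_prio - b1)[0])
print("weighted:   ", x_weighted, "task-1 error:", abs(A1 @ x_weighted - b1)[0])
```

In this toy case the prioritized solution satisfies the first task exactly and gets as close as possible to the second, whereas the weighted solution trades error between the two. Driving the first task's error toward zero in the weighted formulation requires a very large weight, which is the kind of numerical conditioning issue the abstract mentions.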
