Lola Cañamero

ETIS, CY, CNRS, ENSEA

What Can Robots Teach Us About Trust and Reliance? An interdisciplinary dialogue between Social Sciences and Social Robotics

Jul 17, 2025

Abstract: As robots find their way into more and more aspects of everyday life, questions around trust are becoming increasingly important. What does it mean to trust a robot? And how should we think about trust in relationships that involve both humans and non-human agents? While the field of Human-Robot Interaction (HRI) has made trust a central topic, the concept is often approached in fragmented ways. At the same time, established work in sociology, where trust has long been a key theme, is rarely brought into conversation with developments in robotics. This article argues that we need a more interdisciplinary approach. By drawing on insights from both social sciences and social robotics, we explore how trust is shaped, tested and made visible. Our goal is to open up a dialogue between disciplines and help build a more grounded and adaptable framework for understanding trust in the evolving world of human-robot interaction.

Human-Robot Mutual Learning through Affective-Linguistic Interaction and Differential Outcomes Training [Pre-Print]

Jul 01, 2024

Abstract: Owing to the recent success of Large Language Models, modern AI has focused largely on linguistic interactions with humans and much less on non-linguistic forms of communication between humans and machines. In the present paper, we test how affective-linguistic communication, in combination with differential outcomes training, affects mutual learning in a human-robot context. Taking inspiration from child-caregiver dynamics, our human-robot interaction setup consists of a (simulated) robot attempting to learn how best to communicate its internal, homeostatically controlled needs, while a human "caregiver" attempts to learn which object correctly satisfies the robot's currently communicated need. We studied the effects of i) human training type and ii) robot reinforcement learning type on the terminal accuracy and rate of mutual learning (as measured by the average reward achieved by the robot). Our results show that mutual learning between a human and a robot is significantly improved with Differential Outcomes Training (DOT) compared to non-DOT (control) conditions. We find further improvements when the robot uses exploration-exploitation policy selection rather than purely exploitative policy selection. These findings have implications for the use of socially assistive robots (SAR) in therapeutic contexts, e.g. for cognitive interventions, and in educational applications.
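The abstract contrasts exploration-exploitation policy selection with purely exploitative selection but does not say which policy the robot uses. The sketch below is illustrative only. It uses epsilon-greedy selection, one common exploration-exploitation scheme, over a hypothetical set of symbols the robot could use to signal a need. The names `select_symbol`, `update` and `run`, the step size `alpha`, the epsilon values and the simulated caregiver's response probabilities are all assumptions, not the paper's implementation.

```python
import random

def select_symbol(q_values, epsilon, rng):
    """Epsilon-greedy selection (assumed policy, for illustration).

    With probability epsilon, pick a random symbol (explore); otherwise
    pick the highest-valued symbol (exploit). epsilon = 0 gives purely
    exploitative (greedy) selection.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    best = max(q_values)
    # Break ties uniformly at random among the best-valued symbols.
    return rng.choice([i for i, q in enumerate(q_values) if q == best])

def update(q_values, symbol, reward, alpha=0.1):
    """Move the chosen symbol's value estimate toward the observed reward."""
    q_values[symbol] += alpha * (reward - q_values[symbol])

def run(epsilon, true_reward_prob, steps=2000, seed=0):
    """Simulate a hypothetical caregiver who responds correctly to each
    symbol with a fixed probability; return the robot's average reward."""
    rng = random.Random(seed)
    q = [0.0] * len(true_reward_prob)
    total = 0.0
    for _ in range(steps):
        s = select_symbol(q, epsilon, rng)
        r = 1.0 if rng.random() < true_reward_prob[s] else 0.0
        update(q, s, r)
        total += r
    return total / steps

if __name__ == "__main__":
    probs = [0.2, 0.5, 0.8]  # hypothetical caregiver response rates
    print("exploration-exploitation:", run(0.1, probs))
    print("pure exploitation:       ", run(0.0, probs))
```

With `epsilon = 0.0`, the robot can lock onto whichever symbol first earns a reward, even if that symbol is a poorer one. A small amount of exploration lets the value estimates of the other symbols keep improving.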

A Human-Robot Mutual Learning System with Affect-Grounded Language Acquisition and Differential Outcomes Training

Oct 20, 2023

Abstract: This paper presents a novel human-robot interaction setup in which a robot and a human learn a symbolic language for identifying the robot's homeostatic needs. The robot and the human learn, respectively, to use and to respond to the same language symbols, which convey homeostatic needs and the stimuli that satisfy those needs. We adopted a differential outcomes training (DOT) protocol in which the robot provides feedback specific (differential) to each internal need (e.g. 'hunger') when that need is satisfied by the correct stimulus (e.g. a cookie). We found evidence that DOT can enhance the human's learning efficiency, which in turn enables more efficient robot language acquisition. The robot used in the study has a vocabulary similar to that of a human infant in the linguistic "babbling" phase. The robot software architecture is built upon a model for affect-grounded language acquisition in which the robot associates vocabulary with internal needs (hunger, thirst, curiosity) through interactions with the human. The paper presents the results of an initial pilot study conducted with the interactive setup, which reveal that the robot's language acquisition achieves a higher convergence rate in the DOT condition than in the non-DOT control condition. Additionally, participants reported positive affective experiences, a feeling of being in control, and an empathetic connection with the robot. This mutual learning (teacher-student learning) approach could help facilitate cognitive interventions with DOT (e.g. for people with dementia) by increasing therapy adherence, since humans engage more in training tasks when they take on an active teaching-learning role. The homeostatic motivational grounding of the robot's language acquisition has the potential to contribute to more ecologically valid and social (collaborative/nurturing) interactions with robots.

A Socially Adaptable Framework for Human-Robot Interaction

Mar 25, 2020

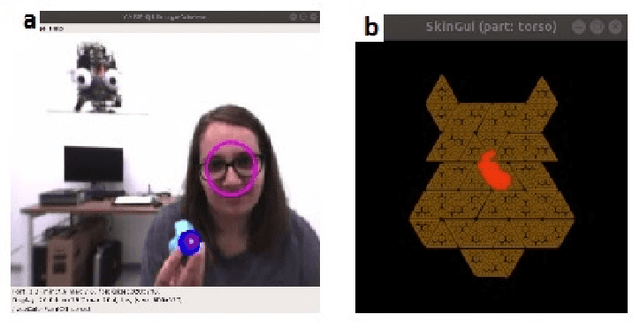

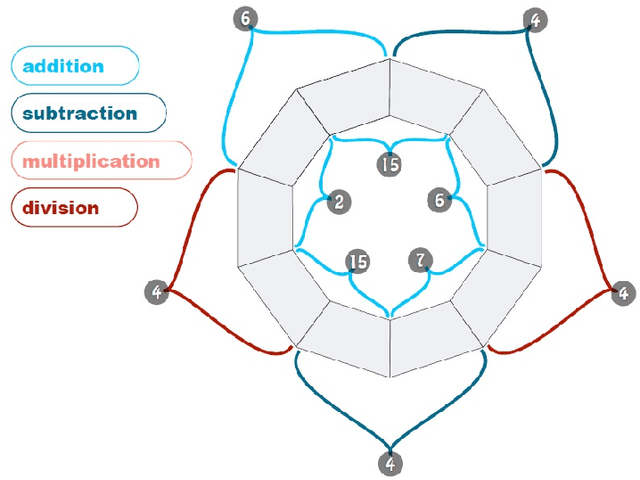

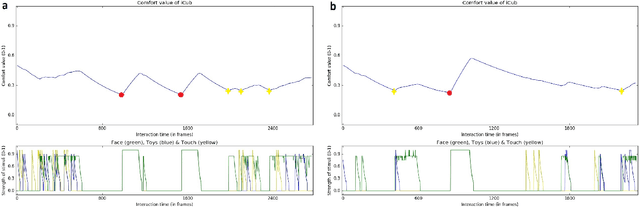

Abstract: In our everyday lives we are accustomed to taking part in complex, personalized, adaptive interactions with our peers. For a social robot to recreate this kind of rich, human-like interaction, it should be aware of our needs and affective states and be capable of continuously adapting its behavior to them. One proposed solution would be for the robot to learn to select the behaviors that maximize the pleasantness of the interaction for its peers, guided by an internal motivation system that provides autonomy to its decision-making process. We are interested in studying how an adaptive robotic framework of this kind would function and personalize to different users. In addition, we explore whether including adaptability and personalization in a cognitive framework brings additional richness to human-robot interaction (HRI), or instead introduces uncertainty and unpredictability that the robot's human peers would not accept. To this end, we designed a socially adaptive framework for the humanoid robot iCub that allows it to perceive and reuse the affective and interactive signals from the person as input for adaptation based on internal social motivation. We propose a comparative interaction study with iCub in which users act as the robot's caretaker, and iCub's social adaptation is guided by an internal comfort level that varies with the amount of stimuli iCub receives from its caretaker. We investigate and compare how people perceive the robot's internal dynamics in a condition where the robot does not personalize its interaction and in a condition where it is adaptive. Finally, we establish the potential benefits that an adaptive framework could bring to repeated interactions with a humanoid robot.
