Lorenzo Natale

Gaussian-Augmented Physics Simulation and System Identification with Complex Colliders

Nov 10, 2025

Abstract: System identification involving the geometry, appearance, and physical properties from video observations is a challenging task with applications in robotics and graphics. Recent approaches have relied on fully differentiable Material Point Method (MPM) and rendering for simultaneous optimization of these properties. However, they are limited to simplified object-environment interactions with planar colliders and fail in more challenging scenarios where objects collide with non-planar surfaces. We propose AS-DiffMPM, a differentiable MPM framework that enables physical property estimation with arbitrarily shaped colliders. Our approach extends existing methods by incorporating a differentiable collision handling mechanism, allowing the target object to interact with complex rigid bodies while maintaining end-to-end optimization. We show AS-DiffMPM can be easily interfaced with various novel view synthesis methods as a framework for system identification from visual observations.

6-DoF Object Tracking with Event-based Optical Flow and Frames

Aug 20, 2025

Abstract: Tracking the position and orientation of objects in space (i.e., in 6-DoF) in real time is a fundamental problem in robotics for environment interaction. It becomes more challenging when objects move at high speed, due to the frame rate limitations of conventional cameras and motion blur. Event cameras are characterized by high temporal resolution, low latency and high dynamic range, which can potentially overcome the effects of motion blur. Traditional RGB cameras provide rich visual information that is more suitable for the challenging task of single-shot object pose estimation. In this work, we propose using event-based optical flow combined with an RGB-based global object pose estimator for 6-DoF pose tracking of objects at high speed, exploiting the core advantages of both types of vision sensors. Specifically, we propose an event-based optical flow algorithm for object motion measurement to implement an object 6-DoF velocity tracker. By integrating the tracked object 6-DoF velocity with the low-frequency pose estimated by the global pose estimator, the method can track pose when objects move at high speed. The proposed algorithm is tested and validated on both synthetic and real-world data, demonstrating its effectiveness, especially in high-speed motion scenarios.

PCHands: PCA-based Hand Pose Synergy Representation on Manipulators with N-DoF

Aug 11, 2025

Abstract: We consider the problem of learning a common representation for dexterous manipulation across manipulators of different morphologies. To this end, we propose PCHands, a novel approach for extracting hand postural synergies from a large set of manipulators. We define a simplified and unified description format based on anchor positions for manipulators ranging from 2-finger grippers to 5-finger anthropomorphic hands. This enables learning a variable-length latent representation of the manipulator configuration and the alignment of the end-effector frame of all manipulators. We show that it is possible to extract principal components from this latent representation that are universal across manipulators of different structures and degrees of freedom. To evaluate PCHands, we use this compact representation to encode observation and action spaces of control policies for dexterous manipulation tasks learned with RL. In terms of learning efficiency and consistency, the proposed representation outperforms a baseline that learns the same tasks in joint space. We additionally show that PCHands performs robustly in RL from demonstration, when demonstrations are provided from a different manipulator. We further support our results with real-world experiments that involve a 2-finger gripper and a 4-finger anthropomorphic hand. Code and additional material are available at https://hsp-iit.github.io/PCHands/.
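As a rough illustration of what extracting principal components from a set of configurations involves, the sketch below computes the dominant principal component of a toy data set and uses it as a one-dimensional "synergy" coordinate. It is not the PCHands implementation: the three-joint configurations are made up, the function names are hypothetical, power iteration stands in for a full eigendecomposition, and the sketch works on raw vectors rather than on the learned latent representation described in the abstract.

```python
import math

def first_principal_component(rows, iterations=200):
    """Return (mean, unit direction) of the dominant principal component."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - mean[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeated multiplication by the covariance matrix
    # converges to its leading eigenvector, provided the start vector is
    # not orthogonal to it (true for the toy data below).
    v = [1.0] + [0.0] * (d - 1)
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return mean, v

def encode(row, mean, v):
    """Project a configuration onto the component (scalar coordinate)."""
    return sum((x - m) * c for x, m, c in zip(row, mean, v))

def decode(z, mean, v):
    """Map a scalar coordinate back to a full configuration."""
    return [m + z * c for m, c in zip(mean, v)]

# Hypothetical 3-joint hand whose joints flex together in a 1:2:2 ratio.
configs = [[t, 2 * t, 2 * t] for t in (0.0, 0.25, 0.5, 0.75, 1.0)]
mean, direction = first_principal_component(configs)
z = encode([1.0, 2.0, 2.0], mean, direction)
reconstructed = decode(z, mean, direction)
```

Because the toy joints move in a fixed ratio, a single component reconstructs every configuration; real manipulators would generally need several components and some reconstruction error would remain.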

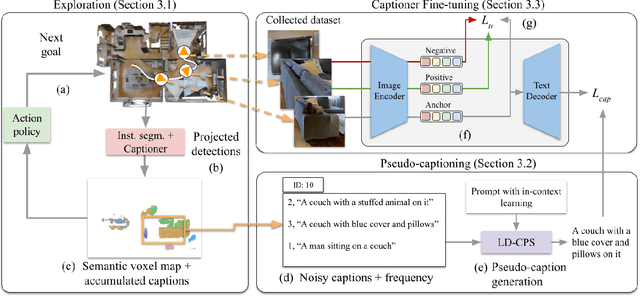

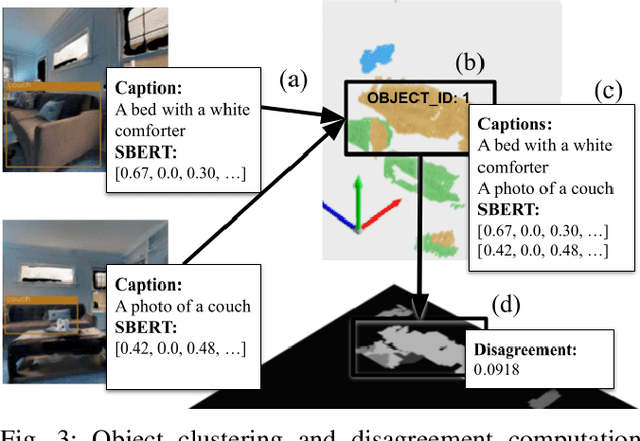

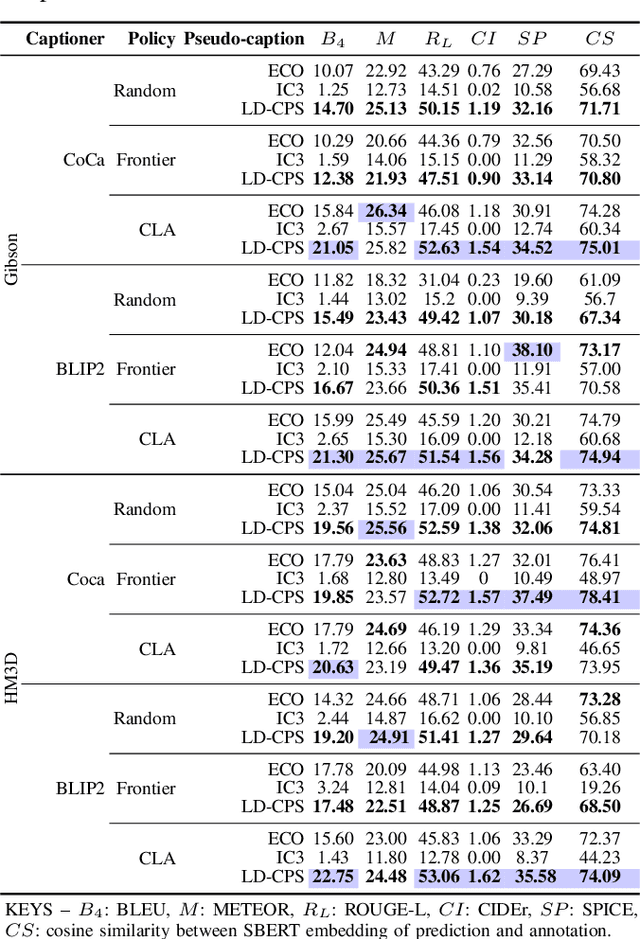

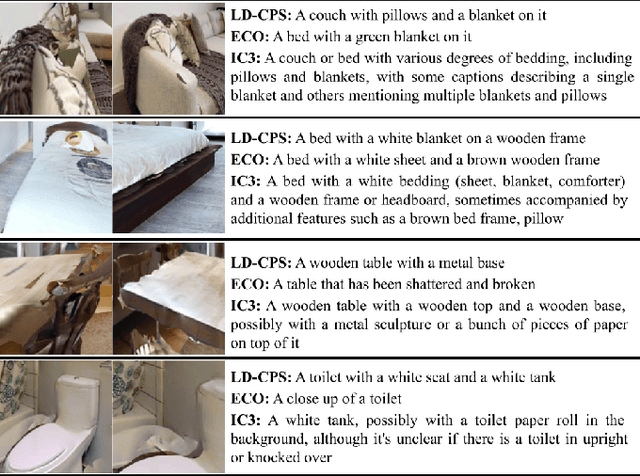

Embodied Image Captioning: Self-supervised Learning Agents for Spatially Coherent Image Descriptions

Apr 11, 2025

Abstract: We present a self-supervised method to improve an agent's abilities in describing arbitrary objects while actively exploring a generic environment. This is a challenging problem, as current models struggle to obtain coherent image captions due to different camera viewpoints and clutter. We propose a three-phase framework to fine-tune existing captioning models that enhances caption accuracy and consistency across views via a consensus mechanism. First, an agent explores the environment, collecting noisy image-caption pairs. Then, a consistent pseudo-caption for each object instance is distilled via consensus using a large language model. Finally, these pseudo-captions are used to fine-tune an off-the-shelf captioning model, with the addition of contrastive learning. We analyse the performance of the combination of captioning models, exploration policies, pseudo-labeling methods, and fine-tuning strategies, on our manually labeled test set. Results show that a policy can be trained to mine samples with higher disagreement compared to classical baselines. Our pseudo-captioning method, in combination with all policies, has a higher semantic similarity compared to other existing methods, and fine-tuning improves caption accuracy and consistency by a significant margin. Code and test set annotations are available at https://hsp-iit.github.io/embodied-captioning/

Continuous Wrist Control on the Hannes Prosthesis: a Vision-based Shared Autonomy Framework

Feb 24, 2025

Abstract: Most control techniques for prosthetic grasping focus on dexterous finger control, but overlook the wrist motion. This forces the user to perform compensatory movements with the elbow, shoulder and hip to adapt the wrist for grasping. We propose a computer vision-based system that leverages the collaboration between the user and an automatic system in a shared autonomy framework, to perform continuous control of the wrist degrees of freedom in a prosthetic arm, promoting a more natural approach-to-grasp motion. Our pipeline allows seamless control of the prosthetic wrist, which follows the target object and is finally oriented for grasping according to the user's intent. We assess the effectiveness of each system component through quantitative analysis and finally deploy our method on the Hannes prosthetic arm. Code and videos: https://hsp-iit.github.io/hannes-wrist-control.

Gaze estimation learning architecture as support to affective, social and cognitive studies in natural human-robot interaction

Oct 25, 2024

Abstract: Gaze is a crucial social cue in any interaction scenario and drives many mechanisms of social cognition (joint and shared attention, predicting human intention, coordination tasks). Gaze direction is an indication of social and emotional functions affecting the way emotions are perceived. Evidence shows that embodied humanoid robots endowed with social abilities can be seen as sophisticated stimuli to unravel many mechanisms of human social cognition while increasing engagement and ecological validity. In this context, building a robotic perception system that automatically estimates human gaze relying only on the robot's sensors is still demanding. The main goal of the paper is to propose a learning robotic architecture that estimates the human gaze direction in table-top scenarios without any external hardware. Table-top tasks are widely used in experimental psychology studies because they are suitable for implementing numerous scenarios that allow agents to collaborate while maintaining a face-to-face interaction. Such an architecture can provide valuable support in studies where external hardware might represent an obstacle to spontaneous human behaviour, especially in environments less controlled than the laboratory (e.g., in clinical settings). A novel dataset was also collected with the humanoid robot iCub, including images annotated from 24 participants in different gaze conditions.

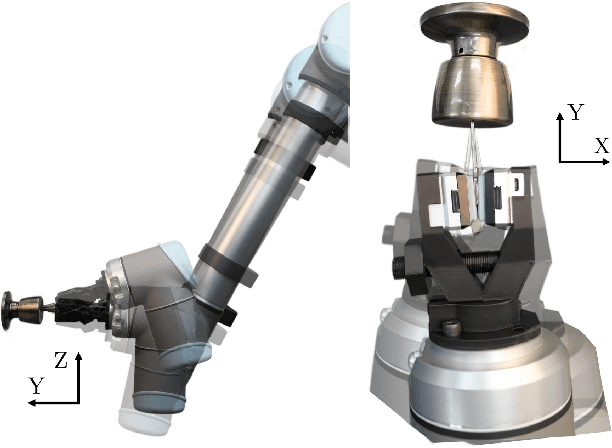

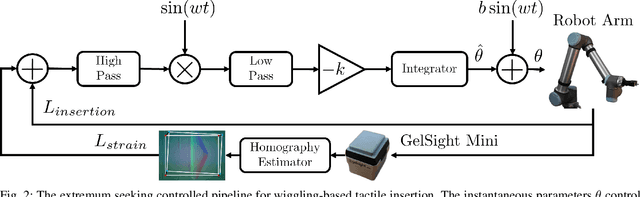

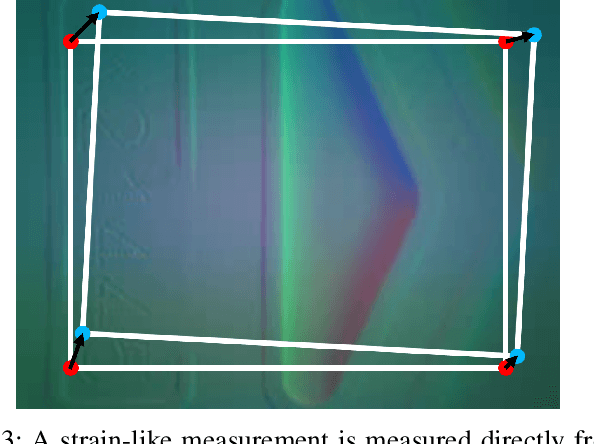

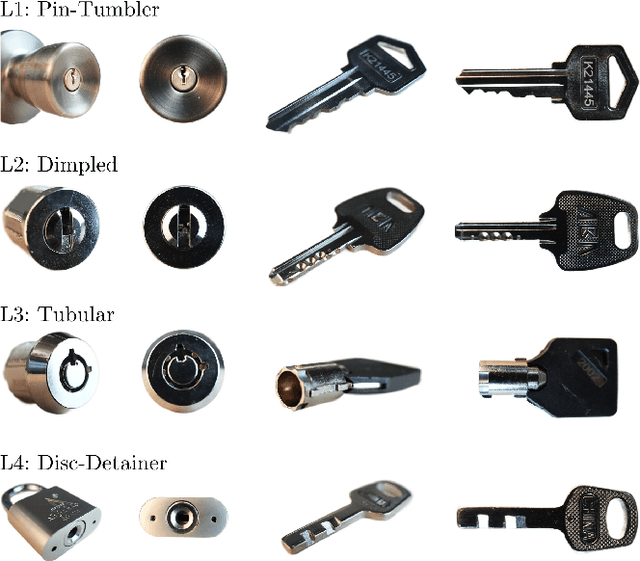

Extremum Seeking Controlled Wiggling for Tactile Insertion

Oct 03, 2024

Abstract: When humans perform insertion tasks such as inserting a cup into a cupboard, routing a cable, or key insertion, they wiggle the object and observe the process through tactile and proprioceptive feedback. While recent advances in tactile sensors have resulted in tactile-based approaches, there has not been a generalized formulation based on wiggling similar to human behavior. Thus, we propose an extremum-seeking control law that can insert four keys into four types of locks without control parameter tuning despite significant variation in lock type. The resulting model-free formulation wiggles the end effector pose to maximize insertion depth while minimizing strain as measured by a GelSight Mini tactile sensor that grasps a key. The algorithm achieves a 71% success rate over 120 randomly initialized trials with uncertainty in both translation and orientation. Over 240 deterministically initialized trials, where only one translation or rotation parameter is perturbed, 84% of trials succeeded. Given tactile feedback at 13 Hz, the mean insertion times for these groups of trials are 262 and 147 seconds, respectively.
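To give a feel for how a model-free extremum-seeking law "wiggles" a parameter toward an optimum, here is a minimal sketch of the textbook perturbation-based scheme on a scalar parameter. It is not the paper's controller: the quadratic objective stands in for the insertion-depth and strain measurements, and the function name, gains, dither amplitude, and frequencies are all assumptions chosen only so that the toy example converges.

```python
import math

def extremum_seek(objective, theta0, steps=20000, dt=0.01,
                  amplitude=0.1, omega=10.0, gain=1.0, hp_cutoff=1.0):
    """Climb toward a maximum of `objective` using only its measured value."""
    theta_hat = theta0            # current estimate of the best parameter
    y_lp = objective(theta0)      # low-pass state, used to form a high-pass
    for i in range(steps):
        t = i * dt
        # Wiggle: probe the objective around the current estimate.
        dither = amplitude * math.sin(omega * t)
        y = objective(theta_hat + dither)
        # High-pass filter the measurement to remove its slowly varying part.
        y_lp += hp_cutoff * (y - y_lp) * dt
        y_hp = y - y_lp
        # Demodulate: the product correlates with the local gradient, so
        # integrating it moves the estimate uphill on average.
        theta_hat += gain * y_hp * math.sin(omega * t) * dt
    return theta_hat

# Hypothetical objective with its maximum at theta = 2 (unknown to the law).
def toy_objective(theta):
    return -(theta - 2.0) ** 2

estimate = extremum_seek(toy_objective, theta0=0.0)
```

The law never evaluates a gradient or a model of the objective; it only correlates the measured response with its own dither, which is what makes this family of controllers attractive when contact dynamics are hard to model.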

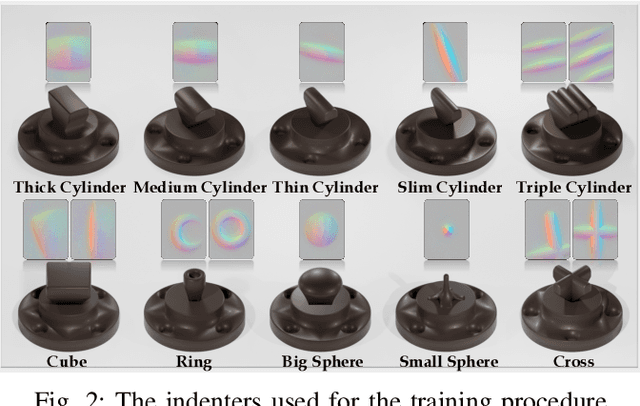

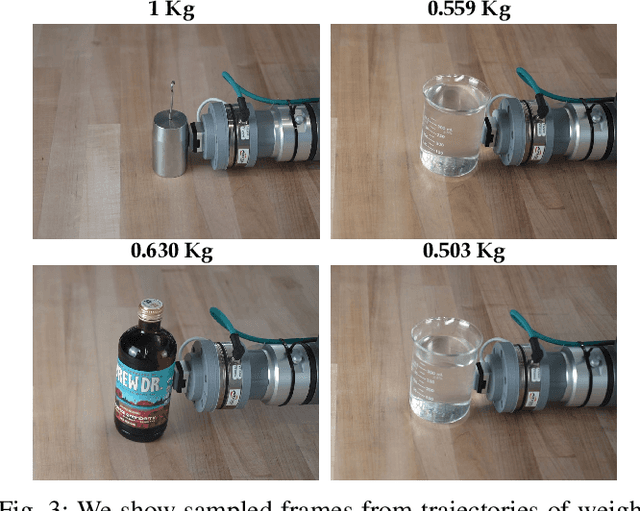

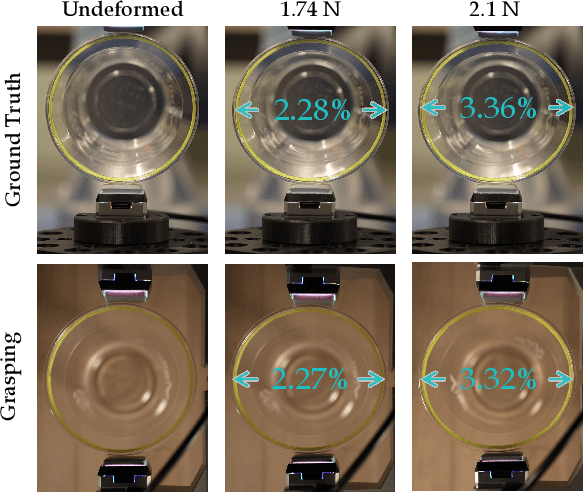

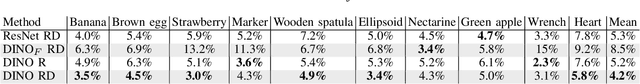

FeelAnyForce: Estimating Contact Force Feedback from Tactile Sensation for Vision-Based Tactile Sensors

Oct 02, 2024

Abstract: In this paper, we tackle the problem of estimating 3D contact forces using vision-based tactile sensors. In particular, our goal is to estimate contact forces over a large range (up to 15 N) on any object while generalizing across different vision-based tactile sensors. Thus, we collected a dataset of over 200K indentations using a robotic arm that pressed various indenters onto a GelSight Mini sensor mounted on a force sensor and then used the data to train a multi-head transformer for force regression. Strong generalization is achieved via accurate data collection and multi-objective optimization that leverages depth contact images. Despite being trained only on primitive shapes and textures, the regressor achieves a mean absolute error of 4% on a dataset of unseen real-world objects. We further evaluate our approach's generalization capability to other GelSight Mini and DIGIT sensors, and propose a reproducible calibration procedure for adapting the pre-trained model to other vision-based sensors. Furthermore, the method was evaluated on real-world tasks, including weighing objects and controlling the deformation of delicate objects, which rely on accurate force feedback. Project webpage: http://prg.cs.umd.edu/FeelAnyForce

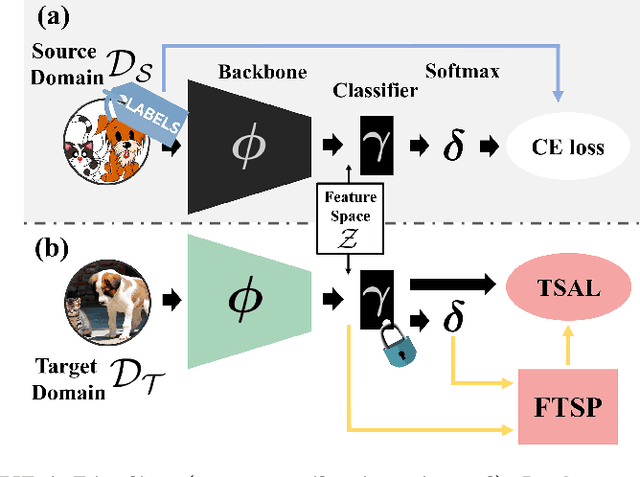

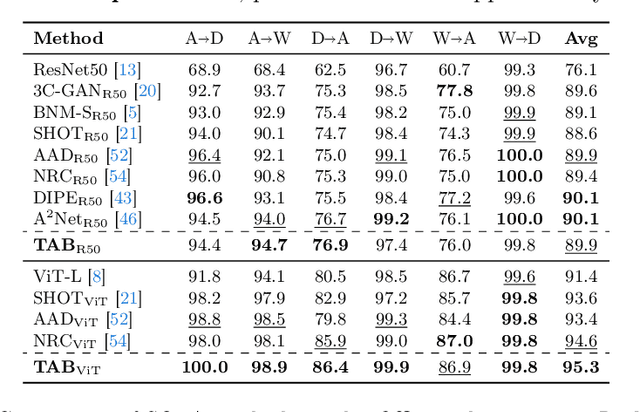

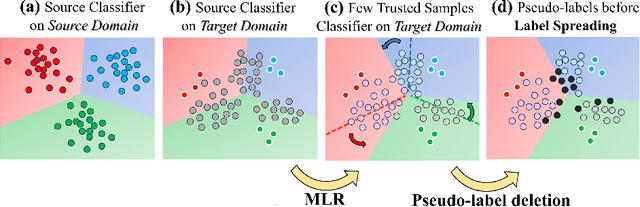

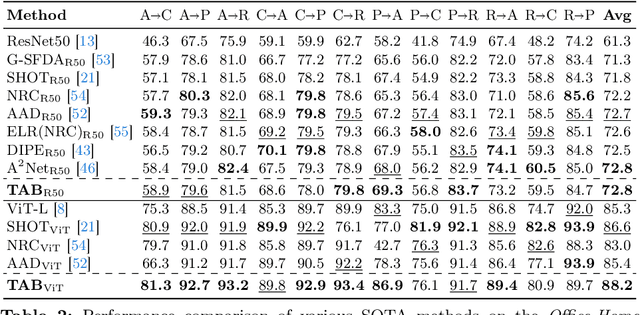

Trust And Balance: Few Trusted Samples Pseudo-Labeling and Temperature Scaled Loss for Effective Source-Free Unsupervised Domain Adaptation

Sep 01, 2024

Abstract: Deep Neural Networks have significantly impacted many computer vision tasks. However, their effectiveness diminishes when the test data distribution (target domain) deviates from that of the training data (source domain). In situations where target labels are unavailable and access to the labeled source domain is restricted due to data privacy or memory constraints, Source-Free Unsupervised Domain Adaptation (SF-UDA) has emerged as a valuable tool. Recognizing the key role of SF-UDA under these constraints, we introduce a novel approach marked by two key contributions: Few Trusted Samples Pseudo-labeling (FTSP) and Temperature Scaled Adaptive Loss (TSAL). FTSP employs a limited subset of trusted samples from the target data to construct a classifier to infer pseudo-labels for the entire domain, showing simplicity and improved accuracy. Simultaneously, TSAL, designed with a unique dual temperature scheduling, adeptly balances diversity, discriminability, and the incorporation of pseudo-labels in the unsupervised adaptation objective. Our methodology, which we name Trust And Balance (TAB) adaptation, is rigorously evaluated on standard datasets like Office31 and Office-Home, and on less common benchmarks such as ImageCLEF-DA and Adaptiope, employing both ResNet50 and ViT-Large architectures. Our results compare favorably with, and in most cases surpass, contemporary state-of-the-art techniques, underscoring the effectiveness of our methodology in the SF-UDA landscape.

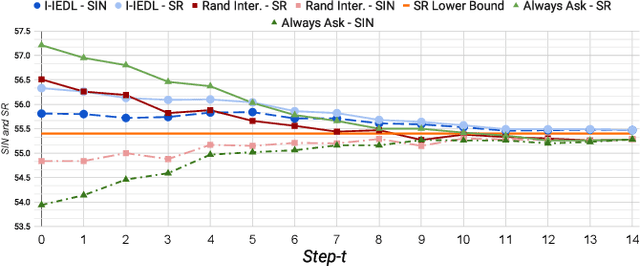

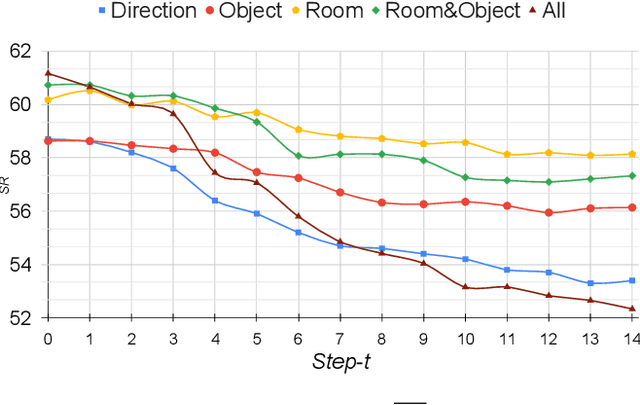

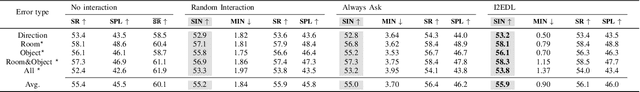

I2EDL: Interactive Instruction Error Detection and Localization

Jun 07, 2024

Abstract: In the Vision-and-Language Navigation in Continuous Environments (VLN-CE) task, the human user guides an autonomous agent to reach a target goal via a series of low-level actions following a textual instruction in natural language. However, most existing methods do not address the likely case where users may make mistakes when providing such an instruction (e.g. "turn left" instead of "turn right"). In this work, we address a novel task of Interactive VLN in Continuous Environments (IVLN-CE), which allows the agent to interact with the user during the VLN-CE navigation to resolve any doubts regarding instruction errors. We propose an Interactive Instruction Error Detector and Localizer (I2EDL) that triggers the user-agent interaction upon the detection of instruction errors during the navigation. We leverage a pre-trained module to detect instruction errors and pinpoint them in the instruction by cross-referencing the textual input and past observations. In this way, the agent is able to query the user for a timely correction without adding to the user's cognitive load, as we localize the probable errors to a precise part of the instruction. We evaluate the proposed I2EDL on a dataset of instructions containing errors, and further devise a novel metric, the Success weighted by Interaction Number (SIN), to reflect both the navigation performance and the interaction effectiveness. We show how the proposed method can make focused requests for corrections to the user, which in turn increases the navigation success, while minimizing the interactions.
