Amir-Hossein Shahidzadeh

FeelAnyForce: Estimating Contact Force Feedback from Tactile Sensation for Vision-Based Tactile Sensors

Oct 02, 2024

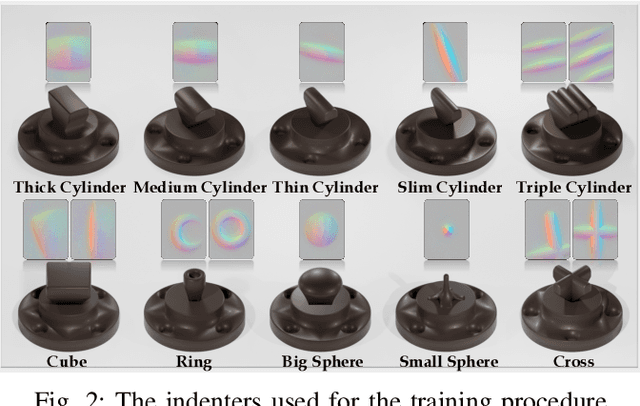

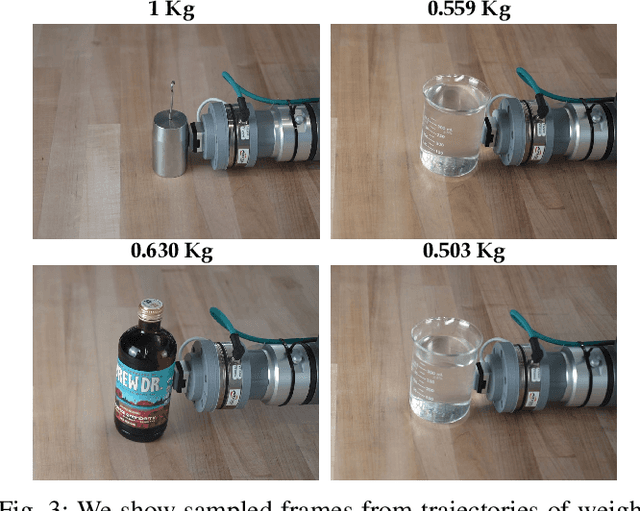

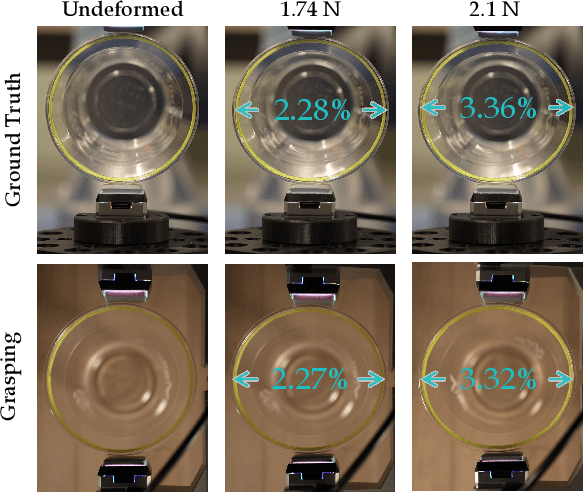

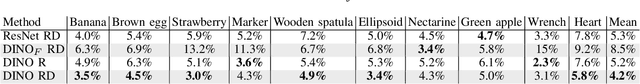

Abstract:In this paper, we tackle the problem of estimating 3D contact forces using vision-based tactile sensors. In particular, our goal is to estimate contact forces over a large range (up to 15 N) on any objects while generalizing across different vision-based tactile sensors. Thus, we collected a dataset of over 200K indentations using a robotic arm that pressed various indenters onto a GelSight Mini sensor mounted on a force sensor and then used the data to train a multi-head transformer for force regression. Strong generalization is achieved via accurate data collection and multi-objective optimization that leverages depth contact images. Despite being trained only on primitive shapes and textures, the regressor achieves a mean absolute error of 4\% on a dataset of unseen real-world objects. We further evaluate our approach's generalization capability to other GelSight mini and DIGIT sensors, and propose a reproducible calibration procedure for adapting the pre-trained model to other vision-based sensors. Furthermore, the method was evaluated on real-world tasks, including weighing objects and controlling the deformation of delicate objects, which relies on accurate force feedback. Project webpage: http://prg.cs.umd.edu/FeelAnyForce

AcTExplore: Active Tactile Exploration on Unknown Objects

Oct 18, 2023Abstract:Tactile exploration plays a crucial role in understanding object structures for fundamental robotics tasks such as grasping and manipulation. However, efficiently exploring such objects using tactile sensors is challenging, primarily due to the large-scale unknown environments and limited sensing coverage of these sensors. To this end, we present AcTExplore, an active tactile exploration method driven by reinforcement learning for object reconstruction at scales that automatically explores the object surfaces in a limited number of steps. Through sufficient exploration, our algorithm incrementally collects tactile data and reconstructs 3D shapes of the objects as well, which can serve as a representation for higher-level downstream tasks. Our method achieves an average of 95.97% IoU coverage on unseen YCB objects while just being trained on primitive shapes. Project Webpage: https://prg.cs.umd$.$edu/AcTExplore

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge