Alan F. T. Winfield

On the relationship between Benchmarking, Standards and Certification in Robotics and AI

Sep 21, 2023

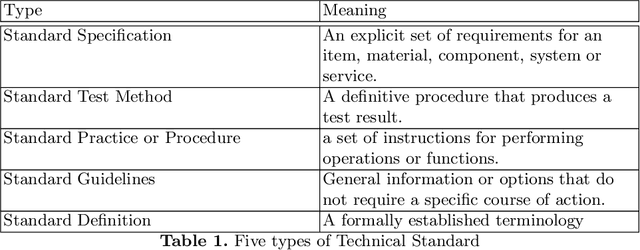

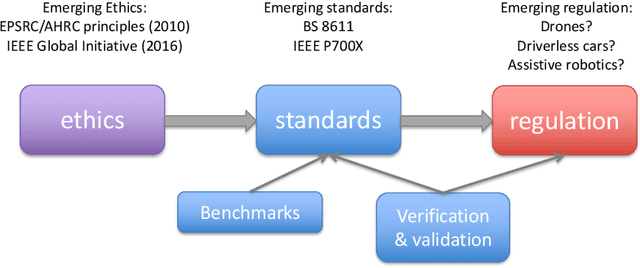

Abstract: Benchmarking, standards and certification are closely related processes. Standards can provide normative requirements that robotics and AI systems may or may not conform to. Certification generally relies upon conformance with one or more standards as the key determinant of granting a certificate to operate. And benchmarks are sets of standardised tests against which robots and AI systems can be measured. Benchmarks therefore can be thought of as informal standards. In this paper we will develop these themes with examples from benchmarking, standards and certification, and argue that these three linked processes are not only useful but vital to the broader practice of Responsible Innovation.

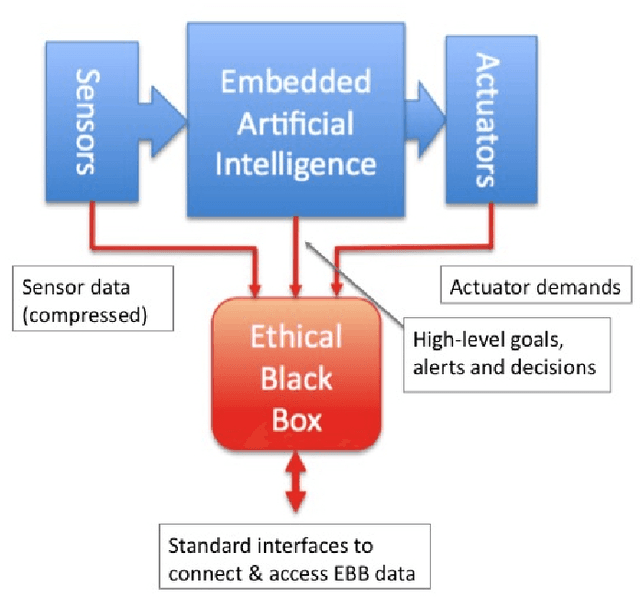

An Ethical Black Box for Social Robots: a draft Open Standard

May 13, 2022

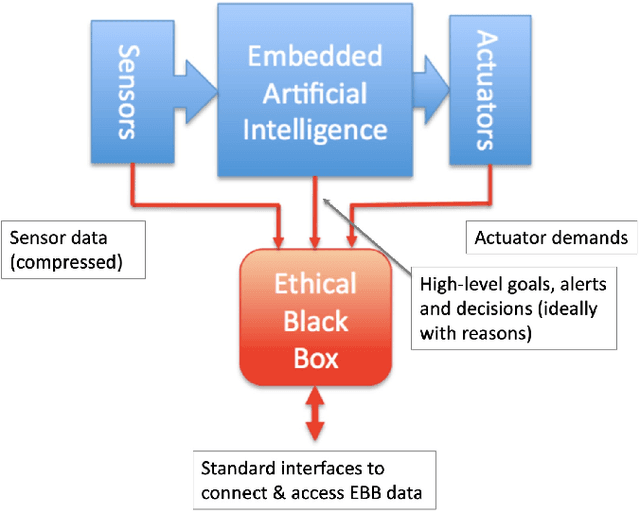

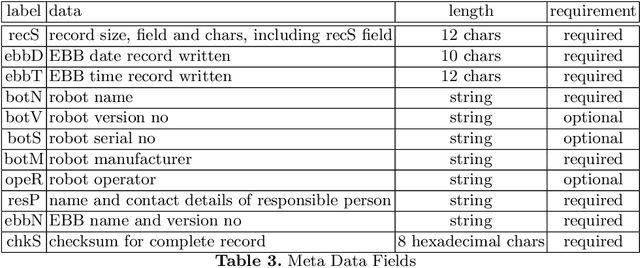

Abstract: This paper introduces a draft open standard for the robot equivalent of an aircraft flight data recorder, which we call an ethical black box. This is a device, or software module, capable of securely recording operational data (sensor, actuator and control decisions) for a social robot, in order to support the investigation of accidents or near-miss incidents. The open standard, presented as an annex to this paper, is offered as a first draft for discussion within the robot ethics community. Our intention is to publish further drafts following feedback, in the hope that the standard will become a useful reference for social robot designers, operators and robot accident/incident investigators.
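
As a rough illustration of what "securely recording operational data" could look like in software, the sketch below keeps an append-only log in which each record carries the hash of the previous one, so later tampering can be detected. This is not the draft standard's format: the class name `EthicalBlackBox`, the record fields and the use of a SHA-256 hash chain are all assumptions made for this example.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body):
    """SHA-256 of a record body, serialised with sorted keys for stability."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class EthicalBlackBox:
    """Hypothetical append-only recorder; each record links to the previous one."""
    records: list = field(default_factory=list)

    def append(self, timestamp, sensors, actuators, decision):
        # The first record links to a fixed all-zero hash.
        prev_hash = self.records[-1]["hash"] if self.records else "0" * 64
        body = {
            "timestamp": timestamp,
            "sensors": sensors,
            "actuators": actuators,
            "decision": decision,
            "prev_hash": prev_hash,
        }
        record = dict(body, hash=_digest(body))
        self.records.append(record)
        return record

    def verify(self):
        """Return True only if every record's hash and back-link are intact."""
        prev_hash = "0" * 64
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
                return False
            prev_hash = record["hash"]
        return True
```

An investigator could call `verify()` before relying on the log. A hash chain only detects modification; it does not prevent deletion of the whole log or provide confidentiality, which a real recorder would also need to address.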

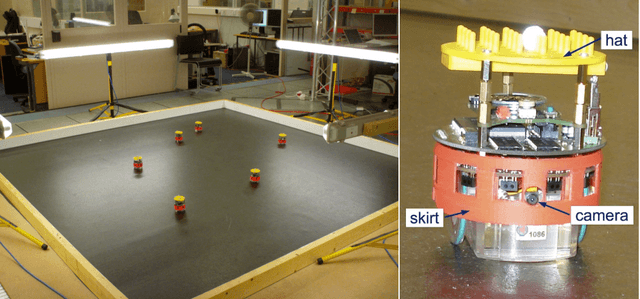

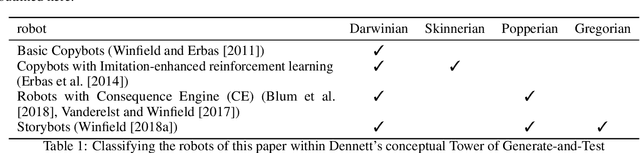

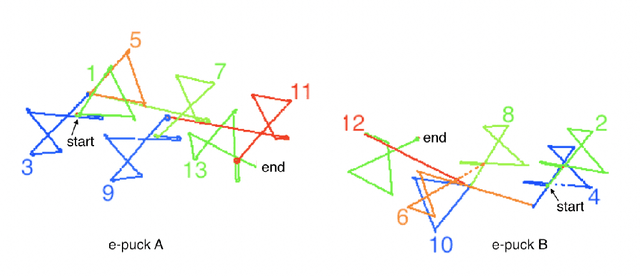

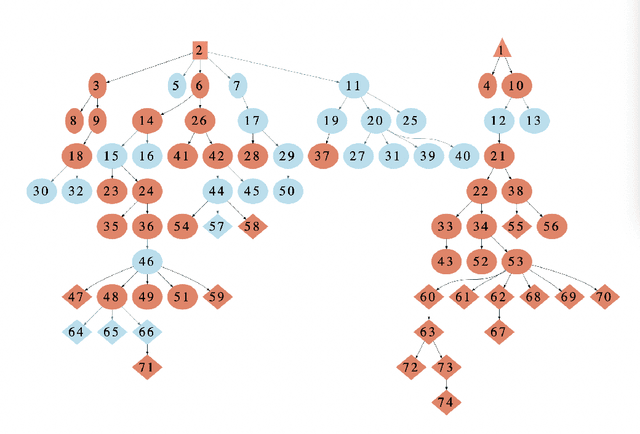

Experiments in Artificial Culture: from noisy imitation to storytelling robots

Jun 22, 2021

Abstract: This paper presents a series of experiments in collective social robotics, spanning more than 10 years, with the long-term aim of building embodied models of (aspects of) cultural evolution. Initial experiments demonstrated the emergence of behavioural traditions in a group of social robots programmed to imitate each other's behaviours (we call these Copybots). These experiments show that the noisy (i.e. less than perfect fidelity) imitation that comes for free with real physical robots gives rise naturally to variation in social learning. More recent experimental work extends the robots' cognitive capabilities with simulation-based internal models, equipping them with a simple artificial theory of mind. With this extended capability we explore, in our current work, social learning not via imitation but via robot-robot storytelling, in an effort to model this very human mode of cultural transmission. In this paper we give an account of the methods and inspiration for these experiments, the experiments and their results, and an outline of possible directions for this programme of research. It is our hope that this paper stimulates not only discussion but also suggestions for hypotheses to test with the Storybots.

RoboTed: a case study in Ethical Risk Assessment

Jul 31, 2020

Abstract: Risk Assessment is a well-known and powerful method for discovering and mitigating risks, and hence improving safety. Ethical Risk Assessment uses the same approach but extends the envelope of risk to cover ethical risks in addition to safety risks. In this paper we outline Ethical Risk Assessment (ERA) and set ERA within the broader framework of Responsible Robotics. We then illustrate ERA with a case study of a hypothetical smart robot toy teddy bear: RoboTed. The case study shows the value of ERA and how consideration of ethical risks can prompt design changes, resulting in a more ethical and sustainable robot.

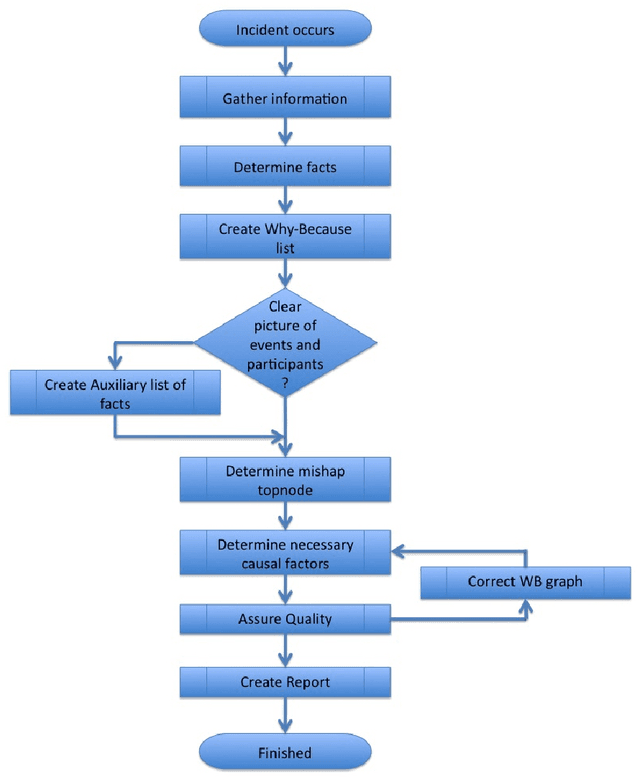

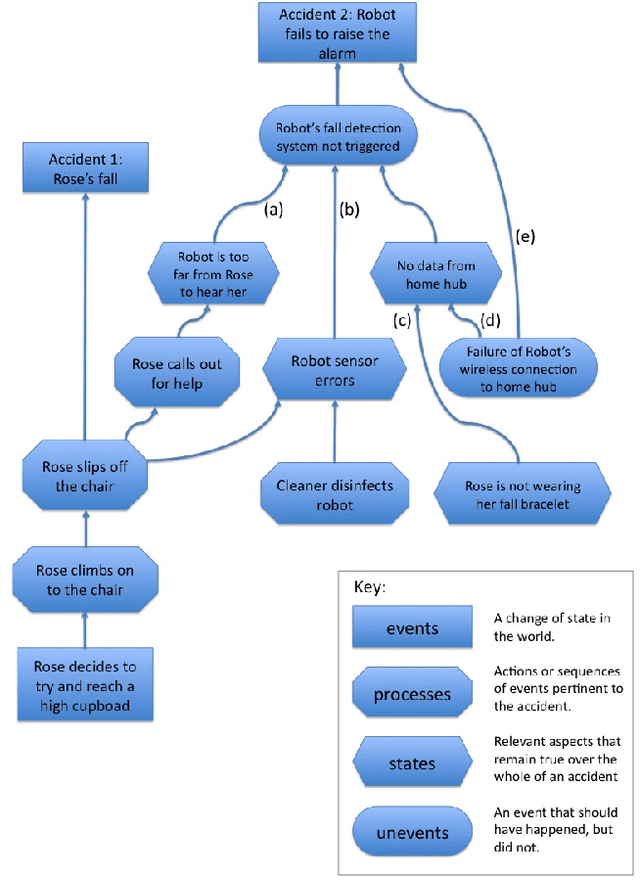

Robot Accident Investigation: a case study in Responsible Robotics

May 15, 2020

Abstract: Robot accidents are inevitable. Although rare, they have been happening since assembly-line robots were first introduced in the 1960s. But a new generation of social robots is now becoming commonplace. Often with sophisticated embedded artificial intelligence (AI), social robots might be deployed as care robots to assist elderly or disabled people to live independently. Smart robot toys offer a compelling interactive play experience for children, and increasingly capable autonomous vehicles (AVs) offer the promise of hands-free personal transport and fully autonomous taxis. Unlike industrial robots, which are deployed in safety cages, social robots are designed to operate in human environments and interact closely with humans; the likelihood of robot accidents is therefore much greater for social robots than for industrial robots. This paper sets out a draft framework for social robot accident investigation: a framework which proposes both the technology and processes that would allow social robot accidents to be investigated with no less rigour than we expect of air or rail accident investigations. The paper also places accident investigation within the practice of responsible robotics, and makes the case that social robotics without accident investigation would be no less irresponsible than aviation without air accident investigation.

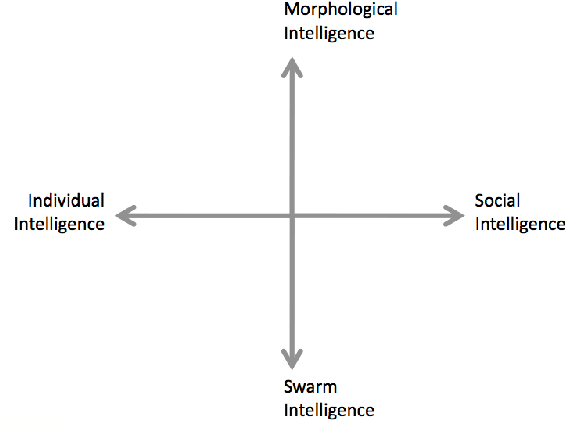

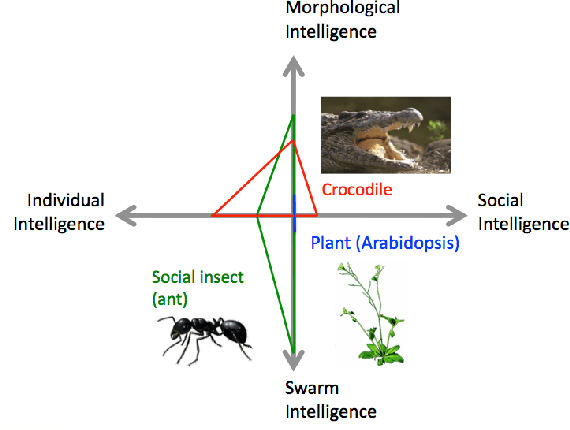

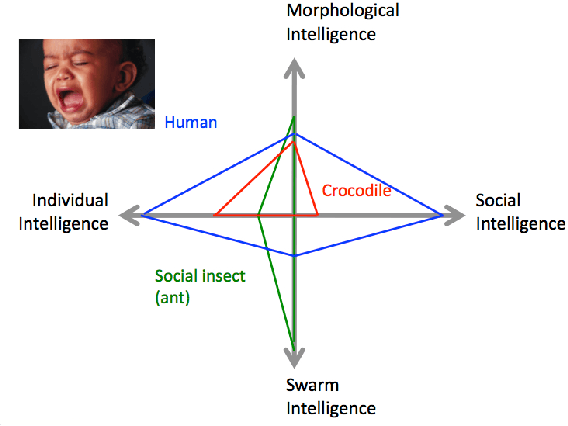

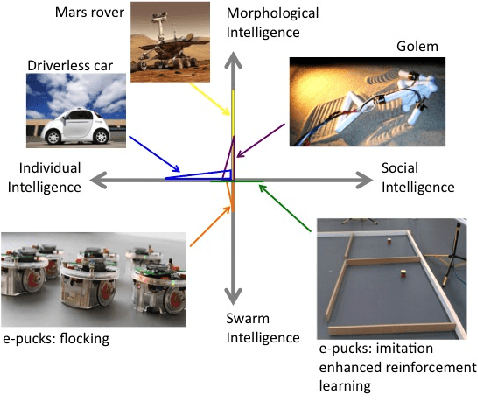

How Intelligent is your Intelligent Robot?

Dec 24, 2017

Abstract: How intelligent is robot A compared with robot B? And how intelligent are robots A and B compared with animals (or plants) X and Y? These are both interesting and deeply challenging questions. In this paper we address the question "how intelligent is your intelligent robot?" by proposing that embodied intelligence emerges from the interaction and integration of four different and distinct kinds of intelligence. We then suggest a simple diagrammatic representation on which these kinds of intelligence are shown as four axes in a star diagram. A crude qualitative comparison of the intelligence graphs of animals and robots both exposes and helps to explain the chronic intelligence deficit of intelligent robots. Finally we examine the options for determining numerical values for the four kinds of intelligence in an effort to move toward a quantifiable intelligence vector.

Towards Moral Autonomous Systems

Oct 31, 2017

Abstract: Both the ethics of autonomous systems and the problems of their technical implementation have by now been studied in some detail. Less attention has been given to the areas in which these two separate concerns meet. This paper, written by both philosophers and engineers of autonomous systems, addresses a number of issues in machine ethics that are located at precisely the intersection between ethics and engineering. We first discuss the main challenges which, in our view, machine ethics poses to moral philosophy. We then consider different approaches towards the conceptual design of autonomous systems and their implications for the implementation of ethics in such systems. Then we examine problematic areas regarding the specification and verification of ethical behavior in autonomous systems, particularly with a view towards the requirements of future legislation. We discuss transparency and accountability issues that will be crucial for any future wide deployment of autonomous systems in society. Finally we consider the often overlooked possibility of intentional misuse of AI systems and the possible dangers arising out of deliberately unethical design, implementation, and use of autonomous robots.

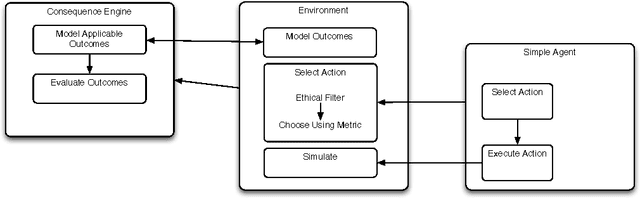

Towards Verifiably Ethical Robot Behaviour

Apr 14, 2015

Abstract: Ensuring that autonomous systems work ethically is both complex and difficult. However, the idea of having an additional 'governor' that assesses the options the system has, and prunes them to select the most ethical choices, is well understood. Recent work has produced such a governor consisting of a 'consequence engine' that assesses the likely future outcomes of actions, then applies a Safety/Ethical logic to select actions. Although this is appealing, it is impossible to be certain that the most ethical options are actually taken. In this paper we extend and apply a well-known agent verification approach to our consequence engine, allowing us to verify the correctness of its ethical decision-making.
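
The governor idea described in the abstract (predict the outcome of each candidate action, prune, then pick the most ethical option) can be sketched in a few lines. This is only a toy illustration under stated assumptions, not the paper's consequence engine: the function `select_action` and its parameters `predict`, `is_safe` and `ethical_score` are hypothetical names introduced here, and the outcomes are hand-written rather than produced by a simulator.

```python
def select_action(actions, predict, is_safe, ethical_score):
    """Toy governor: predict outcomes, prune unsafe actions, pick the best.

    predict(action)        -> predicted outcome (any object)
    is_safe(outcome)       -> bool, the safety part of the logic
    ethical_score(outcome) -> number, higher is more ethical
    """
    outcomes = {action: predict(action) for action in actions}
    safe = [action for action in actions if is_safe(outcomes[action])]
    # If every action is predicted unsafe, fall back to the full set
    # rather than returning nothing (a design choice made for this sketch).
    candidates = safe or list(actions)
    return max(candidates, key=lambda action: ethical_score(outcomes[action]))


# Hand-written predicted outcomes standing in for an internal simulation.
PREDICTED = {
    "continue": {"robot_harmed": False, "human_harmed": True},
    "intercept": {"robot_harmed": False, "human_harmed": False},
    "stop": {"robot_harmed": False, "human_harmed": True},
}

chosen = select_action(
    actions=["continue", "intercept", "stop"],
    predict=PREDICTED.get,
    is_safe=lambda outcome: not outcome["robot_harmed"],
    ethical_score=lambda outcome: 0 if outcome["human_harmed"] else 1,
)
print(chosen)
```

In this toy setup only `intercept` avoids predicted harm to the human, so it is the action selected. Verification, as the abstract notes, is a separate problem: a sketch like this says nothing about whether the predictions or the scoring are correct.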
