Louise Dennis

Towards Continuous Assurance with Formal Verification and Assurance Cases

Nov 17, 2025

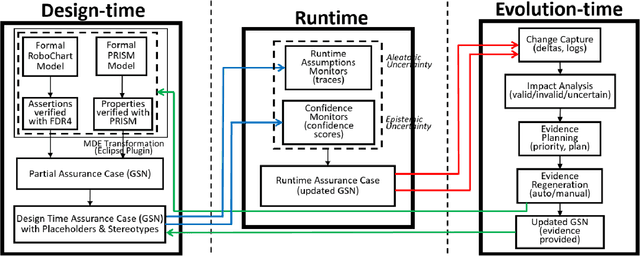

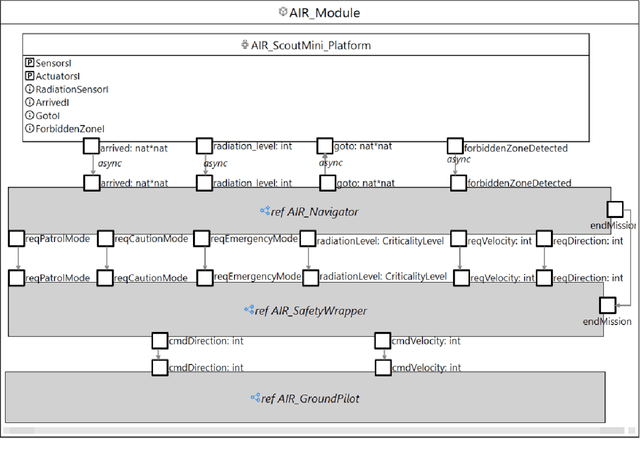

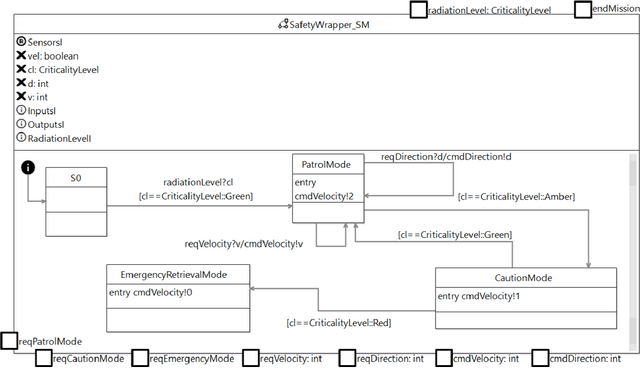

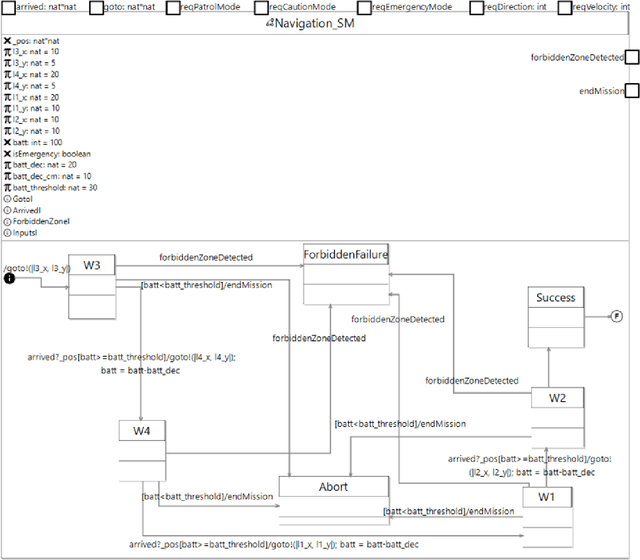

Abstract: Autonomous systems must sustain justified confidence in their correctness and safety across their operational lifecycle, from design and deployment through post-deployment evolution. Traditional assurance methods often separate development-time assurance from runtime assurance, yielding fragmented arguments that cannot adapt to runtime changes or system updates, a significant challenge for assured autonomy. Towards addressing this, we propose a unified Continuous Assurance Framework that integrates design-time, runtime, and evolution-time assurance within a traceable, model-driven workflow as a step towards assured autonomy. In this paper, we specifically instantiate the design-time phase of the framework using two formal verification methods: RoboChart for functional correctness and PRISM for probabilistic risk analysis. We also propose a model-driven transformation pipeline, implemented as an Eclipse plugin, that automatically regenerates structured assurance arguments whenever formal specifications or their verification results change, thereby ensuring traceability. We demonstrate our approach on a nuclear inspection robot scenario, and discuss its alignment with the Trilateral AI Principles, reflecting regulator-endorsed best practices.

Autonomy and Safety Assurance in the Early Development of Robotics and Autonomous Systems

Jan 30, 2025

Abstract: This report provides an overview of the workshop titled Autonomy and Safety Assurance in the Early Development of Robotics and Autonomous Systems, hosted by the Centre for Robotic Autonomy in Demanding and Long-Lasting Environments (CRADLE) on September 2, 2024, at The University of Manchester, UK. The event brought together representatives from six regulatory and assurance bodies across diverse sectors to discuss challenges and evidence for ensuring the safety of autonomous and robotic systems, particularly autonomous inspection robots (AIR). The workshop featured six invited talks by the regulatory and assurance bodies. CRADLE aims to make assurance an integral part of engineering reliable, transparent, and trustworthy autonomous systems. Key discussions revolved around three research questions: (i) challenges in assuring safety for AIR; (ii) evidence for safety assurance; and (iii) how assurance cases need to differ for autonomous systems. Following the invited talks, the breakout groups further discussed the research questions using case studies from ground (rail), nuclear, underwater, and drone-based AIR. This workshop offered a valuable opportunity for representatives from industry, academia, and regulatory bodies to discuss challenges related to assured autonomy. Feedback from participants indicated a strong willingness to adopt a design-for-assurance process to ensure that robots are developed and verified to meet regulatory expectations.

Uncertain Machine Ethical Decisions Using Hypothetical Retrospection

May 02, 2023

Abstract: We propose the use of the hypothetical retrospection argumentation procedure, developed by Sven Hansson, to improve existing approaches to machine ethical reasoning by accounting for probability and uncertainty from a position of Philosophy that resonates with humans. Actions are represented with a branching set of potential outcomes, each with a state, utility, and either a numeric or poetic probability estimate. Actions are chosen based on comparisons between sets of arguments favouring actions from the perspective of their branches, even those branches that led to an undesirable outcome. This use of arguments allows a variety of philosophical theories for ethical reasoning to be used, potentially in flexible combination with each other. We implement the procedure, applying consequentialist and deontological ethical theories, independently and concurrently, to an autonomous library system use case. We introduce a preliminary framework that seems to meet the varied requirements of a machine ethics system: versatility under multiple theories and a resonance with humans that enables transparency and explainability.

Formal Specification and Verification of Autonomous Robotic Systems: A Survey

Sep 03, 2018

Abstract: Robotic systems are complex and critical: they are inherently hybrid, combining both hardware and software; they typically exhibit both cyber-physical attributes and autonomous capabilities; and are required to be at least safe and often ethical. While for many engineered systems testing, either through real deployment or via simulation, is deemed sufficient, the uniquely challenging elements of robotic systems, together with the crucial dependence on sophisticated software control and decision-making, require a stronger form of verification. The increasing deployment of robotic systems in safety-critical scenarios exacerbates this still further and leads us towards the use of formal methods to ensure the correctness of, and provide sufficient evidence for the certification of, robotic systems. There have been many approaches that have used some variety of formal specification or formal verification in autonomous robotics, but there is no resource that collates this activity into one place. This paper systematically surveys the state-of-the-art in specification formalisms and tools for verifying robotic systems. Specifically, it describes the challenges arising from autonomy and software architectures, avoiding low-level hardware control, and subsequently identifies approaches for the specification and verification of robotic systems, while avoiding more general approaches.

Practical Challenges in Explicit Ethical Machine Reasoning

Jan 04, 2018

Abstract: We examine implemented systems for ethical machine reasoning with a view to identifying the practical challenges (as opposed to philosophical challenges) posed by the area. We identify a need for complex ethical machine reasoning not only to be multi-objective, proactive, and scrutable but also to draw on heterogeneous evidential reasoning. We also argue that, in many cases, it needs to operate in real time and be verifiable. We propose a general architecture involving a declarative ethical arbiter which draws upon multiple evidential reasoners, each responsible for a particular ethical feature of the system's environment. We claim that this architecture enables some separation of concerns among the practical challenges that ethical machine reasoning poses.

Towards Moral Autonomous Systems

Oct 31, 2017

Abstract: Both the ethics of autonomous systems and the problems of their technical implementation have by now been studied in some detail. Less attention has been given to the areas in which these two separate concerns meet. This paper, written by both philosophers and engineers of autonomous systems, addresses a number of issues in machine ethics that are located at precisely the intersection between ethics and engineering. We first discuss the main challenges which, in our view, machine ethics poses to moral philosophy. We then consider different approaches towards the conceptual design of autonomous systems and their implications for the implementation of ethics in such systems. Then we examine problematic areas regarding the specification and verification of ethical behavior in autonomous systems, particularly with a view towards the requirements of future legislation. We discuss transparency and accountability issues that will be crucial for any future wide deployment of autonomous systems in society. Finally, we consider the often overlooked possibility of intentional misuse of AI systems and the possible dangers arising out of deliberately unethical design, implementation, and use of autonomous robots.