Matt Luckcuck

University of Nottingham, UK

Proceedings Seventh International Workshop on Formal Methods for Autonomous Systems

Nov 17, 2025

Abstract:This EPTCS volume contains the papers from the Seventh International Workshop on Formal Methods for Autonomous Systems (FMAS 2025), which was held between the 17th and 19th of November 2025. The goal of the FMAS workshop series is to bring together leading researchers who are using formal methods to tackle the unique challenges that autonomous systems present, so that they can publish and discuss their work with a growing community of researchers. FMAS 2025 was co-located with the 20th International Conference on integrated Formal Methods (iFM'25), hosted by Inria Paris, France at the Inria Paris Center. In total, FMAS 2025 received 16 submissions from researchers at institutions in: Canada, China, France, Germany, Ireland, Italy, Japan, the Netherlands, Portugal, Sweden, the United States of America, and the United Kingdom. Though we received fewer submissions than last year, we are encouraged to see the submissions being sent from a wide range of countries. Submissions come from both past and new FMAS authors, which shows us that the existing community appreciates the network that FMAS has built over the past 7 years, while new authors also show the FMAS community's great potential of growth.

Proceedings Sixth International Workshop on Formal Methods for Autonomous Systems

Nov 20, 2024

Abstract:This EPTCS volume contains the papers from the Sixth International Workshop on Formal Methods for Autonomous Systems (FMAS 2024), which was held between the 11th and 13th of November 2024. FMAS 2024 was co-located with the 19th International Conference on integrated Formal Methods (iFM'24), hosted by the University of Manchester in the United Kingdom, in the University of Manchester's Core Technology Facility.

Proceedings Fifth International Workshop on Formal Methods for Autonomous Systems

Nov 15, 2023

Abstract:This EPTCS volume contains the proceedings for the Fifth International Workshop on Formal Methods for Autonomous Systems (FMAS 2023), which was held on the 15th and 16th of November 2023. FMAS 2023 was co-located with the 18th International Conference on integrated Formal Methods (iFM'23), organised by Leiden Institute of Advanced Computer Science of Leiden University. The workshop itself was held at Scheltema Leiden, a renovated 19th Century blanket factory alongside the canal. FMAS 2023 received 25 submissions. We received 11 regular papers, 3 experience reports, 6 research previews, and 5 vision papers. The researchers who submitted papers to FMAS 2023 were from institutions in: Australia, Canada, Colombia, France, Germany, Ireland, Italy, the Netherlands, Sweden, the United Kingdom, and the United States of America. Increasing our number of submissions for the third year in a row is an encouraging sign that FMAS has established itself as a reputable publication venue for research on the formal modelling and verification of autonomous systems. After each paper was reviewed by three members of our Programme Committee we accepted a total of 15 papers: 8 long papers and 7 short papers.

Proceedings Fourth International Workshop on Formal Methods for Autonomous Systems (FMAS) and Fourth International Workshop on Automated and verifiable Software sYstem DEvelopment (ASYDE)

Sep 27, 2022

Abstract:This EPTCS volume contains the joint proceedings for the fourth international workshop on Formal Methods for Autonomous Systems (FMAS 2022) and the fourth international workshop on Automated and verifiable Software sYstem DEvelopment (ASYDE 2022), which were held on the 26th and 27th of September 2022. FMAS 2022 and ASYDE 2022 were held in conjunction with the 20th International Conference on Software Engineering and Formal Methods (SEFM'22), at Humboldt University in Berlin. For FMAS, this year's workshop was our return to having in-person attendance after two editions of FMAS that were entirely online because of the restrictions necessitated by COVID-19. We were also keen to ensure that FMAS 2022 remained easily accessible to people who were unable to travel, so the workshop facilitated remote presentation and attendance. The goal of FMAS is to bring together leading researchers who are using formal methods to tackle the unique challenges presented by autonomous systems, to share their recent and ongoing work. Autonomous systems are highly complex and present unique challenges for the application of formal methods. Autonomous systems act without human intervention, and are often embedded in a robotic system, so that they can interact with the real world. As such, they exhibit the properties of safety-critical, cyber-physical, hybrid, and real-time systems. We are interested in work that uses formal methods to specify, model, or verify autonomous and/or robotic systems; in whole or in part. We are also interested in successful industrial applications and potential directions for this emerging application of formal methods.

Modelling the Turtle Python library in CSP

Jul 20, 2022

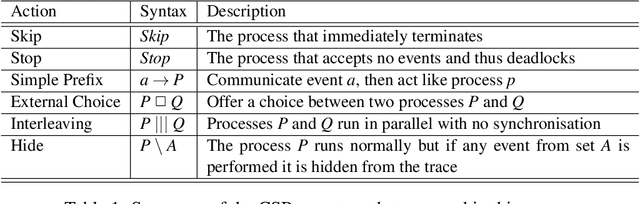

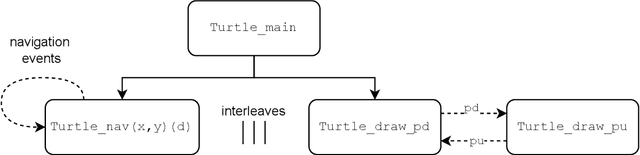

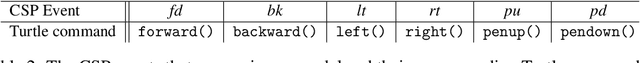

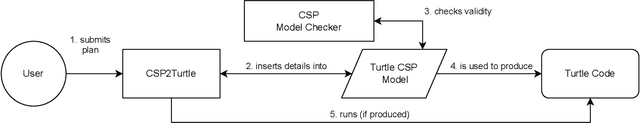

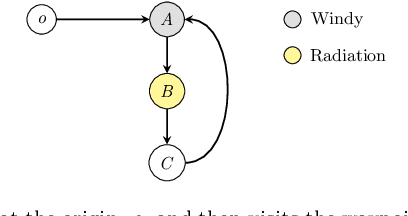

Abstract:Software verification is an important tool in establishing the reliability of critical systems. One potential area of application is in the field of robotics, as robots take on more tasks in both day-to-day areas and highly specialised domains. Robots are usually given a plan to follow; if there are errors in this plan, the robot will not perform reliably. The capability to check plans for errors in advance could prevent this. Python is a popular programming language in the robotics domain, through the use of the Robot Operating System (ROS) and various other libraries. Python's Turtle package provides a mobile agent, which we formally model here using Communicating Sequential Processes (CSP). Our interactive toolchain, CSP2Turtle, with CSP model and Python components, enables Turtle plans to be verified in CSP before being executed in Python. This means that certain classes of errors can be avoided, and provides a starting point for more detailed verification of Turtle programs and more complex robotic systems. We illustrate our approach with examples of robot navigation and obstacle avoidance in a 2D grid-world.

* In Proceedings AREA 2022, arXiv:2207.09058
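
The idea of checking a plan before running it can be illustrated with a minimal sketch. This is not CSP2Turtle and does not use CSP: it is a plain Python simulation under assumed conventions. The function `check_plan`, the command names `forward`, `left`, and `right`, and the grid layout are all hypothetical, chosen only for illustration.

```python
# Hypothetical plan checker: simulates an agent on a 2D grid before execution.
# This is a plain simulation, not a CSP model.
HEADINGS = [(0, 1), (1, 0), (0, -1), (-1, 0)]  # north, east, south, west


def check_plan(plan, width, height, obstacles, start=(0, 0)):
    """Return (True, None) if the plan is safe, else (False, index of the failing step)."""
    x, y = start
    heading = 0  # index into HEADINGS; the agent starts facing north
    for i, step in enumerate(plan):
        if step == "left":
            heading = (heading - 1) % 4
        elif step == "right":
            heading = (heading + 1) % 4
        elif step == "forward":
            dx, dy = HEADINGS[heading]
            x, y = x + dx, y + dy
            off_grid = not (0 <= x < width and 0 <= y < height)
            if off_grid or (x, y) in obstacles:
                return False, i
        else:
            return False, i  # unknown command
    return True, None


# A plan that walks into an obstacle at (1, 1) is rejected at its third step.
result = check_plan(["forward", "right", "forward"], 3, 3, {(1, 1)})
```

A rejected plan reports the index of the first unsafe step, so it can be corrected before any movement command is issued.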

Proceedings Third Workshop on Formal Methods for Autonomous Systems

Oct 22, 2021

Abstract:Autonomous systems are highly complex and present unique challenges for the application of formal methods. Autonomous systems act without human intervention, and are often embedded in a robotic system, so that they can interact with the real world. As such, they exhibit the properties of safety-critical, cyber-physical, hybrid, and real-time systems. This EPTCS volume contains the proceedings for the third workshop on Formal Methods for Autonomous Systems (FMAS 2021), which was held virtually on the 21st and 22nd of October 2021. Like the previous workshop, FMAS 2021 was an online, stand-alone event, as an adaptation to the ongoing COVID-19 restrictions. Despite the challenges this brought, we were determined to build on the success of the previous two FMAS workshops. The goal of FMAS is to bring together leading researchers who are tackling the unique challenges of autonomous systems using formal methods, to present recent and ongoing work. We are interested in the use of formal methods to specify, model, or verify autonomous and/or robotic systems; in whole or in part. We are also interested in successful industrial applications and potential future directions for this emerging application of formal methods.

Towards Compositional Verification for Modular Robotic Systems

Dec 03, 2020

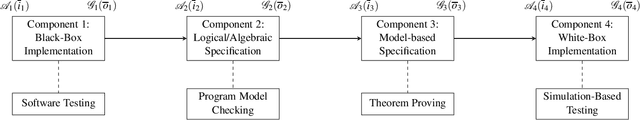

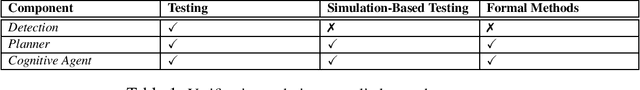

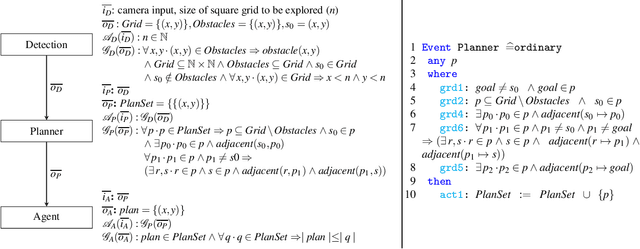

Abstract:Software engineering of modular robotic systems is a challenging task; however, verifying that the developed components all behave as they should, individually and as a whole, presents its own unique set of challenges. In particular, distinct components in a modular robotic system often require different verification techniques to ensure that they behave as expected. Ensuring whole system consistency when individual components are verified using a variety of techniques and formalisms is difficult. This paper discusses how to use compositional verification to integrate the various verification techniques that are applied to modular robotic software, using a First-Order Logic (FOL) contract that captures each component's assumptions and guarantees. These contracts can then be used to guide the verification of the individual components, be it by testing or the use of a formal method. We provide an illustrative example of an autonomous robot used in remote inspection. We also discuss a way of defining confidence for the verification associated with each component.

* In Proceedings FMAS 2020, arXiv:2012.01176
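
As a rough illustration of how an assume-guarantee contract can guide testing of a single component, the sketch below encodes a contract as a pair of Python predicates. It is not the paper's FOL notation or tooling. The names `Contract`, `check_by_testing`, and `limit_speed`, and the speed bounds, are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Contract:
    """Hypothetical contract: an assumption on the input, a guarantee on input and output."""
    assume: Callable[[float], bool]
    guarantee: Callable[[float, float], bool]


def check_by_testing(component, contract, inputs):
    """Return the inputs that meet the assumption but whose output breaks the guarantee."""
    return [
        x for x in inputs
        if contract.assume(x) and not contract.guarantee(x, component(x))
    ]


# Hypothetical component: clamps a requested speed to an assumed safe maximum of 1.0.
def limit_speed(requested):
    return min(requested, 1.0)


speed_contract = Contract(
    assume=lambda v: v >= 0.0,                   # the caller never requests a negative speed
    guarantee=lambda v, out: 0.0 <= out <= 1.0,  # the output stays within the safe range
)

# The input -1.0 violates the assumption, so it is skipped rather than reported.
violations = check_by_testing(limit_speed, speed_contract, [0.0, 0.5, 2.0, -1.0])
```

Inputs outside the assumption are ignored because the contract promises nothing about them; the same contract could instead be discharged by a formal method for a different component.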

Proceedings Second Workshop on Formal Methods for Autonomous Systems

Dec 02, 2020

Abstract:Autonomous systems are highly complex and present unique challenges for the application of formal methods. Autonomous systems act without human intervention, and are often embedded in a robotic system, so that they can interact with the real world. As such, they exhibit the properties of safety-critical, cyber-physical, hybrid, and real-time systems. The goal of FMAS is to bring together leading researchers who are tackling the unique challenges of autonomous systems using formal methods, to present recent and ongoing work. We are interested in the use of formal methods to specify, model, or verify autonomous or robotic systems; in whole or in part. We are also interested in successful industrial applications and potential future directions for this emerging application of formal methods.

Heterogeneous Verification of an Autonomous Curiosity Rover

Jul 20, 2020

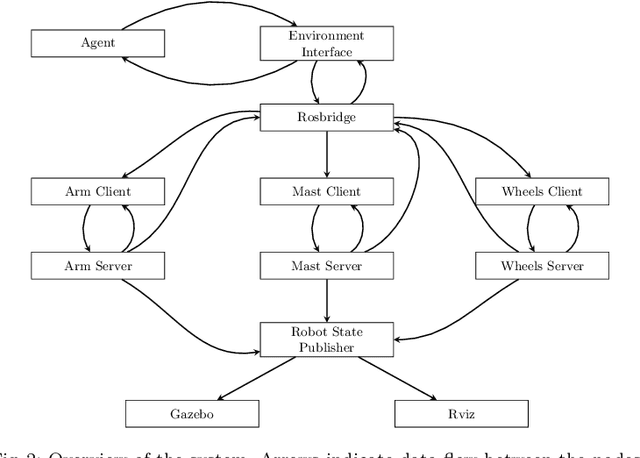

Abstract:The Curiosity rover is one of the most complex systems successfully deployed in a planetary exploration mission to date. It was sent by NASA to explore the surface of Mars and to identify potential signs of life. Even though it has limited autonomy on-board, most of its decisions are made by the ground control team. This hinders the speed at which the Curiosity reacts to its environment, due to the communication delays between Earth and Mars. Depending on the orbital position of both planets, it can take 4 to 24 minutes for a message to be transmitted between Earth and Mars. If the Curiosity were controlled autonomously, it would be able to perform its activities much faster and more flexibly. However, one of the major barriers to increased use of autonomy in such scenarios is the lack of assurances that the autonomous behaviour will work as expected. In this paper, we use a Robot Operating System (ROS) model of the Curiosity that is simulated in Gazebo and add an autonomous agent that is responsible for high-level decision-making. Then, we use a mixture of formal and non-formal techniques to verify the distinct system components (ROS nodes). This use of heterogeneous verification techniques is essential to provide guarantees about the nodes at different abstraction levels, and allows us to bring together relevant verification evidence to provide overall assurance.

Monitoring Robotic Systems using CSP: From Safety Designs to Safety Monitors

Jul 07, 2020

Abstract:Runtime Verification (RV) involves monitoring a system to check if it satisfies or violates a property. It is effective at bridging the reality gap between design-time assumptions and run-time environments, which is especially useful for robotic systems because they operate in the real world. This paper presents an RV approach that uses a Communicating Sequential Processes (CSP) model, derived from natural-language safety documents, as a runtime monitor. We describe our modelling process and monitoring toolchain, Varanus. The approach is demonstrated on a teleoperated robotic system, called MASCOT, which enables remote operations inside a nuclear reactor. We show how the safety design documents for the MASCOT system were modelled (including how modelling revealed an underspecification in the document) and evaluate the utility of the Varanus toolchain. As far as we know, this is the first RV approach to directly use a CSP model. This approach provides traceability of the safety properties from the documentation to the system, a verified monitor for RV, and validation of the safety documents themselves.
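
The general shape of a runtime monitor, which consumes system events one at a time and flags the first one that violates a property, can be sketched as follows. This is not Varanus and does not use a CSP model: it is a hand-written state machine. The event names `enable`, `disable`, and `move`, and the property that `move` is only allowed while enabled, are hypothetical.

```python
class SafetyMonitor:
    """Hypothetical monitor: 'move' events are only allowed while the system is enabled."""

    def __init__(self):
        self.enabled = False
        self.violated = False

    def observe(self, event):
        """Consume one event; return False once the property has been violated."""
        if self.violated:
            return False
        if event == "enable":
            self.enabled = True
        elif event == "disable":
            self.enabled = False
        elif event == "move" and not self.enabled:
            self.violated = True
        return not self.violated


def first_violation(trace):
    """Return the index of the first violating event in the trace, or None if there is none."""
    monitor = SafetyMonitor()
    for i, event in enumerate(trace):
        if not monitor.observe(event):
            return i
    return None


# Moving after the system has been disabled is flagged at index 2.
bad_index = first_violation(["enable", "disable", "move"])
```

In a deployed setting the events would arrive from the running system rather than from a list, and the monitor's transitions would be derived from the safety model rather than written by hand.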
