Mario Gleirscher

University of Bremen

Synthesising Robust Controllers for Robot Collectives with Recurrent Tasks: A Case Study

Nov 21, 2024Abstract:When designing correct-by-construction controllers for autonomous collectives, three key challenges are the task specification, the modelling, and its use at practical scale. In this paper, we focus on a simple yet useful abstraction for high-level controller synthesis for robot collectives with optimisation goals (e.g., maximum cleanliness, minimum energy consumption) and recurrence (e.g., re-establish contamination and charge thresholds) and safety (e.g., avoid full discharge, mutually exclusive room occupation) constraints. Due to technical limitations (related to scalability and using constraints in the synthesis), we simplify our graph-based setting from a stochastic two-player game into a single-player game on a partially observable Markov decision process (POMDP). Robustness against environmental uncertainty is encoded via partial observability. Linear-time correctness properties are verified separately after synthesising the POMDP strategy. We contribute at-scale guidance on POMDP modelling and controller synthesis for tasked robot collectives exemplified by the scenario of battery-driven robots responsible for cleaning public buildings with utilisation constraints.

* In Proceedings FMAS2024, arXiv:2411.13215

A Stochastic Approach to Classification Error Estimates in Convolutional Neural Networks

Dec 21, 2023Abstract:This technical report presents research results achieved in the field of verification of trained Convolutional Neural Network (CNN) used for image classification in safety-critical applications. As running example, we use the obstacle detection function needed in future autonomous freight trains with Grade of Automation (GoA) 4. It is shown that systems like GoA 4 freight trains are indeed certifiable today with new standards like ANSI/UL 4600 and ISO 21448 used in addition to the long-existing standards EN 50128 and EN 50129. Moreover, we present a quantitative analysis of the system-level hazard rate to be expected from an obstacle detection function. It is shown that using sensor/perceptor fusion, the fused detection system can meet the tolerable hazard rate deemed to be acceptable for the safety integrity level to be applied (SIL-3). A mathematical analysis of CNN models is performed which results in the identification of classification clusters and equivalence classes partitioning the image input space of the CNN. These clusters and classes are used to introduce a novel statistical testing method for determining the residual error probability of a trained CNN and an associated upper confidence limit. We argue that this greybox approach to CNN verification, taking into account the CNN model's internal structure, is essential for justifying that the statistical tests have covered the trained CNN with its neurons and inter-layer mappings in a comprehensive way.

Proceedings Fifth International Workshop on Formal Methods for Autonomous Systems

Nov 15, 2023Abstract:This EPTCS volume contains the proceedings for the Fifth International Workshop on Formal Methods for Autonomous Systems (FMAS 2023), which was held on the 15th and 16th of November 2023. FMAS 2023 was co-located with 18th International Conference on integrated Formal Methods (iFM) (iFM'22), organised by Leiden Institute of Advanced Computer Science of Leiden University. The workshop itself was held at Scheltema Leiden, a renovated 19th Century blanket factory alongside the canal. FMAS 2023 received 25 submissions. We received 11 regular papers, 3 experience reports, 6 research previews, and 5 vision papers. The researchers who submitted papers to FMAS 2023 were from institutions in: Australia, Canada, Colombia, France, Germany, Ireland, Italy, the Netherlands, Sweden, the United Kingdom, and the United States of America. Increasing our number of submissions for the third year in a row is an encouraging sign that FMAS has established itself as a reputable publication venue for research on the formal modelling and verification of autonomous systems. After each paper was reviewed by three members of our Programme Committee we accepted a total of 15 papers: 8 long papers and 7 short papers.

Probabilistic Risk Assessment of an Obstacle Detection System for GoA 4 Freight Trains

Jun 26, 2023Abstract:In this paper, a quantitative risk assessment approach is discussed for the design of an obstacle detection function for low-speed freight trains with grade of automation (GoA)~4. In this 5-step approach, starting with single detection channels and ending with a three-out-of-three (3oo3) model constructed of three independent dual-channel modules and a voter, a probabilistic assessment is exemplified, using a combination of statistical methods and parametric stochastic model checking. It is illustrated that, under certain not unreasonable assumptions, the resulting hazard rate becomes acceptable for specific application settings. The statistical approach for assessing the residual risk of misclassifications in convolutional neural networks and conventional image processing software suggests that high confidence can be placed into the safety-critical obstacle detection function, even though its implementation involves realistic machine learning uncertainties.

Complete Test of Synthesised Safety Supervisors for Robots and Autonomous Systems

Oct 25, 2021

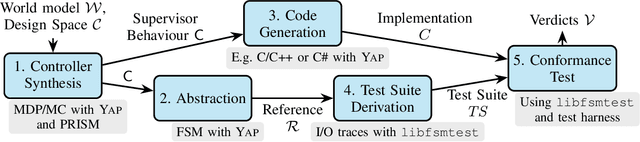

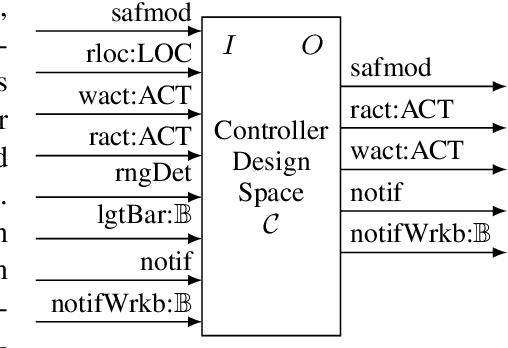

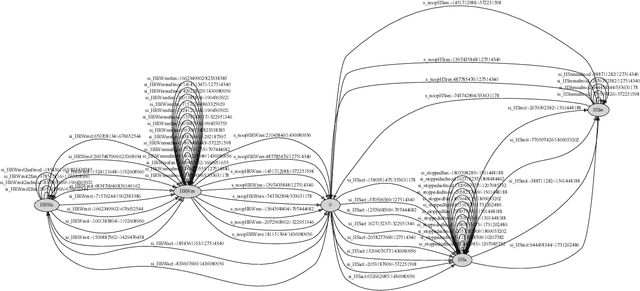

Abstract:Verified controller synthesis uses world models that comprise all potential behaviours of humans, robots, further equipment, and the controller to be synthesised. A world model enables quantitative risk assessment, for example, by stochastic model checking. Such a model describes a range of controller behaviours some of which -- when implemented correctly -- guarantee that the overall risk in the actual world is acceptable, provided that the stochastic assumptions have been made to the safe side. Synthesis then selects an acceptable-risk controller behaviour. However, because of crossing abstraction, formalism, and tool boundaries, verified synthesis for robots and autonomous systems has to be accompanied by rigorous testing. In general, standards and regulations for safety-critical systems require testing as a key element to obtain certification credit before entry into service. This work-in-progress paper presents an approach to the complete testing of synthesised supervisory controllers that enforce safety properties in domains such as human-robot collaboration and autonomous driving. Controller code is generated from the selected controller behaviour. The code generator, however, is hard, if not infeasible, to verify in a formal and comprehensive way. Instead, utilising testing, an abstract test reference is generated, a symbolic finite state machine with simpler semantics than code semantics. From this reference, a complete test suite is derived and applied to demonstrate the observational equivalence between the synthesised abstract test reference and the generated concrete controller code running on a control system platform.

* In Proceedings FMAS 2021, arXiv:2110.11527

Verified Synthesis of Optimal Safety Controllers for Human-Robot Collaboration

Jun 11, 2021

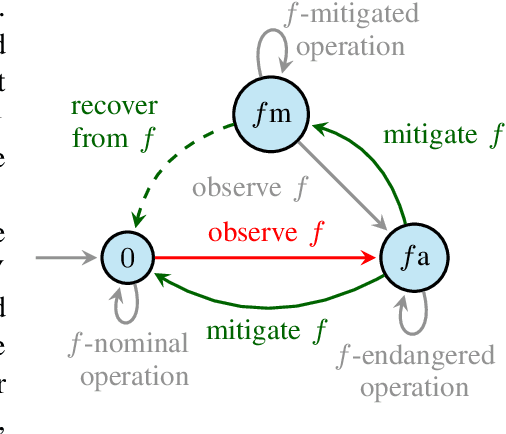

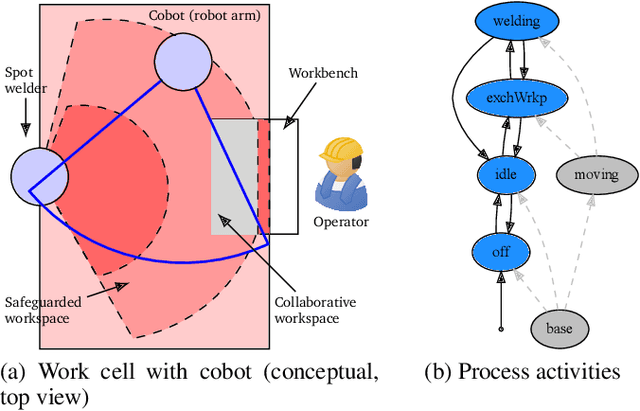

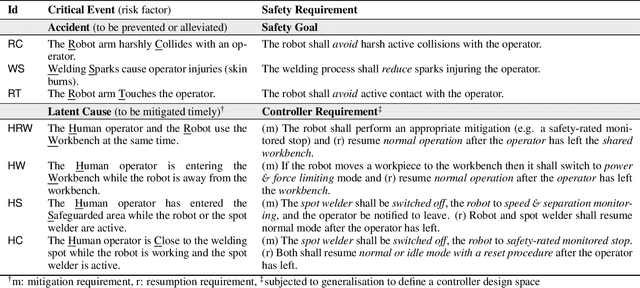

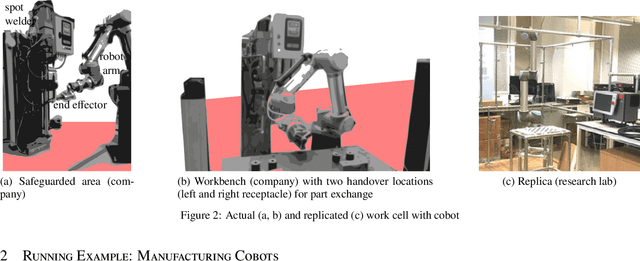

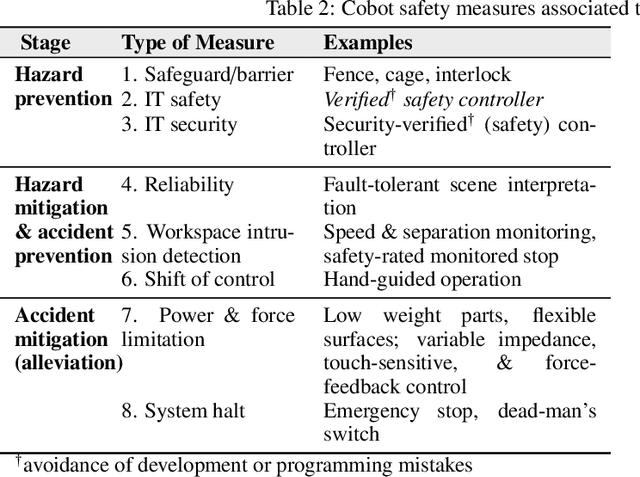

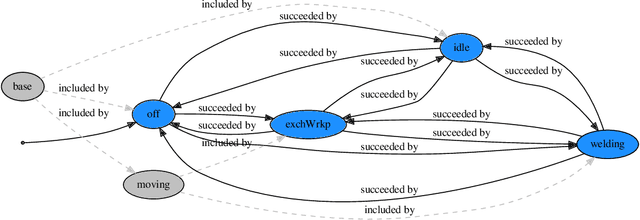

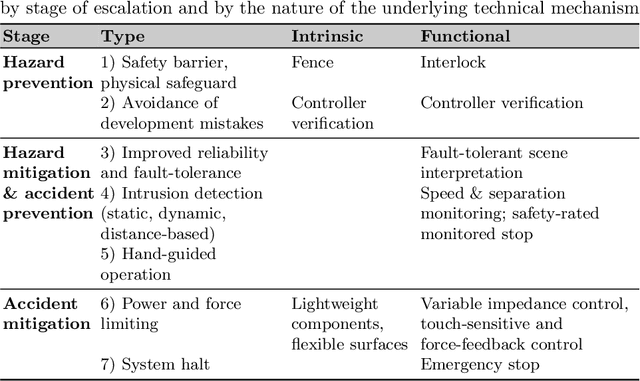

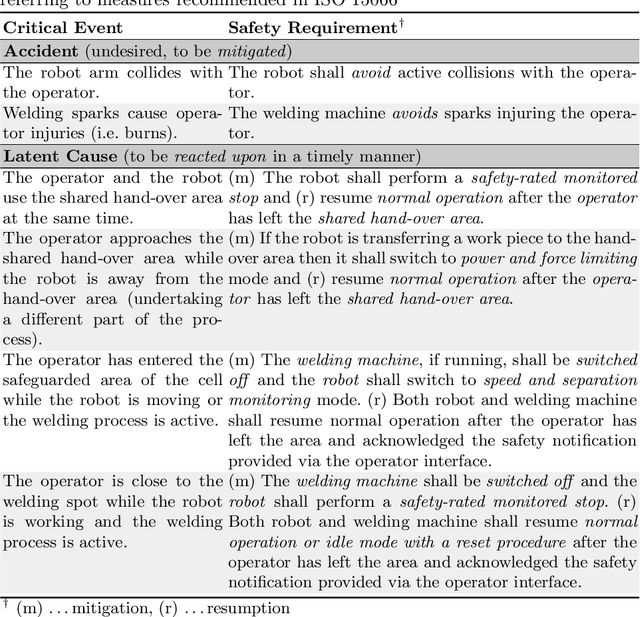

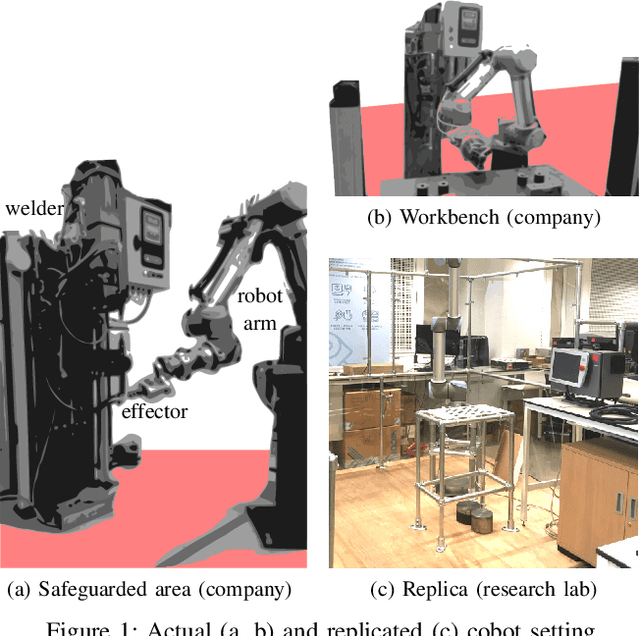

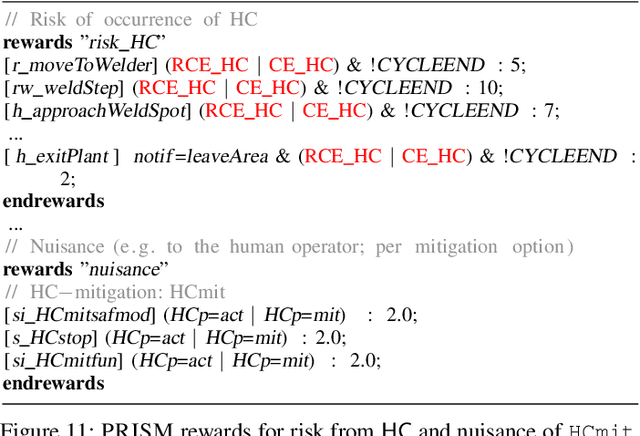

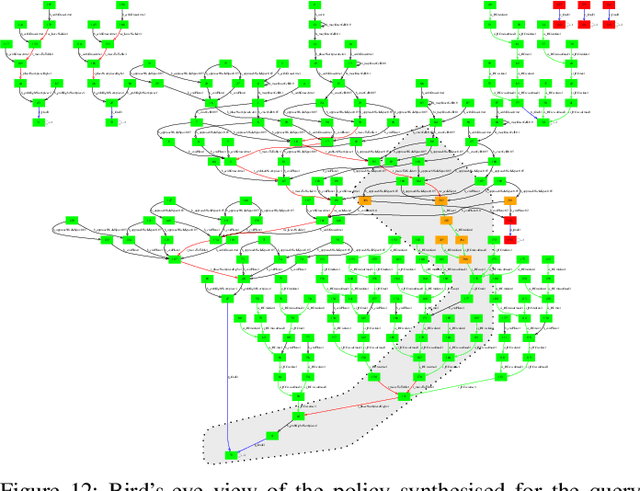

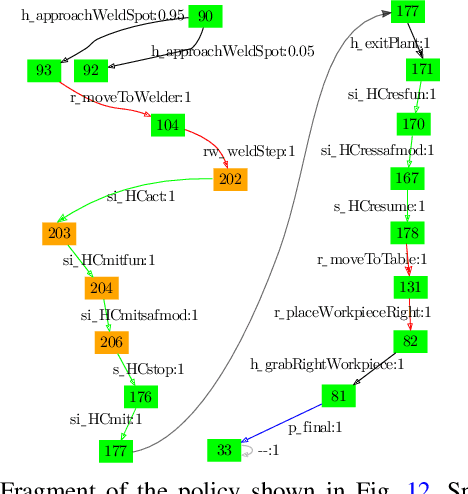

Abstract:We present a tool-supported approach for the synthesis, verification and validation of the control software responsible for the safety of the human-robot interaction in manufacturing processes that use collaborative robots. In human-robot collaboration, software-based safety controllers are used to improve operational safety, e.g., by triggering shutdown mechanisms or emergency stops to avoid accidents. Complex robotic tasks and increasingly close human-robot interaction pose new challenges to controller developers and certification authorities. Key among these challenges is the need to assure the correctness of safety controllers under explicit (and preferably weak) assumptions. Our controller synthesis, verification and validation approach is informed by the process, risk analysis, and relevant safety regulations for the target application. Controllers are selected from a design space of feasible controllers according to a set of optimality criteria, are formally verified against correctness criteria, and are translated into executable code and validated in a digital twin. The resulting controller can detect the occurrence of hazards, move the process into a safe state, and, in certain circumstances, return the process to an operational state from which it can resume its original task. We show the effectiveness of our software engineering approach through a case study involving the development of a safety controller for a manufacturing work cell equipped with a collaborative robot.

Maintaining driver attentiveness in shared-control autonomous driving

Feb 05, 2021

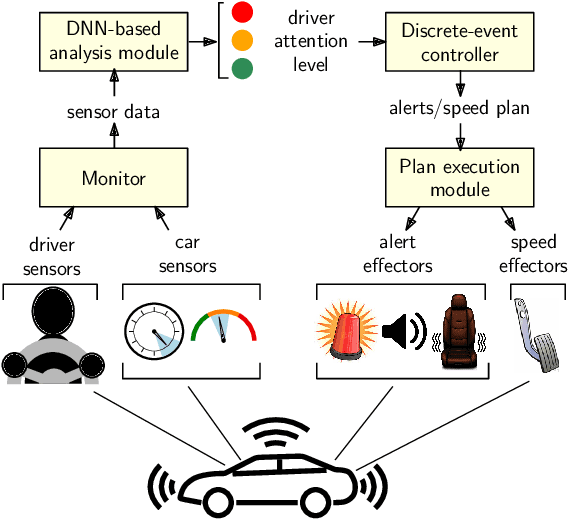

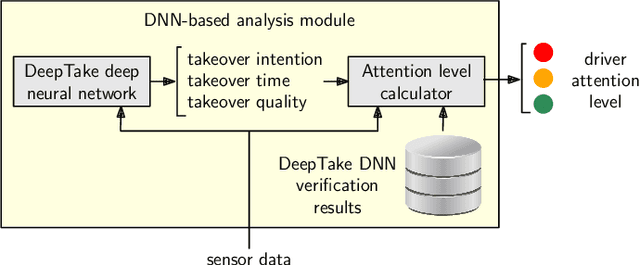

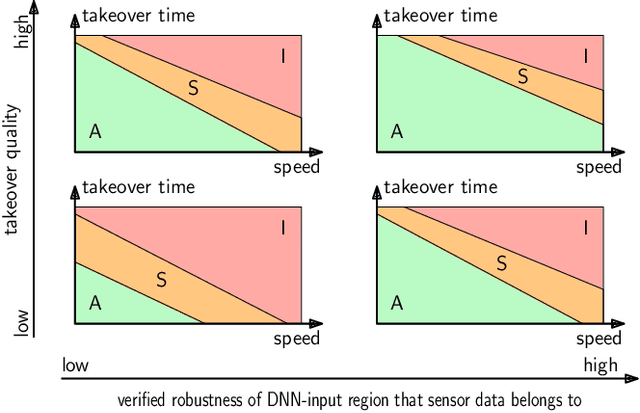

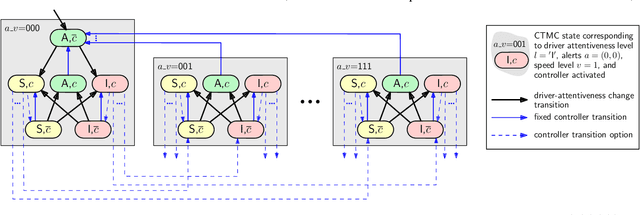

Abstract:We present a work-in-progress approach to improving driver attentiveness in cars provided with automated driving systems. The approach is based on a control loop that monitors the driver's biometrics (eye movement, heart rate, etc.) and the state of the car; analyses the driver's attentiveness level using a deep neural network; plans driver alerts and changes in the speed of the car using a formally verified controller; and executes this plan using actuators ranging from acoustic and visual to haptic devices. The paper presents (i) the self-adaptive system formed by this monitor-analyse-plan-execute (MAPE) control loop, the car and the monitored driver, and (ii) the use of probabilistic model checking to synthesise the controller for the planning step of the MAPE loop.

YAP: Tool Support for Deriving Safety Controllers from Hazard Analysis and Risk Assessments

Dec 03, 2020

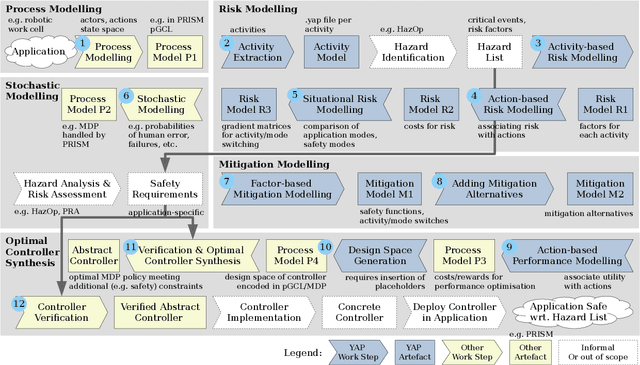

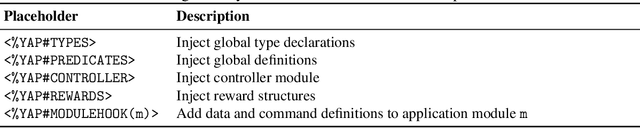

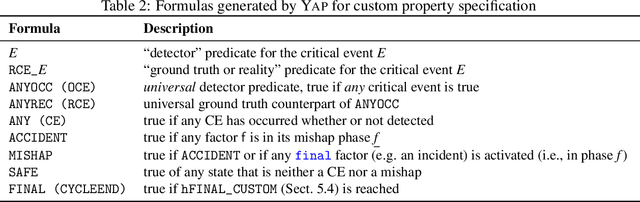

Abstract:Safety controllers are system or software components responsible for handling risk in many machine applications. This tool paper describes a use case and a workflow for YAP, a research tool for risk modelling and discrete-event safety controller design. The goal of this use case is to derive a safety controller from hazard analysis and risk assessment, to define a design space for this controller, and to select a verified optimal controller instance from this design space. We represent this design space as a stochastic model and use YAP for risk modelling and generation of parts of this stochastic model. For the controller verification and selection step, we use a stochastic model checker. The approach is illustrated by an example of a collaborative robot operated in a manufacturing work cell.

* In Proceedings FMAS 2020, arXiv:2012.01176

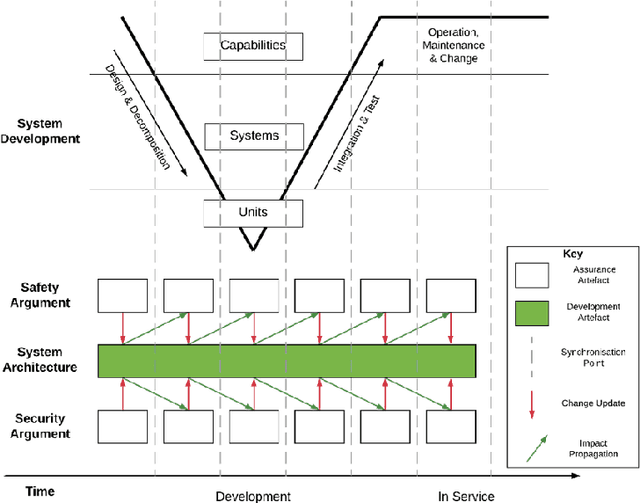

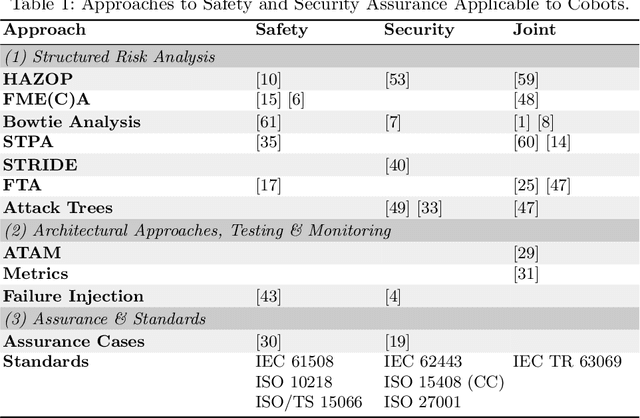

Challenges in the Safety-Security Co-Assurance of Collaborative Industrial Robots

Jul 17, 2020

Abstract:The coordinated assurance of interrelated critical properties, such as system safety and cyber-security, is one of the toughest challenges in critical systems engineering. In this chapter, we summarise approaches to the coordinated assurance of safety and security. Then, we highlight the state of the art and recent challenges in human-robot collaboration in manufacturing both from a safety and security perspective. We conclude with a list of procedural and technological issues to be tackled in the coordinated assurance of collaborative industrial robots.

Safety Controller Synthesis for Collaborative Robots

Jul 07, 2020

Abstract:In human-robot collaboration (HRC), software-based automatic safety controllers (ASCs) are used in various forms (e.g. shutdown mechanisms, emergency brakes, interlocks) to improve operational safety. Complex robotic tasks and increasingly close human-robot interaction pose new challenges to ASC developers and certification authorities. Key among these challenges is the need to assure the correctness of ASCs under reasonably weak assumptions. To address this need, we introduce and evaluate a tool-supported ASC synthesis method for HRC in manufacturing. Our ASC synthesis is: (i) informed by the manufacturing process, risk analysis, and regulations; (ii) formally verified against correctness criteria; and (iii) selected from a design space of feasible controllers according to a set of optimality criteria. The synthesised ASC can detect the occurrence of hazards, move the process into a safe state, and, in certain circumstances, return the process to an operational state from which it can resume its original task.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge