Jan Peleska

University of Bremen

A Stochastic Approach to Classification Error Estimates in Convolutional Neural Networks

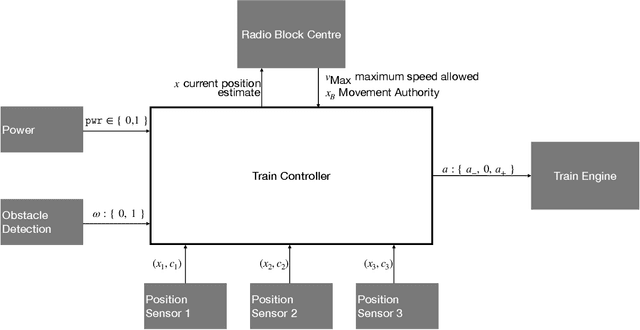

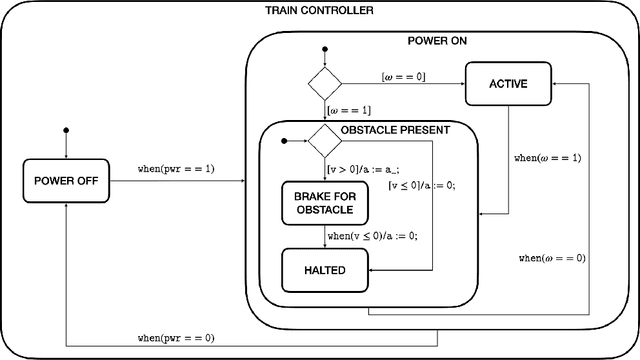

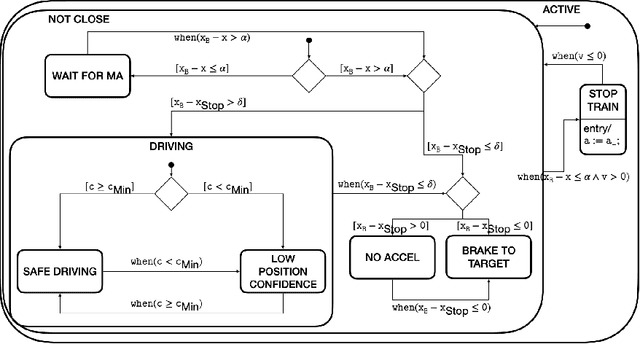

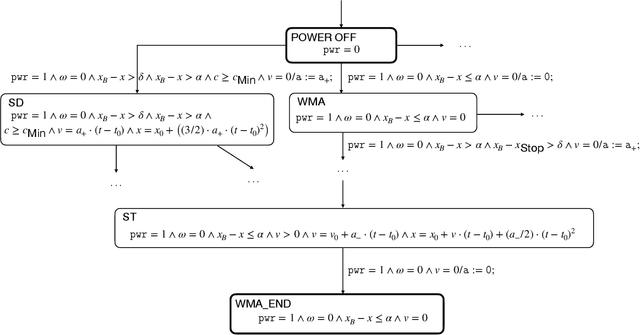

Dec 21, 2023Abstract:This technical report presents research results achieved in the field of verification of trained Convolutional Neural Network (CNN) used for image classification in safety-critical applications. As running example, we use the obstacle detection function needed in future autonomous freight trains with Grade of Automation (GoA) 4. It is shown that systems like GoA 4 freight trains are indeed certifiable today with new standards like ANSI/UL 4600 and ISO 21448 used in addition to the long-existing standards EN 50128 and EN 50129. Moreover, we present a quantitative analysis of the system-level hazard rate to be expected from an obstacle detection function. It is shown that using sensor/perceptor fusion, the fused detection system can meet the tolerable hazard rate deemed to be acceptable for the safety integrity level to be applied (SIL-3). A mathematical analysis of CNN models is performed which results in the identification of classification clusters and equivalence classes partitioning the image input space of the CNN. These clusters and classes are used to introduce a novel statistical testing method for determining the residual error probability of a trained CNN and an associated upper confidence limit. We argue that this greybox approach to CNN verification, taking into account the CNN model's internal structure, is essential for justifying that the statistical tests have covered the trained CNN with its neurons and inter-layer mappings in a comprehensive way.

Probabilistic Risk Assessment of an Obstacle Detection System for GoA 4 Freight Trains

Jun 26, 2023Abstract:In this paper, a quantitative risk assessment approach is discussed for the design of an obstacle detection function for low-speed freight trains with grade of automation (GoA)~4. In this 5-step approach, starting with single detection channels and ending with a three-out-of-three (3oo3) model constructed of three independent dual-channel modules and a voter, a probabilistic assessment is exemplified, using a combination of statistical methods and parametric stochastic model checking. It is illustrated that, under certain not unreasonable assumptions, the resulting hazard rate becomes acceptable for specific application settings. The statistical approach for assessing the residual risk of misclassifications in convolutional neural networks and conventional image processing software suggests that high confidence can be placed into the safety-critical obstacle detection function, even though its implementation involves realistic machine learning uncertainties.

Complete Test of Synthesised Safety Supervisors for Robots and Autonomous Systems

Oct 25, 2021

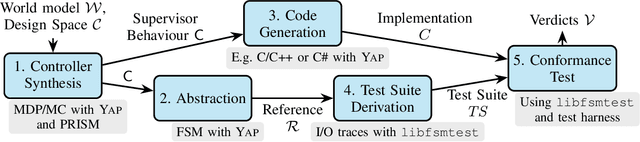

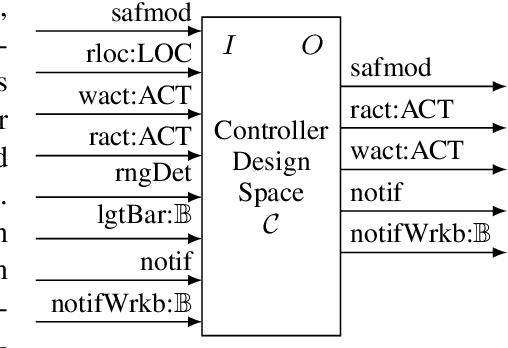

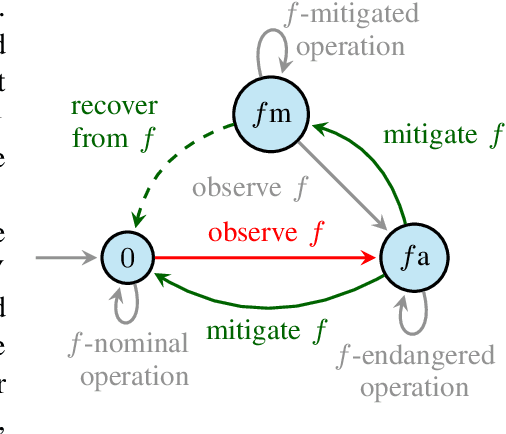

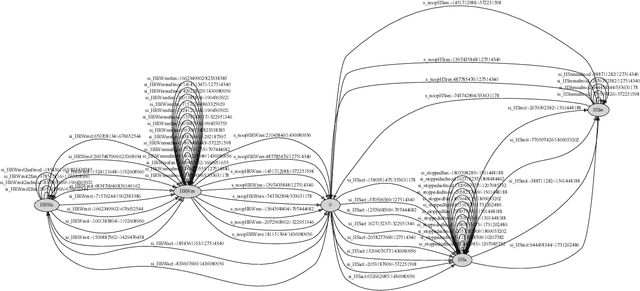

Abstract:Verified controller synthesis uses world models that comprise all potential behaviours of humans, robots, further equipment, and the controller to be synthesised. A world model enables quantitative risk assessment, for example, by stochastic model checking. Such a model describes a range of controller behaviours some of which -- when implemented correctly -- guarantee that the overall risk in the actual world is acceptable, provided that the stochastic assumptions have been made to the safe side. Synthesis then selects an acceptable-risk controller behaviour. However, because of crossing abstraction, formalism, and tool boundaries, verified synthesis for robots and autonomous systems has to be accompanied by rigorous testing. In general, standards and regulations for safety-critical systems require testing as a key element to obtain certification credit before entry into service. This work-in-progress paper presents an approach to the complete testing of synthesised supervisory controllers that enforce safety properties in domains such as human-robot collaboration and autonomous driving. Controller code is generated from the selected controller behaviour. The code generator, however, is hard, if not infeasible, to verify in a formal and comprehensive way. Instead, utilising testing, an abstract test reference is generated, a symbolic finite state machine with simpler semantics than code semantics. From this reference, a complete test suite is derived and applied to demonstrate the observational equivalence between the synthesised abstract test reference and the generated concrete controller code running on a control system platform.

* In Proceedings FMAS 2021, arXiv:2110.11527

Complete Agent-driven Model-based System Testing for Autonomous Systems

Oct 25, 2021

Abstract:In this position paper, a novel approach to testing complex autonomous transportation systems (ATS) in the automotive, avionic, and railway domains is described. It is intended to mitigate some of the most critical problems regarding verification and validation (V&V) effort for ATS. V&V is known to become infeasible for complex ATS, when using conventional methods only. The approach advocated here uses complete testing methods on the module level, because these establish formal proofs for the logical correctness of the software. Having established logical correctness, system-level tests are performed in simulated cloud environments and on the target system. To give evidence that 'sufficiently many' system tests have been performed with the target system, a formally justified coverage criterion is introduced. To optimise the execution of very large system test suites, we advocate an online testing approach where multiple tests are executed in parallel, and test steps are identified on-the-fly. The coordination and optimisation of these executions is achieved by an agent-based approach. Each aspect of the testing approach advocated here is shown to either be consistent with existing standards for development and V&V of safety-critical transportation systems, or it is justified why it should become acceptable in future revisions of the applicable standards.

* In Proceedings FMAS 2021, arXiv:2110.11527

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge