Angelo Ferrando

University of Modena and Reggio Emilia

Watchdogs and Oracles: Runtime Verification Meets Large Language Models for Autonomous Systems

Nov 18, 2025

Abstract:Assuring the safety and trustworthiness of autonomous systems is particularly difficult when learning-enabled components and open environments are involved. Formal methods provide strong guarantees but depend on complete models and static assumptions. Runtime verification (RV) complements them by monitoring executions at run time and, in its predictive variants, by anticipating potential violations. Large language models (LLMs), meanwhile, excel at translating natural language into formal artefacts and recognising patterns in data, yet they remain error-prone and lack formal guarantees. This vision paper argues for a symbiotic integration of RV and LLMs. RV can serve as a guardrail for LLM-driven autonomy, while LLMs can extend RV by assisting specification capture, supporting anticipatory reasoning, and helping to handle uncertainty. We outline how this mutual reinforcement differs from existing surveys and roadmaps, discuss challenges and certification implications, and identify future research directions towards dependable autonomy.

* In Proceedings FMAS 2025, arXiv:2511.13245

ROSMonitoring 2.0: Extending ROS Runtime Verification to Services and Ordered Topics

Nov 21, 2024Abstract:Formal verification of robotic applications presents challenges due to their hybrid nature and distributed architecture. This paper introduces ROSMonitoring 2.0, an extension of ROSMonitoring designed to facilitate the monitoring of both topics and services while considering the order in which messages are published and received. The framework has been enhanced to support these novel features for ROS1 -- and partially ROS2 environments -- offering improved real-time support, security, scalability, and interoperability. We discuss the modifications made to accommodate these advancements and present results obtained from a case study involving the runtime monitoring of specific components of a fire-fighting Uncrewed Aerial Vehicle (UAV).

* In Proceedings FMAS2024, arXiv:2411.13215

RV4Chatbot: Are Chatbots Allowed to Dream of Electric Sheep?

Nov 21, 2024

Abstract:Chatbots have become integral to various application domains, including those with safety-critical considerations. As a result, there is a pressing need for methods that ensure chatbots consistently adhere to expected, safe behaviours. In this paper, we introduce RV4Chatbot, a Runtime Verification framework designed to monitor deviations in chatbot behaviour. We formalise expected behaviours as interaction protocols between the user and the chatbot. We present the RV4Chatbot design and describe two implementations that instantiate it: RV4Rasa, for monitoring chatbots created with the Rasa framework, and RV4Dialogflow, for monitoring Dialogflow chatbots. Additionally, we detail experiments conducted in a factory automation scenario using both RV4Rasa and RV4Dialogflow.

* In Proceedings FMAS2024, arXiv:2411.13215

Open Challenges in the Formal Verification of Autonomous Driving

Nov 21, 2024Abstract:In the realm of autonomous driving, the development and integration of highly complex and heterogeneous systems are standard practice. Modern vehicles are not monolithic systems; instead, they are composed of diverse hardware components, each running its own software systems. An autonomous vehicle comprises numerous independent components, often developed by different and potentially competing companies. This diversity poses significant challenges for the certification process, as it necessitates certifying components that may not disclose their internal behaviour (black-boxes). In this paper, we present a real-world case study of an autonomous driving system, identify key open challenges associated with its development and integration, and explore how formal verification techniques can address these challenges to ensure system reliability and safety.

* In Proceedings FMAS2024, arXiv:2411.13215

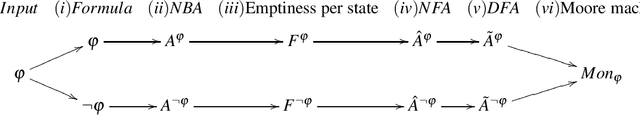

3vLTL: A Tool to Generate Automata for Three-valued LTL

Nov 16, 2023

Abstract:Multi-valued logics have a long tradition in the literature on system verification, including run-time verification. However, comparatively fewer model-checking tools have been developed for multi-valued specification languages. We present 3vLTL, a tool to generate Buchi automata from formulas in Linear-time Temporal Logic (LTL) interpreted on a three-valued semantics. Given an LTL formula, a set of atomic propositions as the alphabet for the automaton, and a truth value, our procedure generates a Buchi automaton that accepts all the words that assign the chosen truth value to the LTL formula. Given the particular type of the output of the tool, it can also be seamlessly processed by third-party libraries in a natural way. That is, the Buchi automaton can then be used in the context of formal verification to check whether an LTL formula is true, false, or undefined on a given model.

* In Proceedings FMAS 2023, arXiv:2311.08987

Runtime Verification for Trustworthy Computing

Oct 03, 2023Abstract:Autonomous and robotic systems are increasingly being trusted with sensitive activities with potentially serious consequences if that trust is broken. Runtime verification techniques present a natural source of inspiration for monitoring and enforcing the desirable properties of the communication protocols in place, providing a formal basis and ways to limit intrusiveness. A recently proposed approach, RV-TEE, shows how runtime verification can enhance the level of trust to the Rich Execution Environment (REE), consequently adding a further layer of protection around the Trusted Execution Environment (TEE). By reflecting on the implication of deploying RV in the context of trustworthy computing, we propose practical solutions to two threat models for the RV-TEE monitoring process: one where the adversary has gained access to the system without elevated privileges, and another where the adversary gains all privileges to the host system but fails to steal secrets from the TEE.

* In Proceedings AREA 2023, arXiv:2310.00333

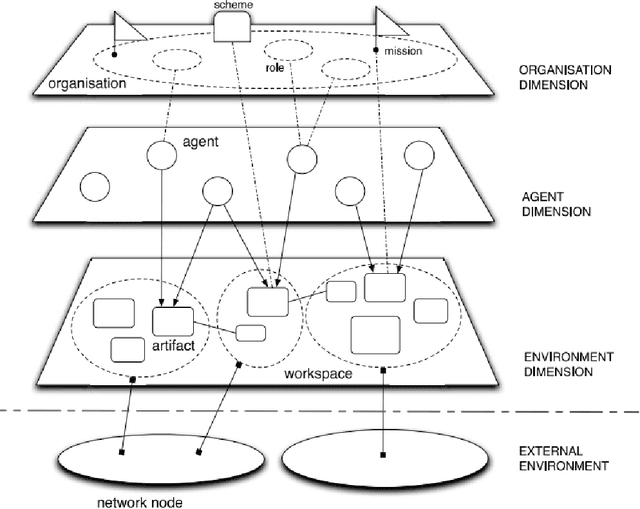

Proceedings of the Third Workshop on Agents and Robots for reliable Engineered Autonomy

Sep 30, 2023Abstract:The volume comprises the proceedings of the Third Workshop on Agents and Robots for reliable Engineered Autonomy (AREA 2023), held alongside the 26th European Conference on Artificial Intelligence (ECAI 2023). It explores the convergence of autonomous agents and robotics, emphasizing the practical application of agents in real-world scenarios with physical interactions. The workshop highlights the growing importance of enhanced autonomy and reliable behavior in robotic systems and the need for novel verification and validation methods. Its primary objective is to promote collaboration between researchers in these fields, aiming to address complex challenges in autonomous robotic systems. The volume includes 7 full papers and 5 short papers.

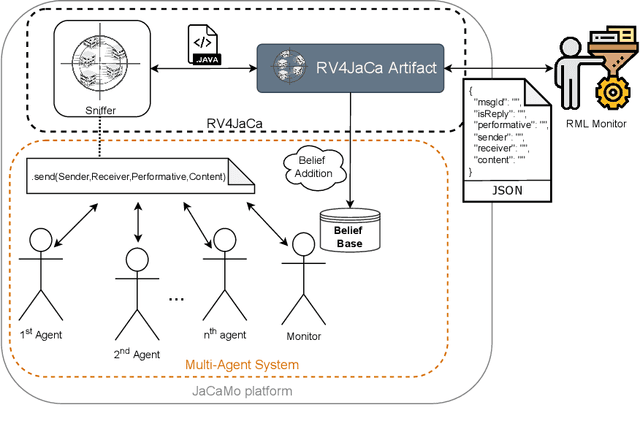

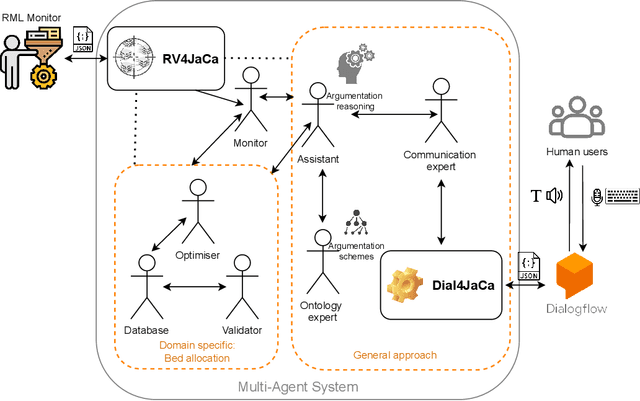

RV4JaCa -- Runtime Verification for Multi-Agent Systems

Jul 20, 2022

Abstract:This paper presents a Runtime Verification (RV) approach for Multi-Agent Systems (MAS) using the JaCaMo framework. Our objective is to bring a layer of security to the MAS. This layer is capable of controlling events during the execution of the system without needing a specific implementation in the behaviour of each agent to recognise the events. MAS have been used in the context of hybrid intelligence. This use requires communication between software agents and human beings. In some cases, communication takes place via natural language dialogues. However, this kind of communication brings us to a concern related to controlling the flow of dialogue so that agents can prevent any change in the topic of discussion that could impair their reasoning. We demonstrate the implementation of a monitor that aims to control this dialogue flow in a MAS that communicates with the user through natural language to aid decision-making in hospital bed allocation.

* In Proceedings AREA 2022, arXiv:2207.09058

Proceedings of the Second Workshop on Agents and Robots for reliable Engineered Autonomy

Jul 19, 2022Abstract:This volume contains the proceedings of the Second Workshop on Agents and Robots for reliable Engineered Autonomy (AREA 2022), co-located with the 31st International Joint Conference on Artificial Intelligence and the 25th European Conference on Artificial Intelligence (IJCAI-ECAI 2022). The AREA workshop brings together researchers from autonomous agents, software engineering and robotic communities, as combining knowledge coming from these research areas may lead to innovative approaches that solve complex problems related with the verification and validation of autonomous robotic systems.

Towards Partial Monitoring: It is Always too Soon to Give Up

Oct 25, 2021

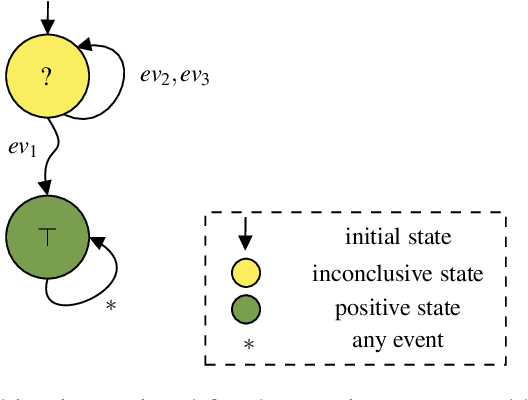

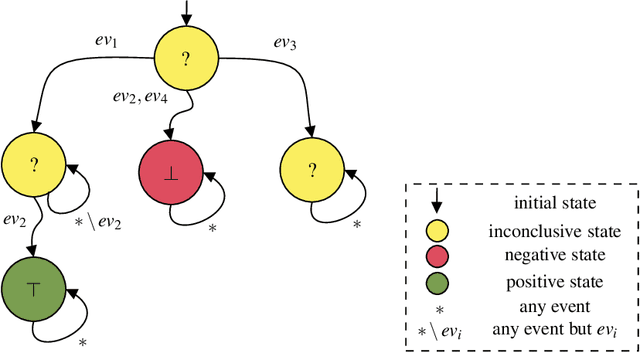

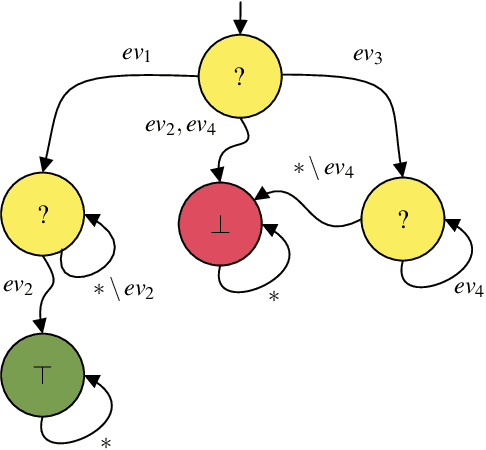

Abstract:Runtime Verification is a lightweight formal verification technique. It is used to verify at runtime whether the system under analysis behaves as expected. The expected behaviour is usually formally specified by means of properties, which are used to automatically synthesise monitors. A monitor is a device that, given a sequence of events representing a system execution, returns a verdict symbolising the satisfaction or violation of the formal property. Properties that can (resp. cannot) be verified at runtime by a monitor are called monitorable and non-monitorable, respectively. In this paper, we revise the notion of monitorability from a practical perspective, where we show how non-monitorable properties can still be used to generate partial monitors, which can partially check the properties. Finally, we present the implications both from a theoretical and practical perspectives.

* In Proceedings FMAS 2021, arXiv:2110.11527

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge