Mehrnoosh Askarpour

McMaster University

Proceedings of the Second Workshop on Agents and Robots for reliable Engineered Autonomy

Jul 19, 2022

Abstract: This volume contains the proceedings of the Second Workshop on Agents and Robots for reliable Engineered Autonomy (AREA 2022), co-located with the 31st International Joint Conference on Artificial Intelligence and the 25th European Conference on Artificial Intelligence (IJCAI-ECAI 2022). The AREA workshop brings together researchers from the autonomous agents, software engineering and robotics communities, since combining knowledge from these research areas may lead to innovative approaches to complex problems related to the verification and validation of autonomous robotic systems.

Is the Rush to Machine Learning Jeopardizing Safety? Results of a Survey

Nov 29, 2021

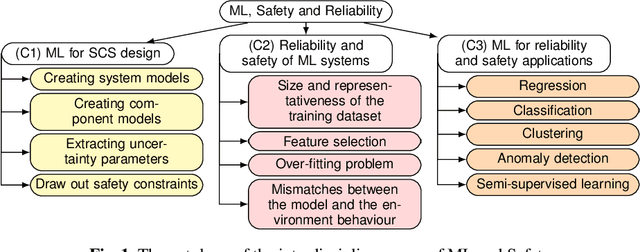

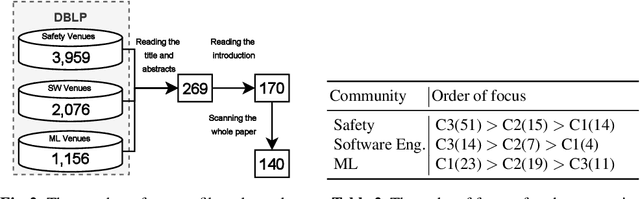

Abstract: Machine learning (ML) is finding its way into safety-critical systems (SCSs). Current safety standards and practices were not designed to cope with ML techniques, and it is difficult to be confident that SCSs containing ML components are safe. Our hypothesis was that there has been a rush to deploy ML techniques without a thorough examination of whether their use introduces safety problems that we are not yet able to adequately detect and mitigate. We therefore conducted a targeted literature survey comparing the research effort spent on applying ML to SCSs with the effort spent on evaluating the safety of SCSs that deploy ML components. This paper presents the (surprising) results of the survey.

How to Formally Model Human in Collaborative Robotics

Dec 03, 2020

Abstract: Human-robot collaboration (HRC) is an emerging trend in robotics that promotes the co-presence and cooperation of humans and robots in shared workspaces. The physical proximity and interaction between humans and robots, combined with the uncertainty of human behaviour, could lead to undesired situations in which humans are injured. Safety is therefore a priority for HRC applications. Safety analysis via formal modelling and verification techniques could help avoid dangerous consequences, but only if the models of HRC systems are comprehensive and realistic, which in turn requires reasonably realistic models of human behaviour. This paper explores state-of-the-art solutions for modelling humans and discusses which ones are suitable for HRC scenarios.

* In Proceedings FMAS 2020, arXiv:2012.01176

Statistical Model Checking of Human-Robot Interaction Scenarios

Jul 23, 2020

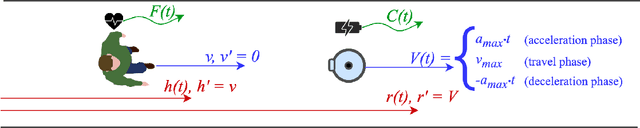

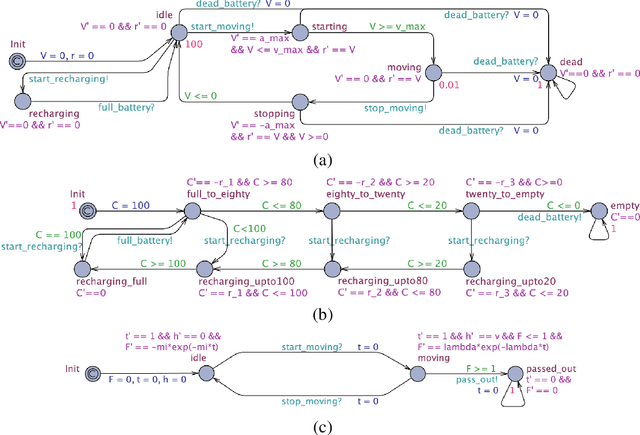

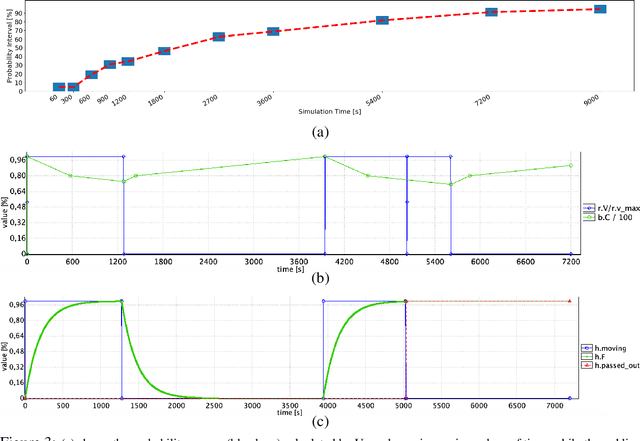

Abstract: Robots will soon be deployed in non-industrial environments. Before society can take such a step, complex robotic systems must be endowed with mechanisms that make them reliable enough to operate in situations where the human factor is predominant. This calls for robotic frameworks that can soundly guarantee that a collection of properties holds at all times during operation. When developing a mission plan, robots should take into account factors such as human physiology. In this paper, we present an example of how a robotic application involving human interaction can be modeled through hybrid automata and analyzed using statistical model checking. We exploit statistical techniques to determine the probability with which certain properties are verified, thus mitigating the state-space explosion problem. The analysis is performed with the Uppaal tool. In addition, we use Uppaal to run simulations that reveal non-trivial time dynamics describing the behavior of the real system, including human-related variables. Overall, this process allows developers to gain useful insights into their application and to decide how to improve it so as to balance efficiency and user satisfaction.

* In Proceedings AREA 2020, arXiv:2007.11260

Co-Simulation of Human-Robot Collaboration: from Temporal Logic to 3D Simulation

Jul 23, 2020

Abstract: Human-Robot Collaboration (HRC) is rapidly replacing the traditional application of robotics in the manufacturing industry. Robots and human operators no longer have to perform their tasks in segregated areas; they can work in close proximity and perform hybrid tasks, carried out partly by humans and partly by robots. In earlier work [16] we presented a methodology to promote and facilitate the formal modeling of HRC systems, which are notoriously safety-critical. Relying on temporal logic modeling capabilities and automated model checking tools, we built a framework to formally model HRC systems and verify the physical safety of the human operator against the ISO 10218-2 [10] standard. To make our formal verification framework more appealing to safety engineers, who are usually not very fond of formal modeling and verification techniques, we decided to couple our model checking approach with a 3D simulator that demonstrates potentially hazardous situations to safety engineers in a more transparent way. This paper reports our co-simulation approach, using the Morse simulator [4] and the Zot model checker [14].

* In Proceedings AREA 2020, arXiv:2007.11260